Overview

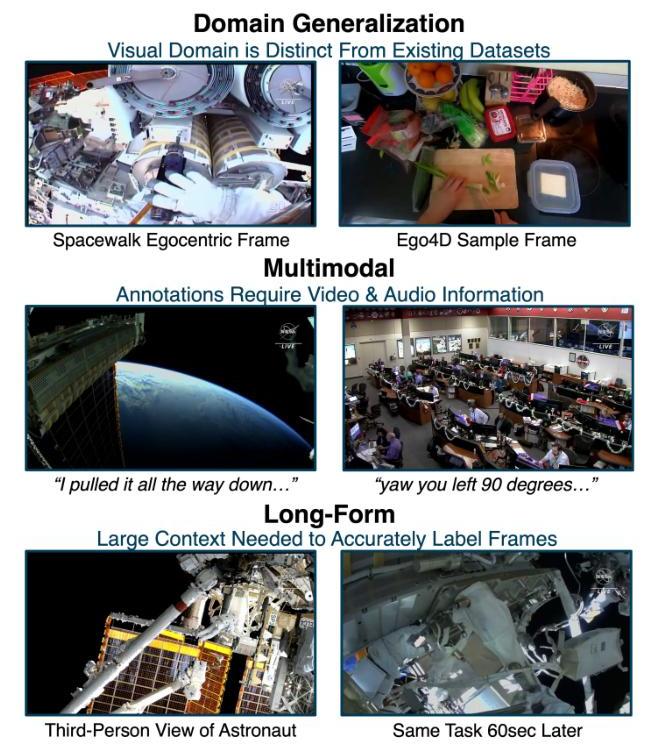

Spacewalk-18 is a benchmark dataset designed to evaluate multimodal, long-form, and procedural video understanding in novel domains. Its core domain is extravehicular activities (EVAs) conducted outside the International Space Station, a marked departure from typical household-oriented procedural video datasets. The benchmark tests how well video-language models generalize to visually distinct environments while processing complex temporal sequences that require both visual and auditory information.

Key Specifications

Dataset Scale:

- 18 spacewalk recordings spanning 96 hours of densely annotated video

- 4,000 temporal segments with step-level annotations

- 376 question-answering pairs for temporal reasoning evaluation

- Data split: 10 training videos, 2 validation videos, 6 test videos

- Coverage: Spacewalk missions from 2019 to 2023

Technical Characteristics:

- Temporal certificate (the minimum video duration a human needs to watch to verify an annotation): 140 seconds (1.4x longer than comparable datasets)

- Average video clip duration: 89 seconds

- Each video ranges from 7-10+ hours in length

- Multimodal annotations include visual content and speech transcripts

Tasks:

- Step Recognition: Classify procedural steps at given timestamps

- Question Answering: Answer multiple-choice questions about temporal events

- Intra-video Retrieval: Identify clips belonging to the same procedural step
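As a rough illustration of the intra-video retrieval task, the sketch below picks the candidate clip whose embedding is closest to a query clip by cosine similarity. The embedding vectors, the `most_similar_clip` helper, and the similarity-based decision rule are assumptions made for illustration; they are not the benchmark's official protocol or API.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def most_similar_clip(query: Sequence[float],
                      candidates: Sequence[Sequence[float]]) -> int:
    """Return the index of the candidate clip most similar to the query clip.

    Hypothetical decision rule: the closest candidate is assumed to belong
    to the same procedural step as the query.
    """
    if not candidates:
        raise ValueError("at least one candidate clip is required")
    scores = [cosine_similarity(query, c) for c in candidates]
    return max(range(len(scores)), key=scores.__getitem__)
```

Any clip encoder could supply the vectors; the sketch only covers the final comparison step.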

Data Examples

Step Recognition Example

Input: Video segment from the November 15, 2022 spacewalk (US spacewalk 81)

- Context window: 5 minutes centered on the query timestamp

- Visual frames showing astronaut activity

- Speech transcript: "US spacewalk eighty one will be conducted by EV one, Josh Cassada, and EV two, Frank Rubio. EV one exits the airlock and gets handed one of the bags in preparation for the EVA..."

- Step options: 31 predefined procedural steps plus "Irrelevant"

Target Answer: Step 1 - "EV1 and EV2 exit airlock"
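The input described above can be sketched as a window of video centered on the query timestamp, paired with a fixed label set. This is a minimal sketch under stated assumptions: the `centered_window` helper, the clamping behavior at the video boundaries, and the placeholder label names are hypothetical, and the count of 31 steps is taken from this one example rather than from every video.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ContextWindow:
    start_s: float  # window start, in seconds from the beginning of the video
    end_s: float    # window end, in seconds from the beginning of the video


def centered_window(timestamp_s: float,
                    video_length_s: float,
                    window_s: float = 300.0) -> ContextWindow:
    """Build a window of `window_s` seconds centered on `timestamp_s`.

    Assumption: the window is clamped (shortened) at the video boundaries
    rather than shifted to keep its full length.
    """
    if not 0.0 <= timestamp_s <= video_length_s:
        raise ValueError("timestamp must lie inside the video")
    half = window_s / 2.0
    return ContextWindow(start_s=max(0.0, timestamp_s - half),
                         end_s=min(video_length_s, timestamp_s + half))


# Label space for the example above: 31 procedural steps plus "Irrelevant".
# The "Step i" names are placeholders; the real labels are step descriptions.
NUM_STEPS = 31
LABELS = [f"Step {i}" for i in range(1, NUM_STEPS + 1)] + ["Irrelevant"]
```

With the default 5-minute window, a query at 1,000 seconds covers 850 to 1,150 seconds, while a query at 60 seconds is clamped to start at 0.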

Question Answering Example

Input: Hour-long video segment

- Visual frames at 0, 6, 18, 42, and 60 minutes

- Question: "Which of the following tasks happens after the astronaut leaves from the robotic arm?"

- Options: (A) Robotic arm takes Luca to AMS, (B) Luca & Drew connect power and data cables, (C) Drew move to ELC 2, (D) Drew hands Luca the pump system

Target Answer: (B) Luca & Drew connect power and data cables
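A multiple-choice item like the one above can be represented and scored as follows. This is a hedged sketch: the `MultipleChoiceQuestion` class and the helper functions are illustrative names, not part of any released Spacewalk-18 code. The chance baseline is simply the mean of 1 / (number of options), which is 25% for four-option questions.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class MultipleChoiceQuestion:
    question: str
    options: Sequence[str]
    answer_index: int  # zero-based index of the correct option


def chance_accuracy(questions: Sequence[MultipleChoiceQuestion]) -> float:
    """Expected accuracy of uniform random guessing."""
    if not questions:
        raise ValueError("no questions given")
    return sum(1.0 / len(q.options) for q in questions) / len(questions)


def accuracy(questions: Sequence[MultipleChoiceQuestion],
             predicted_indices: Sequence[int]) -> float:
    """Fraction of questions whose predicted option index is correct."""
    if not questions or len(questions) != len(predicted_indices):
        raise ValueError("need one prediction per question")
    correct = sum(q.answer_index == p
                  for q, p in zip(questions, predicted_indices))
    return correct / len(questions)


# The example question from the text, with (B) as the correct option.
EXAMPLE = MultipleChoiceQuestion(
    question=("Which of the following tasks happens after the astronaut "
              "leaves from the robotic arm?"),
    options=("Robotic arm takes Luca to AMS",
             "Luca & Drew connect power and data cables",
             "Drew move to ELC 2",
             "Drew hands Luca the pump system"),
    answer_index=1,
)
```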

Significance

Spacewalk-18 addresses critical limitations in current procedural video understanding benchmarks by introducing true domain generalization challenges. The benchmark reveals significant performance gaps between current state-of-the-art models and human capabilities:

Performance Gaps:

- Human accuracy on step recognition: 67.0%

- Best model accuracy: 28.49% (Caption-enhanced LLM)

- Question-answering accuracy barely exceeds random chance (32.45% vs. the 25% expected from guessing among four options)

Key Findings:

- Models struggle with extreme domain shifts despite pre-training on large datasets

- Fine-tuning provides only marginal improvements

- "Adaptation via summarization" (providing step summaries as context) significantly boosts performance

- Long-form Feature Bank methods outperform naive frame sampling for temporal understanding

- Multimodal fusion remains challenging, with some models performing better on text-only inputs

With a temporal certificate of 140 seconds, Spacewalk-18 has the longest temporal certificate among the datasets it is compared against. Its results also indicate that current models cannot effectively leverage very long temporal contexts without specialized architectures.

Usage

Access: The benchmark is publicly available at https://brown-palm.github.io/Spacewalk-18/

Evaluation Metrics:

- Accuracy: Percentage of correct step/answer predictions

- mAP: Mean Average Precision for confidence-based methods

- IoU: Intersection over Union for temporal segmentation quality
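The IoU and mAP metrics listed above can be sketched in a few lines. The functions below use common textbook definitions (interval IoU between two segments, and average precision over a confidence-ranked list, averaged across classes); the exact formulation used by the benchmark's evaluation code may differ, so treat the function names and input layout as assumptions.

```python
from typing import Dict, Sequence, Tuple

Segment = Tuple[float, float]  # (start_s, end_s)


def temporal_iou(pred: Segment, truth: Segment) -> float:
    """Intersection over Union of two time intervals."""
    intersection = max(0.0, min(pred[1], truth[1]) - max(pred[0], truth[0]))
    union = (pred[1] - pred[0]) + (truth[1] - truth[0]) - intersection
    return intersection / union if union > 0 else 0.0


def average_precision(scores: Sequence[float],
                      is_positive: Sequence[bool]) -> float:
    """Average precision for one class from confidence scores.

    Items are ranked by descending score; precision is averaged over
    the ranks at which a positive item appears.
    """
    ranked = sorted(zip(scores, is_positive), key=lambda p: p[0], reverse=True)
    hits = 0
    precision_sum = 0.0
    for rank, (_, positive) in enumerate(ranked, start=1):
        if positive:
            hits += 1
            precision_sum += hits / rank
    return precision_sum / hits if hits else 0.0


def mean_average_precision(
        per_class: Dict[str, Tuple[Sequence[float], Sequence[bool]]]) -> float:
    """Mean of per-class average precision values."""
    aps = [average_precision(s, p) for s, p in per_class.values()]
    return sum(aps) / len(aps) if aps else 0.0
```

For instance, a predicted segment of 0 to 10 seconds against a ground-truth segment of 5 to 15 seconds overlaps for 5 seconds out of a 15-second union, giving an IoU of 1/3.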

The benchmark supports zero-shot evaluation, last-layer fine-tuning, and full model fine-tuning scenarios, making it suitable for testing both domain adaptation capabilities and architectural innovations in long-form video understanding.