Overview

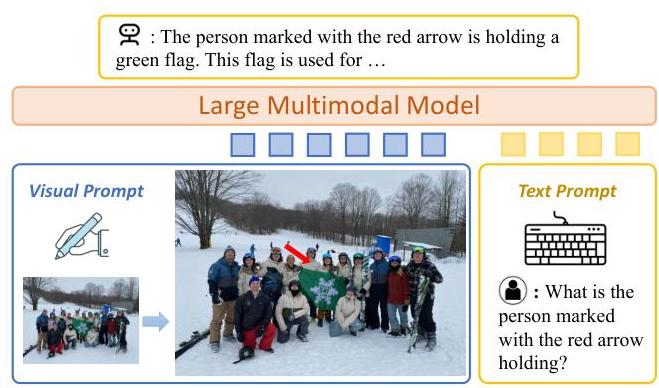

ViP-Bench is a comprehensive benchmark for evaluating large multimodal models' ability to understand arbitrary visual prompts in images. The benchmark tests region-specific comprehension across six key dimensions: recognition, OCR (optical character recognition), knowledge, math, relationship reasoning, and language generation. Unlike existing benchmarks that rely on textual coordinates or fixed geometric shapes, ViP-Bench evaluates models' understanding of diverse visual cues such as arrows, circles, scribbles, masks, and free-form drawings directly overlaid onto images.

Key Specifications

ViP-Bench contains 303 unique image-question pairs drawn from the MM-Vet, MMBench, and Visual Genome datasets. Each pair is provided with two kinds of visual prompt, so that models can be tested at different levels of prompt complexity: synthesized prompts (tight bounding boxes) and human-drawn prompts (arrows, circles, scribbles, etc.).

Evaluation Dimensions:

- Recognition (Rec): 240 examples requiring object identification within prompted regions

- OCR: 89 examples testing text extraction from marked areas

- Knowledge (Know): 59 examples requiring world knowledge about prompted regions

- Math: 31 examples involving mathematical operations on visual information

- Relationship (Rel): 28 examples testing understanding between multiple prompted objects

- Language Generation (Lang): 16 examples requiring descriptive text generation
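
The per-dimension counts above add up to more than the 303 unique pairs, which implies that a single example can count toward several dimensions. The short tally below makes that arithmetic explicit. It is only an illustrative sketch: the dictionary keys reuse the abbreviations from the list, and the variable names are hypothetical rather than part of any released ViP-Bench tooling.

```python
# Per-dimension example counts, copied from the list above.
dimension_counts = {
    "Rec": 240,
    "OCR": 89,
    "Know": 59,
    "Math": 31,
    "Rel": 28,
    "Lang": 16,
}

TOTAL_EXAMPLES = 303  # unique image-question pairs in the benchmark

# Total number of (example, dimension) labels across the benchmark.
total_labels = sum(dimension_counts.values())

# Average number of dimensions attached to one example.
avg_labels_per_example = total_labels / TOTAL_EXAMPLES

# Fraction of the 303 examples that exercise each dimension.
coverage = {name: count / TOTAL_EXAMPLES for name, count in dimension_counts.items()}

for name, share in coverage.items():
    print(f"{name:>4}: {dimension_counts[name]:3d} examples ({share:.1%} of the benchmark)")
print(f"labels in total: {total_labels}, per example on average: {avg_labels_per_example:.2f}")
```

Because the dimensions overlap, a per-dimension score is an average over a subset of examples, and those subsets are not disjoint.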

The benchmark uses GPT-4 as an automated judge: it compares each free-form response against the ground-truth annotation and assigns a score from 0 to 1 in increments of 0.1.
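
One way such judge scores could be rolled up into an overall percentage and per-dimension percentages is sketched below. This is not the official evaluation script. The record layout (a `dims` list and a `score` field) and the `aggregate` and `is_valid_score` helpers are assumptions made for illustration, and the three sample records are invented values, not benchmark results.

```python
from collections import defaultdict


def is_valid_score(score):
    """Check that a judge score lies in [0, 1] on a 0.1 grid."""
    tenths = score * 10
    return 0.0 <= score <= 1.0 and abs(tenths - round(tenths)) < 1e-9


def aggregate(records):
    """Return (overall, per_dimension) mean scores as percentages.

    Each record is a dict with hypothetical keys:
      "dims":  list of dimension tags, e.g. ["Rec", "OCR"]
      "score": judge score between 0 and 1 in steps of 0.1
    """
    if not records:
        raise ValueError("no records to aggregate")

    all_scores = []
    by_dim = defaultdict(list)
    for rec in records:
        score = float(rec["score"])
        if not is_valid_score(score):
            raise ValueError(f"score {score!r} is not on the 0-1 scale with 0.1 steps")
        all_scores.append(score)
        # An example contributes to every dimension it is tagged with.
        for dim in rec["dims"]:
            by_dim[dim].append(score)

    overall = 100 * sum(all_scores) / len(all_scores)
    per_dimension = {dim: 100 * sum(vals) / len(vals) for dim, vals in by_dim.items()}
    return overall, per_dimension


# Invented sample records, for illustration only.
sample_records = [
    {"dims": ["Rec", "OCR"], "score": 1.0},
    {"dims": ["Rec"], "score": 0.5},
    {"dims": ["Math", "OCR"], "score": 0.0},
]

overall, per_dim = aggregate(sample_records)
print(f"overall: {overall:.1f}")
for dim, value in sorted(per_dim.items()):
    print(f"{dim}: {value:.1f}")
```

In this sketch the overall figure averages over examples rather than over dimensions, so a dimension with many examples does not get counted more than once per example.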

Data Examples

Example 1: Relationship Reasoning

Image: Group photo with three objects marked by red, blue, and green mask contours

Question: "Between Object 1: the object within the red mask contour, Object 2: the object within the blue mask contour, and Object 3: the object within the green mask contour, which one has something on top of it?"

Ground Truth: "Object 2"

Example 2: Recognition with Scribble

Image: Plate of food with yellow scribble marking

Question: "What does the step marked with the yellow scribble suggest?"

Ground Truth: "identify your audience"

Significance

ViP-Bench addresses a critical gap in multimodal evaluation by focusing on region-specific understanding with arbitrary visual prompts that mirror natural human interaction. Current large vision-language models primarily handle whole-image understanding but struggle with precise region-level comprehension driven by intuitive visual cues. The benchmark reveals significant limitations in existing models: even GPT-4V achieves an overall score of only 59.9% to 60.7%, which leaves substantial room for improvement.

The benchmark's multi-dimensional assessment provides granular insights into model capabilities, showing that current models particularly struggle with OCR, mathematical reasoning, and language generation tasks at the regional level. This makes ViP-Bench valuable for diagnosing specific weaknesses and guiding future research directions.

Usage

ViP-Bench is publicly available through the project website at https://vip-llava.github.io. The benchmark includes the complete dataset with images, visual prompts, questions, and human-validated ground truth answers. Researchers can use the provided GPT-4 evaluation framework to assess their models' region-level understanding capabilities across the six defined dimensions. The benchmark supports evaluation with both synthesized tight bounding boxes and human-drawn arbitrary visual prompts to test different levels of visual prompt complexity.