Overview

MEBench is a synthetic benchmark for evaluating mutual exclusivity (ME) bias in state-of-the-art Vision-Language Models (VLMs). Mutual exclusivity is a word-learning bias in which children assume that each object has only one label. When they hear a new word, they map it to an object they cannot yet name rather than to a familiar one, which lets them acquire new words rapidly. The benchmark assesses whether VLMs replicate this learning principle through three core tasks: object detection, novel label assignment, and spatial reasoning.

Key Specifications

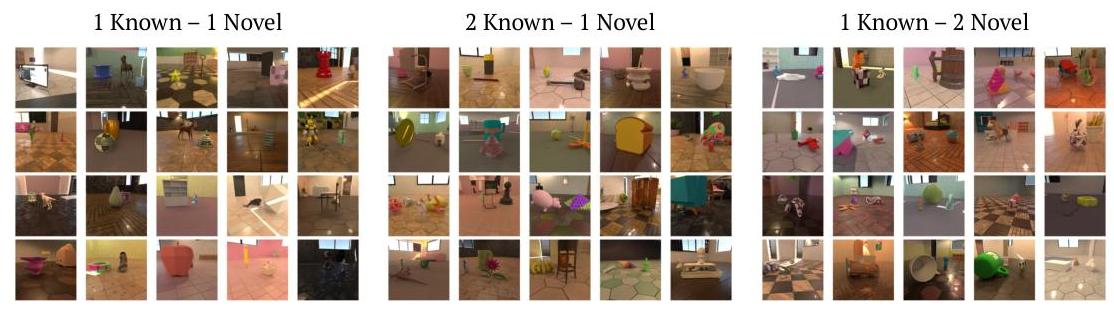

MEBench evaluates models across three experimental settings with varying complexity:

- 1K-1U: 1 known object, 1 novel object

- 2K-1U: 2 known objects, 1 novel object

- 1K-2U: 1 known object, 2 novel objects (with spatial disambiguation)
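The setting names encode the object counts directly (`K` for known objects, `U` for novel ones). As a minimal sketch, the following parses a name and computes two illustrative baselines introduced here for intuition only (they are not metrics MEBench reports): the chance of hitting the single queried object by picking uniformly among all objects, and the chance left after a model uses ME to rule out every known object. The `Setting` class and its methods are hypothetical.

```python
import re
from dataclasses import dataclass
from fractions import Fraction


@dataclass(frozen=True)
class Setting:
    """Hypothetical description of a setting name such as "1K-2U"."""

    known: int
    novel: int

    @classmethod
    def parse(cls, name: str) -> "Setting":
        match = re.fullmatch(r"(\d+)K-(\d+)U", name)
        if match is None:
            raise ValueError(f"unrecognized setting name: {name!r}")
        return cls(known=int(match.group(1)), novel=int(match.group(2)))

    def random_chance(self) -> Fraction:
        """Chance of hitting the one queried object by picking any object at random."""
        return Fraction(1, self.known + self.novel)

    def chance_after_me(self) -> Fraction:
        """Chance left once ME excludes all known objects (pick among novel ones)."""
        return Fraction(1, self.novel)


for name in ("1K-1U", "2K-1U", "1K-2U"):
    s = Setting.parse(name)
    print(name, s.random_chance(), s.chance_after_me())
# 1K-1U 1/2 1
# 2K-1U 1/3 1
# 1K-2U 1/3 1/2
```

In 1K-2U, ME alone still leaves a 1/2 chance between the two novel objects, which is why that setting relies on spatial language to disambiguate.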

The benchmark uses procedurally generated synthetic data comprising:

- Known objects: 4,179 instances from the Toys4K dataset, spanning 105 toy categories

- Novel objects: 64 abstract objects procedurally generated in Blender

- Scenes: Realistic room environments with natural lighting and backgrounds
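Procedural generation means each scene is a random draw from these asset pools. The sketch below is hypothetical and is not the repository's pipeline (which renders in Blender): it only samples the composition of each scene, drawing known objects from a Toys4K-style pool and novel objects from 64 asset ids, with defaults matching the 100 scenes and 25 viewpoints per scene used in each setting. Drawing known objects from distinct categories is an assumption made here so that no two known objects share a name; `SceneSpec` and `sample_scenes` are illustrative names.

```python
import random
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class SceneSpec:
    """Hypothetical scene composition; rendering is out of scope here."""

    scene_id: int
    known: Tuple[Tuple[str, int], ...]  # (category, instance id)
    novel: Tuple[int, ...]  # ids into the novel-object pool
    viewpoints: int


def sample_scenes(
    known_pool: Dict[str, List[int]],
    num_novel_assets: int,
    num_known: int,
    num_novel: int,
    num_scenes: int = 100,
    viewpoints: int = 25,
    seed: int = 0,
) -> List[SceneSpec]:
    rng = random.Random(seed)  # fixed seed keeps the draw reproducible
    scenes = []
    for scene_id in range(num_scenes):
        # Distinct categories, so no two known objects share a label (assumption).
        categories = rng.sample(sorted(known_pool), num_known)
        known = tuple((c, rng.choice(known_pool[c])) for c in categories)
        novel = tuple(rng.sample(range(num_novel_assets), num_novel))
        scenes.append(SceneSpec(scene_id, known, novel, viewpoints))
    return scenes


# Toy pool standing in for Toys4K (the real set has 4,179 instances in 105 categories).
toy_pool = {"cat": [0, 1], "fan": [2, 3], "car": [4]}
scenes = sample_scenes(toy_pool, num_novel_assets=64, num_known=2, num_novel=1)
print(sum(s.viewpoints for s in scenes))  # 2500 viewpoint renders for a 2K-1U-style setting
```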

Each experimental setting contains 100 scenes, each rendered from 25 viewpoints. Models are evaluated with standard AP@0.5 (average precision at an intersection-over-union threshold of 0.5) for object localization, along with newly proposed metrics including the Normalized ME Score, the Spatial Reasoning Score, and the Ambiguity Score.
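AP@0.5 treats a predicted box as a true positive when its intersection over union (IoU) with a not-yet-matched ground-truth box is at least 0.5. Below is a minimal sketch of one common single-class variant (greedy matching in confidence order, all-point interpolated precision), assuming boxes are `(x_min, y_min, x_max, y_max)` pixel coordinates. MEBench's own evaluation code may compute AP differently, and the function names here are hypothetical.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(
    detections: Sequence[Tuple[int, float, Box]],  # (image_id, confidence, box)
    ground_truth: Sequence[Tuple[int, Box]],  # (image_id, box)
    iou_threshold: float = 0.5,
) -> float:
    if not ground_truth:
        return 0.0
    matched = [False] * len(ground_truth)
    hits: List[int] = []
    # Visit detections from most to least confident.
    for image_id, _score, box in sorted(detections, key=lambda d: d[1], reverse=True):
        best_iou, best_idx = 0.0, -1
        for idx, (gt_image, gt_box) in enumerate(ground_truth):
            if gt_image != image_id or matched[idx]:
                continue  # only unmatched boxes in the same image are candidates
            overlap = iou(box, gt_box)
            if overlap > best_iou:
                best_iou, best_idx = overlap, idx
        if best_idx >= 0 and best_iou >= iou_threshold:
            matched[best_idx] = True
            hits.append(1)
        else:
            hits.append(0)  # false positive: no match or IoU below threshold
    precisions, recalls, cum_tp = [], [], 0
    for rank, hit in enumerate(hits, start=1):
        cum_tp += hit
        precisions.append(cum_tp / rank)
        recalls.append(cum_tp / len(ground_truth))
    # Interpolate: precision at each rank becomes the max precision at any later rank.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Area under the interpolated precision-recall curve.
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap
```

For example, a confident false positive ranked above the only correct box yields an AP of 0.5, since recall reaches 1.0 only at precision 0.5.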

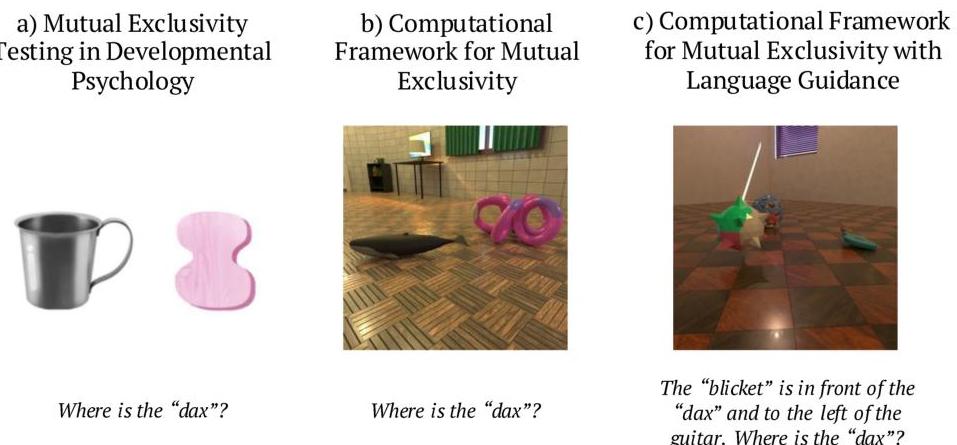

Figure 1: MEBench framework showing the progression from developmental psychology testing to computational implementation with language guidance for spatial disambiguation.

Data Examples

Example 1: Novel Label Assignment (1K-1U Setting)

- Input: Image containing a known cat and a novel abstract object

- Query: "Where is the dax?"

- Expected output: Bounding box around the novel object (not the cat)

- Evaluation: Tests whether the model applies mutual exclusivity by associating the novel label "dax" with the novel object rather than the known cat
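One way to make this check concrete is to ask which object the predicted box for "dax" actually lands on. The sketch below is hypothetical and is not the benchmark's Normalized ME Score, whose formula is not given here: it labels each 1K-1U response as pointing at the novel object, the known object, or neither (IoU below 0.5 with both), then reports the fraction of each outcome. Boxes are assumed to be `(x_min, y_min, x_max, y_max)`.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def classify_choice(pred: Box, known: Box, novel: Box, threshold: float = 0.5) -> str:
    """Decide which object a predicted box for the novel word refers to."""
    known_iou, novel_iou = iou(pred, known), iou(pred, novel)
    if max(known_iou, novel_iou) < threshold:
        return "neither"
    return "novel" if novel_iou >= known_iou else "known"


def choice_rates(trials: Iterable[Tuple[Box, Box, Box]]) -> Dict[str, float]:
    """Fraction of (pred, known, novel) trials landing on each outcome."""
    counts = Counter(classify_choice(p, k, n) for p, k, n in trials)
    total = sum(counts.values())
    return {
        label: (counts[label] / total if total else 0.0)
        for label in ("novel", "known", "neither")
    }


cat_box = (0, 0, 10, 10)
novel_box = (20, 0, 30, 10)
trials = [
    ((21, 0, 30, 10), cat_box, novel_box),  # ME-consistent: box on the novel object
    ((0, 0, 10, 10), cat_box, novel_box),  # ME violation: "dax" assigned to the cat
    ((50, 50, 60, 60), cat_box, novel_box),  # localization failure
]
print(choice_rates(trials))
```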

Example 2: Spatial Reasoning (1K-2U Setting)

- Input: Image with a known fan and two novel objects

- Query: "The fan is in front of the blicket and to the left of the toma. Where is the toma?"

- Expected output: Bounding box around the correct novel object based on spatial relationships

- Evaluation: Tests spatial disambiguation when multiple novel objects are present
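The query in Example 2 fixes the labels through relations: "in front of the blicket" and "to the left of the toma" each constrain where one novel object sits relative to the fan. The sketch below shows that logic symbolically by trying every assignment of labels to novel objects, assuming known 2D positions `(x, depth)` as seen from the camera (x grows rightward, depth grows away from the camera). A VLM has to recover these relations from pixels, so this only illustrates why the query has a unique answer; the function name, relation keys, and coordinates are hypothetical.

```python
from itertools import permutations
from typing import Callable, Dict, List, Optional, Tuple

Position = Tuple[float, float]  # (x, depth), viewed from the camera

RELATIONS: Dict[str, Callable[[Position, Position], bool]] = {
    "left_of": lambda a, b: a[0] < b[0],
    "right_of": lambda a, b: a[0] > b[0],
    "in_front_of": lambda a, b: a[1] < b[1],  # smaller depth = closer to camera
    "behind": lambda a, b: a[1] > b[1],
}


def resolve_labels(
    known: Dict[str, Position],
    novel_objects: Dict[str, Position],
    novel_labels: List[str],
    facts: List[Tuple[str, str, str]],  # (subject, relation, object)
) -> Optional[Dict[str, str]]:
    """Return the unique label -> object-id assignment satisfying all facts, else None."""
    solutions = []
    for ids in permutations(novel_objects, len(novel_labels)):
        assignment = dict(zip(novel_labels, ids))
        positions = dict(known)
        positions.update({label: novel_objects[obj] for label, obj in assignment.items()})
        if all(RELATIONS[rel](positions[a], positions[b]) for a, rel, b in facts):
            solutions.append(assignment)
    return solutions[0] if len(solutions) == 1 else None


known = {"fan": (0.0, 1.0)}
novel = {"obj_a": (-1.0, 3.0), "obj_b": (2.0, 0.5)}
facts = [("fan", "in_front_of", "blicket"), ("fan", "left_of", "toma")]
answer = resolve_labels(known, novel, ["blicket", "toma"], facts)
print(answer["toma"])  # obj_b
```

Returning `None` when several assignments fit makes ambiguous queries explicit rather than silently picking one.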

Figure 2: Examples of generated scenes across different experimental settings showing varying numbers of known and novel objects.

Significance

MEBench addresses a critical gap in evaluating cognitive biases in AI systems. Current VLMs show a weak mutual exclusivity bias: most models frequently assign novel labels to known objects instead of applying the exclusivity principle. None of the evaluated models combines strong object localization, ME bias, and spatial reasoning.

Key findings include:

- CogVLM demonstrates the strongest ME bias (0.472 in the 1K-1U setting) but struggles with spatial reasoning

- Most models show a negative ME bias in the more complex 2K-1U setting

- All models benefit from spatial context; Gemini shows a particularly large improvement in spatial reasoning (Spatial Reasoning Score of 11.167)

The benchmark bridges cognitive science and AI research by systematically evaluating human-like learning principles that are essential for zero-shot generalization in real-world applications.

Usage

MEBench is publicly available on GitHub at https://github.com/ngailapdi/MEBench. The repository includes:

- Generated evaluation data for all experimental settings

- Open-source data generation pipeline code

- Inference and evaluation code for all VLM baselines

- Complete experimental setup for reproducibility

The benchmark can be extended to explore additional factors influencing mutual exclusivity bias and supports controlled experimentation through its flexible synthetic data generation pipeline.