Overview

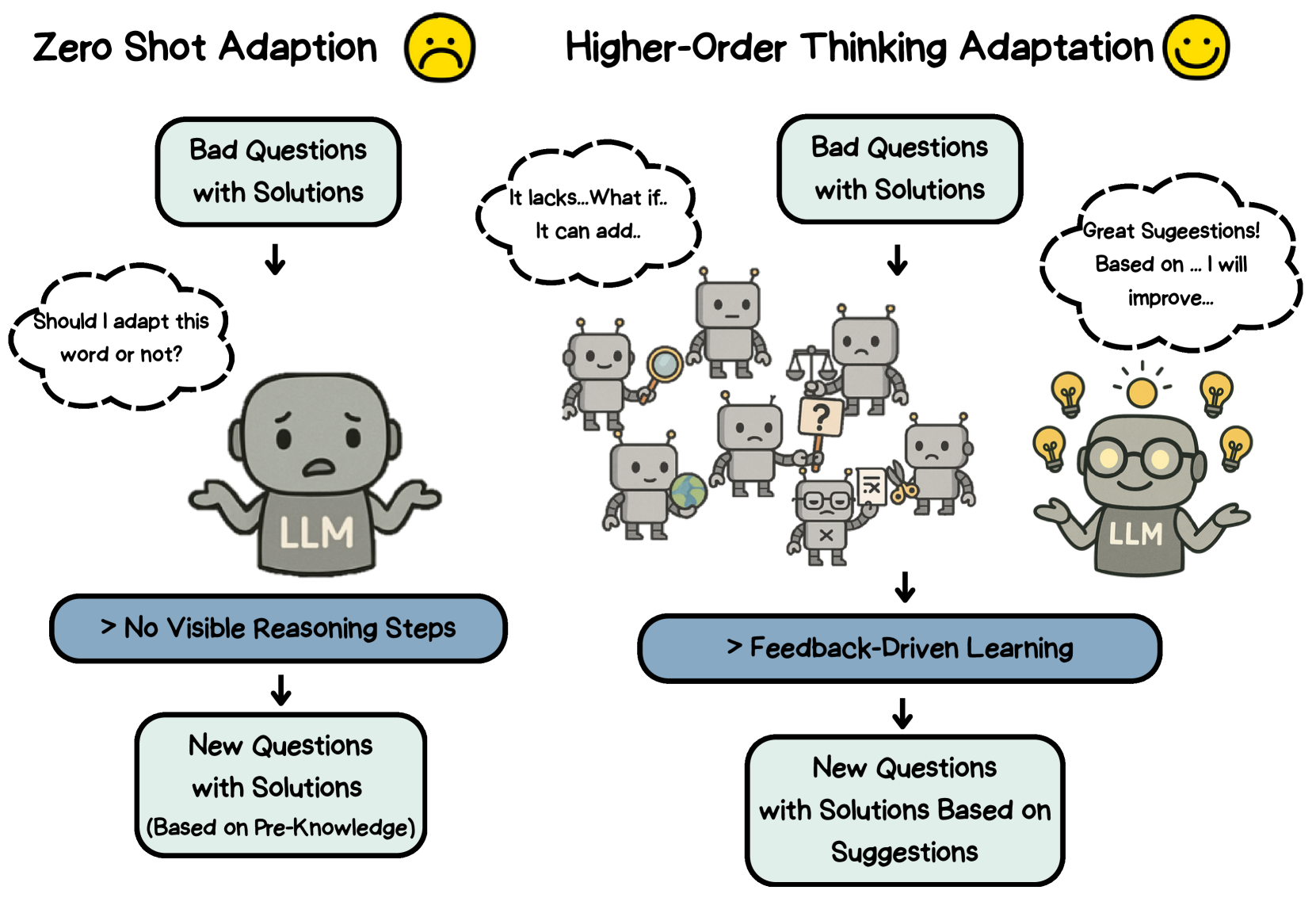

THiNK is a benchmark that evaluates large language models' higher-order thinking skills through iterative mathematical problem refinement. Unlike traditional accuracy-focused benchmarks, THiNK employs a multi-agent evaluation system grounded in Bloom's Taxonomy to assess cognitive processes like reasoning, analysis, and creative problem-solving. The benchmark uses a "think-aloud" protocol where models must iteratively improve flawed mathematical word problems based on structured feedback from specialized evaluation agents.
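The refinement protocol can be pictured as a loop: score the problem, stop if it passes, and otherwise hand the feedback back to the model and try again. The Python sketch below assumes hypothetical `evaluate` and `revise` callables that stand in for the agent panel and the model under test. The 85-point threshold is borrowed from τ in the metric definitions, and `max_rounds=5` is an arbitrary illustrative cap, not a documented benchmark setting.

```python
from typing import Callable, List, Tuple

# Hypothetical interfaces (not THiNK's actual API):
#   evaluate(problem) -> (quality score on an assumed 0-100 scale, feedback text)
#   revise(problem, feedback) -> revised problem written by the model under test
Evaluate = Callable[[str], Tuple[float, str]]
Revise = Callable[[str, str], str]


def refine(problem: str, evaluate: Evaluate, revise: Revise,
           threshold: float = 85.0, max_rounds: int = 5) -> List[float]:
    """Revise `problem` until its score exceeds `threshold` or rounds run out.

    Returns the score recorded at each round; index 0 is the original problem.
    """
    scores: List[float] = []
    for round_idx in range(max_rounds + 1):
        score, feedback = evaluate(problem)
        scores.append(score)
        if score > threshold or round_idx == max_rounds:
            break  # passed, or no revision budget left
        problem = revise(problem, feedback)
    return scores


# Toy stand-ins so the loop runs without any model or API.
def toy_evaluate(problem: str) -> Tuple[float, str]:
    return 70.0 + 10.0 * problem.count("!"), "state the assumptions explicitly"


def toy_revise(problem: str, feedback: str) -> str:
    return problem + "!"


print(refine("Q", toy_evaluate, toy_revise))  # [70.0, 80.0, 90.0]
```

Here the toy problem passes on its third evaluation, after two revisions.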

Key Specifications

The benchmark consists of 120 mathematical word problems in two categories: 20 poorly constructed problems collected manually from social media platforms and 100 synthetically generated flawed problems. The evaluation framework uses seven GPT-4o agents: six aligned with the levels of Bloom's Taxonomy (Remembering, Understanding, Applying, Analyzing, Evaluating, Creating) and one holistic agent that provides improvement suggestions.
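A minimal way to represent this setup in code, assuming one simple record per agent. The class name `EvaluatorAgent`, the `role` labels, the `"gpt-4o"` model string, and the split names are hypothetical and are not taken from the THiNK repository; only the counts come from the description above.

```python
from dataclasses import dataclass
from typing import Dict, List

# The six Bloom's Taxonomy levels, in the order the benchmark lists them.
BLOOM_LEVELS = ["Remembering", "Understanding", "Applying",
                "Analyzing", "Evaluating", "Creating"]


@dataclass(frozen=True)
class EvaluatorAgent:
    name: str
    role: str              # "bloom" (one level) or "holistic" (suggestions)
    model: str = "gpt-4o"  # hypothetical model identifier string


def build_agents() -> List[EvaluatorAgent]:
    """Six level-specific agents plus one holistic agent."""
    agents = [EvaluatorAgent(name=level, role="bloom") for level in BLOOM_LEVELS]
    agents.append(EvaluatorAgent(name="Holistic", role="holistic"))
    return agents


# Dataset composition as described (split names are hypothetical).
DATASET_SPLITS: Dict[str, int] = {"social_media": 20, "synthetic": 100}

agents = build_agents()
print(len(agents), sum(DATASET_SPLITS.values()))  # 7 120
```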

Evaluation Metrics:

- Pass Rate (PR): Proportion of agents rating a problem above the threshold (τ = 85)

- Agent Agreement (AA): Inter-agent consistency using Cohen's Kappa

- Average Confidence (AC): Mean confidence scores across agents

- Composite Quality Score: Q(p_i) = 0.5×PR + 0.3×AA + 0.2×AC

- RoundsToPass: Number of refinement iterations required to exceed the quality threshold

- AvgQualityScore: Mean quality across all refinement steps
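The metrics above can be sketched in Python as follows. Several details are assumptions rather than documented behaviour: AA is aggregated here as the mean pairwise Cohen's kappa over binary pass/fail labels; the summary does not say how PR (a proportion), AA (a kappa in [-1, 1]) and AC are brought to a common scale before weighting; and `rounds_to_pass` takes its threshold as a parameter because it is not stated whether τ = 85 also applies to Q.

```python
from itertools import combinations
from statistics import mean
from typing import List, Optional, Sequence

TAU = 85.0  # pass threshold tau from the metric definitions


def pass_rate(scores: Sequence[float], tau: float = TAU) -> float:
    """PR: fraction of agents whose score is above tau."""
    return sum(s > tau for s in scores) / len(scores)


def average_confidence(confidences: Sequence[float]) -> float:
    """AC: mean of the agents' confidence values."""
    return mean(confidences)


def cohen_kappa(a: Sequence[bool], b: Sequence[bool]) -> float:
    """Cohen's kappa for two raters' pass/fail labels on the same items."""
    n = len(a)
    p_obs = sum(x == y for x, y in zip(a, b)) / n
    pa, pb = sum(a) / n, sum(b) / n
    p_exp = pa * pb + (1 - pa) * (1 - pb)  # agreement expected by chance
    if p_exp == 1.0:
        return 1.0  # both raters gave the same constant label
    return (p_obs - p_exp) / (1 - p_exp)


def agent_agreement(labels: List[Sequence[bool]]) -> float:
    """AA (assumed aggregation): mean pairwise kappa over all agent pairs."""
    return mean(cohen_kappa(x, y) for x, y in combinations(labels, 2))


def composite_quality(pr: float, aa: float, ac: float) -> float:
    """Q(p_i) = 0.5*PR + 0.3*AA + 0.2*AC, inputs assumed on a common scale."""
    return 0.5 * pr + 0.3 * aa + 0.2 * ac


def rounds_to_pass(history: Sequence[float],
                   threshold: float = TAU) -> Optional[int]:
    """First round whose quality exceeds threshold (round 0 = original)."""
    return next((i for i, q in enumerate(history) if q > threshold), None)


def avg_quality_score(history: Sequence[float]) -> float:
    """AvgQualityScore: mean quality over all refinement steps."""
    return mean(history)


print(pass_rate([90, 80, 86, 70]))        # 0.5
print(rounds_to_pass([70.0, 80.0, 90.0]))  # 2
```

Counting round 0 as the original problem, `rounds_to_pass` returns the number of revisions needed; a benchmark-level figure such as 2.35 is presumably an average of this value over problems.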

Data Examples

Example 1: Orchestra Problem Refinement

Original Flawed Problem: Question: "An orchestra of 120 players takes 40 minutes to play Beethoven's 9th Symphony. How long would it take for 60 players to play the symphony?" Solution: Assumes inverse proportionality, suggesting 80 minutes for 60 players.

THiNK-Guided Revision: Question: "An orchestra of 120 musicians performs Beethoven's 9th Symphony in 40 minutes. Assuming equal contribution, how long would it take 60 musicians to complete the same symphony?" Solution: Since performance duration does not depend on the number of musicians (as long as all parts are covered), it would still take 40 minutes for 60 musicians.
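The arithmetic behind the flaw is easy to make explicit. This sketch contrasts the original solution's inverse-proportionality model (players × minutes held constant) with the revised reasoning; the function names and the `all_parts_covered` flag are illustrative only.

```python
def naive_duration(players_a: int, minutes_a: float, players_b: int) -> float:
    """Flawed model: treat the symphony as divisible work, so
    players * minutes stays constant (inverse proportionality)."""
    return players_a * minutes_a / players_b


def corrected_duration(minutes_a: float, all_parts_covered: bool = True) -> float:
    """Corrected model: the written music fixes the tempo, so duration does
    not depend on headcount as long as every part still has a player."""
    if not all_parts_covered:
        raise ValueError("duration is undefined if some parts have no player")
    return minutes_a


print(naive_duration(120, 40, 60))  # 80.0
print(corrected_duration(40))       # 40
```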

Example 2: Performance Results (GPT-4o)

- Remembering: 86.92 (↑ 26.92 improvement)

- Understanding: 82.96 (↑ 5.79 improvement)

- Applying: 76.71 (↓ 0.46 decline)

- Analyzing: 83.50 (↑ 4.21 improvement)

- Evaluating: 83.54 (↑ 2.92 improvement)

- Creating: 82.62 (↑ 4.21 improvement)

- Average Quality Score: 82.46%

- RoundsToPass: 2.35 iterations
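Reading each arrow as the change from that level's starting score (an assumption; the summary does not state the baseline), the listed numbers can be reorganized to show where GPT-4o started and where it ended weakest. The dictionary names are hypothetical; the values are copied from the list above.

```python
# Final GPT-4o scores per Bloom level and the reported changes
# (up-arrows as positive values, down-arrows as negative values).
FINAL = {"Remembering": 86.92, "Understanding": 82.96, "Applying": 76.71,
         "Analyzing": 83.50, "Evaluating": 83.54, "Creating": 82.62}
CHANGE = {"Remembering": 26.92, "Understanding": 5.79, "Applying": -0.46,
          "Analyzing": 4.21, "Evaluating": 2.92, "Creating": 4.21}

# Assumption: each change is measured from the level's starting score.
implied_start = {lvl: round(FINAL[lvl] - CHANGE[lvl], 2) for lvl in FINAL}

weakest = min(FINAL, key=FINAL.get)         # lowest final score
largest_gain = max(CHANGE, key=CHANGE.get)  # biggest improvement
declined = [lvl for lvl, d in CHANGE.items() if d < 0]

print(implied_start["Remembering"], weakest, largest_gain, declined)
# 60.0 Applying Remembering ['Applying']
```

Under this reading, Applying is both the lowest final score and the only level that declined, which matches the pattern discussed under Significance.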

Significance

THiNK addresses a critical gap in LLM evaluation by moving beyond surface-level accuracy to assess deeper cognitive processes. The benchmark reveals that while models excel at lower-order thinking tasks (Remembering, Understanding), they consistently struggle with knowledge application in realistic contexts. This finding has important implications for deploying LLMs in educational settings, where reasoning quality matters more than computational correctness.

The framework demonstrates that structured feedback significantly improves higher-order thinking skills, with models showing consistent improvements in analysis, evaluation, and creative problem generation. The systematic decline in "Applying" scores across all models highlights a fundamental limitation in current LLMs' ability to transfer abstract knowledge to concrete problem-solving contexts.

Usage

The THiNK framework is publicly available on GitHub at https://github.com/Michaelyya/THiNK. The multi-agent evaluation system requires access to GPT-4o, while the model being evaluated can be any language model. Experiments with open-source models were run on NVIDIA A6000 GPUs, and OpenAI API costs came to approximately $300 for the full evaluation across multiple models.