Overview

The "Missed Connections" benchmark evaluates large language models' ability to solve the New York Times Connections puzzle, which requires identifying four groups of four related words from a bank of sixteen words. The benchmark tests abstract reasoning, lateral thinking, and the ability to recognize subtle semantic and non-semantic relationships that go beyond simple word similarity.

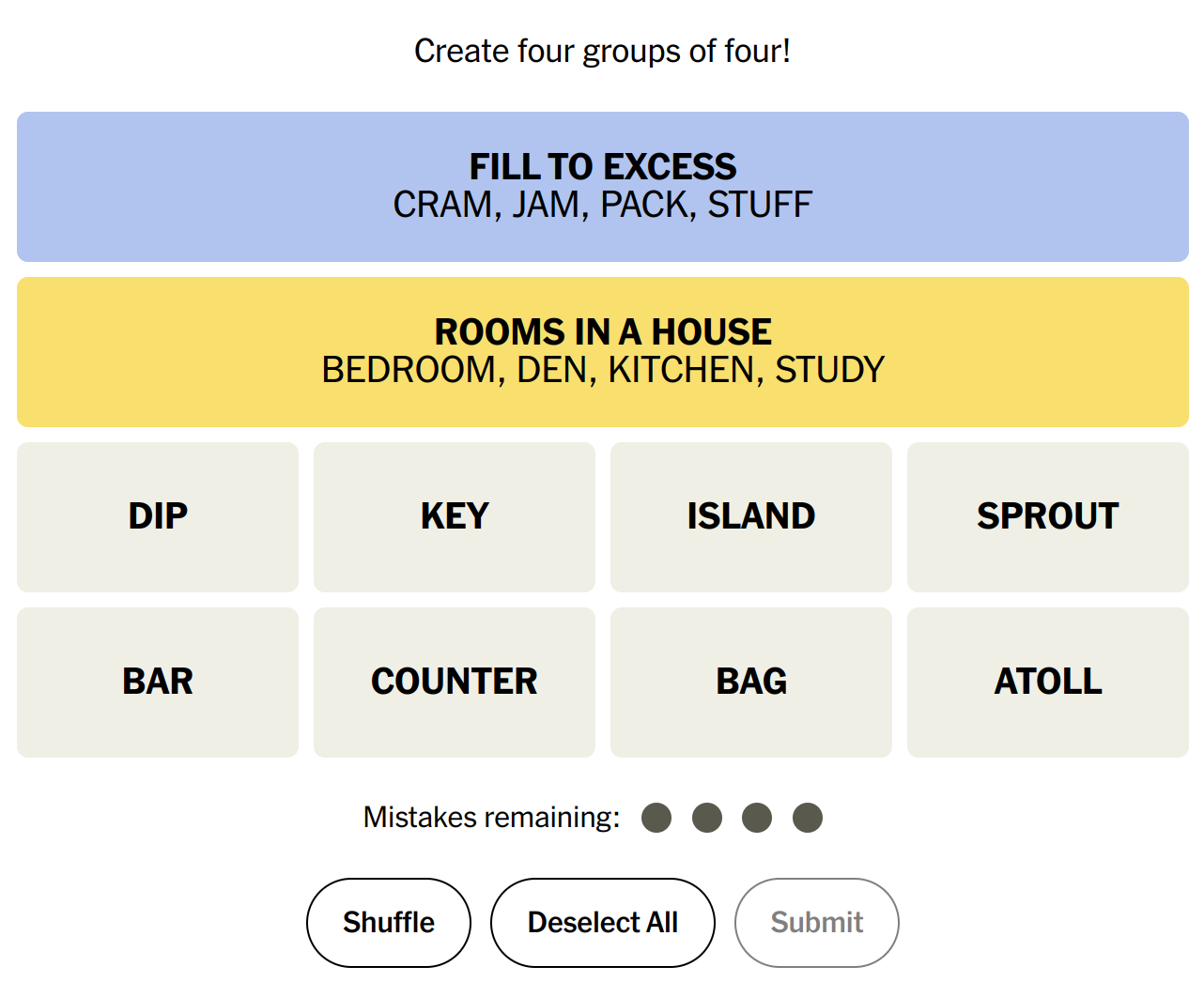

Figure 1: Example of the Connections puzzle interface showing solved categories (blue and yellow) and remaining words to be grouped

Key Specifications

The benchmark consists of 250 puzzles collected from June 2023 to February 2024, a window intended to keep these specific puzzles out of the models' training data. Each puzzle contains 16 words that must be grouped into four categories of increasing difficulty: yellow (easiest), green, blue, and purple (trickiest). The benchmark includes two variants:

- Standard Game: Iterative guessing with feedback (correct, nearly correct, or incorrect)

- Challenge Variant: All four groups must be submitted simultaneously with only pass/fail feedback

Models are allowed up to 4 incorrect guesses in the standard game before failure. Success rate is the primary metric, calculated as the proportion of puzzles solved correctly.
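The rules above can be sketched in a few lines of Python. This is a minimal illustration rather than the benchmark's own harness: the function names are hypothetical, "nearly correct" is assumed to mean three of the four guessed words share a group, and the game is assumed to end on the fourth incorrect guess.

```python
MAX_MISTAKES = 4  # assumption: the fourth incorrect guess ends the game


def feedback(guess, groups):
    """Classify one four-word guess against the unsolved groups."""
    guess = frozenset(guess)
    best_overlap = max(len(guess & frozenset(group)) for group in groups)
    if best_overlap == 4:
        return "correct"
    if best_overlap == 3:
        return "nearly correct"  # assumed to mean "one word away"
    return "incorrect"


def play_standard(groups, guesses, max_mistakes=MAX_MISTAKES):
    """Replay a sequence of guesses; True if all groups are found in time."""
    remaining = [frozenset(group) for group in groups]
    mistakes = 0
    for guess in guesses:
        if feedback(guess, remaining) == "correct":
            remaining.remove(frozenset(guess))
            if not remaining:
                return True
        else:
            mistakes += 1
            if mistakes >= max_mistakes:
                return False
    return False  # ran out of guesses before solving the puzzle


def check_challenge(groups, submission):
    """Challenge variant: all four groups at once, pass/fail only."""
    return {frozenset(g) for g in groups} == {frozenset(g) for g in submission}


def success_rate(outcomes):
    """Proportion of puzzles solved, given one boolean per puzzle."""
    return sum(outcomes) / len(outcomes)
```

For example, `success_rate([True, False, False, True])` returns `0.5`. Passing `max_mistakes` as a parameter makes it easy to switch to a different reading of the mistake limit.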

Data Examples

Example 1 - Simple Category:

- Words: Bass, Flounder, Salmon, Trout

- Category: FISH

- Difficulty: Yellow (straightforward semantic grouping)

Example 2 - Tricky Category:

- Words: Ant, Drill, Island, Opal

- Category: FIRE___ (completing phrases: fire ant, fire drill, fire island, fire opal)

- Difficulty: Purple (requires recognizing words as parts of compound phrases)

The puzzles often include distractors: words that appear to belong to one category but actually belong to another. These test a model's ability to prioritize the strongest connections.
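To make the distractor idea concrete, the sketch below flags words that fit more than one candidate category. The FISH group comes from Example 1; the second candidate category and the helper name `find_distractors` are invented for illustration and are not taken from any real puzzle.

```python
from collections import defaultdict


def find_distractors(plausible):
    """Return words that fit more than one candidate category."""
    fits = defaultdict(list)
    for category, words in plausible.items():
        for word in words:
            fits[word].append(category)
    return {word: cats for word, cats in fits.items() if len(cats) > 1}


# FISH is from Example 1; the INSTRUMENTS candidate is hypothetical.
plausible = {
    "FISH": ["BASS", "FLOUNDER", "SALMON", "TROUT"],
    "INSTRUMENTS (hypothetical)": ["BASS", "HARP", "ORGAN", "DRUM"],
}

print(find_distractors(plausible))
# {'BASS': ['FISH', 'INSTRUMENTS (hypothetical)']}
```

A solver that commits to the first category a word fits would place BASS without checking whether another group needs it more, which is the failure mode distractors are designed to provoke.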

Significance

This benchmark addresses a critical gap in evaluating abstract reasoning capabilities. Unlike traditional semantic similarity tasks, Connections requires models to:

- Identify non-semantic properties (e.g., words that read the same rotated 180°)

- Recognize contextual usage patterns (e.g., words that complete "___ paper")

- Handle highly abstract connections (e.g., "members of a septet")

- Manage distractors and competing hypotheses
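The first two properties in the list depend on spelling and usage rather than meaning, which is why word similarity alone tends to miss them. The sketch below shows how such checks might look. The glyph map is an assumption about how uppercase letters appear in a plain sans-serif font, and both function names are hypothetical.

```python
# Assumed mapping of uppercase letters to what they look like upside down.
ROTATED = {
    "H": "H", "I": "I", "N": "N", "O": "O", "S": "S",
    "X": "X", "Z": "Z", "M": "W", "W": "M",
}


def reads_same_rotated(word):
    """True if the word reads the same after a 180-degree rotation."""
    word = word.upper()
    if any(ch not in ROTATED for ch in word):
        return False
    # Rotating the page reverses letter order and flips each glyph.
    rotated = "".join(ROTATED[ch] for ch in reversed(word))
    return rotated == word


def completes_phrase(word, prefix, known_phrases):
    """True if prefix + word forms a phrase from a supplied phrase list."""
    return f"{prefix} {word}".lower() in known_phrases


# Phrases taken from Example 2 above.
FIRE_PHRASES = {"fire ant", "fire drill", "fire island", "fire opal"}

print(reads_same_rotated("NOON"), reads_same_rotated("SWIMS"))  # True True
print(completes_phrase("Opal", "fire", FIRE_PHRASES))           # True
```

Neither check uses word meaning at all, so a solver needs some way to consider such surface-level hypotheses alongside semantic ones.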

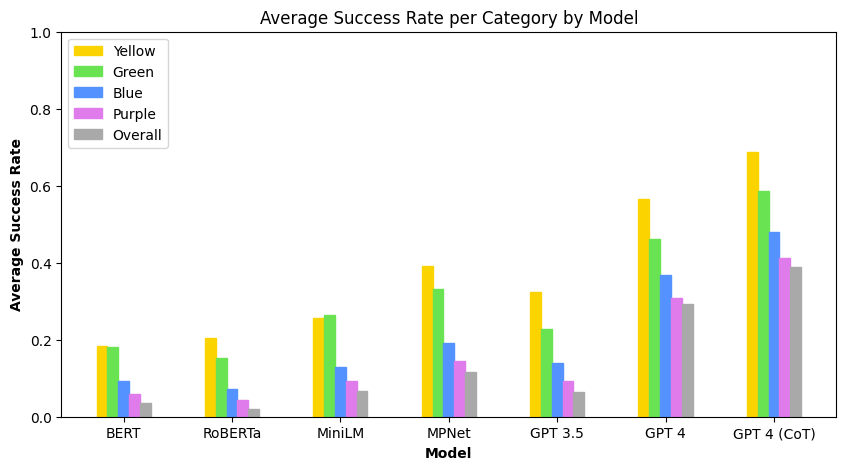

The results reveal significant limitations in current LLMs. GPT-4 Turbo with chain-of-thought prompting achieved the highest success rate at only 38.93%, while GPT-3.5 Turbo managed just 6.43%. Performance dropped dramatically when initial guesses were incorrect, suggesting models struggle with error recovery and can fall into reasoning "rabbit holes."

Figure 2: Success rates across difficulty categories show consistent performance degradation from yellow to purple categories for all models

Usage

The puzzle data is accessible through the online archive at https://connections.swellgarfo.com/archive. The benchmark can be attempted with either sentence embedding approaches (clustering words by cosine similarity) or LLM prompting strategies. Chain-of-thought prompting significantly improves performance, particularly for recovery from incorrect initial guesses. The challenge variant is a more stringent test of reasoning, and most models show substantial performance drops in this format.
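One plausible reading of "clustering words by cosine similarity" is a greedy search: pick the four words with the highest mean pairwise similarity, remove them, and repeat. The sketch below follows that reading and is not necessarily the procedure used in the benchmark. It assumes `vectors` maps each word to an embedding produced elsewhere by a sentence embedding model, and that the word count is a multiple of the group size.

```python
from itertools import combinations
from math import sqrt


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = sqrt(sum(a * a for a in u)) * sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def best_group(words, vectors, size=4):
    """Return the group of `size` words with the highest mean pairwise similarity."""
    def mean_similarity(group):
        pairs = list(combinations(group, 2))
        return sum(cosine(vectors[a], vectors[b]) for a, b in pairs) / len(pairs)

    return max(combinations(words, size), key=mean_similarity)


def greedy_partition(vectors, size=4):
    """Repeatedly peel off the tightest remaining group of words."""
    remaining = sorted(vectors)
    groups = []
    while remaining:
        group = best_group(remaining, vectors, size)
        groups.append(set(group))
        remaining = [w for w in remaining if w not in group]
    return groups
```

With 16 words the search covers at most 1,820 candidate groups per round, so exhaustive enumeration is cheap. Because the approach commits to the tightest cluster first, a strong distractor can pull a word into the wrong group with no opportunity to recover.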