Overview

AVUT (Audio-centric Video Understanding Benchmark without Text Shortcut) is a benchmark for evaluating how well multimodal large language models (MLLMs) understand auditory information in videos. Unlike existing video understanding benchmarks, which focus primarily on visual content, AVUT targets audio-centric tasks that require genuine integration of audio and visual information.

Key Specifications

The benchmark consists of 2,662 carefully selected YouTube videos with an average duration of 67.8 seconds, spanning 18 audio-centric domains including music, sports, TED speeches, cooking, and entertainment. AVUT provides 11,609 question-answer pairs across 8 distinct tasks organized into two main categories:

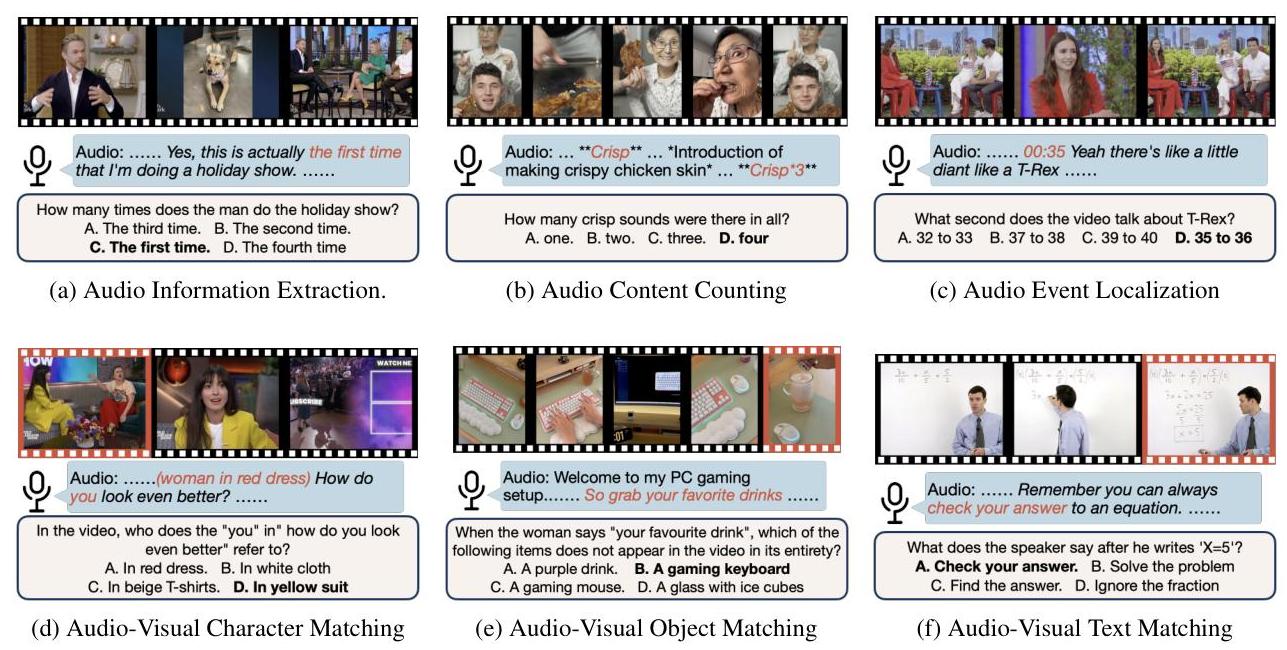

- Audio Content Understanding: Audio Information Extraction (AIE), Audio Content Counting (ACC), Audio Event Localization (AEL)

- Audio-Visual Alignment: Audio-Visual Character Matching (AVCM), Audio-Visual Object Matching (AVOM), Audio-Visual Text Matching (AVTM), Audio-Visual Segment Matching (AVSM), Audio-Visual Speaker Diarization (AVDiar)

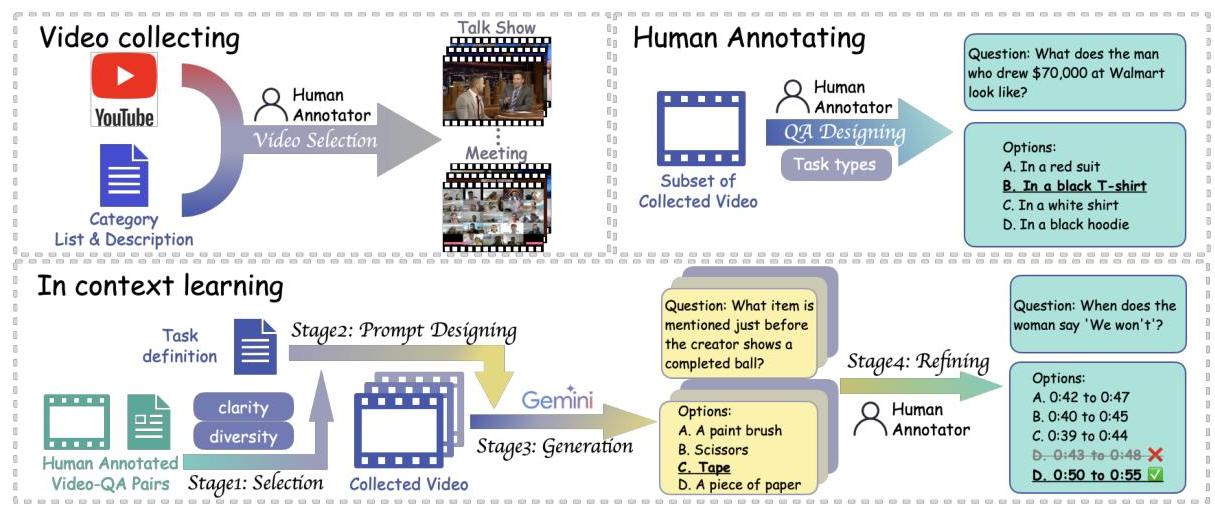

The benchmark includes two datasets: AV-Human (698 videos, 1,734 QA pairs with expert human annotation) and AV-Gemini (1,964 videos, 9,875 QA pairs using semi-automatic annotation with Gemini 1.5 Pro).
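As a quick consistency check, the two subsets can be tallied against the headline totals given earlier (2,662 videos, 11,609 QA pairs). The sketch below uses only the figures stated in this section; the `Subset` class and the `qa_per_video` helper are illustrative names, not part of the AVUT release.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Subset:
    """One AVUT subset, with the counts quoted in the text."""
    name: str
    videos: int
    qa_pairs: int


# Figures copied from the paragraph above.
SUBSETS = [
    Subset("AV-Human", videos=698, qa_pairs=1734),
    Subset("AV-Gemini", videos=1964, qa_pairs=9875),
]

total_videos = sum(s.videos for s in SUBSETS)
total_qa_pairs = sum(s.qa_pairs for s in SUBSETS)


def qa_per_video(subset: Subset) -> float:
    """Average number of QA pairs per video in a subset."""
    return subset.qa_pairs / subset.videos


if __name__ == "__main__":
    print(f"videos: {total_videos}, QA pairs: {total_qa_pairs}")
    for s in SUBSETS:
        print(f"{s.name}: {qa_per_video(s):.2f} QA pairs per video")
```

The totals match the headline numbers. The per-video averages suggest that the semi-automatically annotated AV-Gemini subset is roughly twice as densely annotated as AV-Human.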

Data Examples

Two representative examples from AVUT's task types:

Audio Information Extraction (Multiple Choice)

Question: The person in the audio said when they would have a trip from Central Africa to the farthest edge of Norway?

(A) The spring of 2025

(B) The summer of 2025

(C) The spring of 2026

(D) The summer of 2026

Audio-Visual Character Matching (Multiple Choice)

Question: Who says "there's no way of knowing who did it" in the video?

(A) The woman on the bottom right of the screen

(B) The man on the top right of the screen

(C) The man on the top left of the screen

(D) The woman on the bottom left of the screen

Significance

AVUT addresses critical gaps in current video understanding evaluation:

Text Shortcut Mitigation: AVUT implements a novel answer permutation-based filtering mechanism that ensures models cannot achieve high performance by relying solely on question text. While existing benchmarks show text-only accuracy rates of 32-68%, AVUT maintains text-only accuracy near random chance (25-30%).
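The exact filtering rule is not spelled out here, so the following is only a minimal sketch of how a permutation-based filter could work. It assumes that a question is flagged when a text-only model still picks the correct answer content under every ordering of the options. The names `has_text_shortcut` and `filter_questions`, the item fields, the `threshold` parameter, and the model interface are all hypothetical.

```python
import itertools
from typing import Callable, Dict, List, Sequence

# Hypothetical interface: a text-only model receives the question and the
# ordered options (no audio, no video) and returns the index it selects.
TextOnlyModel = Callable[[str, Sequence[str]], int]


def has_text_shortcut(
    question: str,
    options: Sequence[str],
    answer: str,
    model: TextOnlyModel,
    threshold: float = 1.0,
) -> bool:
    """Return True if the model finds the answer from text alone.

    Assumption: a shortcut exists when the fraction of option orderings
    in which the model selects the correct answer content is at least
    `threshold`. Pure position bias (e.g. always choosing the first
    option) is correct in only 1/len(options) of the orderings.
    """
    orderings = list(itertools.permutations(options))
    hits = sum(
        1 for ordering in orderings
        if ordering[model(question, ordering)] == answer
    )
    return hits / len(orderings) >= threshold


def filter_questions(
    items: List[Dict], model: TextOnlyModel, threshold: float = 1.0
) -> List[Dict]:
    """Keep only the items that the text-only model cannot shortcut."""
    return [
        item for item in items
        if not has_text_shortcut(
            item["question"], item["options"], item["answer"], model, threshold
        )
    ]
```

Checking every ordering separates answers recovered from the option text itself from answers that only happen to sit in a favoured position. With four options this costs 24 text-only queries per question. A lower `threshold` would make the filter stricter.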

Audio-Centric Focus: The benchmark demonstrates that audio information is crucial for comprehensive video understanding. When Gemini 1.5 Pro is evaluated without the audio track, its accuracy drops from 78.34% to 62.78%. Supplying detailed transcriptions in place of the raw audio recovers only part of that gap (69.23% accuracy).

Rigorous Evaluation: Current state-of-the-art models show significant room for improvement, with Gemini 1.5 Pro achieving the highest overall accuracy of 75.67%. Most open-source models struggle particularly with fine-grained audio understanding tasks like Audio Content Counting (ACC) and Audio Event Localization (AEL).

Usage

AVUT is publicly available through the GitHub repository at https://github.com/lark-png/AVUT. The benchmark supports evaluation of audio-visual MLLMs, visual-only MLLMs, and audio-only models, providing a standardized testing framework with unified prompting templates and automated evaluation metrics. Models are tested using multiple-choice accuracy for six tasks, specialized metrics for Audio-Visual Segment Matching (pair and full sequence accuracy), and Diarization Word Error Rate (DWER) for speaker diarization tasks.
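To make the metric names concrete, here is a minimal sketch of multiple-choice accuracy and the two segment-matching scores. The metric definitions are assumptions, not taken from the repository: an AVSM prediction is treated as a sequence of segment indices, pair accuracy as the fraction of positions that match the reference, and full sequence accuracy as the fraction of samples matched exactly. DWER is omitted because it depends on transcript alignment details not described here. All function names are hypothetical.

```python
from typing import Sequence, Tuple


def multiple_choice_accuracy(
    predictions: Sequence[str], answers: Sequence[str]
) -> float:
    """Fraction of questions where the predicted letter equals the answer."""
    if len(predictions) != len(answers):
        raise ValueError("predictions and answers must have the same length")
    if not answers:
        return 0.0
    correct = sum(
        p.strip().upper() == a.strip().upper()
        for p, a in zip(predictions, answers)
    )
    return correct / len(answers)


def segment_matching_scores(
    predicted: Sequence[Sequence[int]], reference: Sequence[Sequence[int]]
) -> Tuple[float, float]:
    """Return (pair accuracy, full sequence accuracy) over all samples.

    Assumed definitions: pair accuracy counts individual positions that
    agree with the reference; full sequence accuracy counts samples whose
    whole predicted sequence equals the reference.
    """
    if len(predicted) != len(reference):
        raise ValueError("predicted and reference must have the same length")
    total_pairs = matched_pairs = exact_matches = 0
    for pred, ref in zip(predicted, reference):
        total_pairs += len(ref)
        matched_pairs += sum(p == r for p, r in zip(pred, ref))
        exact_matches += int(list(pred) == list(ref))
    pair_acc = matched_pairs / total_pairs if total_pairs else 0.0
    full_acc = exact_matches / len(reference) if reference else 0.0
    return pair_acc, full_acc
```

For example, `multiple_choice_accuracy(["A", "b", "C", "D"], ["A", "B", "D", "D"])` counts three of four answers as correct. The comparison is case-insensitive, which is a design choice of this sketch.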