Cornerstone Robotics Limited

28 May 2025

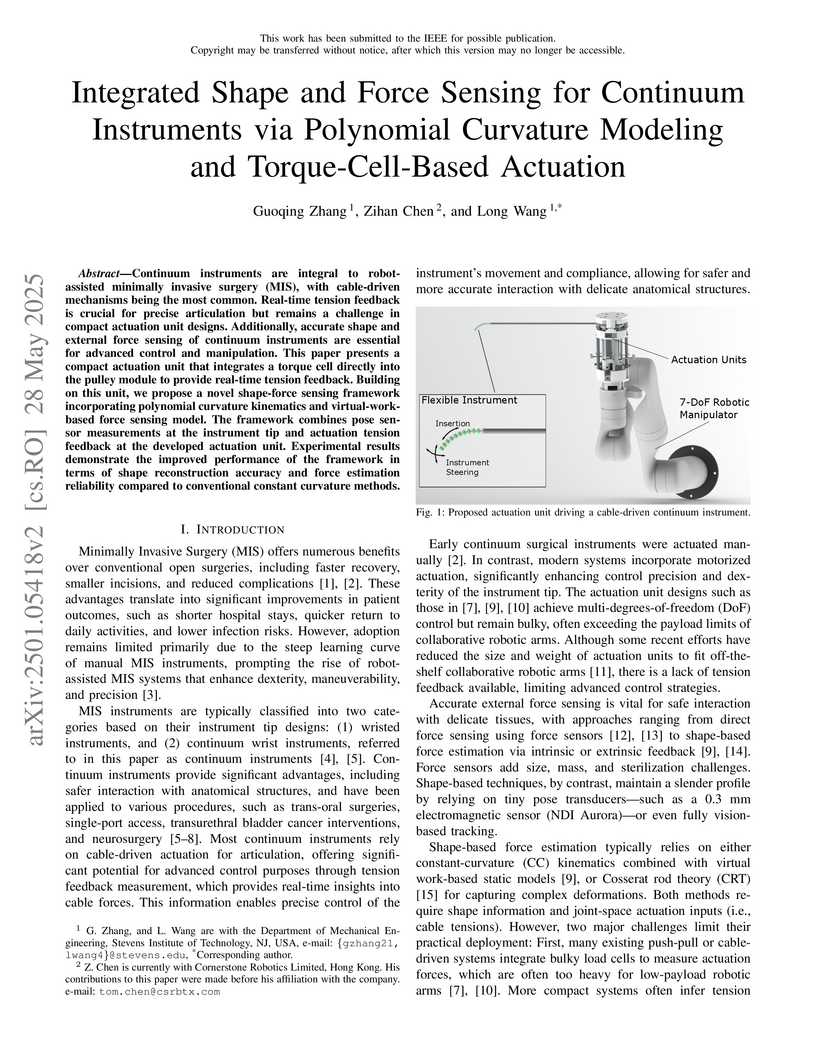

Continuum instruments are integral to robot-assisted minimally invasive surgery (MIS), and cable-driven mechanisms are the most common way to actuate them. Real-time tension feedback is crucial for precise articulation but remains a challenge in compact actuation unit designs. Accurate shape and external force sensing of continuum instruments are also essential for advanced control and manipulation. This paper presents a compact actuation unit that integrates a torque cell directly into the pulley module to provide real-time tension feedback. Building on this unit, we propose a novel shape-force sensing framework that incorporates polynomial curvature kinematics and a virtual-work-based force sensing model. The framework combines pose sensor measurements at the instrument tip with tension feedback from the developed actuation unit. Experimental results show that, compared with conventional constant curvature methods, the framework improves shape reconstruction accuracy and force estimation reliability.
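To illustrate what a polynomial curvature model generalizes, the sketch below integrates a planar backbone whose curvature varies along arc length as κ(s) = a0 + a1·s + a2·s² + …; keeping only a0 recovers the constant curvature arc used by conventional methods. This is a minimal sketch under stated assumptions, not the authors' implementation: the planar (2D) simplification, the hypothetical function name `backbone_shape`, and the midpoint integration scheme are illustrative choices, and it covers only forward shape computation, not fitting to tip pose measurements or the tension-based force estimation.

```python
import math


def backbone_shape(coeffs, length, n=200):
    """Sample a planar backbone whose curvature is a polynomial in arc length s.

    Assumption (illustrative only): kappa(s) = coeffs[0] + coeffs[1]*s + coeffs[2]*s**2 + ...
    The base sits at the origin with its tangent along +x.
    Returns n + 1 samples (x, y, theta) from base (s = 0) to tip (s = length).
    """

    def theta(s):
        # Bending angle is the exact integral of the polynomial curvature from 0 to s.
        return sum(c * s ** (i + 1) / (i + 1) for i, c in enumerate(coeffs))

    ds = length / n
    x = y = 0.0
    pts = [(0.0, 0.0, 0.0)]
    for k in range(n):
        # Midpoint rule for x' = cos(theta), y' = sin(theta).
        th_mid = theta((k + 0.5) * ds)
        x += math.cos(th_mid) * ds
        y += math.sin(th_mid) * ds
        pts.append((x, y, theta((k + 1) * ds)))
    return pts
```

For example, `backbone_shape([math.pi / 2], 1.0)[-1]` returns a tip pose close to (2/π, 2/π, π/2), matching the closed-form constant curvature arc. A nonzero `a1` or `a2` redistributes the bending along the backbone, which is the extra freedom a polynomial model has over a single arc when reconstructing shape.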

11 Nov 2023

Robotic skill learning has been increasingly studied, but collecting demonstrations is more challenging than collecting images/videos in computer vision or text in natural language processing. This paper presents a skill learning paradigm that uses intuitive teleoperation devices to efficiently generate high-quality human demonstrations for data-driven robotic skill learning. Building on a reliable teleoperation interface, the da Vinci Research Kit (dVRK) master, we propose a system called dVRK-Simulator-for-Demonstration (dS4D). Experiments on various manipulation tasks show the system's effectiveness and its efficiency advantages over other interfaces. We also investigate using the collected data for policy learning, which verifies the initial feasibility of the approach. We believe the proposed paradigm can facilitate robot learning driven by high-quality demonstrations while making those demonstrations efficient to generate.
