Eyeline Labs

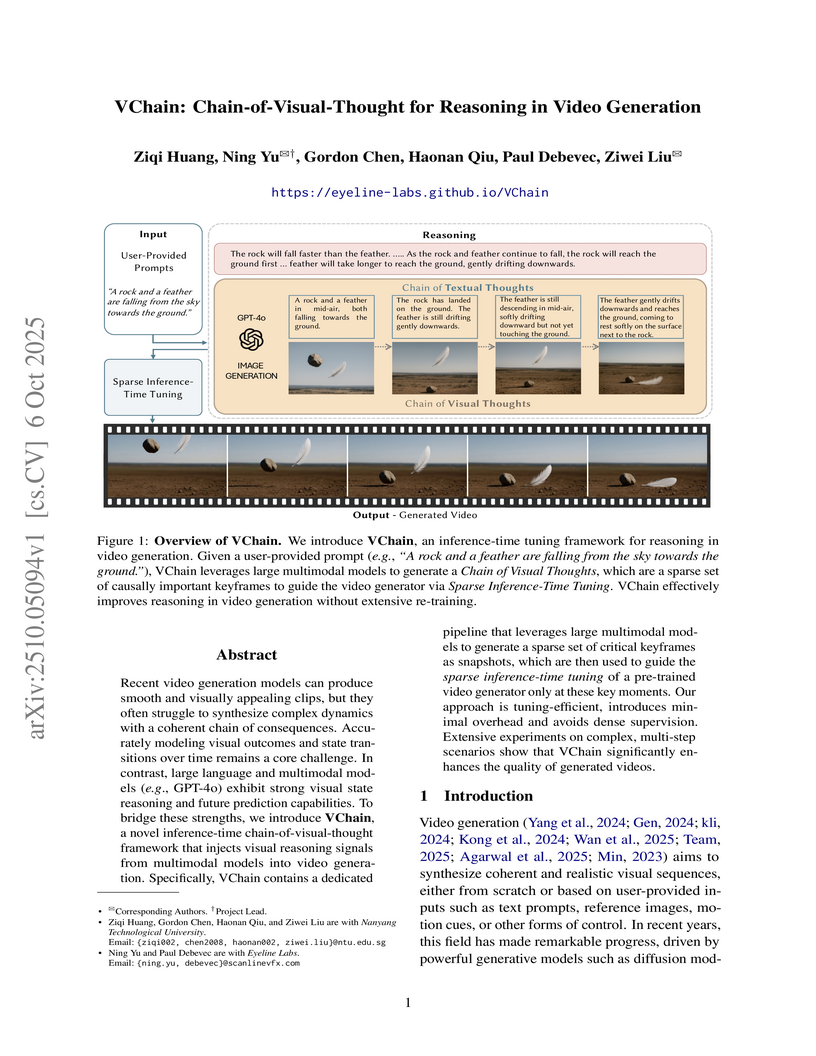

The VChain framework integrates a large multimodal model's reasoning capabilities into video generation through a three-stage, inference-time process, generating videos with enhanced causal and physical coherence. It employs "chain-of-visual-thought" keyframes to guide pre-trained diffusion models, leading to more logically consistent and plausible dynamic sequences.

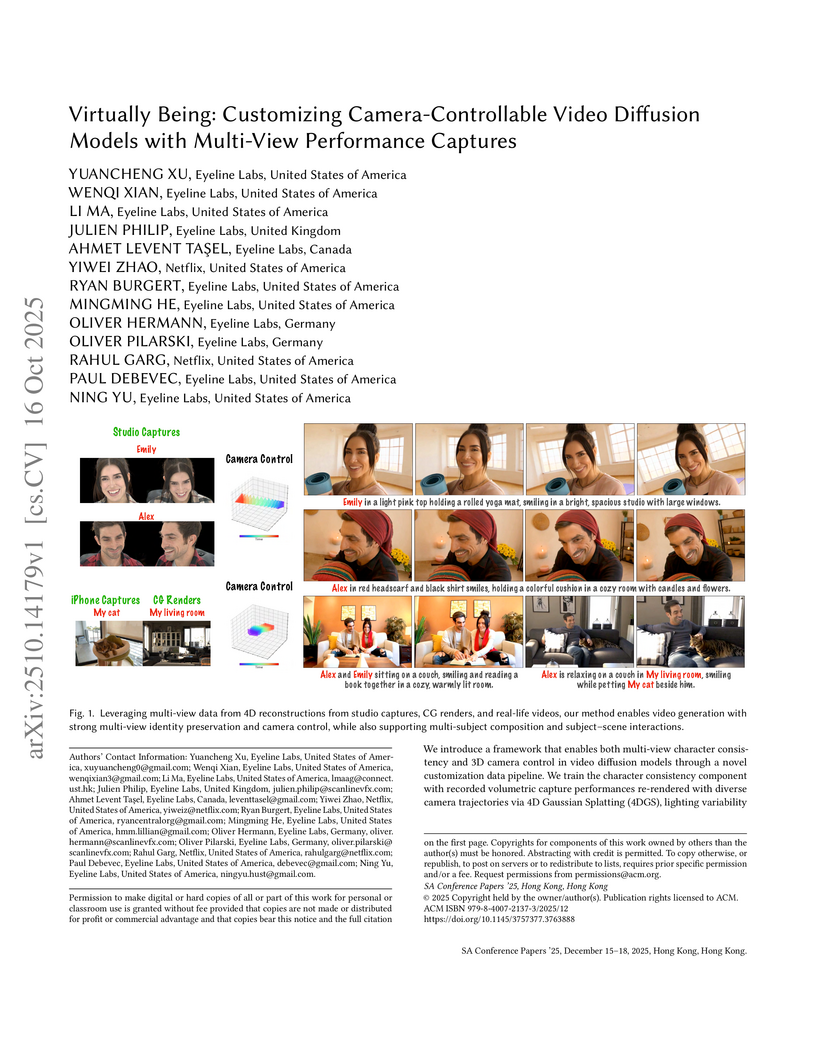

The "Virtually Being" framework introduces a method for customizing camera-controllable video diffusion models using multi-view performance captures. It achieves superior multi-view identity preservation and precise 3D camera control, enabling high-fidelity character generation and interaction tailored for virtual production workflows.

There are no more papers matching your filters at the moment.