Korea Culture Technology Institute (KCTI)

Visual perception in autonomous driving is a crucial part of a vehicle to

navigate safely and sustainably in different traffic conditions. However, in

bad weather such as heavy rain and haze, the performance of visual perception

is greatly affected by several degrading effects. Recently, deep learning-based

perception methods have addressed multiple degrading effects to reflect

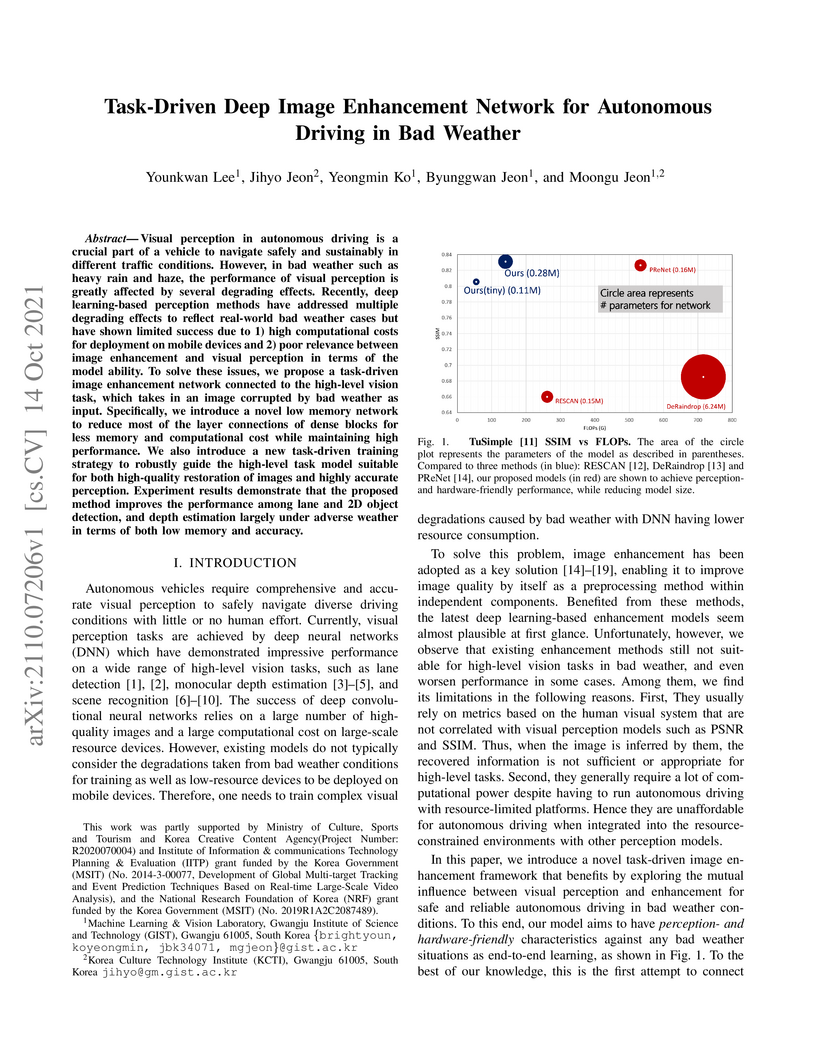

real-world bad weather cases but have shown limited success due to 1) high

computational costs for deployment on mobile devices and 2) poor relevance

between image enhancement and visual perception in terms of the model ability.

To solve these issues, we propose a task-driven image enhancement network

connected to the high-level vision task, which takes in an image corrupted by

bad weather as input. Specifically, we introduce a novel low memory network to

reduce most of the layer connections of dense blocks for less memory and

computational cost while maintaining high performance. We also introduce a new

task-driven training strategy to robustly guide the high-level task model

suitable for both high-quality restoration of images and highly accurate

perception. Experiment results demonstrate that the proposed method improves

the performance among lane and 2D object detection, and depth estimation

largely under adverse weather in terms of both low memory and accuracy.
