autonomous-vehicles

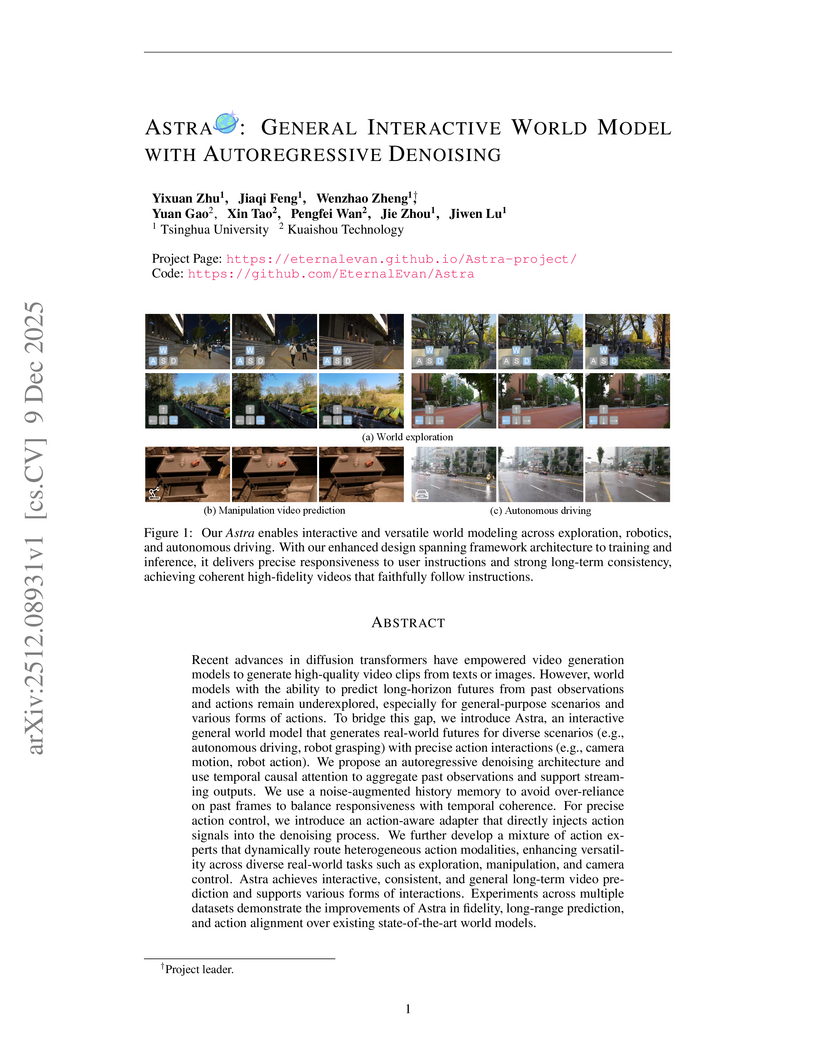

Astra, a collaborative effort from Tsinghua University and Kuaishou Technology, introduces an interactive general world model using an autoregressive denoising framework to generate real-world futures with precise action interactions. The model achieves superior performance in instruction following and visual fidelity across diverse simulation scenarios while efficiently extending a pre-trained video diffusion backbone.

10 Dec 2025

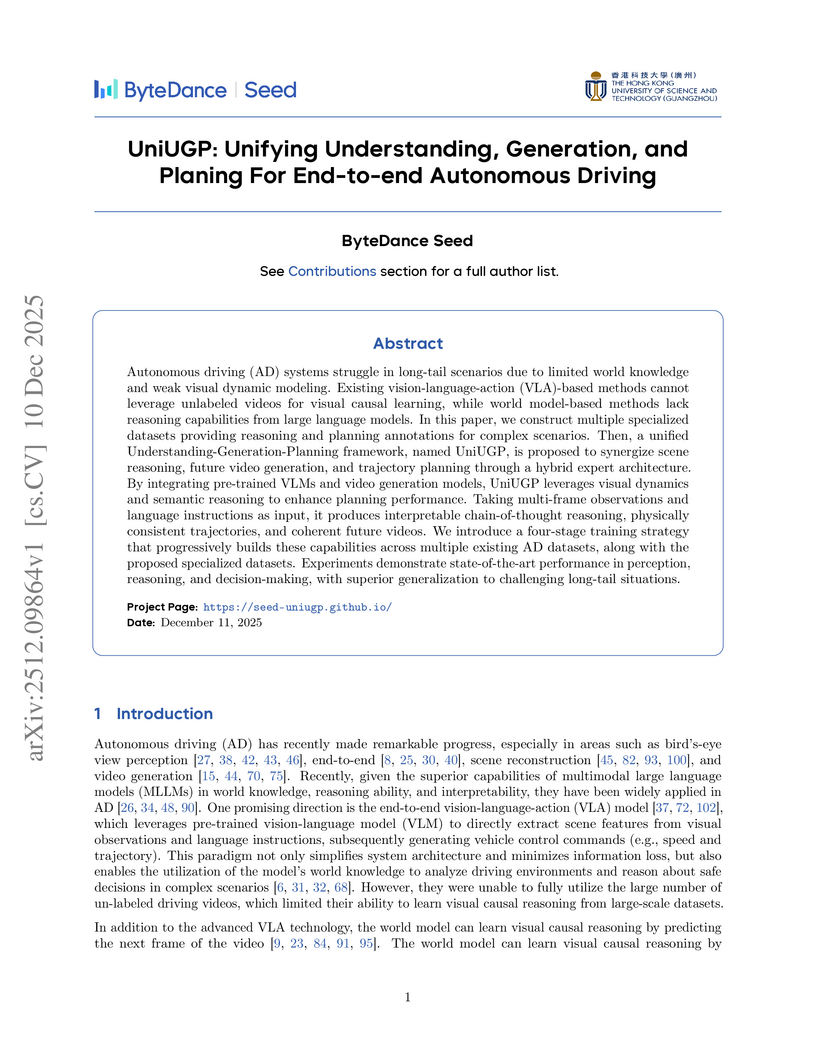

UniUGP presents a unified framework for end-to-end autonomous driving, integrating scene understanding, future video generation, and trajectory planning through a hybrid expert architecture. This approach enhances interpretability with Chain-of-Thought reasoning and demonstrates state-of-the-art performance in challenging long-tail scenarios and multimodal capabilities across various benchmarks.

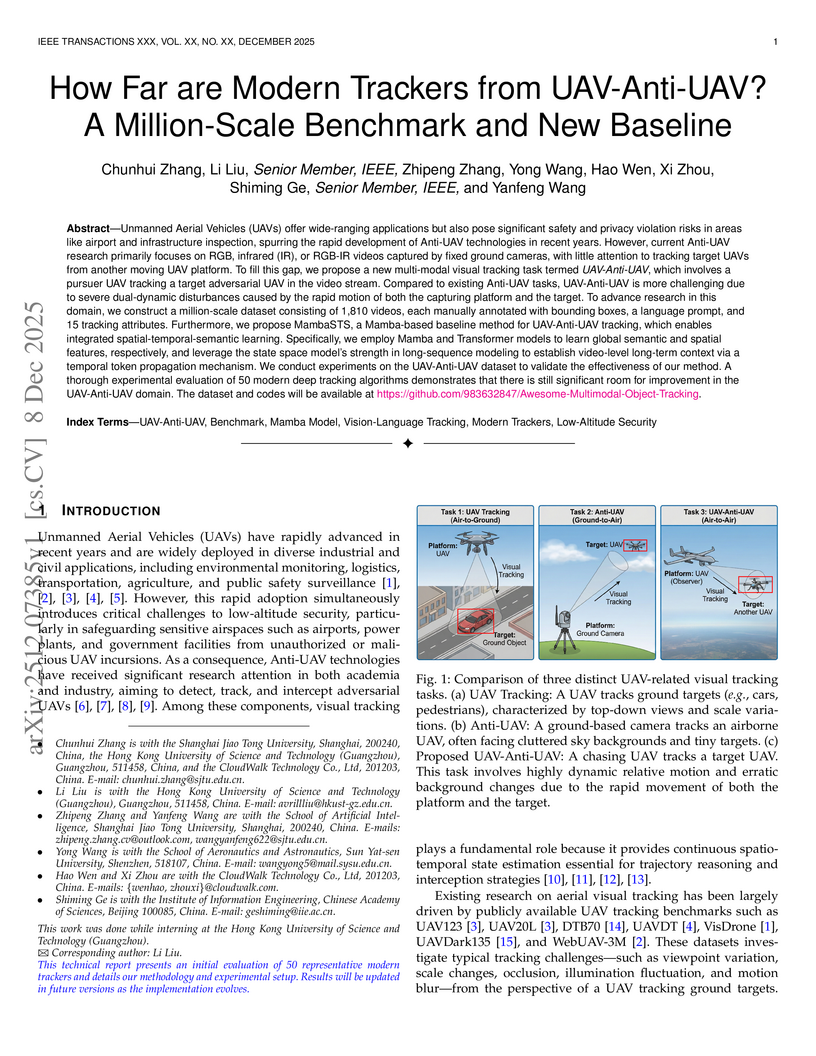

Researchers introduce the novel UAV-Anti-UAV tracking task, where a pursuer drone tracks an adversarial one, and create the first million-scale benchmark dataset for this challenging air-to-air scenario. They also propose MambaSTS, a new baseline tracker that integrates spatial, temporal, and semantic learning using Mamba and Transformer architectures, achieving a Mean Accuracy (mACC) of 0.443, which is 6.6 percentage points higher than the next best method on the new benchmark.

The Khalasi framework implements an end-to-end reinforcement learning pipeline, enabling autonomous surface vehicles (ASVs) to navigate energy-efficiently in complex, vortical flow fields using only local sensor data. This approach achieves a 43.37% energy saving over baselines and demonstrates robust generalization to unseen synthetic and real-world ocean currents.

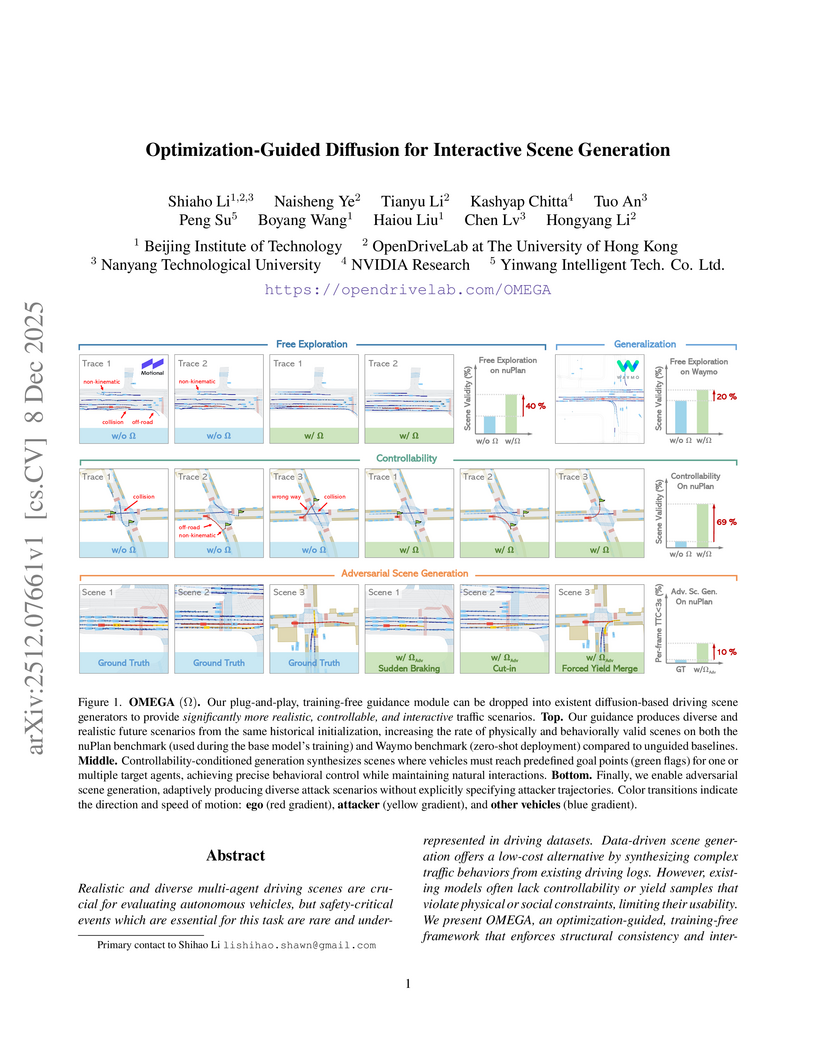

The OMEGA framework introduces a training-free method to enhance diffusion models for multi-agent driving scene generation, significantly boosting scenario realism, structural consistency, and controllability. It achieves a 72.27% valid scene rate on nuPlan, a 39.92 percentage point increase over baselines, and generates 5 times more near-collision frames for adversarial testing while maintaining plausibility.
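
The two figures above mix an absolute rate with a percentage-point gain, so it can help to separate the two. The following is a small arithmetic sketch; it assumes the 39.92-point gain is measured against a single baseline, which the summary does not state explicitly, and the variable names are illustrative.

```python
# Reported in the summary above.
omega_valid_rate = 72.27      # percent of generated scenes that are valid
gain_points = 39.92           # percentage-point increase over the baseline

# Derived under the single-baseline assumption (not reported in the summary).
baseline_valid_rate = round(omega_valid_rate - gain_points, 2)

# A percentage-point gain is an absolute difference; the relative gain is larger.
relative_gain = gain_points / baseline_valid_rate

print(f"implied baseline valid rate: {baseline_valid_rate:.2f}%")
print(f"relative improvement: {relative_gain:.1%}")
```

Under that assumption the implied baseline is 32.35%, so the valid scene rate more than doubles in relative terms.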

The advancement of camera-only Bird's-Eye-View (BEV) perception is currently impeded by a fundamental tension between state-of-the-art performance and on-vehicle deployment tractability. This bottleneck stems from a deep-rooted dependency on computationally prohibitive view transformations and bespoke, platform-specific kernels. This paper introduces FastBEV++, a framework engineered to reconcile this tension, demonstrating that high performance and deployment efficiency can be achieved in unison via two guiding principles: Fast by Algorithm and Deployable by Design. We realize the "Deployable by Design" principle through a novel view transformation paradigm that decomposes the monolithic projection into a standard Index-Gather-Reshape pipeline. Enabled by a deterministic pre-sorting strategy, this transformation is executed entirely with elementary, operator-native primitives (e.g., Gather, Matrix Multiplication), which eliminates the need for specialized CUDA kernels and ensures fully TensorRT-native portability. Concurrently, our framework is "Fast by Algorithm", leveraging this decomposed structure to seamlessly integrate an end-to-end, depth-aware fusion mechanism. This jointly learned depth modulation, further bolstered by temporal aggregation and robust data augmentation, significantly enhances the geometric fidelity of the BEV representation. Validation on the nuScenes benchmark corroborates the efficacy of our approach. FastBEV++ reaches a new state-of-the-art NDS of 0.359 while maintaining exceptional real-time performance, exceeding 134 FPS on automotive-grade hardware (e.g., Tesla T4). By offering a solution that is free of custom plugins yet highly accurate, FastBEV++ presents a mature and scalable design philosophy for production autonomous systems. The code is released at: this https URL
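
The Index-Gather-Reshape idea can be illustrated with a toy lookup. This is a minimal sketch and not the FastBEV++ implementation: the function names, the scalar per-cell features, and the `project` callable are all assumptions made for illustration, and the deterministic pre-sorting step mentioned in the abstract is not modeled.

```python
# Hypothetical sketch: names and shapes are illustrative, not from FastBEV++.

def build_index_table(bev_h, bev_w, project):
    """Precompute the flat image-feature index for every BEV cell.

    `project` is an assumed callable mapping a BEV cell (row, col) to a flat
    feature index, or None when the cell is not visible in the image.
    """
    table = []
    for r in range(bev_h):
        for c in range(bev_w):
            idx = project(r, c)
            table.append(-1 if idx is None else idx)
    return table


def gather_to_bev(flat_features, index_table, bev_h, bev_w, fill=0.0):
    """Index -> Gather -> Reshape: a pure lookup that needs no custom kernel."""
    # Gather: read one feature per BEV cell, using `fill` for invisible cells.
    gathered = [fill if i < 0 else flat_features[i] for i in index_table]
    # Reshape: fold the flat list back into a bev_h x bev_w grid.
    return [gathered[r * bev_w:(r + 1) * bev_w] for r in range(bev_h)]


# Toy example: a 2x3 feature map flattened to 6 values, viewed as a 2x2 BEV grid.
features = [10.0, 11.0, 12.0, 13.0, 14.0, 15.0]
toy_projection = {(0, 0): 0, (0, 1): 2, (1, 0): 5}  # cell (1, 1) is not visible
table = build_index_table(2, 2, lambda r, c: toy_projection.get((r, c)))
bev = gather_to_bev(features, table, 2, 2)
print(table)
print(bev)
```

Because the index table depends only on camera geometry, it can be computed once offline; at inference time the transform reduces to a gather and a reshape, which is the kind of operation standard inference runtimes already provide.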

Researchers from Fudan University developed a unified framework for Aerial Vision-Language Navigation, enabling UAVs to follow natural language instructions using only monocular RGB observations. This framework achieves state-of-the-art performance among RGB-only methods and demonstrates competitive capabilities compared to systems relying on additional sensors.

Convolutional Neural Networks (CNNs) have proven highly effective for edge and mobile vision tasks due to their computational efficiency. While many recent works seek to enhance CNNs with global contextual understanding via self-attention-based Vision Transformers, these approaches often introduce significant computational overhead. In this work, we demonstrate that it is possible to retain strong global perception without relying on computationally expensive components. We present GlimmerNet, an ultra-lightweight convolutional network built on the principle of separating receptive field diversity from feature recombination. GlimmerNet introduces Grouped Dilated Depthwise Convolutions (GDBlocks), which partition channels into groups with distinct dilation rates, enabling multi-scale feature extraction at no additional parameter cost. To fuse these features efficiently, we design a novel Aggregator module that recombines cross-group representations using grouped pointwise convolution, significantly lowering parameter overhead. With just 31K parameters and 29% fewer FLOPs than the most recent baseline, GlimmerNet achieves a new state-of-the-art weighted F1-score of 0.966 on the UAV-focused AIDERv2 dataset. These results establish a new accuracy-efficiency trade-off frontier for real-time emergency monitoring on resource-constrained UAV platforms. Our implementation is publicly available at this https URL.
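
The "no additional parameter cost" claim follows from how depthwise and grouped convolutions are counted. The sketch below checks the arithmetic only; the channel count, dilation rates, and group count are assumed values for illustration and are not GlimmerNet's actual configuration. Biases are ignored.

```python
def depthwise_params(channels, kernel=3):
    """Weights in a depthwise conv: one kernel x kernel filter per channel."""
    return channels * kernel * kernel


def effective_kernel(kernel, dilation):
    """Spatial extent covered by a dilated kernel along one axis."""
    return kernel + (kernel - 1) * (dilation - 1)


def pointwise_params(c_in, c_out, groups=1):
    """Weights in a 1x1 conv; each output channel sees c_in / groups inputs."""
    assert c_in % groups == 0 and c_out % groups == 0
    return (c_in // groups) * c_out


channels = 32                 # assumed width
dilations = [1, 2, 3, 4]      # assumed per-group dilation rates
group_size = channels // len(dilations)

plain = depthwise_params(channels)
grouped = sum(depthwise_params(group_size) for _ in dilations)
spans = [effective_kernel(3, d) for d in dilations]

dense_pw = pointwise_params(channels, channels)
grouped_pw = pointwise_params(channels, channels, groups=4)

print(plain, grouped)         # same weight count with or without dilation groups
print(spans)                  # each group covers a different spatial extent
print(dense_pw, grouped_pw)   # grouped 1x1 conv uses 1/groups of the weights
```

Dilation changes where a filter samples, not how many weights it has, so assigning different rates to different channel groups widens the receptive field for free, while grouping the pointwise convolution divides its weight count by the number of groups.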

Researchers from Tsinghua University and Xiaomi EV developed DGGT, a feedforward, pose-free framework for 4D reconstruction of dynamic driving scenes. The method achieves 27.41 dB PSNR and 0.846 SSIM on the Waymo Open Dataset for novel view synthesis, outperforming prior work while processing scenes in 0.39 seconds and demonstrating strong zero-shot generalization across diverse datasets.

Traffic accidents result in millions of injuries and fatalities globally, with a significant number occurring at intersections each year. Traffic Signal Control (TSC) is an effective strategy for enhancing safety at these urban junctures. Despite the growing popularity of Reinforcement Learning (RL) methods in optimizing TSC, these methods often prioritize driving efficiency over safety, thus failing to address the critical balance between these two aspects. Additionally, these methods usually lack interpretability. Counterfactual (CF) learning is a promising approach in various causal analysis fields. In this study, we introduce a novel framework to improve RL for safety aspects in TSC. This framework introduces a novel method based on CF learning to address the question: "What if, when an unsafe event occurs, we backtrack to perform alternative actions, and will this unsafe event still occur in the subsequent period?" To answer this question, we propose a new structural causal model to predict the result after executing different actions, and we propose a new CF module that integrates with additional "X" modules to promote safe RL practices. Our new algorithm, CFLight, which is derived from this framework, effectively tackles challenging safety events and significantly improves safety at intersections through a near-zero collision control strategy. Through extensive numerical experiments on both real-world and synthetic datasets, we demonstrate that CFLight reduces collisions and improves overall traffic performance compared to conventional RL methods and the recent safe RL model. Moreover, our method represents a generalized and safe framework for RL methods, opening possibilities for applications in other domains. The data and code are available on GitHub at this https URL.
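
The counterfactual question posed in the abstract (backtrack from an unsafe event, try alternative actions, and check whether the event still occurs) can be sketched with a toy deterministic model. Everything here is an assumption for illustration: the scalar "risk" state, the `hold` and `clear` actions, and the threshold are invented, and the hand-written `step` function stands in for the learned structural causal model that CFLight uses.

```python
UNSAFE_LEVEL = 5  # assumed threshold at which the toy state counts as unsafe


def step(risk, action):
    """Toy dynamics: 'hold' raises risk by 2, any other action lowers it by 1."""
    if action == "hold":
        return risk + 2
    return max(0, risk - 1)


def rollout(risk, actions):
    """Return every state visited, starting with the initial one."""
    states = [risk]
    for action in actions:
        risk = step(risk, action)
        states.append(risk)
    return states


def first_unsafe(states):
    """Index of the first unsafe state, or None if the rollout stays safe."""
    for t, s in enumerate(states):
        if s >= UNSAFE_LEVEL:
            return t
    return None


def counterfactual_check(initial, actions, backtrack, alternative):
    """Replay the last `backtrack` steps before an unsafe event with `alternative`."""
    states = rollout(initial, actions)
    t_unsafe = first_unsafe(states)
    if t_unsafe is None:
        return None  # nothing to explain: the factual rollout was safe
    t_back = max(0, t_unsafe - backtrack)
    cf_states = rollout(states[t_back], [alternative] * (t_unsafe - t_back))
    return {
        "unsafe_step": t_unsafe,
        "backtrack_to": t_back,
        "still_unsafe": first_unsafe(cf_states) is not None,
    }


factual = ["hold", "hold", "hold"]
avoided = counterfactual_check(0, factual, backtrack=2, alternative="clear")
repeated = counterfactual_check(0, factual, backtrack=2, alternative="hold")
print(avoided)
print(repeated)
```

In this toy run the unsafe state at step 3 disappears when the last two actions are replaced with `clear`, which is the kind of signal a counterfactual module could feed back to the policy.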

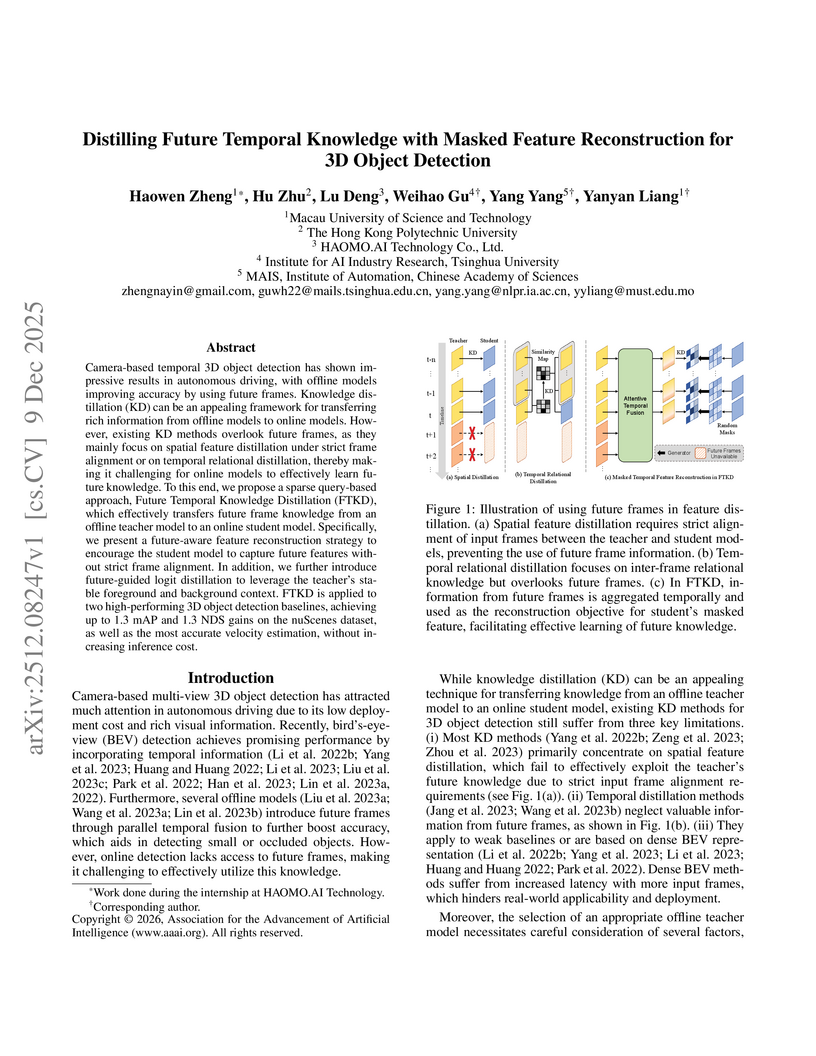

Camera-based temporal 3D object detection has shown impressive results in autonomous driving, with offline models improving accuracy by using future frames. Knowledge distillation (KD) can be an appealing framework for transferring rich information from offline models to online models. However, existing KD methods overlook future frames, as they mainly focus on spatial feature distillation under strict frame alignment or on temporal relational distillation, thereby making it challenging for online models to effectively learn future knowledge. To this end, we propose a sparse query-based approach, Future Temporal Knowledge Distillation (FTKD), which effectively transfers future frame knowledge from an offline teacher model to an online student model. Specifically, we present a future-aware feature reconstruction strategy to encourage the student model to capture future features without strict frame alignment. In addition, we further introduce future-guided logit distillation to leverage the teacher's stable foreground and background context. FTKD is applied to two high-performing 3D object detection baselines, achieving up to 1.3 mAP and 1.3 NDS gains on the nuScenes dataset, as well as the most accurate velocity estimation, without increasing inference cost.
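
For readers unfamiliar with logit distillation, the generic form is a temperature-softened KL divergence between teacher and student class distributions. The sketch below shows that standard formulation only; it is not FTKD's future-guided variant, whose details are not given in the abstract, and the function names and example logits are assumptions.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax over a list of logits."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kd_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return (temperature ** 2) * kl


teacher = [4.0, 1.0, 0.5]      # hypothetical teacher logits for one query
close_student = [3.5, 1.2, 0.4]
far_student = [0.5, 1.0, 4.0]

print(kd_loss(teacher, teacher))
print(kd_loss(close_student, teacher))
print(kd_loss(far_student, teacher))
```

The loss is zero when the student matches the teacher and grows as the two distributions diverge. The T^2 factor is the common convention for keeping gradient magnitudes comparable across temperatures.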

10 Dec 2025

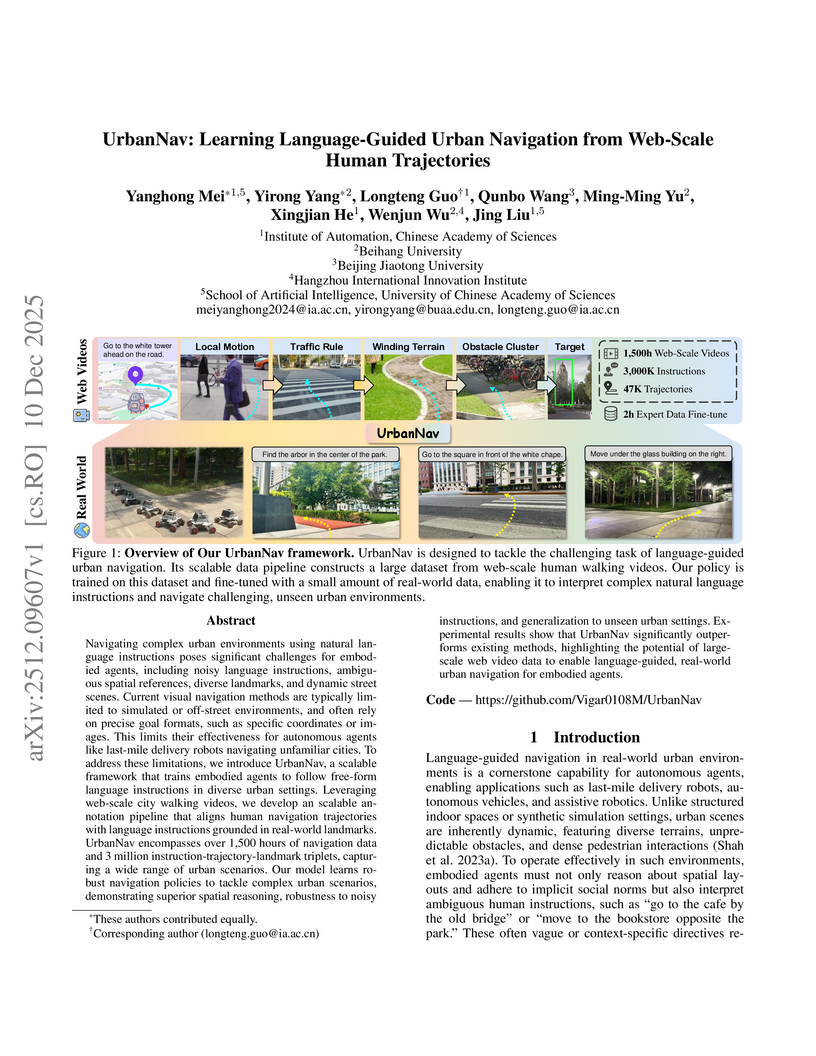

Navigating complex urban environments using natural language instructions poses significant challenges for embodied agents, including noisy language instructions, ambiguous spatial references, diverse landmarks, and dynamic street scenes. Current visual navigation methods are typically limited to simulated or off-street environments, and often rely on precise goal formats, such as specific coordinates or images. This limits their effectiveness for autonomous agents like last-mile delivery robots navigating unfamiliar cities. To address these limitations, we introduce UrbanNav, a scalable framework that trains embodied agents to follow free-form language instructions in diverse urban settings. Leveraging web-scale city walking videos, we develop a scalable annotation pipeline that aligns human navigation trajectories with language instructions grounded in real-world landmarks. UrbanNav encompasses over 1,500 hours of navigation data and 3 million instruction-trajectory-landmark triplets, capturing a wide range of urban scenarios. Our model learns robust navigation policies to tackle complex urban scenarios, demonstrating superior spatial reasoning, robustness to noisy instructions, and generalization to unseen urban settings. Experimental results show that UrbanNav significantly outperforms existing methods, highlighting the potential of large-scale web video data to enable language-guided, real-world urban navigation for embodied agents.

04 Dec 2025

MindDrive is an integrated framework for end-to-end autonomous driving that combines world models for "what-if" future scene simulations with vision-language models for human-aligned, multi-objective trajectory evaluation. The system achieved state-of-the-art performance on the NAVSIM benchmark, reaching an 88.9 PDMS on NAVSIM-v1 Navtest and a 30.5 EPDMS on NAVSIM-v2 Navhard, demonstrating enhanced safety and robustness.

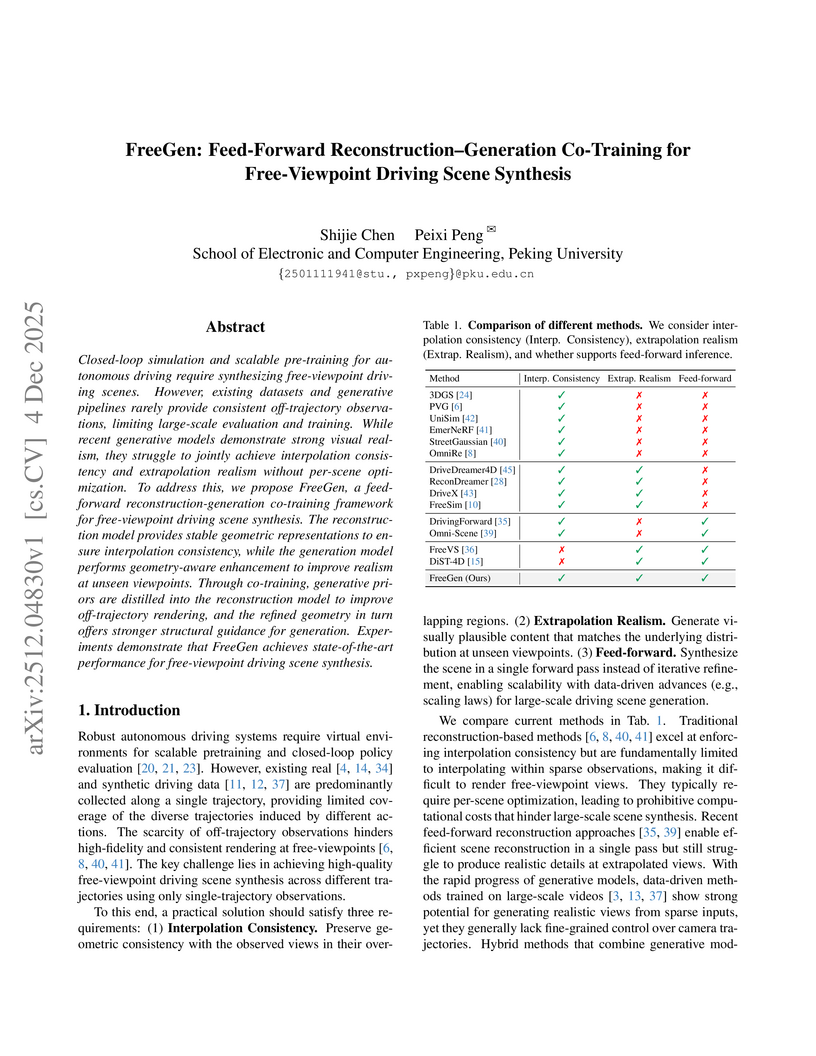

FreeGen introduces a feed-forward reconstruction-generation co-training framework that integrates 3D Gaussian Splatting with geometry-aware diffusion models for free-viewpoint driving scene synthesis. It achieves state-of-the-art performance on the nuScenes dataset, with an FID of 11.34 and FVD of 44.98 at a 12m lateral shift, outperforming previous methods without requiring auxiliary LiDAR or 3D bounding box annotations.

Chinese Academy of Sciences; Fudan University; University of Science and Technology of China; Shanghai Jiao Tong University; Aerospace Information Research Institute; Institute of Trustworthy Embodied AI, Fudan University; Key Laboratory of Target Cognition and Application Technology, Aerospace Information Research Institute, Chinese Academy of Sciences

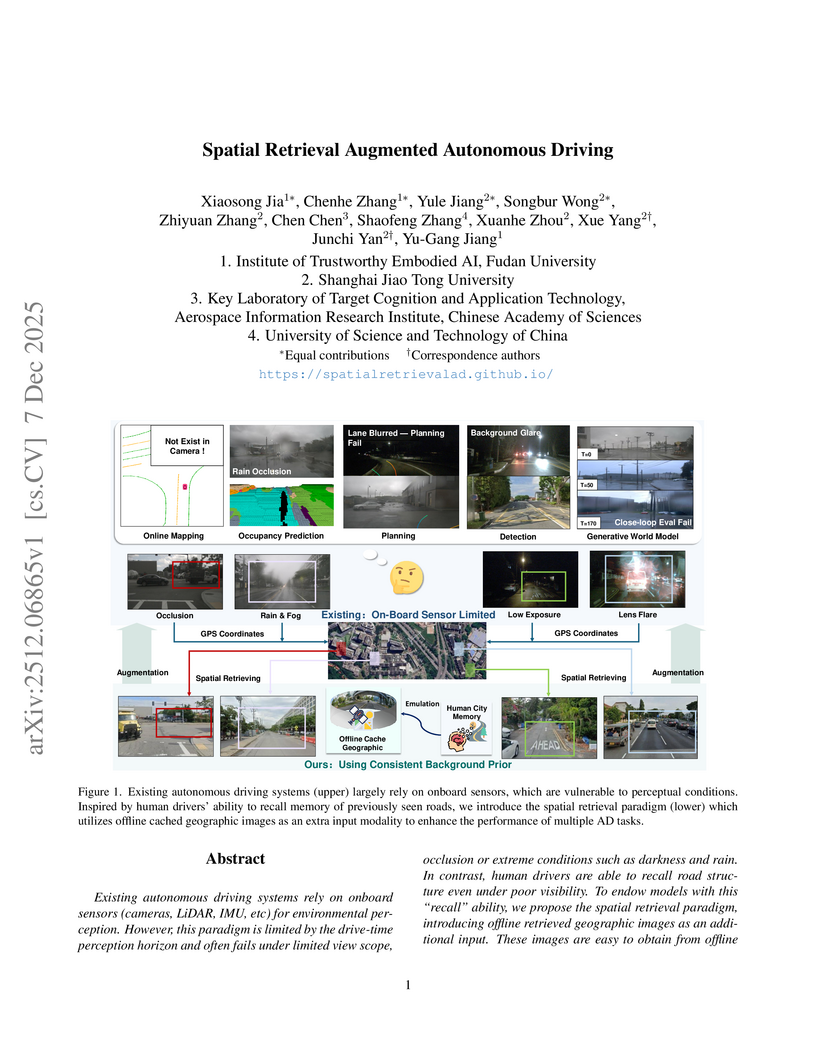

Researchers from Fudan University and Shanghai Jiao Tong University established a spatial retrieval-augmented autonomous driving paradigm, integrating offline geographic images to enhance autonomous vehicle robustness. This approach boosted online mapping performance by up to 11.9% mAP and reduced collision rates in planning, particularly under challenging conditions.

Mimir introduces a hierarchical dual-system for end-to-end autonomous driving that incorporates Laplace distribution-based uncertainty propagation for goal points and a multi-rate guidance mechanism. This framework achieves a 20% improvement in the EPDMS driving score on the Navhard benchmark and a 1.6x speedup for its high-level guidance module.
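
A Laplace distribution lets a model predict both a goal point and a per-axis scale that expresses its uncertainty. The sketch below shows the standard Laplace negative log-likelihood, assuming independent x and y axes; Mimir's actual loss and its uncertainty propagation scheme are not described in this summary, so the function names and example numbers are illustrative only.

```python
import math


def laplace_nll(target, mu, b):
    """Negative log-likelihood of `target` under Laplace(mu, b)."""
    if b <= 0:
        raise ValueError("scale b must be positive")
    return math.log(2.0 * b) + abs(target - mu) / b


def goal_point_nll(target_xy, mu_xy, b_xy):
    """Sum of per-axis Laplace NLLs for a 2D goal point (axes assumed independent)."""
    return sum(laplace_nll(t, m, b) for t, m, b in zip(target_xy, mu_xy, b_xy))


# For a fixed error of 2.0, the NLL is lowest when the scale matches the error.
error = 2.0
for scale in (1.0, 2.0, 4.0):
    print(scale, round(laplace_nll(error, 0.0, scale), 3))

print(goal_point_nll((10.0, 2.0), (9.0, 2.5), (1.0, 0.5)))
```

The loss penalizes both overconfidence (a small scale with a large error) and underconfidence (a large scale with a small error), which is what makes the predicted scale usable as an uncertainty estimate downstream.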

01 Dec 2025

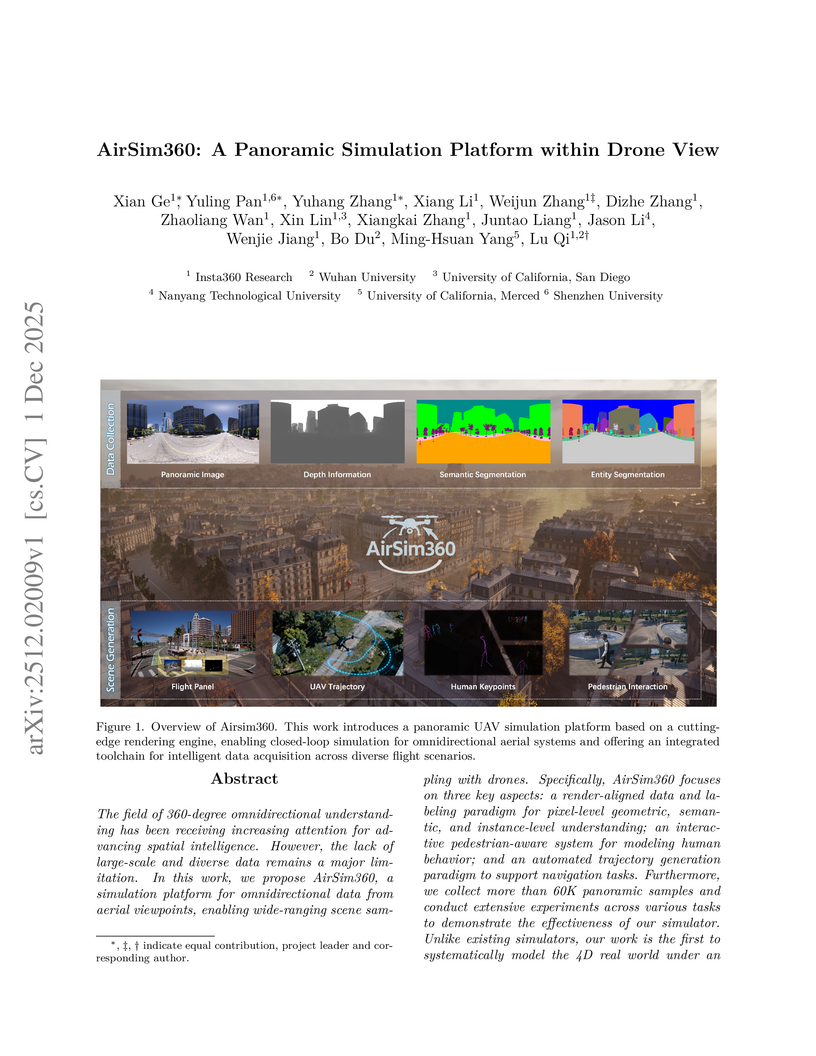

Insta360 Research and its collaborators developed AirSim360, a panoramic simulation platform for drones, which generates high-fidelity 360-degree aerial data with rich, render-aligned annotations. The platform's synthetic data notably improved monocular pedestrian distance estimation by reducing average angular error from 21.21° to 17.02° and enhanced performance in panoramic depth and segmentation tasks.

Researchers at the University of Wisconsin-Madison, NVIDIA, Stanford University, and Johns Hopkins University developed dVLM-AD, a diffusion-based Vision-Language Model for autonomous driving. This model improves the consistency between high-level reasoning and low-level planning while providing strong robustness against prompt manipulation, achieving top performance on the WOD-E2E benchmark.

The rapid development of Vision-Language Models (VLMs) and Multimodal Large Language Models (MLLMs) in autonomous driving research has significantly reshaped the landscape by enabling richer scene understanding, context-aware reasoning, and more interpretable decision-making. However, much existing work either relies on single-view encoders that fail to exploit the spatial structure of multi-camera systems or operates on aggregated multi-view features that lack a unified spatial representation, making it harder to reason about ego-centric directions, object relations, and the wider context. We thus present BeLLA, an end-to-end architecture that connects unified 360° BEV representations with a large language model for question answering in autonomous driving. We primarily evaluate our work on two benchmarks, NuScenes-QA and DriveLM, where BeLLA consistently outperforms existing approaches on questions that require greater spatial reasoning, such as those involving relative object positioning and behavioral understanding of nearby objects, achieving up to +9.3% absolute improvement in certain tasks. In other categories, BeLLA performs competitively, demonstrating the capability of handling a diverse range of questions.

Traditional sea exploration faces significant challenges due to extreme conditions, limited visibility, and high costs, resulting in vast unexplored ocean regions. This paper presents an innovative AI-powered Autonomous Underwater Vehicle (AUV) system designed to overcome these limitations by automating underwater object detection, analysis, and reporting. The system integrates YOLOv12 Nano for real-time object detection, a Convolutional Neural Network (CNN) (ResNet50) for feature extraction, Principal Component Analysis (PCA) for dimensionality reduction, and K-Means++ clustering for grouping marine objects based on visual characteristics. Furthermore, a Large Language Model (LLM) (GPT-4o Mini) is employed to generate structured reports and summaries of underwater findings, enhancing data interpretation. The system was trained and evaluated on a combined dataset of over 55,000 images from the DeepFish and OzFish datasets, capturing diverse Australian marine environments. Experimental results demonstrate the system's capability to detect marine objects with a mAP@0.5 of 0.512, a precision of 0.535, and a recall of 0.438. The integration of PCA effectively reduced feature dimensionality while preserving 98% of the variance, facilitating K-Means clustering, which successfully grouped detected objects based on visual similarities. The LLM integration proved effective in generating insightful summaries of detections and clusters, supported by location data. This integrated approach significantly reduces the risks associated with human diving, increases mission efficiency, and enhances the speed and depth of underwater data analysis, paving the way for more effective scientific research and discovery in challenging marine environments.
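
Retaining a fixed share of variance with PCA comes down to choosing the smallest number of components whose eigenvalues sum to that share. The sketch below shows only that selection step, with made-up eigenvalues; it is not the paper's pipeline, and the function name and the toy spectrum are assumptions.

```python
def components_for_variance(eigenvalues, target=0.98):
    """Smallest number of principal components whose variance share reaches `target`."""
    ordered = sorted(eigenvalues, reverse=True)
    total = sum(ordered)
    running = 0.0
    for k, value in enumerate(ordered, start=1):
        running += value
        if running / total >= target:
            return k
    return len(ordered)


# Hypothetical covariance eigenvalues for a 6-dimensional feature vector.
toy_eigenvalues = [50.0, 30.0, 15.0, 4.0, 0.5, 0.5]
k = components_for_variance(toy_eigenvalues, target=0.98)
print(k)
```

With this toy spectrum, three components cover 95% of the variance and four cover 99%, so four are kept. On real CNN embeddings the same rule typically discards most dimensions, which makes the subsequent clustering cheaper.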
