SHI Labs @ Georgia TechOregon

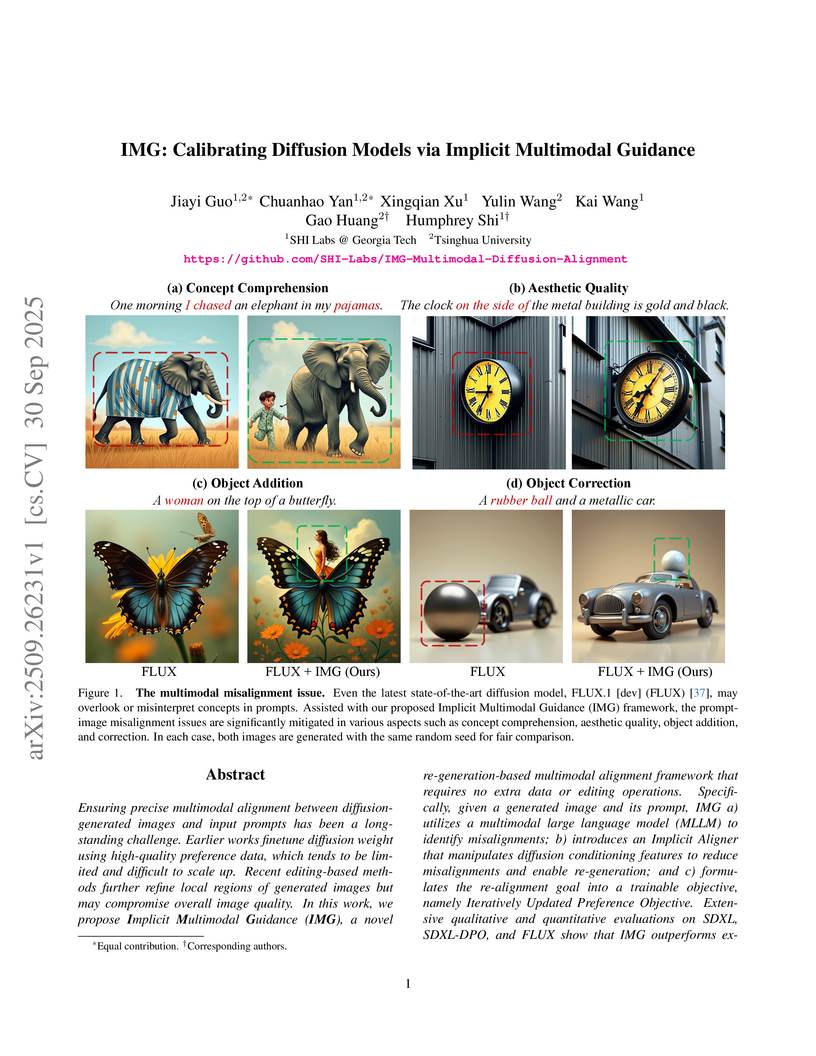

Researchers from SHI Labs at Georgia Tech and Tsinghua University developed Implicit Multimodal Guidance (IMG), a re-generation framework that leverages a finetuned MLLM and an "Implicit Aligner" to refine diffusion model conditioning. The method significantly improves prompt-image alignment in models like SDXL and FLUX, achieving an 84.6% win rate over base SDXL and outperforming editing-based approaches while preserving image quality.

Test-time adaptation (TTA) aims to improve the performance of source-domain

pre-trained models on previously unseen, shifted target domains. Traditional

TTA methods primarily adapt model weights based on target data streams, making

model performance sensitive to the amount and order of target data. The

recently proposed diffusion-driven TTA methods mitigate this by adapting model

inputs instead of weights, where an unconditional diffusion model, trained on

the source domain, transforms target-domain data into a synthetic domain that

is expected to approximate the source domain. However, in this paper, we reveal

that although the synthetic data in diffusion-driven TTA seems

indistinguishable from the source data, it is unaligned with, or even markedly

different from the latter for deep networks. To address this issue, we propose

a \textbf{S}ynthetic-\textbf{D}omain \textbf{A}lignment (SDA) framework. Our

key insight is to fine-tune the source model with synthetic data to ensure

better alignment. Specifically, we first employ a conditional diffusion model

to generate labeled samples, creating a synthetic dataset. Subsequently, we use

the aforementioned unconditional diffusion model to add noise to and denoise

each sample before fine-tuning. This Mix of Diffusion (MoD) process mitigates

the potential domain misalignment between the conditional and unconditional

models. Extensive experiments across classifiers, segmenters, and multimodal

large language models (MLLMs, \eg, LLaVA) demonstrate that SDA achieves

superior domain alignment and consistently outperforms existing

diffusion-driven TTA methods. Our code is available at

this https URL

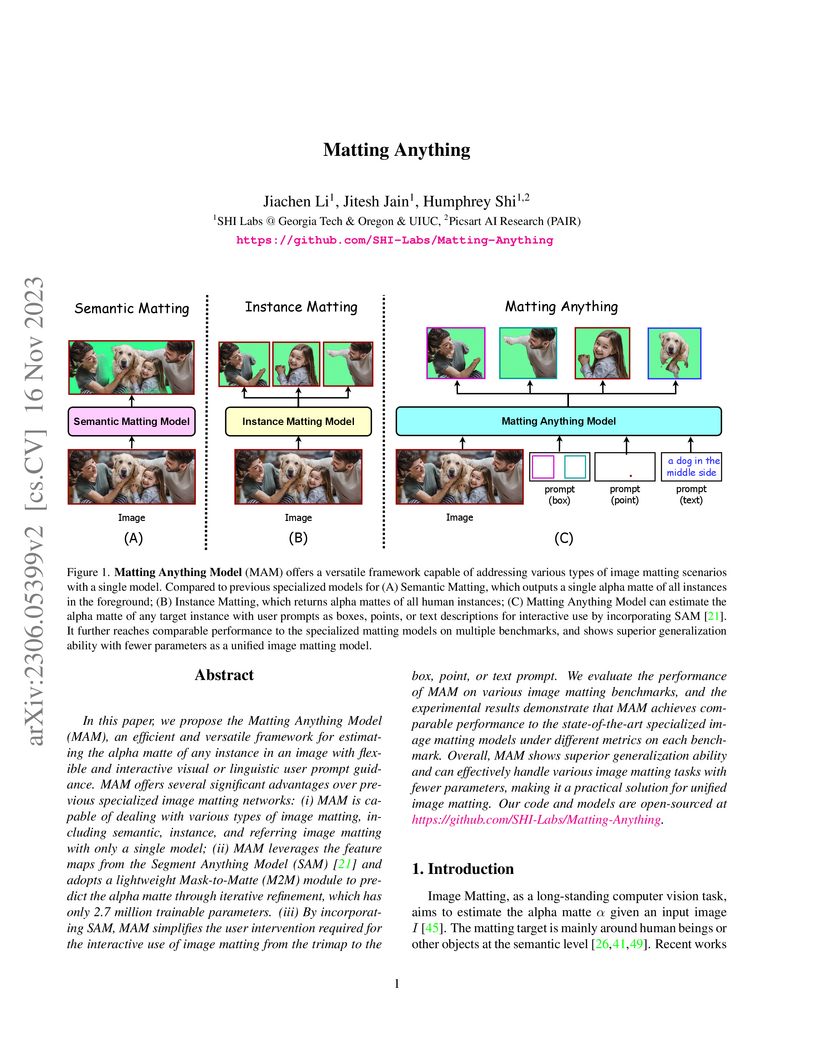

The Matting Anything Model (MAM) offers a unified and versatile solution for image matting by leveraging a frozen Segment Anything Model (SAM) with a lightweight, trainable Mask-to-Matte (M2M) module. This approach enables prompt-based interactive matting across semantic, instance, and referring categories, achieving performance comparable to or exceeding specialized models while maintaining high parameter efficiency.

There are no more papers matching your filters at the moment.