YuanZe University

In response to the threat of adversarial examples, adversarial training

provides an attractive option for enhancing the model robustness by training

models on online-augmented adversarial examples. However, most of the existing

adversarial training methods focus on improving the robust accuracy by

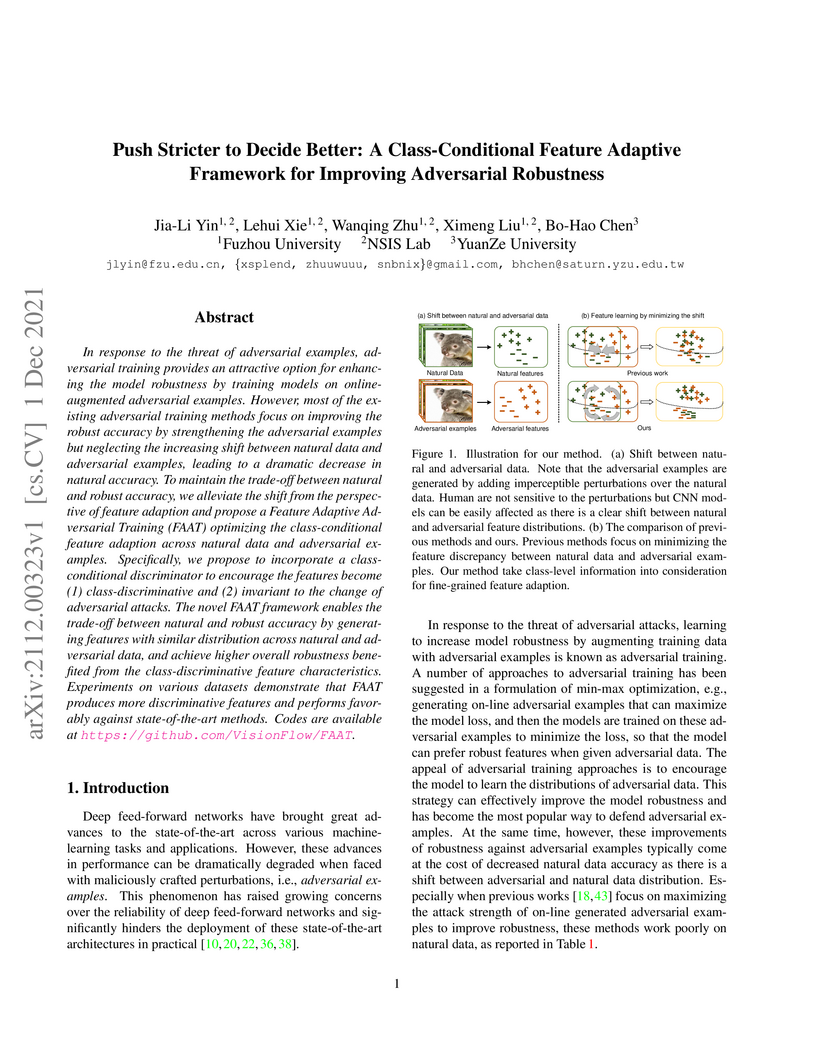

strengthening the adversarial examples but neglecting the increasing shift

between natural data and adversarial examples, leading to a dramatic decrease

in natural accuracy. To maintain the trade-off between natural and robust

accuracy, we alleviate the shift from the perspective of feature adaption and

propose a Feature Adaptive Adversarial Training (FAAT) optimizing the

class-conditional feature adaption across natural data and adversarial

examples. Specifically, we propose to incorporate a class-conditional

discriminator to encourage the features become (1) class-discriminative and (2)

invariant to the change of adversarial attacks. The novel FAAT framework

enables the trade-off between natural and robust accuracy by generating

features with similar distribution across natural and adversarial data, and

achieve higher overall robustness benefited from the class-discriminative

feature characteristics. Experiments on various datasets demonstrate that FAAT

produces more discriminative features and performs favorably against

state-of-the-art methods. Codes are available at

this https URL
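The phrase "training models on online-augmented adversarial examples" can be made concrete with a small sketch. It is not the FAAT method and does not include the class-conditional discriminator. It assumes a linear logistic classifier and swaps in a one-step sign-gradient (FGSM-style) attack, and all function names and parameters (`fgsm_example`, `adversarial_training_step`, `eps`, `lr`) are hypothetical choices made for illustration.

```python
import math


def sign(v):
    """Return -1, 0, or +1 according to the sign of v."""
    return (v > 0) - (v < 0)


def logistic_loss(w, b, x, y):
    """Loss log(1 + exp(-y * (w.x + b))) for a label y in {-1, +1}."""
    margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
    return math.log1p(math.exp(-margin))


def fgsm_example(w, b, x, y, eps):
    """One-step L-infinity attack on a linear classifier (illustrative only).

    d(loss)/dx = -y * sigmoid(-margin) * w, so its sign is -y * sign(w),
    and x_adv = x + eps * sign(d(loss)/dx). The bias b does not affect the
    input gradient's sign; it is kept only for a uniform signature.
    """
    return [xi - eps * y * sign(wi) for xi, wi in zip(x, w)]


def adversarial_training_step(w, b, x, y, eps, lr):
    """Craft an adversarial example online, then take one SGD step on it."""
    x_adv = fgsm_example(w, b, x, y, eps)
    margin = y * (sum(wi * xi for wi, xi in zip(w, x_adv)) + b)
    coeff = -y / (1.0 + math.exp(margin))  # d(loss)/d(logit)
    w_new = [wi - lr * coeff * xi for wi, xi in zip(w, x_adv)]
    b_new = b - lr * coeff
    return w_new, b_new, x_adv


# Toy values, chosen only to exercise the functions.
w, b = [1.0, -2.0], 0.0
x, y = [0.5, -0.5], 1
w_new, b_new, x_adv = adversarial_training_step(w, b, x, y, eps=0.1, lr=0.1)
```

In this toy setting the perturbed input has a higher loss than the natural one, and the update lowers the loss on the perturbed input. The shift between natural and adversarial features that the abstract addresses does not arise in a model this small, so the sketch shows only the baseline that FAAT builds on.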

01 Jan 2025

Studies have indicated that personality is related to achievement, and several personality assessment models have been developed. However, most are either questionnaires or based on marker systems, both of which have limitations. We proposed a model based on physiological signals, thereby ensuring the objectivity of the data and preventing unreliable responses. Thirty participants were recruited from the Department of Electrical Engineering of Yuan Ze University in Taiwan. Wearable sensors were used to collect physiological signals as the participants watched and summarized a video. They then completed a personality questionnaire based on the Big Five factor markers system. The results were used to construct a personality prediction model, which revealed that galvanic skin response and heart rate variance were key factors predicting extroversion; heart rate variance also predicted agreeableness and conscientiousness. The results of this experiment can elucidate students' personality traits, which can help educators select appropriate pedagogical methods.
