Ariel AI

In this work we introduce Lifting Autoencoders, a generative 3D surface-based

model of object categories. We bring together ideas from non-rigid structure

from motion, image formation, and morphable models to learn a controllable,

geometric model of 3D categories in an entirely unsupervised manner from an

unstructured set of images. We exploit the 3D geometric nature of our model and

use normal information to disentangle appearance into illumination, shading and

albedo. We further use weak supervision to disentangle the non-rigid shape

variability of human faces into identity and expression. We combine the 3D

representation with a differentiable renderer to generate RGB images and append

an adversarially trained refinement network to obtain sharp, photorealistic

image reconstruction results. The learned generative model can be controlled in

terms of interpretable geometry and appearance factors, allowing us to perform

photorealistic image manipulation of identity, expression, 3D pose, and

illumination properties.

Monocular 3D reconstruction of deformable objects, such as human body parts, has been typically approached by predicting parameters of heavyweight linear models. In this paper, we demonstrate an alternative solution that is based on the idea of encoding images into a latent non-linear representation of meshes. The prior on 3D hand shapes is learned by training an autoencoder with intrinsic graph convolutions performed in the spectral domain. The pre-trained decoder acts as a non-linear statistical deformable model. The latent parameters that reconstruct the shape and articulated pose of hands in the image are predicted using an image encoder. We show that our system reconstructs plausible meshes and operates in real-time. We evaluate the quality of the mesh reconstructions produced by the decoder on a new dataset and show latent space interpolation results. Our code, data, and models will be made publicly available.

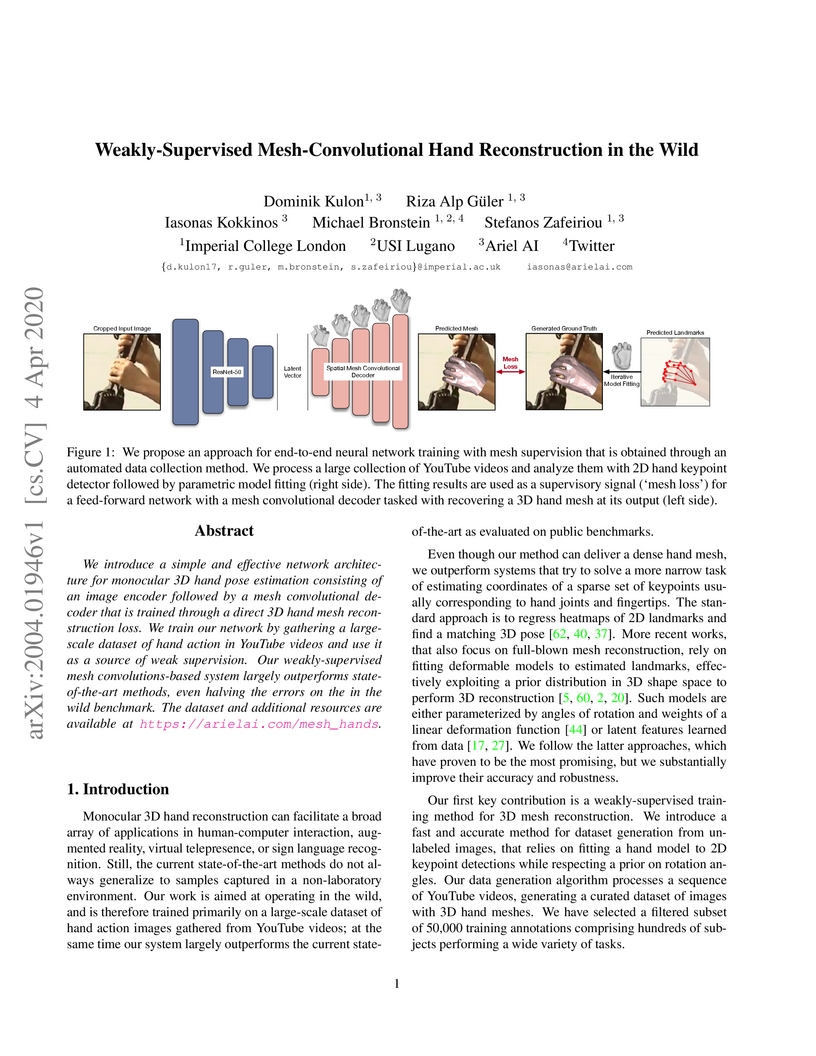

We introduce a simple and effective network architecture for monocular 3D

hand pose estimation consisting of an image encoder followed by a mesh

convolutional decoder that is trained through a direct 3D hand mesh

reconstruction loss. We train our network by gathering a large-scale dataset of

hand action in YouTube videos and use it as a source of weak supervision. Our

weakly-supervised mesh convolutions-based system largely outperforms

state-of-the-art methods, even halving the errors on the in the wild benchmark.

The dataset and additional resources are available at

this https URL

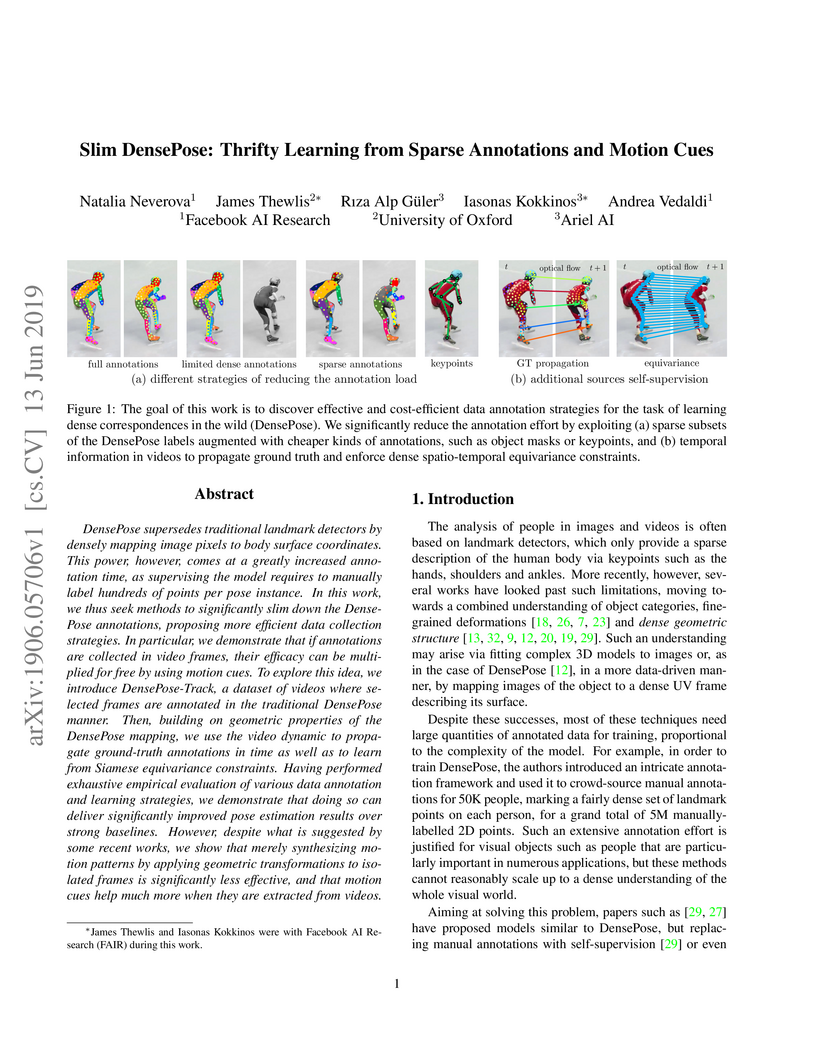

DensePose supersedes traditional landmark detectors by densely mapping image pixels to body surface coordinates. This power, however, comes at a greatly increased annotation time, as supervising the model requires to manually label hundreds of points per pose instance. In this work, we thus seek methods to significantly slim down the DensePose annotations, proposing more efficient data collection strategies. In particular, we demonstrate that if annotations are collected in video frames, their efficacy can be multiplied for free by using motion cues. To explore this idea, we introduce DensePose-Track, a dataset of videos where selected frames are annotated in the traditional DensePose manner. Then, building on geometric properties of the DensePose mapping, we use the video dynamic to propagate ground-truth annotations in time as well as to learn from Siamese equivariance constraints. Having performed exhaustive empirical evaluation of various data annotation and learning strategies, we demonstrate that doing so can deliver significantly improved pose estimation results over strong baselines. However, despite what is suggested by some recent works, we show that merely synthesizing motion patterns by applying geometric transformations to isolated frames is significantly less effective, and that motion cues help much more when they are extracted from videos.

There are no more papers matching your filters at the moment.