Astribot Inc.

19 Jun 2025

ControlVLA introduces a framework that lets robots acquire new manipulation skills from only a few demonstrations by integrating pre-trained Vision-Language-Action (VLA) models with object-centric representations. It uses a ControlNet-style fine-tuning approach with zero-initialized layers, achieving a 76.7% success rate across diverse short-horizon tasks with 10-20 demonstrations, and performs robustly on long-horizon tasks and on tasks that require generalization.
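The zero-initialization idea can be sketched in a few lines. This is a minimal NumPy illustration under stated assumptions, not ControlVLA's architecture: a frozen linear layer `w` stands in for part of a pretrained VLA policy, and a condition vector `c` stands in for object-centric features fed through a new projection `z`. The class name `ZeroInitInjection` and all shapes are hypothetical. Because `z` starts at zero, the output initially equals the pretrained layer's output, yet the gradient with respect to `z` is nonzero, so the new branch can still be trained.

```python
import numpy as np


class ZeroInitInjection:
    """Hypothetical sketch of ControlNet-style zero-initialized conditioning.

    A frozen linear layer (weights `w`) is augmented with a trainable
    projection `z` of an extra condition vector `c`. Since `z` starts at
    zero, the layer initially behaves exactly like the pretrained one.
    """

    def __init__(self, w: np.ndarray, cond_dim: int) -> None:
        self.w = w                                 # frozen, shape (out, in)
        self.z = np.zeros((w.shape[0], cond_dim))  # trainable, zero-initialized

    def forward(self, x: np.ndarray, c: np.ndarray) -> np.ndarray:
        return self.w @ x + self.z @ c

    def step(self, x: np.ndarray, c: np.ndarray, target: np.ndarray, lr: float = 0.1) -> None:
        # One gradient-descent step on z for L = 0.5 * ||y - target||^2.
        # dL/dz = (y - target) c^T is nonzero even when z == 0, so the new
        # branch can learn; the frozen weights w are never updated.
        grad_out = self.forward(x, c) - target
        self.z -= lr * np.outer(grad_out, c)


w = np.arange(12.0).reshape(3, 4) / 10.0  # stand-in for frozen pretrained weights
x = np.ones(4)                            # stand-in for the layer input
c = np.array([1.0, -2.0])                 # stand-in for object-centric features
layer = ZeroInitInjection(w, cond_dim=2)

y_init = layer.forward(x, c)
print(np.allclose(y_init, w @ x))  # True: output matches the pretrained layer at init

layer.step(x, c, target=y_init + 1.0, lr=0.1)
print(np.allclose(layer.forward(x, c), w @ x + 0.5))  # True: the condition now shifts the output
```

The design choice is that fine-tuning starts from the pretrained behaviour instead of from a randomly perturbed one, which matters when only a handful of demonstrations are available.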

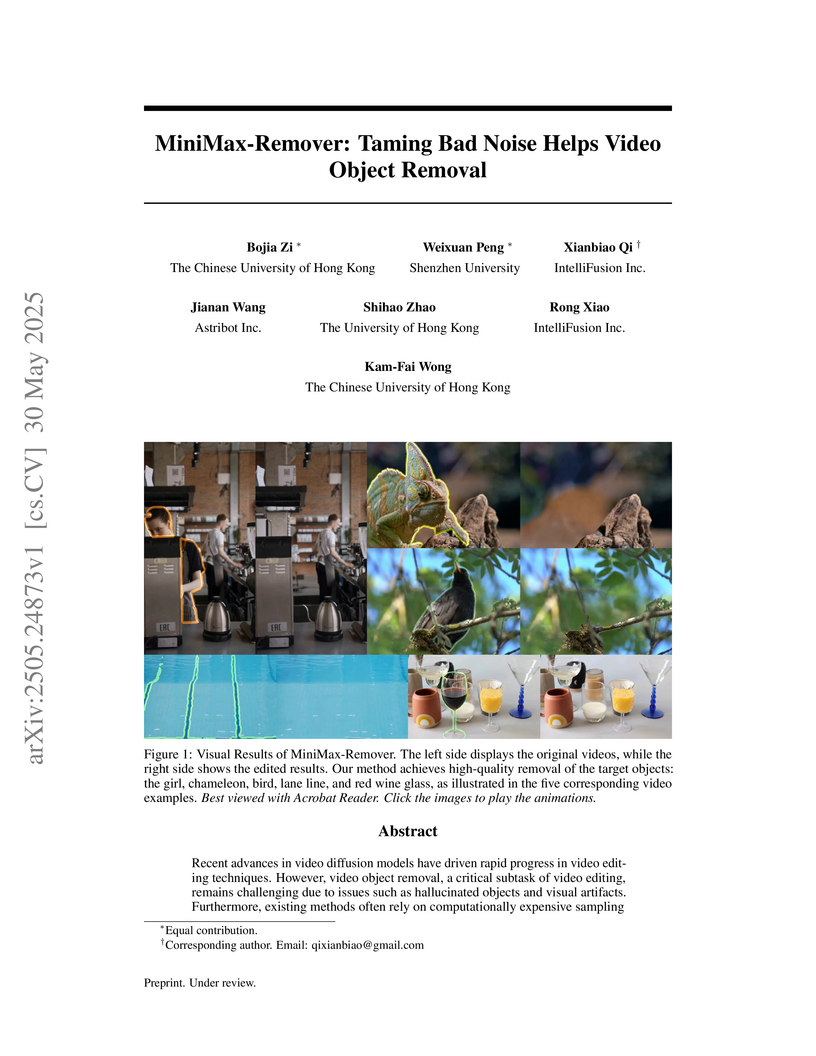

MiniMax-Remover introduces a two-stage diffusion-based framework for video object removal that achieves state-of-the-art quality with high efficiency, reducing the number of inference steps to 6 and eliminating classifier-free guidance (CFG). It reaches a latency of 0.18 seconds for a 33-frame 360p video while significantly improving success rates and user preference over prior approaches.
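Why dropping classifier-free guidance helps speed can be seen from the standard CFG formula, which combines a conditional and an unconditional prediction at every denoising step, so the denoiser is evaluated twice per step (or once on a doubled batch). The sketch below uses hypothetical helper names (`cfg_combine`, `denoiser_calls`) and plain Python lists in place of tensors; only the 6-step count comes from the summary above, and the with-CFG comparison is illustrative rather than a description of MiniMax-Remover's sampler.

```python
def cfg_combine(eps_uncond: list[float], eps_cond: list[float], scale: float) -> list[float]:
    """Standard classifier-free guidance: eps_u + s * (eps_c - eps_u)."""
    return [u + scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]


def denoiser_calls(num_steps: int, use_cfg: bool) -> int:
    """Denoiser predictions needed for sampling; CFG needs a conditional
    and an unconditional prediction at every step."""
    return num_steps * (2 if use_cfg else 1)


print(cfg_combine([0.0, 1.0], [1.0, 1.0], scale=3.0))  # [3.0, 1.0]
print(denoiser_calls(6, use_cfg=True))   # 12
print(denoiser_calls(6, use_cfg=False))  # 6
```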

Recent advances in diffusion models have brought remarkable progress in image and video editing, yet some tasks remain underexplored. In this paper, we introduce a new task, Object Retexture, which transfers local textures from a reference object to a target object in images or videos. A straightforward solution is to use ControlNet conditioned on the source structure and the reference texture. However, this approach offers limited controllability for two reasons: conditioning on the raw reference image introduces unwanted structural information, and it fails to disentangle the visual texture and structure information of the source. To address this problem, we propose Refaçade, a method with two key designs for precise and controllable texture transfer in both images and videos. First, we employ a texture remover trained on paired textured/untextured 3D mesh renderings to remove appearance information while preserving the geometry and motion of source videos. Second, we disrupt the reference's global layout using a jigsaw permutation, encouraging the model to focus on local texture statistics rather than the global layout of the object. Extensive experiments demonstrate superior visual quality, precise editing, and controllability, with Refaçade outperforming strong baselines in both quantitative and human evaluations. Code is available at this https URL.
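A jigsaw permutation of the reference can be sketched as follows: cut the image into a grid of equal tiles, shuffle them, and reassemble. Pixels keep their local arrangement within each tile, while the object's global layout is scrambled. This is a minimal NumPy sketch under assumptions: the function name `jigsaw_permute`, the square `grid` of equal-sized tiles, and the uniform random shuffle are illustrative choices, not Refaçade's documented procedure.

```python
import numpy as np


def jigsaw_permute(image: np.ndarray, grid: int, rng: np.random.Generator) -> np.ndarray:
    """Hypothetical sketch: shuffle a grid x grid tiling of an (H, W, C) image.

    Each tile keeps its internal pixel arrangement (local texture), while
    the positions of the tiles (global layout) are randomly permuted.
    """
    h, w, ch = image.shape
    if h % grid or w % grid:
        raise ValueError("image height and width must be divisible by grid")
    th, tw = h // grid, w // grid
    # (H, W, C) -> (grid, th, grid, tw, C) -> (grid*grid, th, tw, C): one entry per tile
    tiles = (image.reshape(grid, th, grid, tw, ch)
                  .transpose(0, 2, 1, 3, 4)
                  .reshape(grid * grid, th, tw, ch))
    tiles = tiles[rng.permutation(grid * grid)]
    # Invert the reshaping above to lay the shuffled tiles back onto the grid.
    return (tiles.reshape(grid, grid, th, tw, ch)
                 .transpose(0, 2, 1, 3, 4)
                 .reshape(h, w, ch))


rng = np.random.default_rng(0)
img = np.arange(16).reshape(4, 4, 1)
out = jigsaw_permute(img, grid=2, rng=rng)
print(sorted(out.ravel().tolist()) == sorted(img.ravel().tolist()))  # True: same pixel values
```

Smaller tiles scramble more of the layout but also cut texture patterns into shorter fragments, so the grid size trades layout suppression against how much local texture each tile retains.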
