Dzine AI

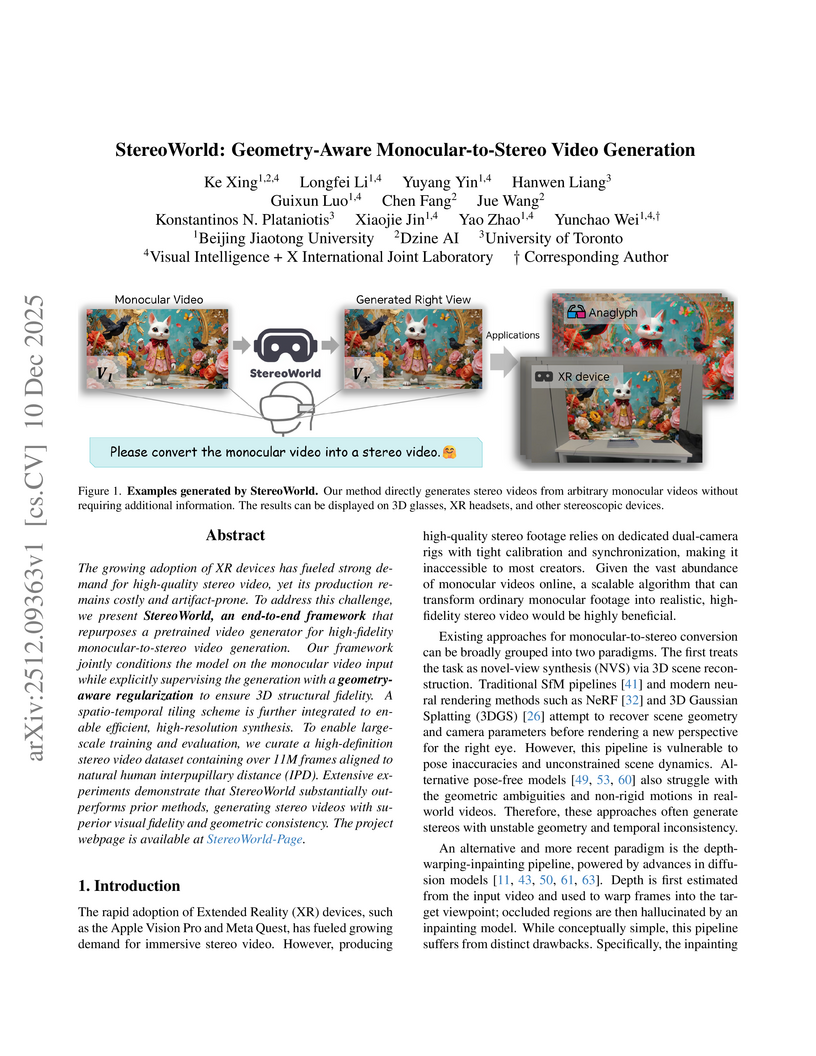

The growing adoption of XR devices has fueled strong demand for high-quality stereo video, yet its production remains costly and artifact-prone. To address this challenge, we present StereoWorld, an end-to-end framework that repurposes a pretrained video generator for high-fidelity monocular-to-stereo video generation. Our framework jointly conditions the model on the monocular video input while explicitly supervising the generation with a geometry-aware regularization to ensure 3D structural fidelity. A spatio-temporal tiling scheme is further integrated to enable efficient, high-resolution synthesis. To enable large-scale training and evaluation, we curate a high-definition stereo video dataset containing over 11M frames aligned to natural human interpupillary distance (IPD). Extensive experiments demonstrate that StereoWorld substantially outperforms prior methods, generating stereo videos with superior visual fidelity and geometric consistency. A project webpage is available.

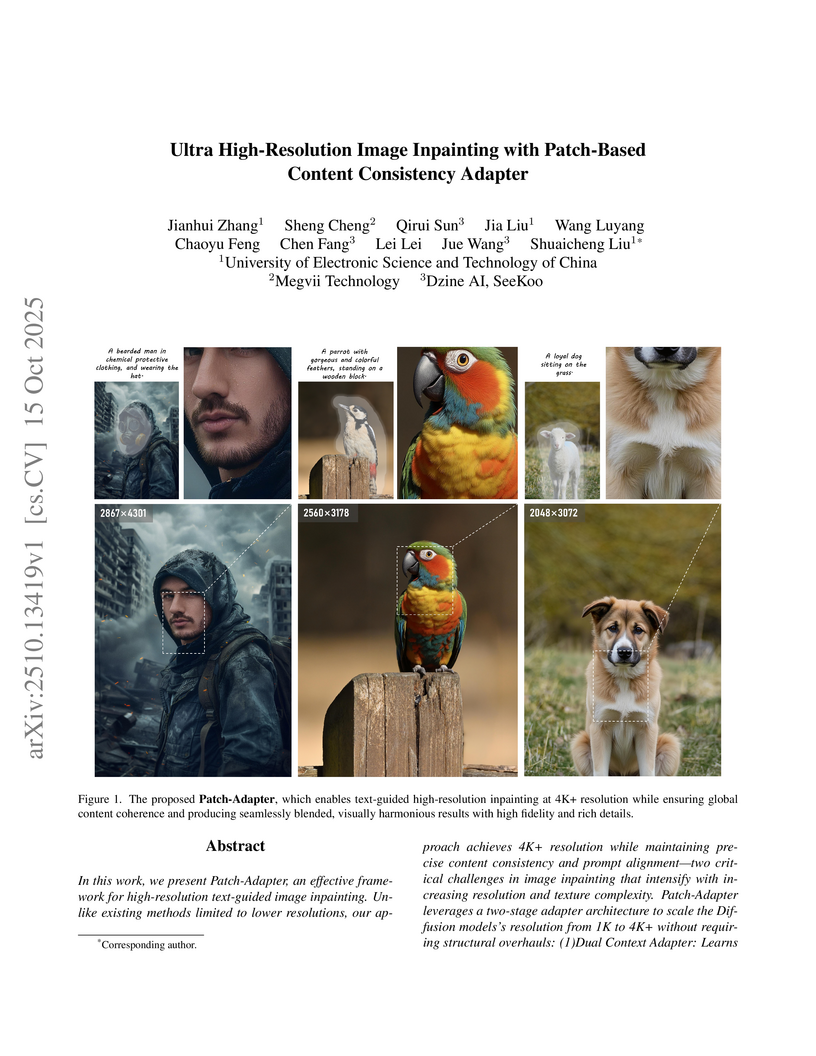

Researchers from UESTC, Megvii Technology, and Dzine AI/SeeKoo introduced a two-stage adapter framework to enable ultra-high-resolution (4K+) text-guided image inpainting. Their Patch-Adapter model achieved an FID of 14.594 and an Aesthetic Score of 6.021 on the photo-concept-bucket dataset, significantly improving perceptual quality and semantic alignment over prior methods.
