SeeKoo

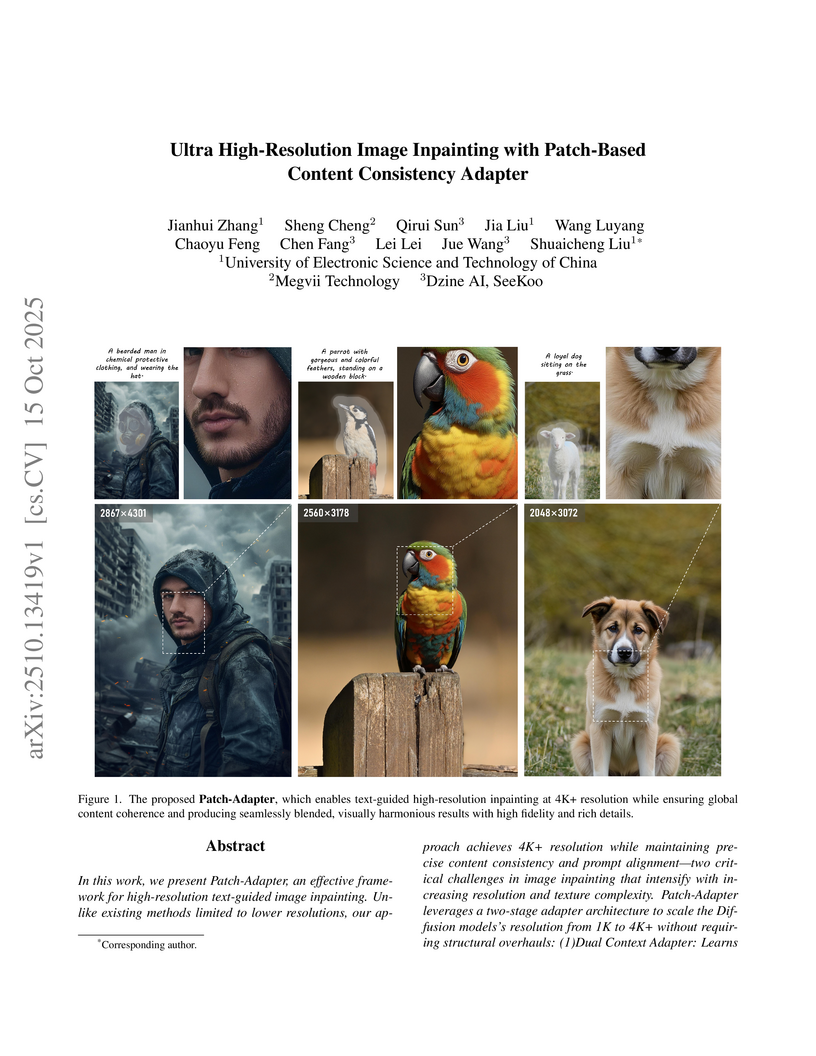

Ultra High-Resolution Image Inpainting with Patch-Based Content Consistency Adapter

Researchers from UESTC, Megvii Technology, and Dzine AI/SeeKoo introduced a two-stage adapter framework to enable ultra high-resolution (4K+) text-guided image inpainting. Their Patch-Adapter model achieved an FID of 14.594 and an Aesthetic Score of 6.021 on the photo-concept-bucket dataset, significantly improving perceptual quality and semantic alignment over prior methods.

Perception-Oriented Video Frame Interpolation via Asymmetric Blending

The paper introduces PerVFI, a perception-oriented video frame interpolation (VFI) method, which employs asymmetric synergistic blending and a conditional normalizing flow-based generator. It significantly reduces blur and ghosting artifacts, achieving superior perceptual quality validated by quantitative metrics like LPIPS and VFIPS, and confirmed by user studies.

Ada-Adapter: Fast Few-shot Style Personalization of Diffusion Model with Pre-trained Image Encoder

Fine-tuning advanced diffusion models for high-quality image stylization usually requires large training datasets and substantial computational resources, hindering their practical applicability. We propose Ada-Adapter, a novel framework for few-shot style personalization of diffusion models. Ada-Adapter leverages off-the-shelf diffusion models and pre-trained image feature encoders to learn a compact style representation from a limited set of source images. Our method enables efficient zero-shot style transfer utilizing a single reference image. Furthermore, with a small number of source images (three to five are sufficient) and a few minutes of fine-tuning, our method can capture intricate style details and conceptual characteristics, generating high-fidelity stylized images that align well with the provided text prompts. We demonstrate the effectiveness of our approach on various artistic styles, including flat art, 3D rendering, and logo design. Our experimental results show that Ada-Adapter outperforms existing zero-shot and few-shot stylization methods in terms of output quality, diversity, and training efficiency.
