European Systems Integration

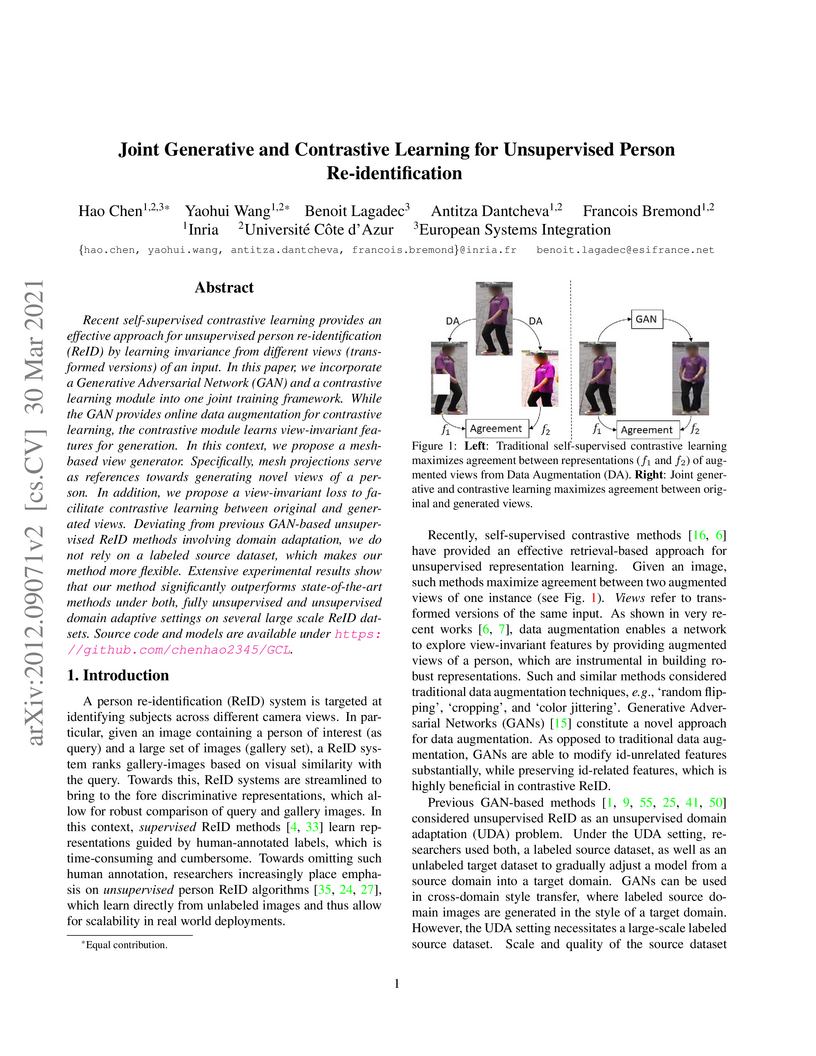

Recent self-supervised contrastive learning provides an effective approach

for unsupervised person re-identification (ReID) by learning invariance from

different views (transformed versions) of an input. In this paper, we

incorporate a Generative Adversarial Network (GAN) and a contrastive learning

module into one joint training framework. While the GAN provides online data

augmentation for contrastive learning, the contrastive module learns

view-invariant features for generation. In this context, we propose a

mesh-based view generator. Specifically, mesh projections serve as references

towards generating novel views of a person. In addition, we propose a

view-invariant loss to facilitate contrastive learning between original and

generated views. Deviating from previous GAN-based unsupervised ReID methods

involving domain adaptation, we do not rely on a labeled source dataset, which

makes our method more flexible. Extensive experimental results show that our

method significantly outperforms state-of-the-art methods under both, fully

unsupervised and unsupervised domain adaptive settings on several large scale

ReID datsets.

Unsupervised person re-identification (ReID) aims at learning discriminative

identity features without annotations. Recently, self-supervised contrastive

learning has gained increasing attention for its effectiveness in unsupervised

representation learning. The main idea of instance contrastive learning is to

match a same instance in different augmented views. However, the relationship

between different instances has not been fully explored in previous contrastive

methods, especially for instance-level contrastive loss. To address this issue,

we propose Inter-instance Contrastive Encoding (ICE) that leverages

inter-instance pairwise similarity scores to boost previous class-level

contrastive ReID methods. We first use pairwise similarity ranking as one-hot

hard pseudo labels for hard instance contrast, which aims at reducing

intra-class variance. Then, we use similarity scores as soft pseudo labels to

enhance the consistency between augmented and original views, which makes our

model more robust to augmentation perturbations. Experiments on several

large-scale person ReID datasets validate the effectiveness of our proposed

unsupervised method ICE, which is competitive with even supervised methods.

Code is made available at this https URL

Existing unsupervised person re-identification (ReID) methods focus on adapting a model trained on a source domain to a fixed target domain. However, an adapted ReID model usually only works well on a certain target domain, but can hardly memorize the source domain knowledge and generalize to upcoming unseen data. In this paper, we propose unsupervised lifelong person ReID, which focuses on continuously conducting unsupervised domain adaptation on new domains without forgetting the knowledge learnt from old domains. To tackle unsupervised lifelong ReID, we conduct a contrastive rehearsal on a small number of stored old samples while sequentially adapting to new domains. We further set an image-to-image similarity constraint between old and new models to regularize the model updates in a way that suits old knowledge. We sequentially train our model on several large-scale datasets in an unsupervised manner and test it on all seen domains as well as several unseen domains to validate the generalizability of our method. Our proposed unsupervised lifelong method achieves strong generalizability, which significantly outperforms previous lifelong methods on both seen and unseen domains. Code will be made available at this https URL.

This work focuses on unsupervised representation learning in person re-identification (ReID). Recent self-supervised contrastive learning methods learn invariance by maximizing the representation similarity between two augmented views of a same image. However, traditional data augmentation may bring to the fore undesirable distortions on identity features, which is not always favorable in id-sensitive ReID tasks. In this paper, we propose to replace traditional data augmentation with a generative adversarial network (GAN) that is targeted to generate augmented views for contrastive learning. A 3D mesh guided person image generator is proposed to disentangle a person image into id-related and id-unrelated features. Deviating from previous GAN-based ReID methods that only work in id-unrelated space (pose and camera style), we conduct GAN-based augmentation on both id-unrelated and id-related features. We further propose specific contrastive losses to help our network learn invariance from id-unrelated and id-related augmentations. By jointly training the generative and the contrastive modules, our method achieves new state-of-the-art unsupervised person ReID performance on mainstream large-scale benchmarks.

There are no more papers matching your filters at the moment.