Fastweb

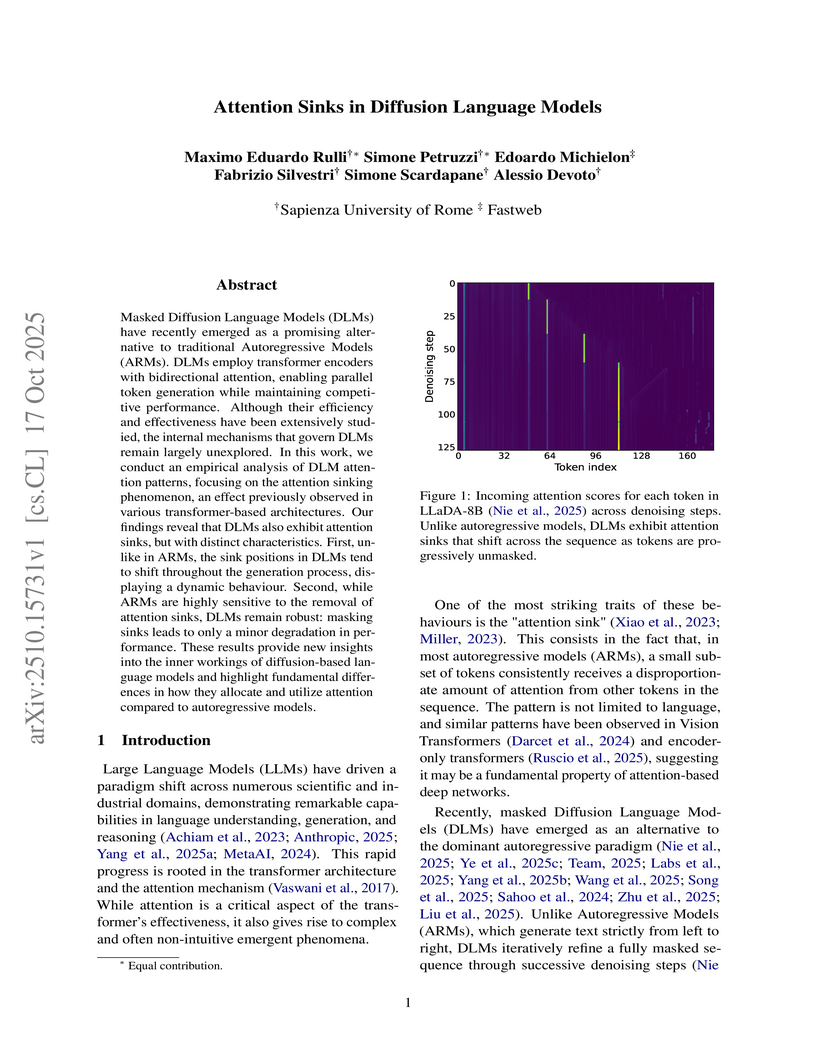

Researchers at Sapienza University of Rome and Fastweb conducted the first empirical analysis of attention sinks in Diffusion Language Models (DLMs), discovering that DLMs exhibit dynamic, shifting sinks and a surprising robustness to their removal, unlike Autoregressive Models (ARMs). This work offers key insights into DLM internal dynamics, showing that their attention mechanisms are more distributed and flexible.

07 Aug 2025

For any $s\in\mathbb{C}$ with $\Re(s)>0$, denote by $\eta_{n-1}(s)$ the $(n-1)$th partial sum of the Dirichlet series for the eta function $\eta(s)=1-2^{-s}+3^{-s}-\cdots$, and by $R_n(s)$ the corresponding remainder. Denoting by $u_n(s)$ the segment starting at $\eta_{n-1}(s)$ and ending at $\eta_n(s)$, we first show how, for sufficiently large $n$ values, the circle of diameter $u_{n+2}(s)$ lies strictly inside the circle of diameter $u_n(s)$, to then derive the asymptotic relationship $R_n(s)\sim(-1)^{n-1}/n^{s}$, as $n\to\infty$. Denoting by $D=\left\{s \in \mathbb{C}: \; 0< \Re(s) < \frac{1}{2}\right\}$ the open left half of the critical strip, define for all $s\in D$ the ratio $\chi_n^{\pm}(s)=\eta_n(1-s)/\eta_n(s)$. We then prove that the limit $L(s)=\lim_{N(s)
