Galaxea AI

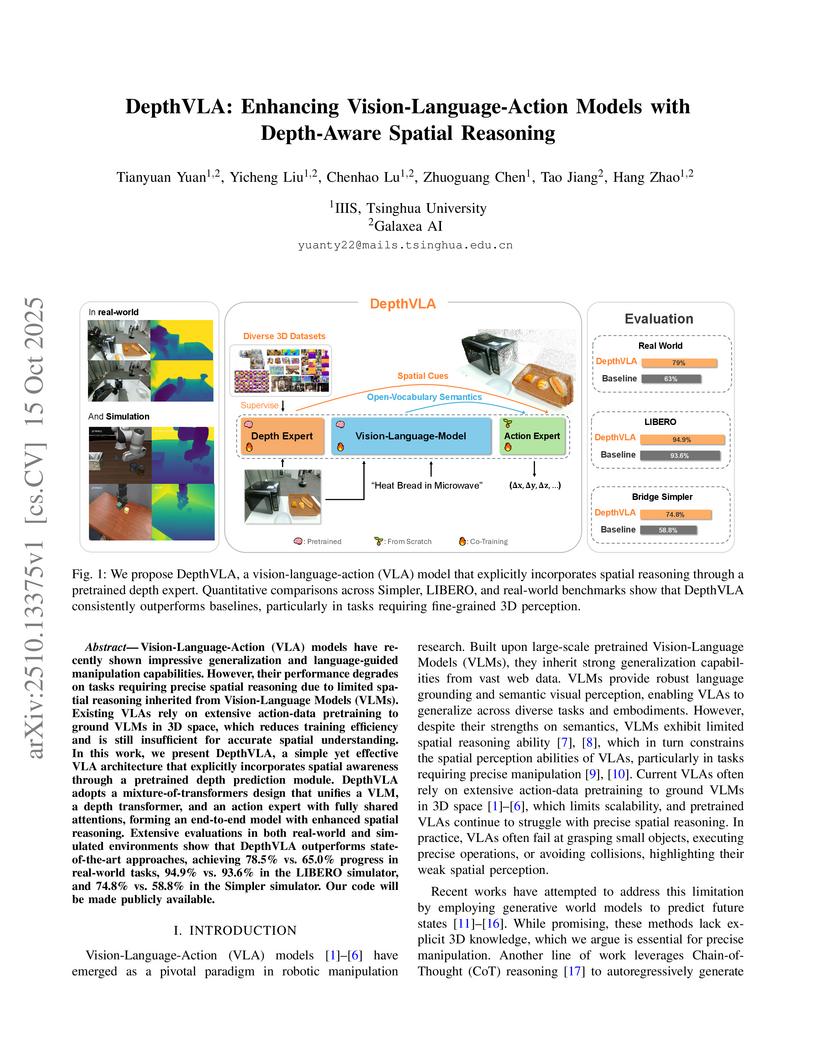

DepthVLA enhances Vision-Language-Action (VLA) models by integrating a pretrained depth prediction module within a mixture-of-transformers architecture, improving spatial reasoning for robotic manipulation tasks. The approach reports improved performance, with a 74.8% success rate on the simulated WidowX benchmark and a 79% average progress score on a real-world robotic platform.

FASTER is a unified framework for robotic manipulation that combines a learnable action tokenizer (FASTerVQ) with an efficient autoregressive policy (FASTerVLA). It reports state-of-the-art performance, with a 97.9% success rate on Libero, and reduces inference latency to 112 ms, enabling efficient real-time control for complex tasks.
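The summary does not describe how FASTerVQ works internally. As a generic illustration of what a vector-quantized action tokenizer does, the sketch below cuts a continuous action trajectory into fixed-size chunks and replaces each chunk with the index of its nearest codebook vector, producing discrete tokens that an autoregressive policy could predict. This is an assumption-laden sketch, not FASTerVQ: the codebook is hand-written here (a learnable tokenizer would train it), and the function names `quantize`, `tokenize_actions` and `detokenize` are hypothetical.

```python
import math
from typing import List, Sequence


def quantize(chunk: Sequence[float], codebook: Sequence[Sequence[float]]) -> int:
    """Return the index of the codebook vector closest (squared L2) to `chunk`."""
    best_idx, best_dist = 0, math.inf
    for idx, code in enumerate(codebook):
        dist = sum((a - c) ** 2 for a, c in zip(chunk, code))
        if dist < best_dist:
            best_idx, best_dist = idx, dist
    return best_idx


def tokenize_actions(actions: Sequence[float],
                     codebook: Sequence[Sequence[float]],
                     chunk_size: int) -> List[int]:
    """Split a flat action trajectory into chunks and map each chunk to a token id.

    Hypothetical scheme: chunk_size must equal the codebook vector dimension.
    """
    if len(actions) % chunk_size:
        raise ValueError("trajectory length must be a multiple of chunk_size")
    return [quantize(actions[i:i + chunk_size], codebook)
            for i in range(0, len(actions), chunk_size)]


def detokenize(tokens: Sequence[int], codebook: Sequence[Sequence[float]]) -> List[float]:
    """Reconstruct an (approximate) action trajectory from token ids."""
    out: List[float] = []
    for t in tokens:
        out.extend(codebook[t])
    return out


if __name__ == "__main__":
    codebook = [[0.0, 0.0], [1.0, 1.0], [-1.0, 0.5]]  # toy 2-D codebook
    tokens = tokenize_actions([0.1, -0.1, 0.9, 1.2, -0.8, 0.4], codebook, chunk_size=2)
    print(tokens)                          # [0, 1, 2]
    print(detokenize(tokens, codebook))    # [0.0, 0.0, 1.0, 1.0, -1.0, 0.5]
```

The round trip is lossy by design: decoding returns the codebook vectors, not the original actions, which is the trade-off any discrete action tokenizer makes in exchange for a compact vocabulary.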

Recent success in legged robot locomotion is attributed to the integration of reinforcement learning and physical simulators. However, these policies often encounter challenges when deployed in real-world environments due to sim-to-real gaps, as simulators typically fail to replicate visual realism and complex real-world geometry. Moreover, the lack of realistic visual rendering limits the ability of these policies to support high-level tasks requiring RGB-based perception, such as ego-centric navigation. This paper presents a Real-to-Sim-to-Real framework that generates photorealistic and physically interactive "digital twin" simulation environments for visual navigation and locomotion learning. Our approach leverages 3D Gaussian Splatting (3DGS)-based scene reconstruction from multi-view images and integrates these environments into simulations that support ego-centric visual perception and mesh-based physical interactions. To demonstrate its effectiveness, we train a reinforcement learning policy within the simulator to perform a visual goal-tracking task. Extensive experiments show that our framework achieves RGB-only sim-to-real policy transfer. Additionally, our framework facilitates the rapid adaptation of robot policies with effective exploration capability in complex new environments, highlighting its potential for applications in households and factories.
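The abstract does not detail the renderer. For readers unfamiliar with 3DGS, the color of a pixel is obtained by alpha-blending the depth-sorted Gaussians that overlap it, front to back: C = Σ_i c_i α_i Π_{j<i} (1 − α_j). The minimal per-pixel sketch below assumes projection, depth sorting, and each Gaussian's opacity falloff at that pixel have already been computed; the function name `composite_pixel` is hypothetical and this is not the paper's implementation.

```python
from typing import Sequence, Tuple

RGB = Tuple[float, float, float]


def composite_pixel(splats: Sequence[Tuple[float, RGB]]) -> RGB:
    """Front-to-back alpha compositing of depth-sorted splats covering one pixel.

    `splats` holds (alpha, rgb) pairs ordered nearest first; alpha is assumed to
    already include the Gaussian's opacity and its 2D falloff at this pixel.
    """
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light not yet absorbed by nearer splats
    for alpha, rgb in splats:
        weight = alpha * transmittance  # c_i * alpha_i * prod_{j<i}(1 - alpha_j)
        for k in range(3):
            color[k] += weight * rgb[k]
        transmittance *= 1.0 - alpha
    return (color[0], color[1], color[2])


if __name__ == "__main__":
    # A half-transparent red splat in front of a half-transparent blue one.
    print(composite_pixel([(0.5, (1.0, 0.0, 0.0)), (0.5, (0.0, 0.0, 1.0))]))
    # (0.5, 0.0, 0.25)
```

Because the blend is differentiable in each Gaussian's color and opacity, the same formula lets reconstruction fit the splats to the multi-view input images.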
