imitation-learning

Researchers from Columbia University and NYU introduced Online World Modeling (OWM) and Adversarial World Modeling (AWM) to mitigate the train-test gap in world models for gradient-based planning (GBP). These methods enabled GBP to achieve performance comparable to or better than search-based planning algorithms like CEM, while simultaneously reducing computation time by an order of magnitude across various robotic tasks.
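As a rough illustration of what gradient-based planning means here, the sketch below optimizes an action sequence by descending the gradient of a goal cost through a model's rollout. The additive dynamics, the cost, and the names `rollout` and `plan_by_gradient` are assumptions chosen so the gradient can be written by hand; they stand in for a learned world model and are not the OWM or AWM training procedures.

```python
import numpy as np

def rollout(x0, actions):
    """Toy differentiable model (an assumption): x_{t+1} = x_t + a_t."""
    return x0 + actions.sum(axis=0)

def plan_by_gradient(x0, goal, horizon=5, steps=50, lr=0.05):
    """Minimize ||x_T - goal||^2 over the action sequence by gradient descent."""
    actions = np.zeros((horizon, x0.shape[0]))
    for _ in range(steps):
        error = rollout(x0, actions) - goal
        # Under the additive dynamics, d/da_t ||x_T - goal||^2 = 2 * error for every t.
        grad = np.tile(2.0 * error, (horizon, 1))
        actions -= lr * grad
    return actions

x0 = np.zeros(2)
goal = np.array([1.0, -2.0])
plan = plan_by_gradient(x0, goal)
final_state = rollout(x0, plan)
```

A sampling-based planner such as CEM would instead draw many candidate action sequences and keep the best ones, which is where the difference in computation time comes from.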

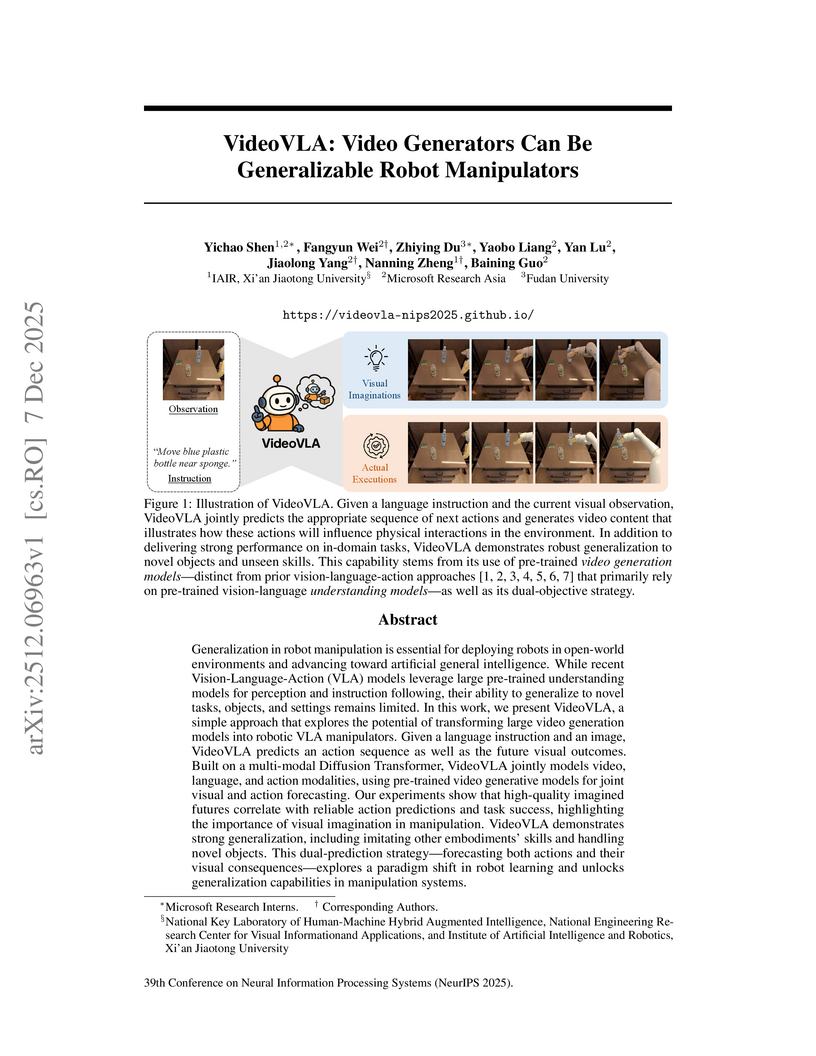

Researchers from Microsoft Research Asia, Xi'an Jiaotong University, and Fudan University developed VideoVLA, a robot manipulator that repurposes large pre-trained video generation models. This system jointly predicts future video states and corresponding actions, achieving enhanced generalization capabilities for novel objects and skills in both simulated and real-world environments.

The H2R-Grounder framework enables the translation of human interaction videos into physically grounded robot manipulation videos without requiring paired human-robot demonstration data. Researchers at the National University of Singapore's Show Lab developed this approach, which utilizes a simple 2D pose representation and fine-tunes a video diffusion model on unpaired robot videos, achieving higher human preference for motion consistency (54.5%), physical plausibility (63.6%), and visual quality (61.4%) compared to baseline methods.

We present Masked Generative Policy (MGP), a novel framework for visuomotor imitation learning. We represent actions as discrete tokens, and train a conditional masked transformer that generates tokens in parallel and then rapidly refines only low-confidence tokens. We further propose two new sampling paradigms: MGP-Short, which performs parallel masked generation with score-based refinement for Markovian tasks, and MGP-Long, which predicts full trajectories in a single pass and dynamically refines low-confidence action tokens based on new observations. With globally coherent prediction and robust adaptive execution capabilities, MGP-Long enables reliable control on complex and non-Markovian tasks that prior methods struggle with. Extensive evaluations on 150 robotic manipulation tasks spanning the Meta-World and LIBERO benchmarks show that MGP achieves both rapid inference and superior success rates compared to state-of-the-art diffusion and autoregressive policies. Specifically, MGP increases the average success rate by 9% across 150 tasks while cutting per-sequence inference time by up to 35x. It further improves the average success rate by 60% in dynamic and missing-observation environments, and solves two non-Markovian scenarios where other state-of-the-art methods fail.
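The parallel-generate-then-refine idea can be sketched as follows: predict every action token in one pass, then re-mask only the lowest-confidence positions and re-predict them. This is a minimal sketch under stated assumptions, not the MGP implementation: `toy_predict_logits` is a hypothetical stand-in for the conditional masked transformer, and `MASK_ID`, `refine_rounds`, and `refine_frac` are illustrative names and values.

```python
import numpy as np

MASK_ID = -1  # hypothetical id marking a masked action token

def softmax(logits):
    shifted = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)

def toy_predict_logits(tokens, vocab_size=8):
    """Stand-in for the masked transformer: returns per-position logits."""
    rng = np.random.default_rng(int((tokens != MASK_ID).sum()))
    return rng.normal(size=(len(tokens), vocab_size))

def masked_generate(predict_logits, seq_len, refine_rounds=2, refine_frac=0.25):
    # Pass 1: start fully masked and predict all tokens in parallel.
    tokens = np.full(seq_len, MASK_ID, dtype=np.int64)
    probs = softmax(predict_logits(tokens))
    tokens = probs.argmax(axis=-1)
    conf = probs.max(axis=-1)
    k = max(1, int(refine_frac * seq_len))
    for _ in range(refine_rounds):
        # Re-mask only the k least confident positions and re-predict them.
        low = np.argsort(conf)[:k]
        masked = tokens.copy()
        masked[low] = MASK_ID
        probs = softmax(predict_logits(masked))
        tokens[low] = probs[low].argmax(axis=-1)
        conf[low] = probs[low].max(axis=-1)
    return tokens, conf

tokens, conf = masked_generate(toy_predict_logits, seq_len=16)
```

Because each round touches only a fraction of the sequence, the number of model calls stays small compared with token-by-token autoregressive decoding.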

RETAIN, developed at UC Berkeley, introduces a parameter merging strategy for generalist robot policies, interpolating pre-trained and finetuned weights to enable robust adaptation to new tasks. This approach enhances out-of-distribution generalization by approximately 40% on real-world robotic tasks while preserving the policy's existing broad capabilities in low-data scenarios.
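Interpolating between two sets of weights can be sketched in a few lines. The example below assumes plain linear interpolation with a single coefficient `alpha` applied to every parameter; the summary does not state RETAIN's exact merging rule, so the function name `interpolate_weights` and the uniform coefficient are illustrative assumptions.

```python
import numpy as np

def interpolate_weights(pretrained, finetuned, alpha):
    """Return (1 - alpha) * pretrained + alpha * finetuned for each parameter."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    if pretrained.keys() != finetuned.keys():
        raise ValueError("both checkpoints must have the same parameter names")
    return {
        name: (1.0 - alpha) * pretrained[name] + alpha * finetuned[name]
        for name in pretrained
    }

# Hypothetical two-parameter checkpoints, for illustration only.
pretrained = {"w": np.array([0.0, 2.0]), "b": np.array([1.0])}
finetuned = {"w": np.array([4.0, 2.0]), "b": np.array([3.0])}
merged = interpolate_weights(pretrained, finetuned, alpha=0.5)
```

Setting `alpha` to 0 recovers the pre-trained policy and setting it to 1 recovers the finetuned one, so the coefficient trades off retained general capability against adaptation to the new task.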

Researchers at Physical Intelligence optimized Real-Time Chunking for Vision-Language-Action models by introducing training-time action conditioning, which moves the computationally intensive prefix conditioning from inference to training. The approach maintained task performance and execution speed while reducing latency from 135 ms to 108 ms, and it was more robust than prior methods at higher inference delays.

This document introduces foundational concepts and algorithms in Deep Reinforcement Learning (DRL) and Deep Imitation Learning (DIL) for embodied agents, providing a self-contained pedagogical resource from ISCTE – University Institute of Lisbon. It offers clear, in-depth explanations of core methods, including necessary mathematical prerequisites, to build a strong understanding for learners.

09 Dec 2025

OSMO is an open-source tactile glove platform designed to capture both shear and normal forces from human demonstrations, facilitating direct transfer of these skills to robots. Policies trained using OSMO achieved 71.69% success in a wiping task, outperforming vision-only baselines (55.75%) by eliminating contact-related failures.

An independent research team secured 1st place in the 2025 BEHAVIOR Challenge, achieving a 26% q-score by enhancing a Vision-Language-Action model (Pi0.5) with innovations like correlated noise for flow matching, "System 2" stage tracking, and practical inference-time heuristics. The approach demonstrated emergent recovery behaviors and addressed challenges in long-horizon, complex manipulation tasks.

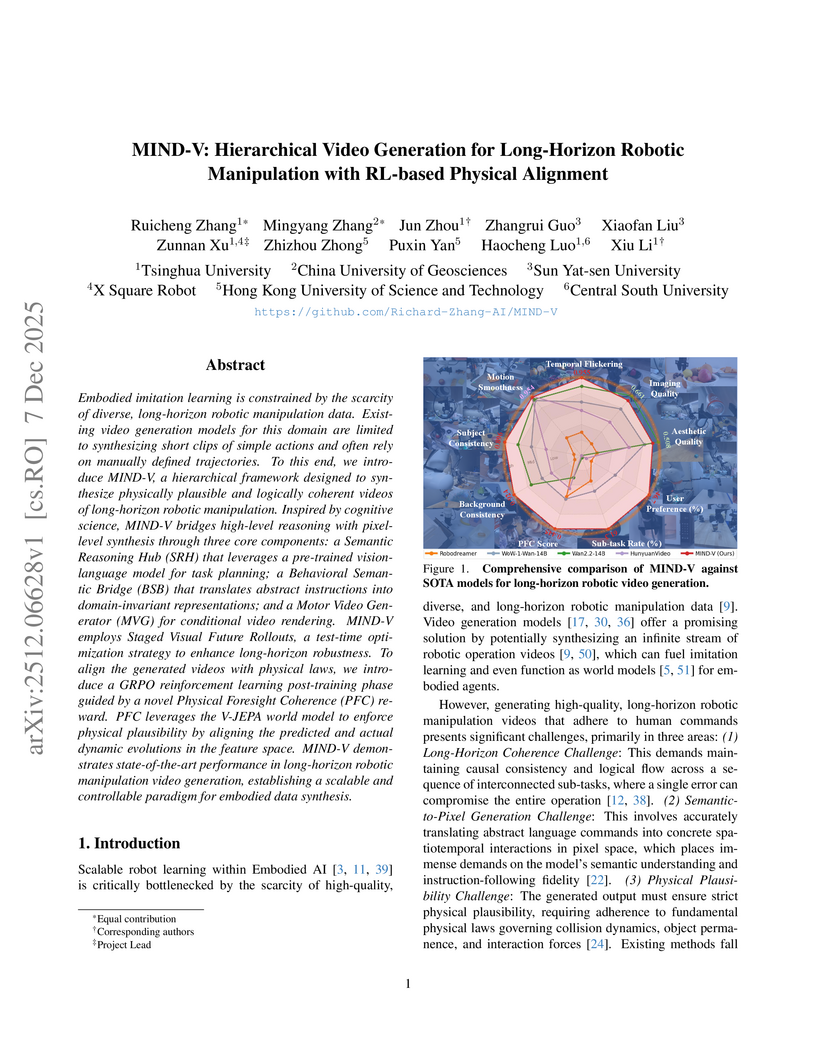

MIND-V introduces a hierarchical video generation framework for long-horizon robotic manipulation, autonomously synthesizing physically plausible and logically coherent operation videos. It employs a multi-stage architecture with reinforcement learning for physical alignment, providing a scalable method for generating robot training data.

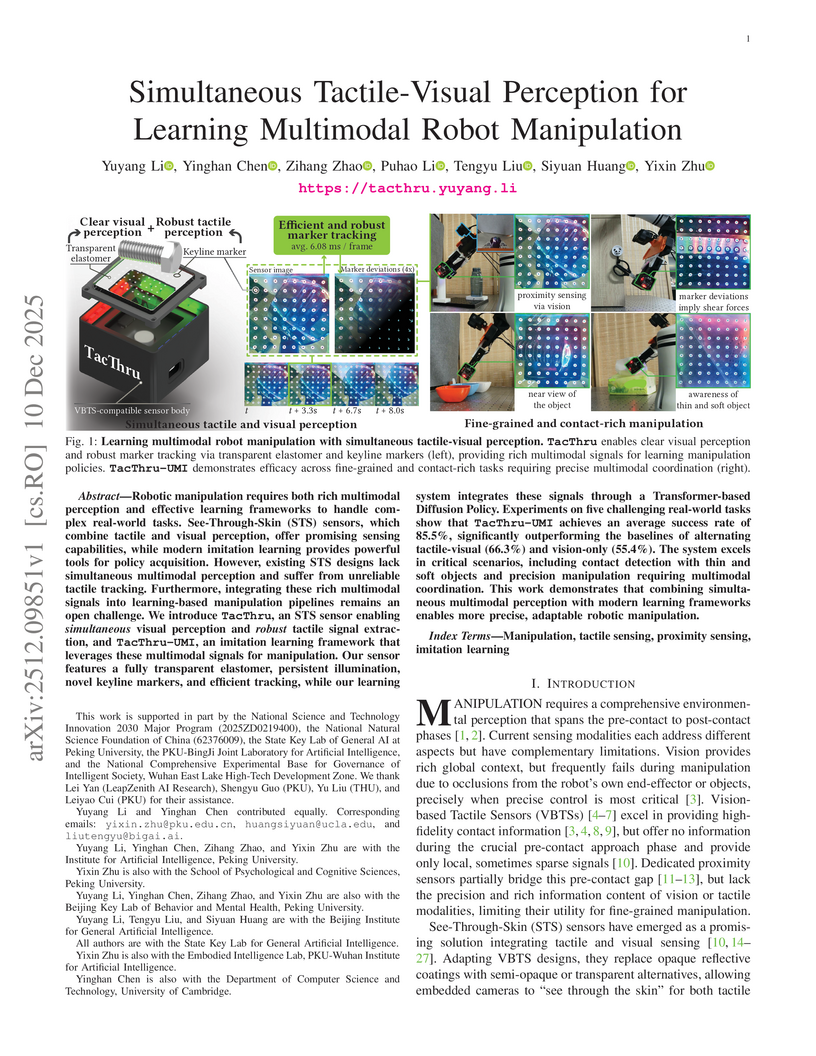

Robotic manipulation requires both rich multimodal perception and effective learning frameworks to handle complex real-world tasks. See-through-skin (STS) sensors, which combine tactile and visual perception, offer promising sensing capabilities, while modern imitation learning provides powerful tools for policy acquisition. However, existing STS designs lack simultaneous multimodal perception and suffer from unreliable tactile tracking. Furthermore, integrating these rich multimodal signals into learning-based manipulation pipelines remains an open challenge. We introduce TacThru, an STS sensor enabling simultaneous visual perception and robust tactile signal extraction, and TacThru-UMI, an imitation learning framework that leverages these multimodal signals for manipulation. Our sensor features a fully transparent elastomer, persistent illumination, novel keyline markers, and efficient tracking, while our learning system integrates these signals through a Transformer-based Diffusion Policy. Experiments on five challenging real-world tasks show that TacThru-UMI achieves an average success rate of 85.5%, significantly outperforming the baselines of alternating tactile-visual (66.3%) and vision-only (55.4%). The system excels in critical scenarios, including contact detection with thin and soft objects and precision manipulation requiring multimodal coordination. This work demonstrates that combining simultaneous multimodal perception with modern learning frameworks enables more precise, adaptable robotic manipulation.

08 Dec 2025

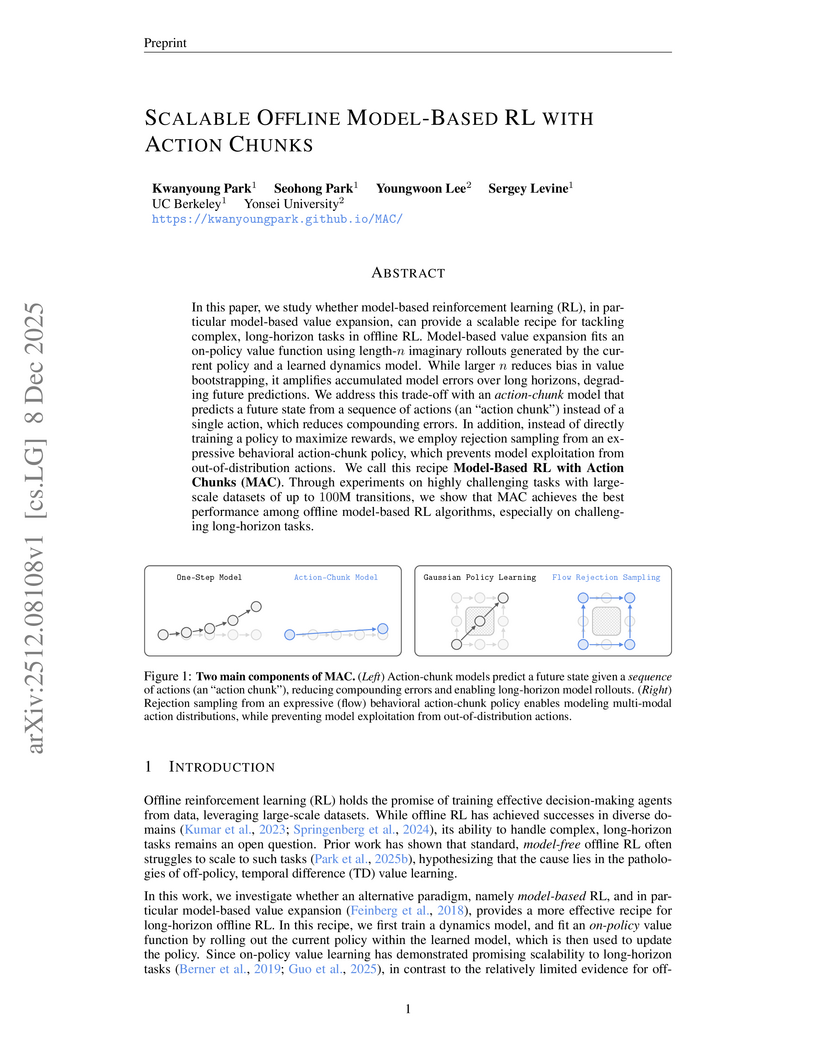

MAC introduces a model-based offline reinforcement learning approach that uses action chunks and flow-based generative policies to tackle complex, long-horizon tasks. It achieves state-of-the-art performance on challenging manipulation benchmarks, outperforming prior model-based methods and many model-free algorithms.

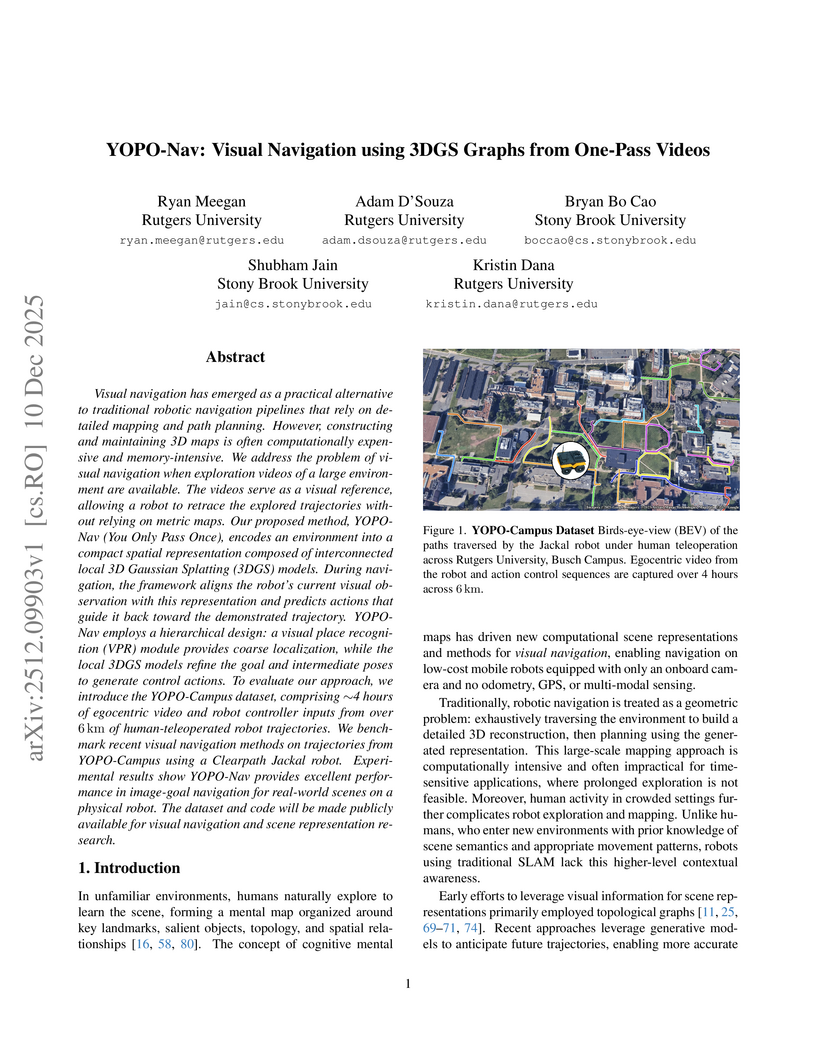

Visual navigation has emerged as a practical alternative to traditional robotic navigation pipelines that rely on detailed mapping and path planning. However, constructing and maintaining 3D maps is often computationally expensive and memory-intensive. We address the problem of visual navigation when exploration videos of a large environment are available. The videos serve as a visual reference, allowing a robot to retrace the explored trajectories without relying on metric maps. Our proposed method, YOPO-Nav (You Only Pass Once), encodes an environment into a compact spatial representation composed of interconnected local 3D Gaussian Splatting (3DGS) models. During navigation, the framework aligns the robot's current visual observation with this representation and predicts actions that guide it back toward the demonstrated trajectory. YOPO-Nav employs a hierarchical design: a visual place recognition (VPR) module provides coarse localization, while the local 3DGS models refine the goal and intermediate poses to generate control actions. To evaluate our approach, we introduce the YOPO-Campus dataset, comprising 4 hours of egocentric video and robot controller inputs from over 6 km of human-teleoperated robot trajectories. We benchmark recent visual navigation methods on trajectories from YOPO-Campus using a Clearpath Jackal robot. Experimental results show YOPO-Nav provides excellent performance in image-goal navigation for real-world scenes on a physical robot. The dataset and code will be made publicly available for visual navigation and scene representation research.

10 Dec 2025

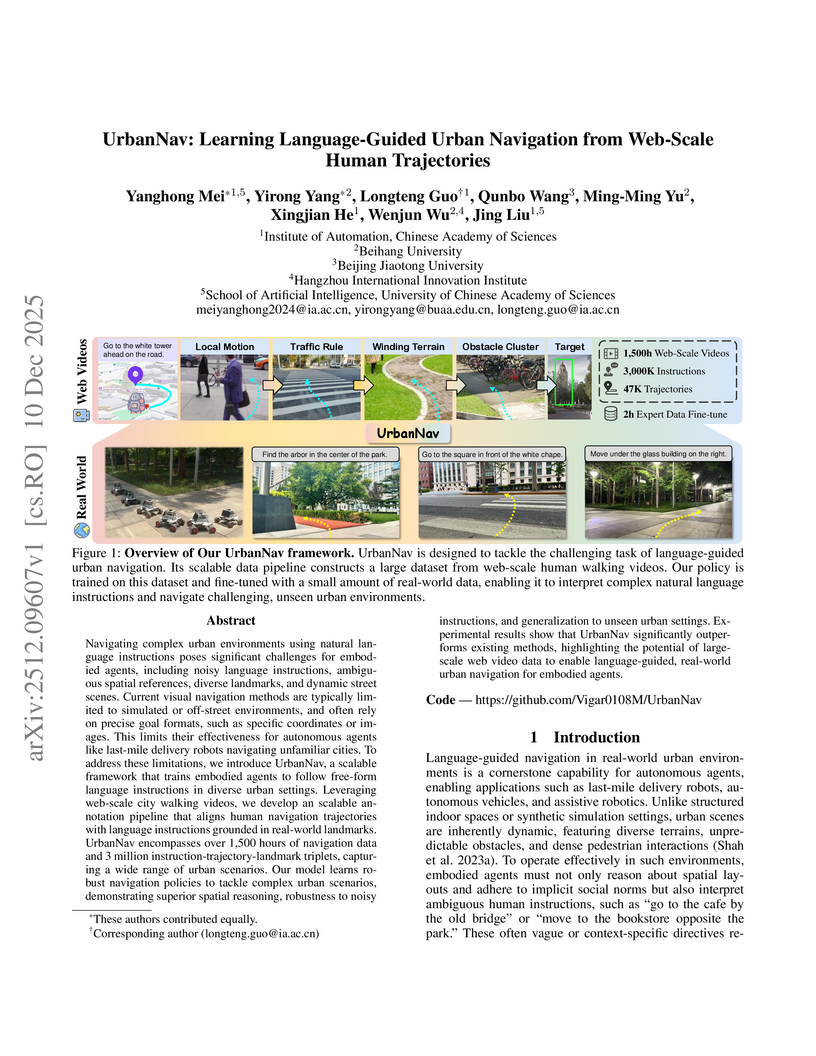

Navigating complex urban environments using natural language instructions poses significant challenges for embodied agents, including noisy language instructions, ambiguous spatial references, diverse landmarks, and dynamic street scenes. Current visual navigation methods are typically limited to simulated or off-street environments, and often rely on precise goal formats, such as specific coordinates or images. This limits their effectiveness for autonomous agents like last-mile delivery robots navigating unfamiliar cities. To address these limitations, we introduce UrbanNav, a scalable framework that trains embodied agents to follow free-form language instructions in diverse urban settings. Leveraging web-scale city walking videos, we develop a scalable annotation pipeline that aligns human navigation trajectories with language instructions grounded in real-world landmarks. UrbanNav encompasses over 1,500 hours of navigation data and 3 million instruction-trajectory-landmark triplets, capturing a wide range of urban scenarios. Our model learns robust navigation policies to tackle complex urban scenarios, demonstrating superior spatial reasoning, robustness to noisy instructions, and generalization to unseen urban settings. Experimental results show that UrbanNav significantly outperforms existing methods, highlighting the potential of large-scale web video data to enable language-guided, real-world urban navigation for embodied agents.

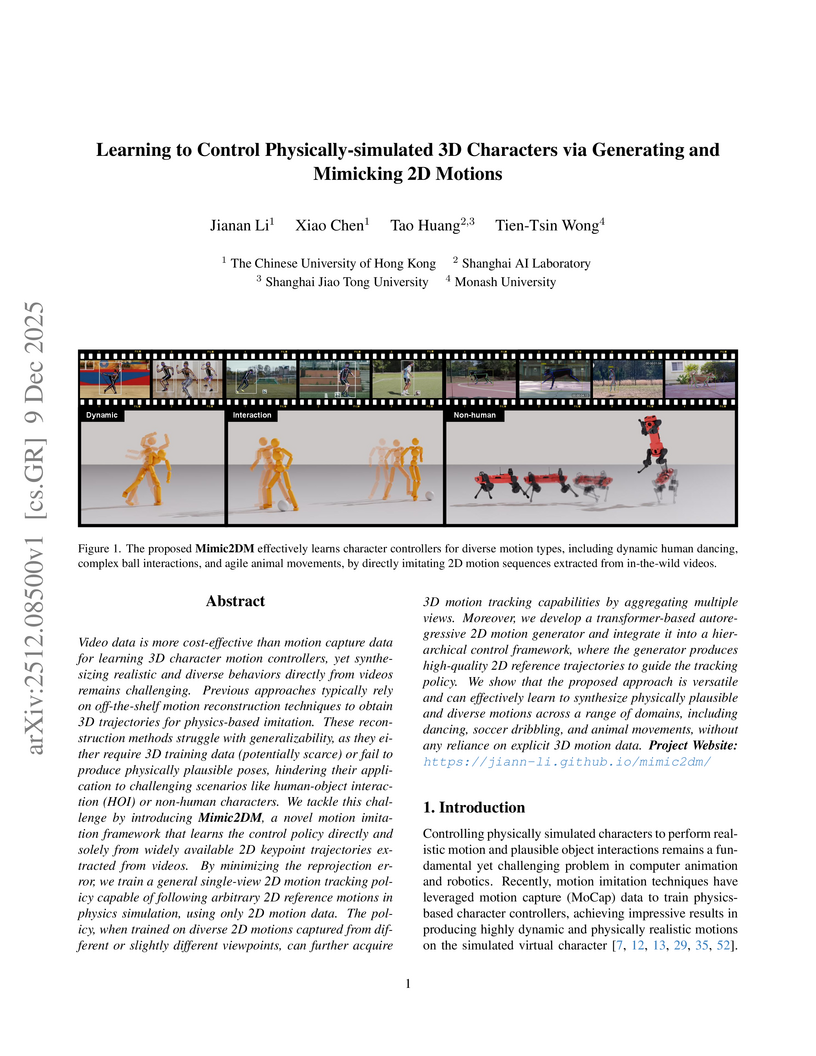

Mimic2DM is a framework that learns to control physically simulated 3D characters from abundant 2D video data, bypassing explicit 3D reconstruction by formulating motion imitation as a physics-based 2D motion tracking problem. The system enables characters to perform complex human-object interactions and animal locomotion, outperforming two-stage methods and exhibiting implicit 3D understanding from diverse 2D viewpoints.

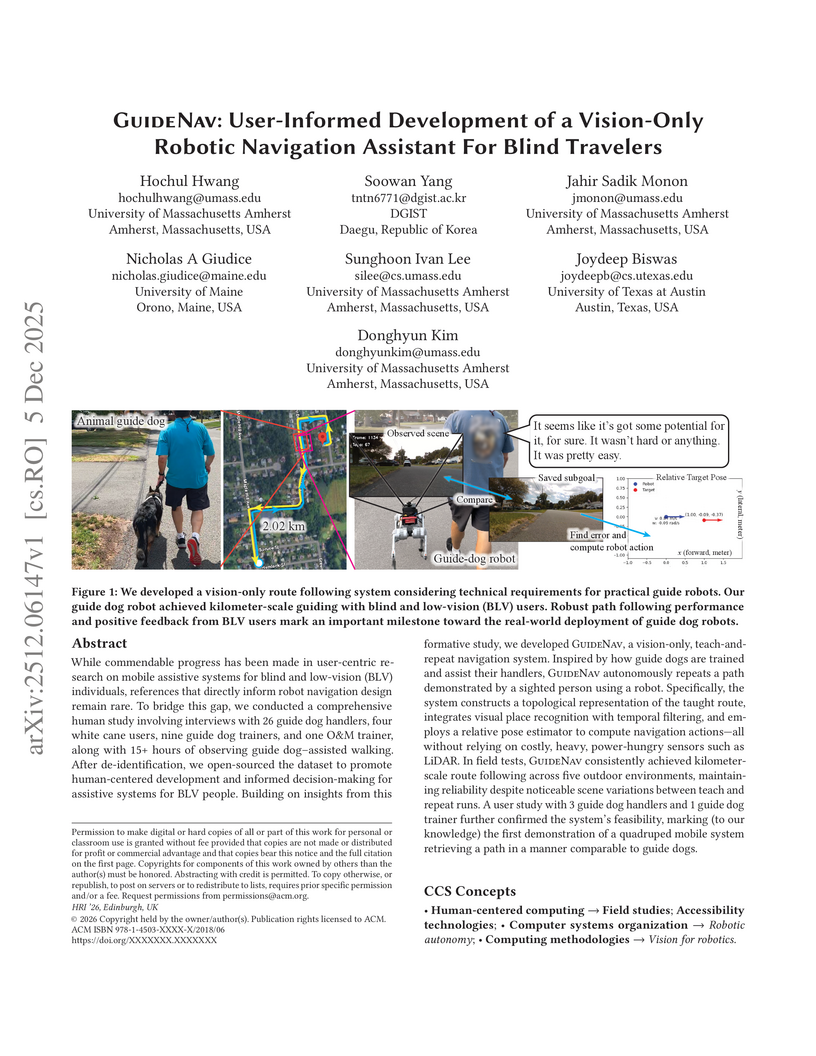

While commendable progress has been made in user-centric research on mobile assistive systems for blind and low-vision (BLV) individuals, references that directly inform robot navigation design remain rare. To bridge this gap, we conducted a comprehensive human study involving interviews with 26 guide dog handlers, four white cane users, nine guide dog trainers, and one O&M trainer, along with 15+ hours of observing guide dog-assisted walking. After de-identification, we open-sourced the dataset to promote human-centered development and informed decision-making for assistive systems for BLV people. Building on insights from this formative study, we developed GuideNav, a vision-only, teach-and-repeat navigation system. Inspired by how guide dogs are trained and assist their handlers, GuideNav autonomously repeats a path demonstrated by a sighted person using a robot. Specifically, the system constructs a topological representation of the taught route, integrates visual place recognition with temporal filtering, and employs a relative pose estimator to compute navigation actions, all without relying on costly, heavy, power-hungry sensors such as LiDAR. In field tests, GuideNav consistently achieved kilometer-scale route following across five outdoor environments, maintaining reliability despite noticeable scene variations between teach and repeat runs. A user study with 3 guide dog handlers and 1 guide dog trainer further confirmed the system's feasibility, marking (to our knowledge) the first demonstration of a quadruped mobile system retrieving a path in a manner comparable to guide dogs.

Inverse reinforcement learning aims to infer the reward function that explains expert behavior observed through trajectories of state-action pairs. A long-standing difficulty in classical IRL is the non-uniqueness of the recovered reward: many reward functions can induce the same optimal policy, rendering the inverse problem ill-posed. In this paper, we develop a statistical framework for Inverse Entropy-regularized Reinforcement Learning that resolves this ambiguity by combining entropy regularization with a least-squares reconstruction of the reward from the soft Bellman residual. This combination yields a unique and well-defined so-called least-squares reward consistent with the expert policy. We model the expert demonstrations as a Markov chain with the invariant distribution defined by an unknown expert policy π⋆ and estimate the policy by a penalized maximum-likelihood procedure over a class of conditional distributions on the action space. We establish high-probability bounds for the excess Kullback-Leibler divergence between the estimated policy and the expert policy, accounting for statistical complexity through covering numbers of the policy class. These results lead to non-asymptotic minimax optimal convergence rates for the least-squares reward function, revealing the interplay between smoothing (entropy regularization), model complexity, and sample size. Our analysis bridges the gap between behavior cloning, inverse reinforcement learning, and modern statistical learning theory.
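To make the source of the ambiguity concrete, the standard soft Bellman relations for entropy-regularized RL can be written out. The symbols below (regularization weight $\lambda$, discount $\gamma$, soft value $V$, soft action-value $Q$) are assumed notation, not taken from the abstract, and the paper's exact least-squares criterion is not stated there.

$$
\pi^\star(a \mid s) = \exp\!\Big(\tfrac{1}{\lambda}\big(Q(s,a) - V(s)\big)\Big),
\qquad
Q(s,a) = r(s,a) + \gamma\,\mathbb{E}\big[V(s') \mid s,a\big]
$$

$$
r(s,a) = \lambda \log \pi^\star(a \mid s) + V(s) - \gamma\,\mathbb{E}\big[V(s') \mid s,a\big]
$$

Any choice of $V$ in the second line yields a reward under which $\pi^\star$ is soft-optimal, which is the non-uniqueness described above. A least-squares criterion is one way to single out a particular reward from this family.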

03 Dec 2025

Researchers introduced Score-Matching Motion Priors (SMP), a framework that leverages pre-trained motion diffusion models as static, reusable reward functions for physics-based character control. This method generates high-quality, naturalistic character movements and supports style adaptation and composition across various tasks, matching or exceeding performance of state-of-the-art adversarial methods while reducing data dependency.

Invariance Co-training introduces a method to enhance robot visual generalization by leveraging static image data, both synthetic and real-world, to teach policies robustness against variations in camera perspective, lighting, and distractor objects. This approach improves average success rates by approximately 40% compared to standard behavioral cloning in diverse visual environments.

The `GenMimic` framework enables humanoid robots to execute actions from noisy, AI-generated videos in a zero-shot manner by combining 4D human reconstruction and retargeting with a robust reinforcement learning policy. This approach facilitates successful physical reproduction on a Unitree G1 robot and demonstrates improved performance on a newly introduced benchmark dataset of synthetic motions.
