Hochschule Aalen

20 Jan 2023

We report a joint experimental and theoretical study of a three-sideband (3-SB) modification of the "reconstruction of attosecond beating by interference of two-photon transitions" (RABBIT) setup. The 3-SB RABBIT scheme makes it possible to investigate phases arising from interference between transitions of different orders in the continuum. A further strength of the method is its ability to isolate the atomic phases, independent of any chirp in the harmonics, by comparing the RABBIT phases extracted from specific SB groups formed by two adjacent harmonics. We verify earlier predictions that the phases and the corresponding time delays in the three SBs extracted from angle-integrated measurements become similar with increasing photoelectron energy. A variation in the angular dependence of the RABBIT phases in the three SBs results from the distinct Wigner and continuum-continuum coupling phases associated with the individual angular-momentum channels. A qualitative explanation of this dependence is attempted by invoking a propensity rule. Comparison between the experimental data and predictions from an R-matrix with time dependence (close-coupling) calculation shows qualitative agreement in the observed trends.
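The chirp-independence argument can be illustrated numerically. The sketch below is our simplification, not the authors' analysis: it assumes each sideband yield oscillates with the delay τ as S(τ) = A + B cos(Ωτ − φ) with a known oscillation frequency Ω, and that φ is the sum of a harmonic (chirp) phase shared by all three sidebands formed by the same pair of adjacent harmonics plus a sideband-specific atomic phase. The function names `sideband_phase` and `wrap`, the frequency, and all phase values are hypothetical. The phase is recovered by a discrete Fourier projection, which is exact when the delays are uniformly sampled over an integer number of periods.

```python
import math

def sideband_phase(delays, signal, omega):
    """Return phi such that signal ~= A + B*cos(omega*delay - phi).

    Assumes uniformly spaced delays covering an integer number of periods
    2*pi/omega (endpoint excluded), so projecting onto cos and sin isolates
    the oscillating component exactly.
    """
    n = len(delays)
    c = 2.0 / n * sum(y * math.cos(omega * t) for t, y in zip(delays, signal))
    s = 2.0 / n * sum(y * math.sin(omega * t) for t, y in zip(delays, signal))
    return math.atan2(s, c)  # c = B*cos(phi), s = B*sin(phi)

def wrap(phi):
    """Map a phase (difference) into [-pi, pi]."""
    return math.atan2(math.sin(phi), math.cos(phi))

# Hypothetical synthetic scan: 64 delays spanning 3 oscillation periods.
omega = 2.0                      # oscillation angular frequency (arbitrary units)
period = 2 * math.pi / omega
delays = [j * 3 * period / 64 for j in range(64)]

chirp_phase = 1.2                # harmonic phase shared by the SB group (assumed)
atomic_phases = [0.3, 0.5, 0.9]  # hypothetical atomic phases of the three SBs

phases = []
for atomic in atomic_phases:
    signal = [1.0 + 0.4 * math.cos(omega * t - (chirp_phase + atomic)) for t in delays]
    phases.append(sideband_phase(delays, signal, omega))

# Differences within the group no longer contain chirp_phase.
differences = [wrap(phases[k + 1] - phases[k]) for k in range(2)]
print(differences)
```

Under the same assumptions, a phase converts to a time delay as τ = φ/Ω; in standard RABBIT, Ω is twice the fundamental angular frequency.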

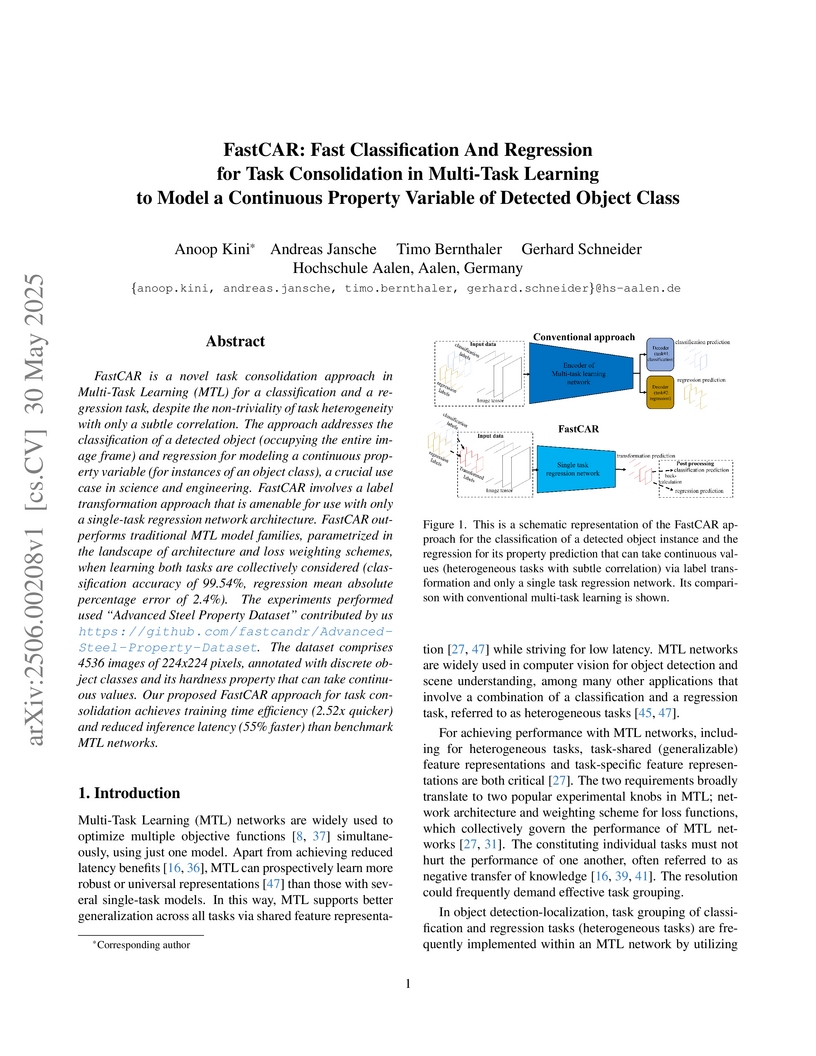

FastCAR is a novel task-consolidation approach in Multi-Task Learning (MTL) for a classification task and a regression task, despite the non-trivial heterogeneity of the two tasks, which are only subtly correlated. The approach addresses the classification of a detected object (occupying the entire image frame) and the regression of a continuous property variable (for instances of an object class), a crucial use case in science and engineering. FastCAR uses a label transformation that allows a single-task regression network architecture to handle both tasks. FastCAR outperforms traditional MTL model families, parametrized over the landscape of architectures and loss-weighting schemes, when performance on both tasks is considered jointly (classification accuracy of 99.54%, regression mean absolute percentage error of 2.4%). The experiments used the "Advanced Steel Property Dataset" contributed by us (this https URL). The dataset comprises 4536 images of 224x224 pixels, annotated with discrete object classes and their hardness property, which takes continuous values. Our FastCAR approach for task consolidation trains faster (2.52x quicker) and has lower inference latency (55% faster) than benchmark MTL networks.
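The abstract does not spell out FastCAR's label transformation. As a purely hypothetical illustration of how a class label and a continuous property can be packed into one regression target, so that a single-task regression network can serve both tasks, the sketch below uses a simple offset encoding: class k occupies the interval [2k, 2k + 1], and the position inside that interval carries the min-max-normalized property. The names `encode`, `decode`, `SPACING`, and the property range are our assumptions, not the authors' method. The helpers `accuracy` and `mape` compute the two metrics reported above.

```python
import math

SPACING = 2.0  # hypothetical: class k -> target interval [k*SPACING, k*SPACING + 1]

def encode(cls, prop, p_min, p_max):
    """Pack (class index, continuous property) into one regression target."""
    return cls * SPACING + (prop - p_min) / (p_max - p_min)

def decode(y, n_classes, p_min, p_max):
    """Recover (class index, property) from a predicted regression value."""
    # Nearest class interval; decision boundaries lie in the middle of the gaps.
    cls = math.floor((y + (SPACING - 1.0) / 2) / SPACING)
    cls = min(max(cls, 0), n_classes - 1)
    frac = min(max(y - cls * SPACING, 0.0), 1.0)  # clip into the class interval
    return cls, p_min + frac * (p_max - p_min)

def accuracy(true_cls, pred_cls):
    """Fraction of correctly predicted class indices."""
    return sum(t == p for t, p in zip(true_cls, pred_cls)) / len(true_cls)

def mape(true_vals, pred_vals):
    """Mean absolute percentage error in percent (true values must be nonzero)."""
    total = sum(abs(t - p) / abs(t) for t, p in zip(true_vals, pred_vals))
    return 100.0 * total / len(true_vals)

# Hypothetical example: 3 classes, property range 100..500 (arbitrary units).
y = encode(2, 300.0, 100.0, 500.0)
cls, prop = decode(y + 0.3, 3, 100.0, 500.0)  # class 2, property approx. 420
```

The gap between class intervals is a design choice: it lets small regression errors be absorbed without flipping the decoded class.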

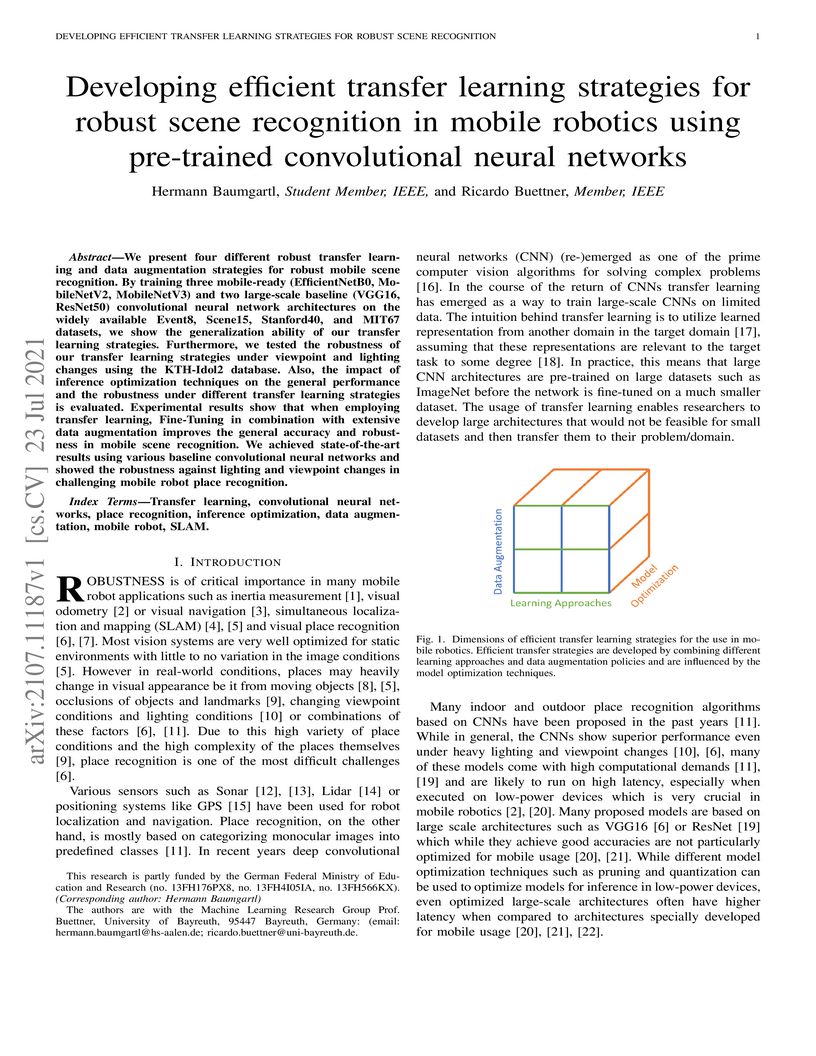

We present four different transfer learning and data augmentation strategies for robust mobile scene recognition. By training three mobile-ready (EfficientNetB0, MobileNetV2, MobileNetV3) and two large-scale baseline (VGG16, ResNet50) convolutional neural network architectures on the widely available Event8, Scene15, Stanford40, and MIT67 datasets, we demonstrate the generalization ability of our transfer learning strategies. Furthermore, we test the robustness of these strategies under viewpoint and lighting changes using the KTH-Idol2 database. We also evaluate the impact of inference optimization techniques on the general performance and on the robustness under the different transfer learning strategies. Experimental results show that, when employing transfer learning, fine-tuning combined with extensive data augmentation improves both general accuracy and robustness in mobile scene recognition. We achieve state-of-the-art results using various baseline convolutional neural networks and demonstrate robustness against lighting and viewpoint changes in challenging mobile robot place recognition.
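Because lighting changes are one of the robustness conditions above, a minimal sketch of photometric data augmentation may help. It is a hypothetical, dependency-free stand-in, not the authors' pipeline: a grayscale image is a list of rows with values in [0, 1], and each call applies a random horizontal flip plus random contrast and brightness jitter. The function name `augment` and the jitter ranges are our assumptions; in practice, libraries such as torchvision offer comparable transforms (`RandomHorizontalFlip`, `ColorJitter`).

```python
import random

def augment(img, rng, max_brightness=0.2, max_contrast=0.3, p_flip=0.5):
    """Return a randomly flipped and brightness/contrast-jittered copy of img.

    img is a grayscale image given as a list of rows with pixel values in
    [0, 1]; outputs are clipped back into [0, 1].
    """
    if rng.random() < p_flip:
        img = [row[::-1] for row in img]  # mirror left/right
    contrast = 1.0 + rng.uniform(-max_contrast, max_contrast)
    brightness = rng.uniform(-max_brightness, max_brightness)
    mean = sum(map(sum, img)) / sum(map(len, img))
    # Scale deviations from the mean (contrast), then shift everything (brightness).
    return [
        [min(1.0, max(0.0, (v - mean) * contrast + mean + brightness)) for v in row]
        for row in img
    ]

# Usage: a seeded generator makes the augmented batch reproducible.
image = [[0.1, 0.5, 0.9], [0.2, 0.4, 0.6]]
rng = random.Random(0)
batch = [augment(image, rng) for _ in range(4)]
```

Passing the generator explicitly, rather than using the global `random` state, keeps augmentation reproducible across training runs.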
