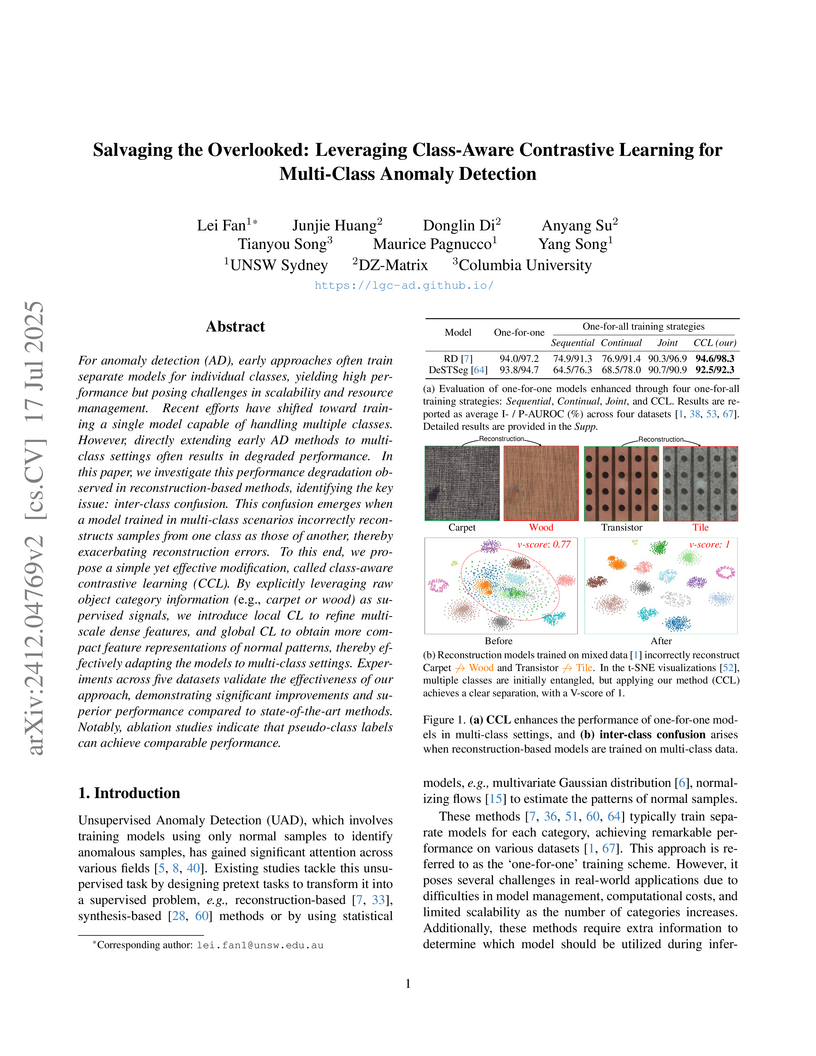

Researchers at UNSW Sydney developed Class-aware Contrastive Learning (CCL), a modular framework that mitigates inter-class confusion in multi-class anomaly detection by adding local and global contrastive losses to existing reconstruction-based models. CCL achieved an image-level AUROC of 90.6% across 60 object categories. It also performed comparably when ground-truth class labels were replaced with pseudo-class labels, which makes it suitable for truly unsupervised scenarios.
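The summary does not give CCL's actual local or global loss formulas. To show what "class-aware" contrast means in general, here is a minimal sketch of a generic supervised contrastive loss. It is not the authors' method. Embeddings that share a class label are pulled together, and all other samples in the batch act as negatives. The function name `class_aware_contrastive_loss` and the `temperature` parameter are hypothetical and chosen for illustration. The `labels` argument could hold either ground-truth category IDs or pseudo-class labels.

```python
import math
from typing import List, Sequence


def l2_normalize(v: Sequence[float]) -> List[float]:
    # Project a feature vector onto the unit sphere (zero vectors are left unchanged).
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]


def class_aware_contrastive_loss(
    features: Sequence[Sequence[float]],
    labels: Sequence[int],
    temperature: float = 0.1,
) -> float:
    """Generic supervised contrastive loss (illustrative sketch, not CCL's exact loss).

    For each anchor, samples with the same label are positives and every other
    sample in the batch contributes to the softmax denominator.
    """
    z = [l2_normalize(f) for f in features]
    n = len(z)
    total, anchors = 0.0, 0
    for i in range(n):
        positives = [j for j in range(n) if j != i and labels[j] == labels[i]]
        if not positives:
            continue  # an anchor with no same-class partner gives no signal
        # Cosine similarity scaled by temperature, for every sample in the batch.
        logits = [sum(a * b for a, b in zip(z[i], z[j])) / temperature for j in range(n)]
        # Numerically stable log-sum-exp over all non-anchor samples.
        others = [logits[j] for j in range(n) if j != i]
        m = max(others)
        log_denom = m + math.log(sum(math.exp(x - m) for x in others))
        # Average negative log-probability of the positives for this anchor.
        total += -sum(logits[p] - log_denom for p in positives) / len(positives)
        anchors += 1
    return total / anchors if anchors else 0.0
```

With features such as `[[1, 0], [0.9, 0.1], [0, 1], [0.1, 0.9]]`, labels `[0, 0, 1, 1]` group nearby embeddings and produce a much lower loss than labels `[0, 1, 0, 1]`. Minimizing a loss like this penalizes embeddings that drift toward other classes, which is the kind of inter-class confusion the summary says CCL targets.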
