
Knowledgeverse AI

KnowRL: Teaching Language Models to Know What They Know

13 Oct 2025

KnowRL is a framework that enhances large language models' (LLMs') self-knowledge by enabling them to accurately identify the boundaries of their own capabilities and knowledge. Using a self-supervised reinforcement learning method, it improves intrinsic self-knowledge accuracy by 23-28% and boosts F1 scores on an external benchmark by 10-12%.

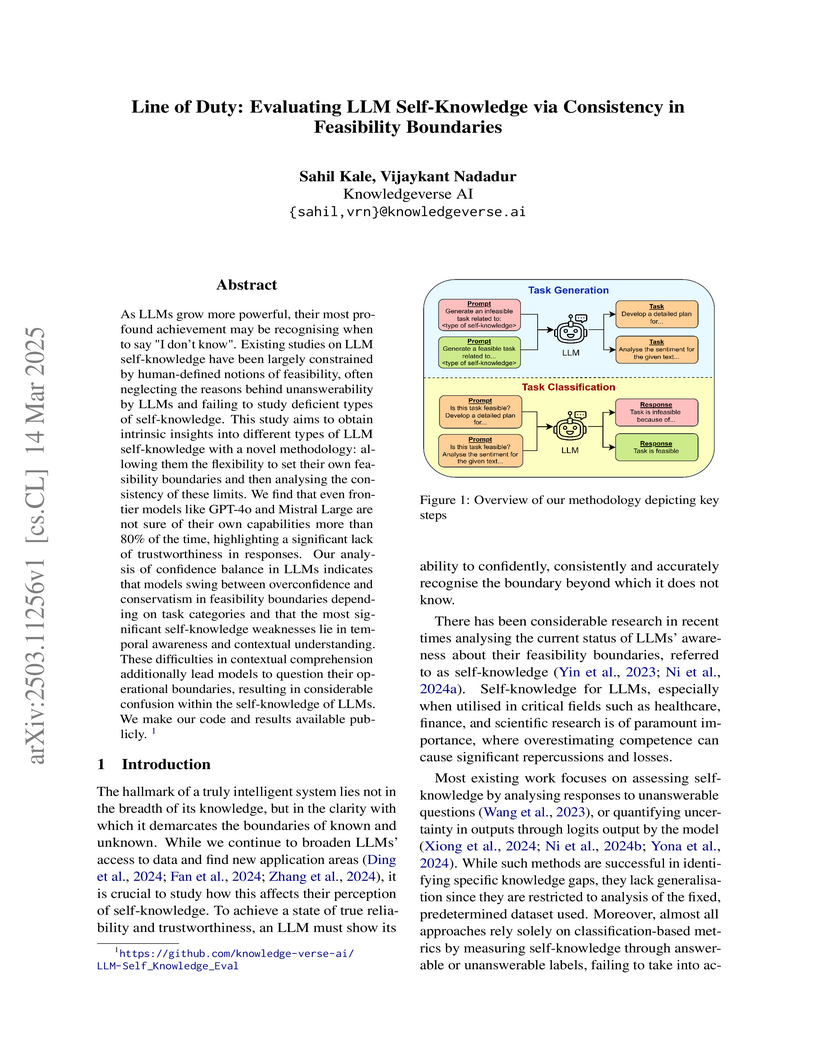

Line of Duty: Evaluating LLM Self-Knowledge via Consistency in Feasibility Boundaries

14 Mar 2025

Researchers from Knowledgeverse AI developed a novel intrinsic evaluation framework that assesses large language model (LLM) self-knowledge by having models define their own feasibility boundaries and then test themselves against them. The study reveals that even frontier LLMs misjudge their capabilities at least 20% of the time, exposing a consistency gap in their self-perception.
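As a rough illustration of what "misjudging their capabilities" can mean as a number, the sketch below assumes each task yields a pair: the model's own prediction of whether the task is feasible for it, and whether its actual attempt succeeded. The misjudgment rate is then the share of tasks where the two disagree. The `Judgment` type and `misjudgment_rate` function are hypothetical names, not taken from the paper, and the paper's exact metric may differ.

```python
from dataclasses import dataclass


@dataclass
class Judgment:
    predicted_feasible: bool  # the model's own claim before attempting the task
    succeeded: bool           # whether its actual attempt was judged correct


def misjudgment_rate(judgments: list[Judgment]) -> float:
    """Fraction of tasks where the self-assessment disagrees with the outcome."""
    if not judgments:
        raise ValueError("need at least one judgment")
    mismatches = sum(j.predicted_feasible != j.succeeded for j in judgments)
    return mismatches / len(judgments)


# Hypothetical toy data, not results from the study.
sample = [
    Judgment(True, True),
    Judgment(True, False),   # overconfident: claimed feasible, then failed
    Judgment(False, False),
    Judgment(False, True),   # underconfident: claimed infeasible, then succeeded
    Judgment(True, True),
]
print(misjudgment_rate(sample))  # 0.4
```

Counting both overconfident and underconfident cases treats any disagreement as a misjudgment; splitting them apart would show which direction the errors lean.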

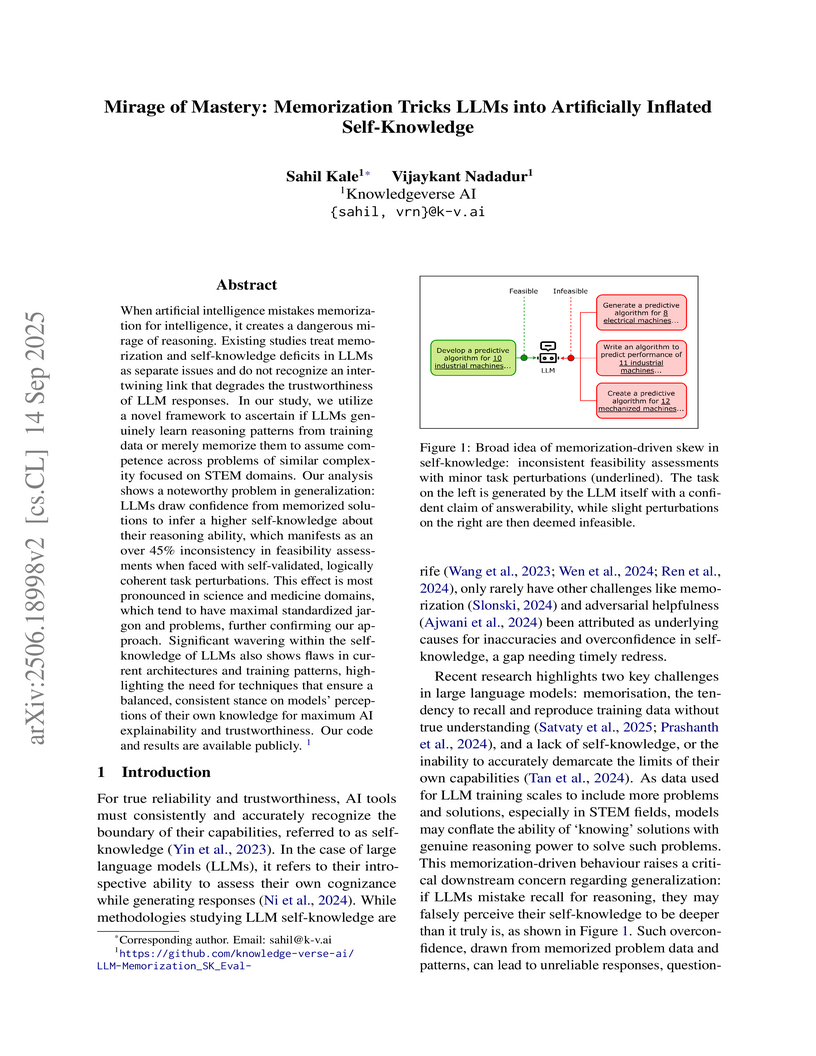

Mirage of Mastery: Memorization Tricks LLMs into Artificially Inflated Self-Knowledge

14 Sep 2025

When artificial intelligence mistakes memorization for intelligence, it creates a dangerous mirage of reasoning. Existing studies treat memorization and self-knowledge deficits in LLMs as separate issues and do not recognize the link between them that degrades the trustworthiness of LLM responses. In our study, we use a novel framework to determine whether LLMs genuinely learn reasoning patterns from training data or merely memorize them and then assume competence on problems of similar complexity, focusing on STEM domains. Our analysis reveals a notable generalization problem: LLMs draw confidence from memorized solutions and overestimate their own reasoning ability, which manifests as an inconsistency of over 45% in feasibility assessments when they face self-validated, logically coherent task perturbations. The effect is most pronounced in science and medicine, the domains with the most standardized jargon and problem types, which further supports our approach. This significant wavering in LLM self-knowledge also exposes flaws in current architectures and training practices, highlighting the need for techniques that give models a balanced, consistent view of their own knowledge, for maximum AI explainability and trustworthiness. Our code and results are publicly available at this https URL.
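To make "inconsistency in feasibility assessments" under perturbation concrete, here is a minimal sketch. It assumes the model gives a yes/no feasibility verdict for each original problem and for each of its perturbed variants, and counts inconsistency as the share of perturbed variants whose verdict differs from the verdict on the original. The function name `flip_rate` and the dictionary layout are hypothetical; the paper's exact definition of inconsistency may differ.

```python
def flip_rate(original: dict[str, bool], perturbed: dict[str, list[bool]]) -> float:
    """Fraction of (task, perturbation) pairs whose feasibility verdict differs
    from the verdict the model gave for the unperturbed task."""
    total = 0
    flips = 0
    for task_id, verdict in original.items():
        for p_verdict in perturbed.get(task_id, []):
            total += 1
            flips += p_verdict != verdict  # True counts as 1, False as 0
    if total == 0:
        raise ValueError("no perturbed verdicts to compare")
    return flips / total


# Hypothetical toy verdicts, not data from the study.
original = {"q1": True, "q2": False}
perturbed = {"q1": [True, False, False], "q2": [False]}
print(flip_rate(original, perturbed))  # 0.5
```

Because the perturbations are described as logically coherent variants of the same problem, a consistent model should give the same verdict for each; every flip is therefore counted against it regardless of direction.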
