Shenzhen People's Hospital

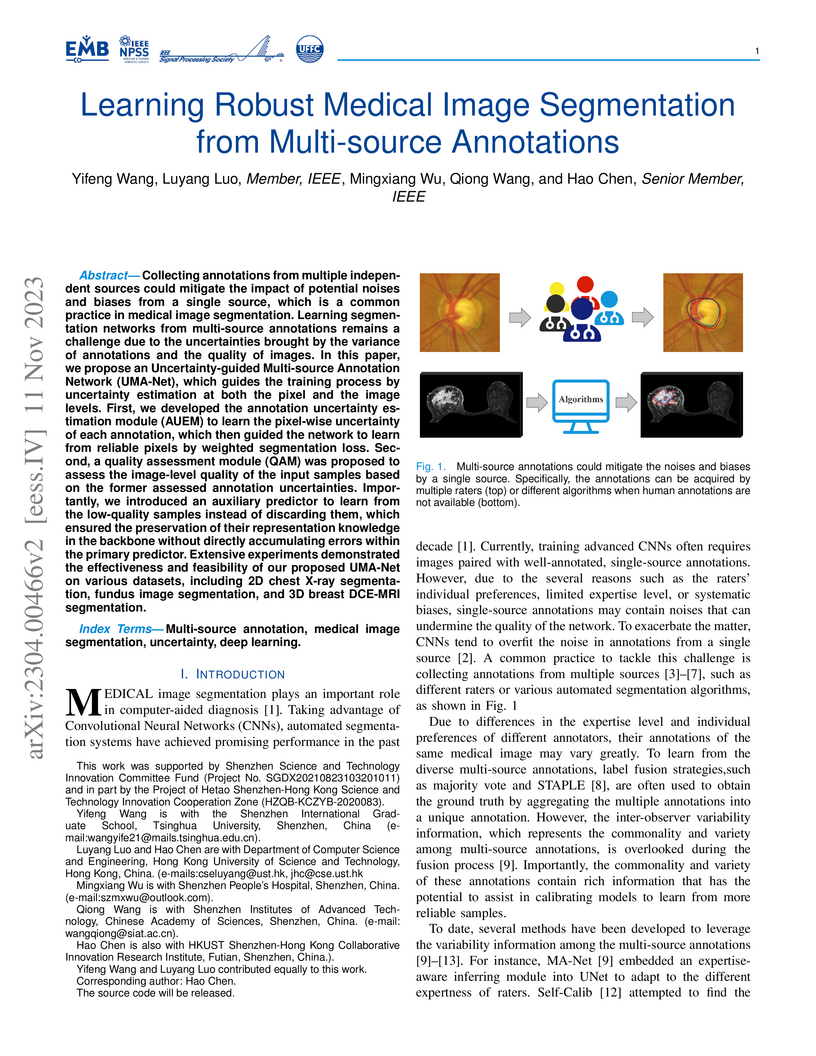

Collecting annotations from multiple independent sources can mitigate the noise and bias of any single source and is common practice in medical image segmentation. However, learning segmentation networks from multi-source annotations remains challenging because of the uncertainty introduced by variance among annotations and by image quality. In this paper, we propose an Uncertainty-guided Multi-source Annotation Network (UMA-Net), which guides the training process by uncertainty estimation at both the pixel and the image levels. First, we develop an annotation uncertainty estimation module (AUEM) to learn the pixel-wise uncertainty of each annotation, which then guides the network to learn from reliable pixels through a weighted segmentation loss. Second, we propose a quality assessment module (QAM) to assess the image-level quality of the input samples based on the previously estimated annotation uncertainties. Importantly, we introduce an auxiliary predictor that learns from the low-quality samples instead of discarding them, which preserves their representation knowledge in the backbone without directly accumulating errors in the primary predictor. Extensive experiments demonstrate the effectiveness and feasibility of the proposed UMA-Net on various datasets, including 2D chest X-ray segmentation, fundus image segmentation, and 3D breast DCE-MRI segmentation.
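As a rough illustration of what a pixel-wise weighted segmentation loss can look like, the sketch below down-weights each pixel's binary cross-entropy by its estimated uncertainty. The weighting `1 - uncertainty`, the function name, and the flat list representation are assumptions made for this example; the abstract does not specify how UMA-Net combines the AUEM output with the loss.

```python
import math

def uncertainty_weighted_bce(pred, target, uncertainty, eps=1e-7):
    """Weighted mean binary cross-entropy over pixels.

    pred:        predicted foreground probabilities in [0, 1]
    target:      annotation labels (0 or 1) from one source
    uncertainty: per-pixel uncertainty in [0, 1] (hypothetical AUEM output)
    Assumption: each pixel is weighted by (1 - uncertainty).
    """
    total, weight_sum = 0.0, 0.0
    for p, t, u in zip(pred, target, uncertainty):
        p = min(max(p, eps), 1.0 - eps)   # clamp to avoid log(0)
        w = 1.0 - u                       # reliable pixels get weight near 1
        total += w * -(t * math.log(p) + (1 - t) * math.log(1.0 - p))
        weight_sum += w
    # Normalise by the total weight so the loss scale does not depend
    # on how many pixels were down-weighted.
    return total / weight_sum if weight_sum > 0 else 0.0
```

Under this assumed scheme, a pixel whose annotation is judged fully uncertain contributes nothing to the loss, while fully reliable pixels are treated exactly as in ordinary cross-entropy.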

Two deep learning (DL) models were developed, one using radiograph-level annotations (disease present or absent) and one using fine-grained lesion-level annotations (lesion bounding boxes), named CheXNet and CheXDet, respectively. The models' internal classification and lesion localization performance were compared on a testing set (n=2,922), external classification performance was compared on the NIH-Google (n=4,376) and PadChest (n=24,536) datasets, and external lesion localization performance was compared on the NIH-ChestX-ray14 dataset (n=880). The models were also compared to radiologists on a subset of the internal testing set (n=496). Given sufficient training data, both models performed comparably to radiologists. CheXDet achieved significant improvement for external classification, such as in classifying fracture on NIH-Google (CheXDet area under the ROC curve [AUC]: 0.67, CheXNet AUC: 0.51; p<.001) and PadChest (CheXDet AUC: 0.78, CheXNet AUC: 0.55; p<.001). CheXDet achieved higher lesion detection performance than CheXNet for most abnormalities on all datasets, such as in detecting pneumothorax on the internal set (CheXDet jackknife alternative free-response ROC figure of merit [JAFROC-FOM]: 0.87, CheXNet JAFROC-FOM: 0.13; p<.001) and NIH-ChestX-ray14 (CheXDet JAFROC-FOM: 0.55, CheXNet JAFROC-FOM: 0.04; p<.001). To summarize, fine-grained annotations overcame shortcut learning and enabled DL models to identify correct lesion patterns, improving the models' generalizability.
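For readers less familiar with the AUC figures quoted above, the following minimal sketch computes the area under the ROC curve as the probability that a randomly chosen positive case is scored higher than a randomly chosen negative case, with ties counted as one half. The function name and toy inputs are illustrative only and are not taken from the study.

```python
def roc_auc(labels, scores):
    """Area under the ROC curve via pairwise comparison.

    labels: 1 for disease present, 0 for disease absent
    scores: model outputs, higher meaning more likely positive
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative case")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0      # positive correctly ranked above negative
            elif p == n:
                wins += 0.5      # tie counts as half
    return wins / (len(pos) * len(neg))

# Toy example: three of the four positive/negative pairs are ordered correctly.
auc = roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])
```

On this scale, 0.5 corresponds to chance-level ranking, which gives some context for values such as 0.51 versus 0.67. The quadratic pairwise loop is chosen for clarity; for datasets of the sizes reported here a sort-based implementation would be preferable.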

31 Dec 2024

Host-response-based diagnostics can improve the accuracy of diagnosing bacterial and viral infections, thereby reducing inappropriate antibiotic prescriptions. However, existing cohorts, with limited sample sizes and coarse infection types, cannot support the development of an accurate and generalizable diagnostic model. Here, we curate the largest infection host-response transcriptome dataset, comprising 11,247 samples across 89 blood transcriptome datasets from 13 countries and 21 platforms. We build a diagnostic model for pathogen prediction, starting from a pan-infection model as the foundation (AUC = 0.97) trained on the pan-infection dataset. Then, we use knowledge distillation to efficiently transfer the insights from this "teacher" model to four lightweight pathogen "student" models, i.e., staphylococcal infection (AUC = 0.99), streptococcal infection (AUC = 0.94), HIV infection (AUC = 0.93), and RSV infection (AUC = 0.94), as well as a sepsis "student" model (AUC = 0.99). The proposed knowledge distillation framework not only facilitates the diagnosis of pathogens using pan-infection data, but also enables a cross-disease study from pan-infection to sepsis. Moreover, the framework yields highly lightweight diagnostic models, which are expected to be adaptable for deployment in clinical settings.
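The teacher-to-student transfer can be illustrated with the commonly used temperature-scaled distillation loss, in which the student matches the teacher's softened class probabilities while also fitting the true label. This is a generic sketch of that standard formulation, not the authors' implementation; the function names, the temperature of 2.0, and the mixing weight `alpha` are assumptions chosen for the example.

```python
import math

def softmax(logits, temperature=1.0):
    """Numerically stable softmax with temperature scaling."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, label,
                      temperature=2.0, alpha=0.5):
    """Blend of soft-target KL divergence and hard-label cross-entropy.

    alpha weights the teacher-matching term; (1 - alpha) weights the
    ordinary cross-entropy against the true class index `label`.
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # KL(teacher || student) on the softened distributions
    kl = sum(pt * math.log(pt / ps)
             for pt, ps in zip(p_teacher, p_student) if pt > 0)
    # Cross-entropy of the student's unsoftened prediction
    ce = -math.log(softmax(student_logits)[label])
    # temperature**2 keeps the soft-term gradient scale comparable
    return alpha * temperature ** 2 * kl + (1 - alpha) * ce
```

When the student's logits equal the teacher's, the KL term vanishes and only the hard-label term remains, so the loss rewards agreement with the teacher in addition to correctness on the label.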
