Shenzhen Smartcity Communication

Fine-grained air pollution forecasting is crucial for urban management and the development of healthy buildings. Deploying portable sensors on mobile platforms such as cars and buses offers a low-cost, easy-to-maintain, and wide-coverage data collection solution. However, due to the random and uncontrollable movement patterns of these non-dedicated mobile platforms, the resulting sensor data are often incomplete and temporally inconsistent. By exploring potential training patterns in the reverse process of diffusion models, we propose Spatio-Temporal Physics-Informed Diffusion Models (STeP-Diff). STeP-Diff leverages DeepONet to model the spatial sequence of measurements along with a PDE-informed diffusion model to forecast the spatio-temporal field from incomplete and time-varying data. Through a PDE-constrained regularization framework, the denoising process asymptotically converges to the convection-diffusion dynamics, ensuring that predictions are both grounded in real-world measurements and aligned with the fundamental physics governing pollution dispersion. To assess the performance of the system, we deployed 59 self-designed portable sensing devices in two cities, operating for 14 days to collect air pollution data. Compared to the second-best performing algorithm, our model achieved improvements of up to 89.12% in MAE, 82.30% in RMSE, and 25.00% in MAPE, with extensive evaluations demonstrating that STeP-Diff effectively captures the spatio-temporal dependencies in air pollution fields.
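
For reference, the convection-diffusion dynamics mentioned above are commonly written in the textbook form below. This is only a sketch: the abstract does not state the exact formulation used in STeP-Diff, and the symbols $c$ (pollutant concentration), $\mathbf{u}$ (wind velocity), $D$ (a constant diffusion coefficient), $S$ (source term), and the weight $\lambda$ are assumptions introduced here for illustration. The second line shows a generic way a PDE residual can be added as a regularizer to a denoising objective, not necessarily the authors' loss.

```latex
\frac{\partial c}{\partial t} + \mathbf{u}\cdot\nabla c = D\,\nabla^{2} c + S

\mathcal{L} = \mathcal{L}_{\text{denoise}}
  + \lambda \left\| \frac{\partial c}{\partial t} + \mathbf{u}\cdot\nabla c - D\,\nabla^{2} c - S \right\|^{2}
```

When the residual term is driven toward zero, the predicted field satisfies the transport equation, which is the sense in which a prediction can be both fitted to measurements and consistent with the physics.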

13 May 2025

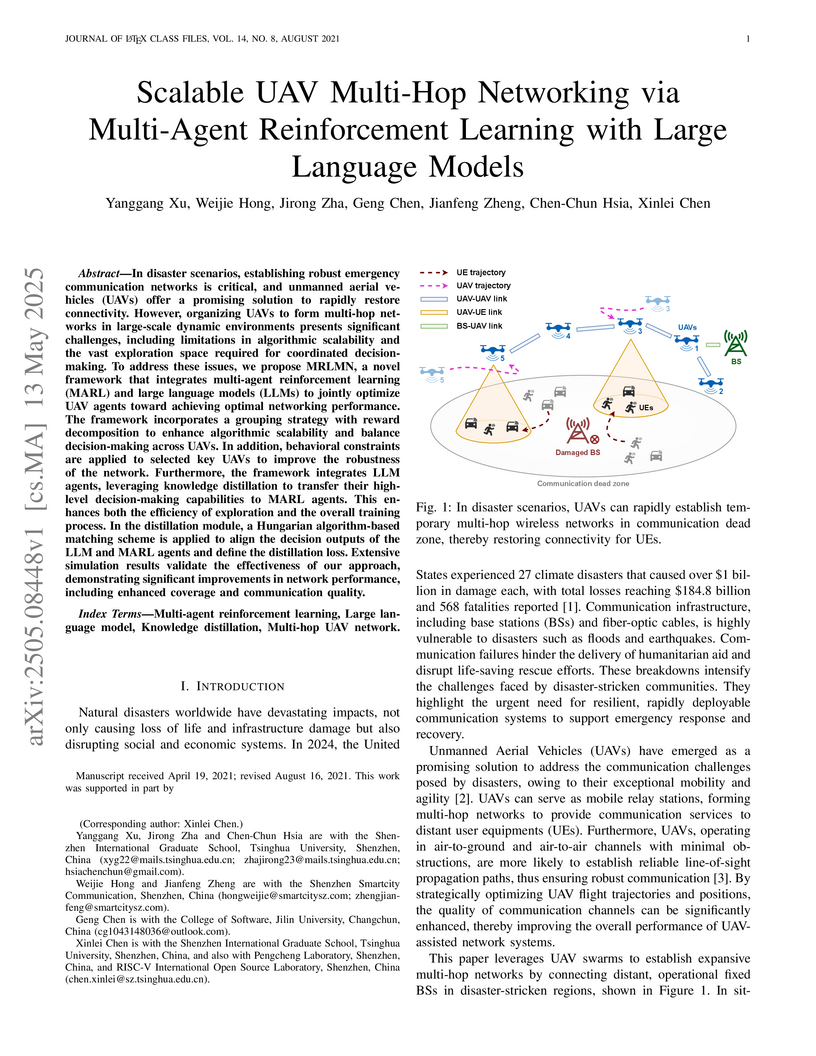

In disaster scenarios, establishing robust emergency communication networks is critical, and unmanned aerial vehicles (UAVs) offer a promising solution to rapidly restore connectivity. However, organizing UAVs to form multi-hop networks in large-scale dynamic environments presents significant challenges, including limitations in algorithmic scalability and the vast exploration space required for coordinated decision-making. To address these issues, we propose MRLMN, a novel framework that integrates multi-agent reinforcement learning (MARL) and large language models (LLMs) to jointly optimize UAV agents toward achieving optimal networking performance. The framework incorporates a grouping strategy with reward decomposition to enhance algorithmic scalability and balance decision-making across UAVs. In addition, behavioral constraints are applied to selected key UAVs to improve the robustness of the network. Furthermore, the framework integrates LLM agents, leveraging knowledge distillation to transfer their high-level decision-making capabilities to MARL agents. This enhances both the efficiency of exploration and the overall training process. In the distillation module, a Hungarian algorithm-based matching scheme is applied to align the decision outputs of the LLM and MARL agents and define the distillation loss. Extensive simulation results validate the effectiveness of our approach, demonstrating significant improvements in network performance, including enhanced coverage and communication quality.
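
The Hungarian-matching step described above can be illustrated with a minimal sketch. It rests on assumptions the abstract does not confirm: each decision is taken to be a 2D target position, the matching cost is squared Euclidean distance, and the distillation loss is the mean matched cost. The function names `hungarian` and `distillation_loss` are hypothetical, and the code is not the MRLMN implementation.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def hungarian(cost: Sequence[Sequence[float]]) -> List[int]:
    """Minimum-cost assignment for an n x m cost matrix with n <= m.

    Returns a list where entry i is the column assigned to row i.
    Standard O(n^2 * m) Hungarian algorithm using row/column potentials.
    """
    n, m = len(cost), len(cost[0])
    inf = float("inf")
    u = [0.0] * (n + 1)      # row potentials (1-indexed)
    v = [0.0] * (m + 1)      # column potentials (1-indexed)
    p = [0] * (m + 1)        # p[j] = row matched to column j, 0 if none
    way = [0] * (m + 1)      # back-pointers along the augmenting path
    for i in range(1, n + 1):
        p[0] = i
        j0 = 0
        minv = [inf] * (m + 1)
        used = [False] * (m + 1)
        while True:
            used[j0] = True
            i0 = p[j0]
            delta, j1 = inf, 0
            for j in range(1, m + 1):
                if not used[j]:
                    cur = cost[i0 - 1][j - 1] - u[i0] - v[j]
                    if cur < minv[j]:
                        minv[j] = cur
                        way[j] = j0
                    if minv[j] < delta:
                        delta, j1 = minv[j], j
            for j in range(m + 1):
                if used[j]:
                    u[p[j]] += delta
                    v[j] -= delta
                else:
                    minv[j] -= delta
            j0 = j1
            if p[j0] == 0:   # reached a free column: augment
                break
        while True:
            j1 = way[j0]
            p[j0] = p[j1]
            j0 = j1
            if j0 == 0:
                break
    assignment = [-1] * n
    for j in range(1, m + 1):
        if p[j] != 0:
            assignment[p[j] - 1] = j - 1
    return assignment


def distillation_loss(marl: Sequence[Point], llm: Sequence[Point]):
    """Match each MARL decision to one LLM decision, then average the cost.

    Assumption: decisions are 2D positions and the cost is squared distance.
    """
    cost = [[(a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2 for b in llm] for a in marl]
    assignment = hungarian(cost)
    total = sum(cost[i][j] for i, j in enumerate(assignment))
    return assignment, total / len(marl)


if __name__ == "__main__":
    marl_targets = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
    llm_targets = [(0.0, 9.0), (1.0, 0.0), (9.0, 1.0)]
    print(distillation_loss(marl_targets, llm_targets))
```

The matching matters because the LLM's suggestions come as an unordered set: without aligning them to specific agents first, a per-agent loss would penalize the MARL policy for an arbitrary ordering rather than for a real disagreement.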
