multiagent-systems

09 Dec 2025

Foundation models (FMs) are increasingly assuming the role of the "brain" of AI agents. While recent efforts have begun to equip FMs with native single-agent abilities -- such as GUI interaction or integrated tool use -- we argue that the next frontier is endowing FMs with native multi-agent intelligence. We identify four core capabilities of FMs in multi-agent contexts: understanding, planning, efficient communication, and adaptation. Contrary to assumptions about the spontaneous emergence of such abilities, we provide extensive empirical evidence across 41 large language models showing that strong single-agent performance alone does not automatically yield robust multi-agent intelligence. To address this gap, we outline key research directions -- spanning dataset construction, evaluation, training paradigms, and safety considerations -- for building FMs with native multi-agent intelligence.

09 Dec 2025

Large Language Models and multi-agent systems have shown promise in decomposing complex tasks, yet they struggle with long-horizon reasoning and escalating computational cost. This work introduces a hierarchical multi-agent architecture that distributes reasoning across a 64×64 grid of lightweight agents, supported by a selective oracle. A spatial curriculum progressively expands the operational region of the grid, ensuring that agents master easier central tasks before tackling harder peripheral ones. To improve reliability, the system uses Negative Log-Likelihood (NLL) as a measure of confidence, allowing the curriculum to prioritize regions where agents are both accurate and well calibrated. A Thompson Sampling curriculum manager adaptively chooses training zones based on competence and NLL-driven reward signals. We evaluate the approach on a spatially grounded Tower of Hanoi benchmark, which mirrors the long-horizon structure of many robotic manipulation and planning tasks. Results demonstrate improved stability, reduced oracle usage, and stronger long-range reasoning from distributed agent cooperation.
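To make the curriculum manager more concrete, here is a minimal sketch of Thompson Sampling over training zones. It assumes a Beta-Bernoulli model per zone and a hypothetical reward that is zero for a wrong answer and grows as the NLL falls; the class name `ZoneBandit`, the function `reward_from`, and the cap `nll_cap` are illustrative and are not taken from the paper.

```python
import random


class ZoneBandit:
    """Beta-Bernoulli Thompson Sampling over a set of training zones."""

    def __init__(self, zones, seed=0):
        self.rng = random.Random(seed)
        # One Beta(alpha, beta) posterior per zone, starting from a uniform prior.
        self.posterior = {z: [1.0, 1.0] for z in zones}

    def choose(self):
        # Draw one sample per zone and pick the zone with the largest draw.
        draws = {z: self.rng.betavariate(a, b) for z, (a, b) in self.posterior.items()}
        return max(draws, key=draws.get)

    def update(self, zone, reward):
        # reward is in [0, 1]; fractional rewards update both pseudo-counts.
        a, b = self.posterior[zone]
        self.posterior[zone] = [a + reward, b + (1.0 - reward)]


def reward_from(correct, nll, nll_cap=5.0):
    """Hypothetical reward: 0 if wrong, otherwise higher when the NLL is lower."""
    if not correct:
        return 0.0
    return 1.0 - min(nll, nll_cap) / nll_cap


if __name__ == "__main__":
    bandit = ZoneBandit(["center", "ring", "edge"])
    zone = bandit.choose()
    bandit.update(zone, reward_from(correct=True, nll=0.4))
    print(zone, bandit.posterior[zone])
```

Under these assumptions, zones whose agents answer correctly with low NLL accumulate posterior mass and are sampled more often, while uncertain zones are still explored occasionally.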

09 Dec 2025

A multi-agent intelligence framework enhances multidisciplinary decision-making in gastrointestinal oncology by mirroring human team collaboration. This system achieved a composite expert evaluation score of 4.60/5.00, outperforming monolithic baselines (3.76/5.00) in medical accuracy and reasoning, while mitigating issues like hallucination and context dilution.

08 Dec 2025

Luxembourg Institute of Science and Technology; Teesside University; Ho Chi Minh City University of Technology (HCMUT); Ho Chi Minh City University of Science (HCMUS); Vietnam National University - Ho Chi Minh City (VNU-HCM)

As Large Language Models (LLMs) increasingly operate as autonomous decision-makers in interactive multi-agent systems and human societies, understanding their strategic behaviour has profound implications for safety, coordination, and the design of AI-driven social and economic infrastructures. Assessing such behaviour requires methods that capture not only what LLMs output, but also the underlying intentions that guide their decisions. In this work, we extend the FAIRGAME framework to systematically evaluate LLM behaviour in repeated social dilemmas through two complementary advances: a payoff-scaled Prisoner's Dilemma that isolates sensitivity to incentive magnitude, and an integrated multi-agent Public Goods Game with dynamic payoffs and multi-agent histories. These environments reveal consistent behavioural signatures across models and languages, including incentive-sensitive cooperation, cross-linguistic divergence, and end-game alignment toward defection. To interpret these patterns, we train traditional supervised classification models on canonical repeated-game strategies and apply them to FAIRGAME trajectories, showing that LLMs exhibit systematic, model- and language-dependent behavioural intentions, with linguistic framing at times exerting effects as strong as architectural differences. Together, these findings provide a unified methodological foundation for auditing LLMs as strategic agents and reveal systematic cooperation biases with direct implications for AI governance, collective decision-making, and the design of safe multi-agent systems.
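The two game environments can be illustrated with a small sketch. The payoff values, the function names `scaled_pd` and `public_goods_payoffs`, and the static (single-round) form of the Public Goods Game are assumptions made for illustration; they are not the FAIRGAME implementation, and the dynamic payoffs mentioned above are not modelled.

```python
# Canonical Prisoner's Dilemma payoffs with T > R > P > S (illustrative values).
# Keys are (row move, column move); values are (row payoff, column payoff).
BASE_PD = {
    ("C", "C"): (3, 3),
    ("C", "D"): (0, 5),
    ("D", "C"): (5, 0),
    ("D", "D"): (1, 1),
}


def scaled_pd(scale):
    """Multiply every payoff by `scale`, keeping the incentive ordering intact."""
    return {moves: (a * scale, b * scale) for moves, (a, b) in BASE_PD.items()}


def public_goods_payoffs(contributions, endowment, multiplier):
    """One round of a Public Goods Game: the pot is multiplied and split equally."""
    share = sum(contributions) * multiplier / len(contributions)
    return [endowment - c + share for c in contributions]


if __name__ == "__main__":
    print(scaled_pd(10)[("D", "C")])
    print(public_goods_payoffs([10, 0, 10, 0], endowment=10, multiplier=2.0))
```

Scaling leaves the strategic structure unchanged, so any shift in cooperation across scales can be attributed to the magnitude of the stakes alone. In the Public Goods example, the two free riders end the round with more than the two contributors, which is the dilemma the game is built to expose.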

Deception is a fundamental challenge for multi-agent reasoning: effective systems must strategically conceal information while detecting misleading behavior in others. Yet most evaluations reduce deception to static classification, ignoring the interactive, adversarial, and longitudinal nature of real deceptive dynamics. Large language models (LLMs) can deceive convincingly but remain weak at detecting deception in peers. We present WOLF, a multi-agent social deduction benchmark based on Werewolf that enables separable measurement of deception production and detection. WOLF embeds role-grounded agents (Villager, Werewolf, Seer, Doctor) in a programmable LangGraph state machine with strict night-day cycles, debate turns, and majority voting. Every statement is a distinct analysis unit, with self-assessed honesty from speakers and peer-rated deceptiveness from others. Deception is categorized via a standardized taxonomy (omission, distortion, fabrication, misdirection), while suspicion scores are longitudinally smoothed to capture both immediate judgments and evolving trust dynamics. Structured logs preserve prompts, outputs, and state transitions for full reproducibility. Across 7,320 statements and 100 runs, Werewolves produce deceptive statements in 31% of turns, while peer detection achieves 71-73% precision with ~52% overall accuracy. Precision is higher for identifying Werewolves, though false positives occur against Villagers. Suspicion toward Werewolves rises from ~52% to over 60% across rounds, while suspicion toward Villagers and the Doctor stabilizes near 44-46%. This divergence shows that extended interaction improves recall against liars without compounding errors against truthful roles. WOLF moves deception evaluation beyond static datasets, offering a dynamic, controlled testbed for measuring deceptive and detective capacity in adversarial multi-agent interaction.
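As a rough illustration of longitudinal smoothing, the sketch below applies an exponential moving average to per-round suspicion scores. The abstract does not say which smoothing rule WOLF uses, so the choice of an exponential moving average, the function name `smooth_suspicion`, and the weight `alpha` are assumptions.

```python
def smooth_suspicion(scores, alpha=0.5):
    """Exponentially smooth a sequence of per-round suspicion scores in [0, 1].

    alpha weights the newest judgment; (1 - alpha) weights the running history.
    """
    smoothed = []
    current = None
    for score in scores:
        # The first round has no history, so it is taken as-is.
        current = score if current is None else alpha * score + (1 - alpha) * current
        smoothed.append(current)
    return smoothed


if __name__ == "__main__":
    print(smooth_suspicion([0.5, 1.0, 0.0], alpha=0.5))
```

A larger `alpha` tracks immediate judgments more closely, while a smaller one emphasises the accumulated trust history.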

As AI agents built on large language models (LLMs) become increasingly embedded in society, issues of coordination, control, delegation, and accountability are entangled with concerns over their reliability. To design and implement LLM agents around reliable operations, we should consider the task complexity of the application setting and mitigate the agents' limitations while striving to minimize agent failures and optimize resource efficiency. High-functioning human organizations have faced similar balancing issues, which led to evidence-based theories that seek to understand their functioning strategies. We examine the parallels between LLM agents and compatible frameworks in organization science, focusing on how the design, scaling, and management of organizations can inform agentic systems and improve their reliability. We offer three preliminary accounts of organizational principles for AI agent engineering to attain reliability and effectiveness: balancing agency and capabilities in agent design, resource constraints and performance benefits in agent scaling, and internal and external mechanisms in agent management. Our work extends the growing exchanges between the operational and governance principles of AI systems and social systems to facilitate system integration.

Collaborative pursuit-evasion in cluttered environments presents significant challenges due to sparse rewards and constrained Fields of View (FOV). Standard Multi-Agent Reinforcement Learning (MARL) often suffers from inefficient exploration and fails to scale to large scenarios. We propose PGF-MAPPO (Path-Guided Frontier MAPPO), a hierarchical framework bridging topological planning with reactive control. To resolve local minima and sparse rewards, we integrate an A*-based potential field for dense reward shaping. Furthermore, we introduce Directional Frontier Allocation, combining Farthest Point Sampling (FPS) with geometric angle suppression to enforce spatial dispersion and accelerate coverage. The architecture employs a parameter-shared decentralized critic, maintaining O(1) model complexity suitable for robotic swarms. Experiments demonstrate that PGF-MAPPO achieves superior capture efficiency against faster evaders. Policies trained on 10x10 maps exhibit robust zero-shot generalization to unseen 20x20 environments, significantly outperforming rule-based and learning-based baselines.
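For readers unfamiliar with Farthest Point Sampling, a minimal greedy version is sketched below. It covers only the FPS step: the geometric angle suppression described above is not implemented, and the function name and the `start` parameter are illustrative rather than taken from PGF-MAPPO.

```python
import math


def farthest_point_sampling(points, k, start=0):
    """Greedy FPS: repeatedly pick the point farthest from those already chosen.

    points is a list of coordinate tuples; the return value is a list of indices.
    """
    if not points or k <= 0:
        return []
    chosen = [start]
    # dist[i] is the distance from point i to its nearest chosen point.
    dist = [math.dist(p, points[start]) for p in points]
    while len(chosen) < min(k, len(points)):
        nxt = max(range(len(points)), key=dist.__getitem__)
        chosen.append(nxt)
        dist = [min(d, math.dist(p, points[nxt])) for d, p in zip(dist, points)]
    return chosen


if __name__ == "__main__":
    frontiers = [(0, 0), (1, 0), (10, 0), (5, 0)]
    print(farthest_point_sampling(frontiers, k=3))
```

Because each new pick maximises the distance to the current selection, the chosen frontier targets spread out across the map instead of clustering.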

08 Dec 2025

Researchers from Ho Chi Minh City University of Technology and Teesside University investigated social welfare optimization in cooperation dilemmas, finding that strategies maximizing overall societal benefit often diverge from those solely minimizing institutional cost or maximizing cooperation frequency. Their work identifies distinct optimal incentive schemes when prioritizing social welfare in both well-mixed and structured populations.

26 Nov 2025

NVIDIA researchers developed ToolOrchestra, a method for training an 8-billion-parameter language model to act as an intelligent orchestrator, coordinating diverse models and tools to solve complex agentic tasks. This approach achieves state-of-the-art performance on benchmarks like Humanity's Last Exam while being 2.5 times more computationally efficient than frontier models and demonstrating robust generalization.

The emerging "agentic web" envisions large populations of autonomous agents coordinating, transacting, and delegating across open networks. Yet many agent communication and commerce protocols treat agents as low-cost identities, despite the empirical reality that LLM agents remain unreliable, prone to hallucination, manipulable, and vulnerable to prompt injection and tool abuse. A natural response is "agents-at-stake": binding economically meaningful, slashable collateral to persistent identities and adjudicating misbehavior with verifiable evidence. However, heterogeneous tasks make universal verification brittle and centralization-prone, while traditional reputation struggles under rapid model drift and opaque internal states. We propose a protocol-native alternative: insured agents. Specialized insurer agents post stake on behalf of operational agents in exchange for premiums, and receive privileged, privacy-preserving audit access via trusted execution environments (TEEs) to assess claims. A hierarchical insurer market calibrates stake through pricing, decentralizes verification via competitive underwriting, and yields incentive-compatible dispute resolution.

The rise of multi-agent systems powered by large language models (LLMs) and specialized reasoning agents exposes fundamental limitations in today's data management architectures. Traditional databases and data fabrics were designed for static, well-defined workloads, whereas agentic systems exhibit dynamic, context-driven, and collaborative behaviors. Agents continuously decompose tasks, shift attention across modalities, and share intermediate results with peers, producing non-deterministic, multi-modal workloads that strain conventional query optimizers and caching mechanisms. We propose an Agent-Centric Data Fabric, a unified architecture that rethinks how data systems serve, optimize, coordinate, and learn from agentic workloads. To achieve this, we exploit attention-guided data retrieval, semantic micro-caching for context-driven agent federations, predictive data prefetching, and quorum-based data serving. Together, these mechanisms enable agents to access representative data faster and more efficiently, while reducing redundant queries, data movement, and inference load across systems. By framing data systems as adaptive collaborators instead of static executors, we outline new research directions toward behaviorally responsive data infrastructures, where caching, probing, and orchestration jointly enable efficient, context-rich data exchange among dynamic, reasoning-driven agents.

05 Dec 2025

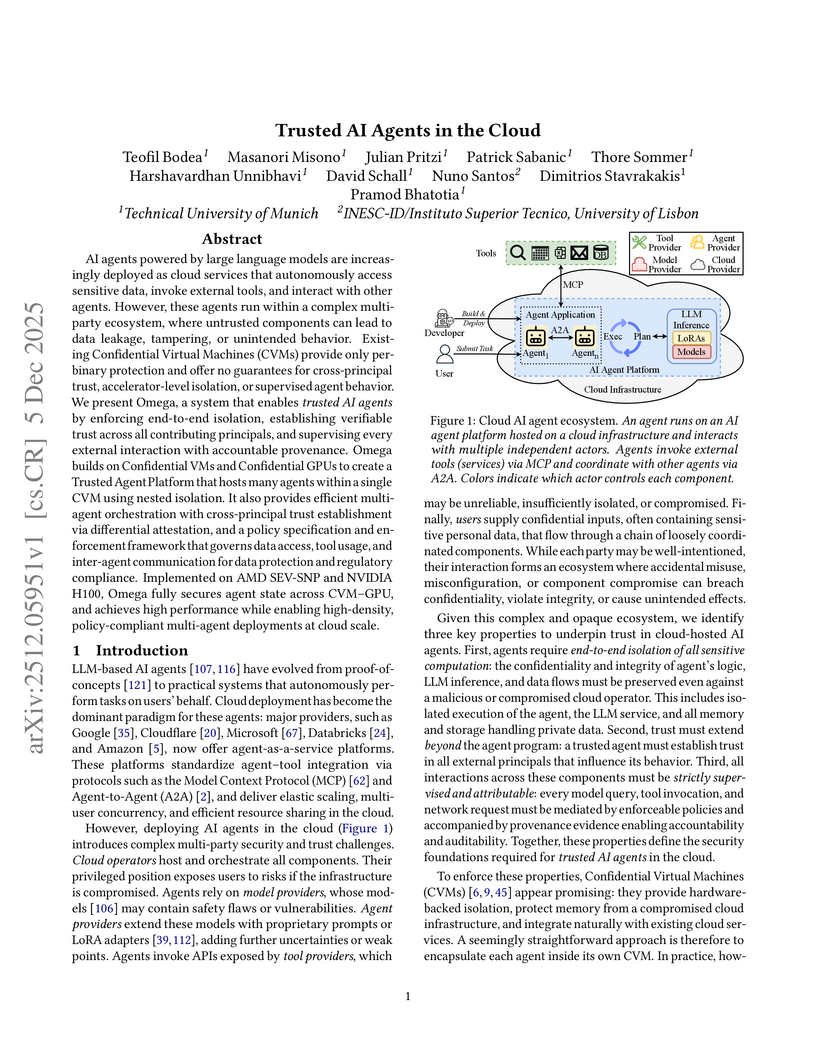

AI agents powered by large language models are increasingly deployed as cloud services that autonomously access sensitive data, invoke external tools, and interact with other agents. However, these agents run within a complex multi-party ecosystem, where untrusted components can lead to data leakage, tampering, or unintended behavior. Existing Confidential Virtual Machines (CVMs) provide only per-binary protection and offer no guarantees for cross-principal trust, accelerator-level isolation, or supervised agent behavior. We present Omega, a system that enables trusted AI agents by enforcing end-to-end isolation, establishing verifiable trust across all contributing principals, and supervising every external interaction with accountable provenance. Omega builds on Confidential VMs and Confidential GPUs to create a Trusted Agent Platform that hosts many agents within a single CVM using nested isolation. It also provides efficient multi-agent orchestration with cross-principal trust establishment via differential attestation, and a policy specification and enforcement framework that governs data access, tool usage, and inter-agent communication for data protection and regulatory compliance. Implemented on AMD SEV-SNP and NVIDIA H100, Omega fully secures agent state across the CVM and GPU, and achieves high performance while enabling high-density, policy-compliant multi-agent deployments at cloud scale.

Multi-agent role-playing has recently shown promise for studying social behavior with language agents, but existing simulations are mostly monolingual and fail to model cross-lingual interaction, an essential property of real societies. We introduce MASim, the first multilingual agent-based simulation framework that supports multi-turn interaction among generative agents with diverse sociolinguistic profiles. MASim offers two key analyses: (i) global public opinion modeling, by simulating how attitudes toward open-domain hypotheses evolve across languages and cultures, and (ii) media influence and information diffusion, via autonomous news agents that dynamically generate content and shape user behavior. To instantiate simulations, we construct the MAPS benchmark, which combines survey questions and demographic personas drawn from global population distributions. Experiments on calibration, sensitivity, consistency, and cultural case studies show that MASim reproduces sociocultural phenomena and highlights the importance of multilingual simulation for scalable, controlled computational social science.

Analysing learning behaviour in Multi-Agent Reinforcement Learning (MARL) environments is challenging, particularly with respect to individual decision-making. Practitioners tend to study or compare MARL algorithms qualitatively, largely because of the inherent stochasticity of practical algorithms, which arises from random dithering exploration strategies, environment transition noise, and stochastic gradient updates, to name a few. Traditional analytical approaches, such as replicator dynamics, often rely on mean-field approximations to remove stochastic effects. This simplification captures general trends, but it can lead to a mismatch between analytical predictions and the actual realisations of individual trajectories. In this paper, we propose a novel perspective on MARL systems by modelling them as coupled stochastic dynamical systems, capturing both agent interactions and environmental characteristics. Leveraging tools from dynamical systems theory, we analyse the stability and sensitivity of agent behaviour at the individual level, which are key dimensions for practical deployment, for example in the presence of strict safety requirements. This framework allows us, for the first time, to rigorously study MARL dynamics while taking their inherent stochasticity into account, providing a deeper understanding of system behaviour and practical insights for the design and control of multi-agent learning processes.

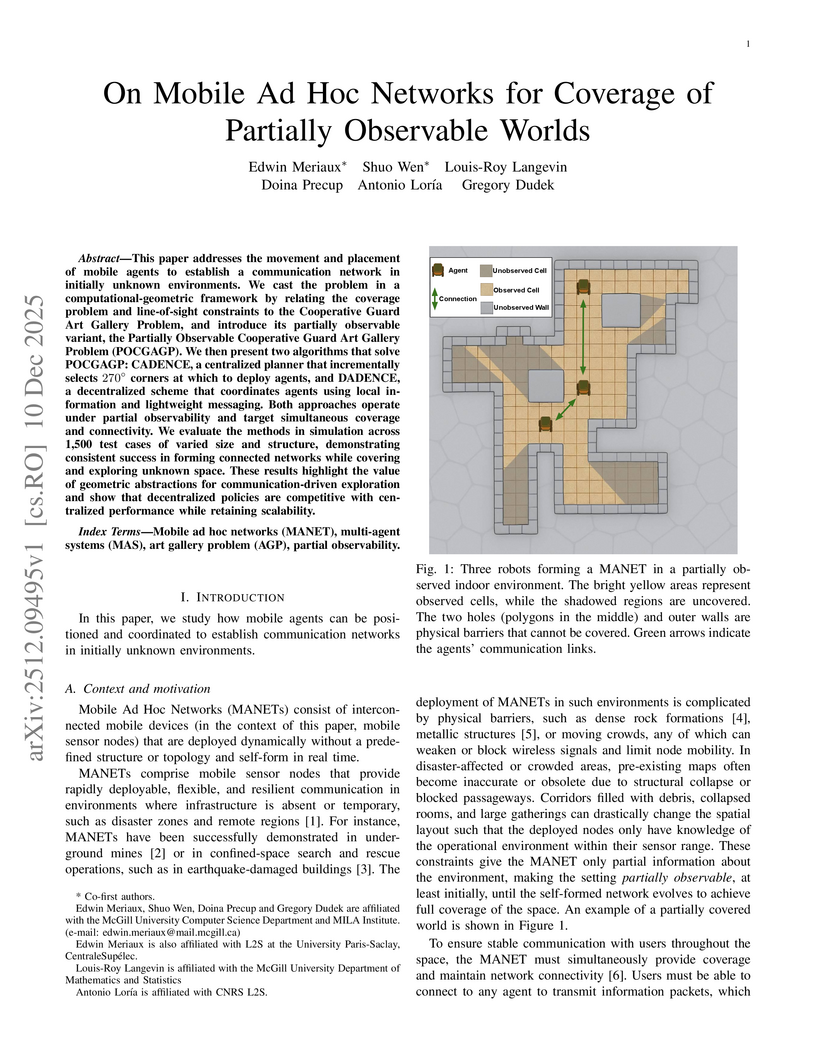

This paper addresses the movement and placement of mobile agents to establish a communication network in initially unknown environments. We cast the problem in a computational-geometric framework by relating the coverage problem and line-of-sight constraints to the Cooperative Guard Art Gallery Problem, and introduce its partially observable variant, the Partially Observable Cooperative Guard Art Gallery Problem (POCGAGP). We then present two algorithms that solve POCGAGP: CADENCE, a centralized planner that incrementally selects 270-degree corners at which to deploy agents, and DADENCE, a decentralized scheme that coordinates agents using local information and lightweight messaging. Both approaches operate under partial observability and target simultaneous coverage and connectivity. We evaluate the methods in simulation across 1,500 test cases of varied size and structure, demonstrating consistent success in forming connected networks while covering and exploring unknown space. These results highlight the value of geometric abstractions for communication-driven exploration and show that decentralized policies are competitive with centralized performance while retaining scalability.
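One way to read "270-degree corners" is as the reflex vertices of a rectilinear floor plan, where the interior angle is 270 degrees. Under that assumption, and assuming the full polygon is known and listed counter-clockwise (unlike the partially observable setting above), candidate corners can be found with a cross-product test. The function name `reflex_corners` is illustrative and is not part of CADENCE or DADENCE.

```python
def reflex_corners(polygon):
    """Return vertices whose interior angle exceeds 180 degrees.

    Assumes a simple polygon given as (x, y) tuples in counter-clockwise order.
    """
    n = len(polygon)
    corners = []
    for i in range(n):
        ax, ay = polygon[i - 1]            # previous vertex (wraps around)
        bx, by = polygon[i]                # vertex under test
        cx, cy = polygon[(i + 1) % n]      # next vertex (wraps around)
        cross = (bx - ax) * (cy - by) - (by - ay) * (cx - bx)
        if cross < 0:  # a right turn in a counter-clockwise polygon is reflex
            corners.append(polygon[i])
    return corners


if __name__ == "__main__":
    l_shaped_room = [(0, 0), (2, 0), (2, 1), (1, 1), (1, 2), (0, 2)]
    print(reflex_corners(l_shaped_room))
```

In the L-shaped room, the single inner corner is the only vertex from which both arms of the room are visible, which is why such corners are natural deployment candidates.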

This paper develops a geometric framework for modeling belief, motivation, and influence across cognitively heterogeneous agents. Each agent is represented by a personalized value space, a vector space encoding the internal dimensions through which the agent interprets and evaluates meaning. Beliefs are formalized as structured vectors (abstract beings) whose transmission is mediated by linear interpretation maps. A belief survives communication only if it avoids the null spaces of these maps, yielding a structural criterion for intelligibility, miscommunication, and belief death.

Within this framework, I show how belief distortion, motivational drift, counterfactual evaluation, and the limits of mutual understanding arise from purely algebraic constraints. A central result, the "No-Null-Space Leadership Condition," characterizes leadership as a property of representational reachability rather than persuasion or authority. More broadly, the model explains how abstract beings can propagate, mutate, or disappear as they traverse diverse cognitive geometries.

The account unifies insights from conceptual spaces, social epistemology, and AI value alignment by grounding meaning preservation in structural compatibility rather than shared information or rationality. I argue that this cognitive-geometric perspective clarifies the epistemic boundaries of influence in both human and artificial systems, and offers a general foundation for analyzing belief dynamics across heterogeneous agents.
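The survival criterion can be made concrete with a toy example: a belief vector "dies" when a listener's interpretation map sends it to zero. The two-dimensional map, the function names `interpret` and `survives`, and the numerical tolerance below are illustrative assumptions and not the paper's formalism.

```python
def interpret(matrix, belief):
    """Apply a linear interpretation map (a list of rows) to a belief vector."""
    return [sum(m * b for m, b in zip(row, belief)) for row in matrix]


def survives(matrix, belief, tol=1e-9):
    """A belief survives if its image under the map is not (numerically) zero."""
    return any(abs(x) > tol for x in interpret(matrix, belief))


if __name__ == "__main__":
    # Hypothetical listener who only registers the first value dimension.
    listener = [[1.0, 0.0],
                [0.0, 0.0]]
    print(survives(listener, [0.0, 1.0]))   # lies in the null space
    print(interpret(listener, [1.0, 1.0]))  # survives, but loses a dimension
```

The second call shows the weaker failure mode: the belief is still received, yet the component the listener cannot represent is silently dropped, which corresponds to distortion rather than death.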

Agent-Kernel, developed by researchers from Zhejiang University, Alibaba Group, and other institutions, introduces a microkernel multi-agent system framework for LLM-powered social simulations, featuring a society-centric modular architecture with strict agent-environment-action decoupling. This design enables high adaptability and configurability for large-scale simulations, demonstrated by replicating complex social phenomena and efficiently managing 10,000 agents in a distributed campus environment.

The generation of realistic and diverse traffic scenarios in simulation is essential for developing and evaluating autonomous driving systems. However, most simulation frameworks rely on rule-based or simplified models for scene generation, which lack the fidelity and diversity needed to represent real-world driving. While recent advances in generative modeling produce more realistic and context-aware traffic interactions, they often overlook how social preferences influence driving behavior. SocialDriveGen addresses this gap through a hierarchical framework that integrates semantic reasoning and social preference modeling with generative trajectory synthesis. By modeling egoism and altruism as complementary social dimensions, our framework enables controllable diversity in driver personalities and interaction styles. Experiments on the Argoverse 2 dataset show that SocialDriveGen generates diverse, high-fidelity traffic scenarios spanning cooperative to adversarial behaviors, significantly enhancing policy robustness and generalization to rare or high-risk situations.

05 Dec 2025

Zhongxing Telecom Equipment (ZTE), China, presents MARINE, a multi-agent framework that enhances Large Language Model reasoning at inference time by iteratively refining a persistent reference trajectory. The framework achieved 46.0% pass@1 accuracy on the BrowseComp-ZH benchmark and allowed 80B-parameter LLMs to match the performance of standalone 1000B-parameter models, demonstrating considerable parameter efficiency.

02 Dec 2025

This paper examines why safety mechanisms designed for human-model interaction do not scale to environments where large language models (LLMs) interact with each other. Most current governance practices still rely on single-agent safety containment, prompts, fine-tuning, and moderation layers that constrain individual model behavior but leave the dynamics of multi-model interaction ungoverned. These mechanisms assume a dyadic setting: one model responding to one user under stable oversight. Yet research and industrial development are rapidly shifting toward LLM-to-LLM ecosystems, where outputs are recursively reused as inputs across chains of agents. In such systems, local compliance can aggregate into collective failure even when every model is individually aligned. We propose a conceptual transition from model-level safety to system-level safety, introducing the framework of the Emergent Systemic Risk Horizon (ESRH) to formalize how instability arises from interaction structure rather than from isolated misbehavior. The paper contributes (i) a theoretical account of collective risk in interacting LLMs, (ii) a taxonomy connecting micro, meso, and macro-level failure modes, and (iii) a design proposal for InstitutionalAI, an architecture for embedding adaptive oversight within multi-agent systems.