Spirit AI

Robotic visuomotor policies achieve dramatically improved spatial generalization by removing proprioceptive state inputs. This approach leverages a relative end-effector action space and comprehensive egocentric vision to enable robust task performance across varied spatial configurations, while also enhancing data efficiency and cross-embodiment adaptation.

17 May 2025

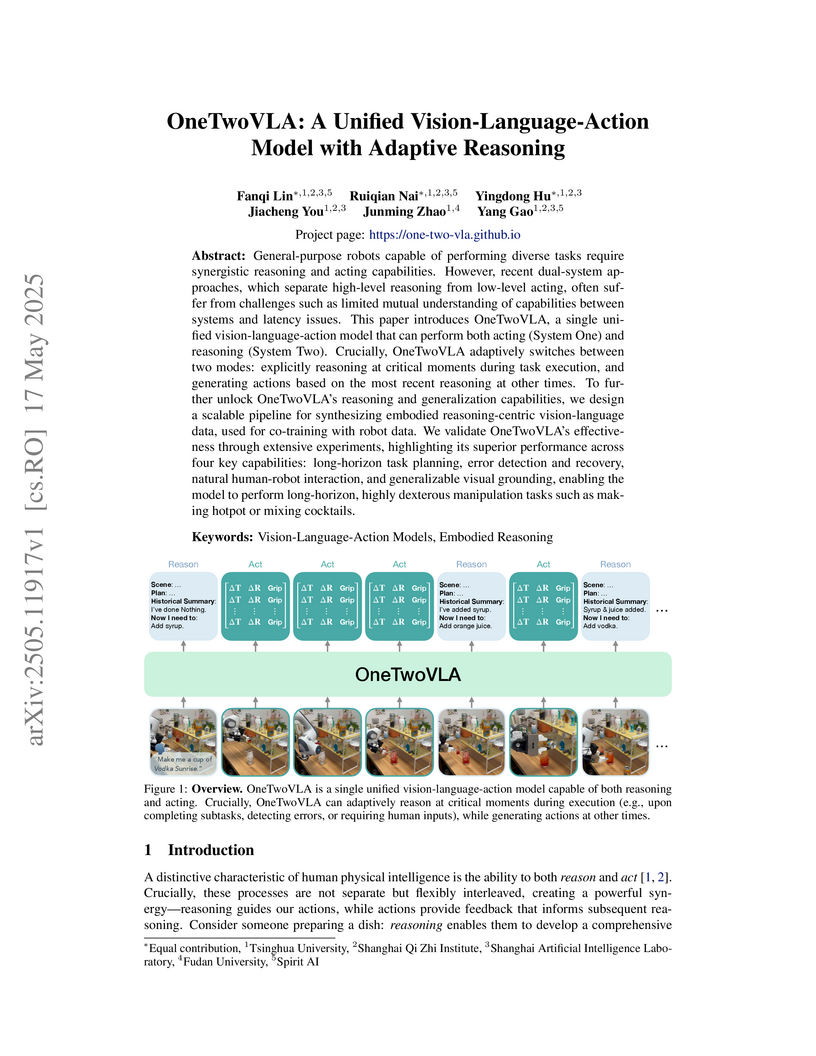

Researchers from Tsinghua University and collaborating institutions introduce OneTwoVLA, a unified vision-language-action model that dynamically switches between reasoning and acting modes for robotic control, alongside a scalable pipeline for generating embodied reasoning data that enables enhanced performance on long-horizon tasks while maintaining real-time responsiveness.

There are no more papers matching your filters at the moment.