Tehran Polytechnic

Researchers from the University of Washington and the Allen Institute for AI developed SELF-INSTRUCT, a framework that enables language models to generate their own instruction-following training data. This approach allows a GPT-3 model to achieve a 33.1% absolute improvement in ROUGE-L on the SUPER-NATURALINSTRUCTIONS benchmark and perform comparably to InstructGPT-001, demonstrating that self-generated data can effectively align language models with instructions with minimal human annotation.

UC Berkeley

UC Berkeley Microsoft

Microsoft Columbia University

Columbia University Allen Institute for AINational Univ. of SingaporeTCS ResearchTehran PolytechnicUniv. of WashingtonStanford Univ.Factored AIArizona State Univ.Univ of AmsterdamSharif Univ. of Tech.PSG College of Tech.Indian Institute of Tech.Government Polytechnic CollegeZycus InfotechNational Institute of Tech. KarnatakaUniv. of Massachusetts, Amherst

Allen Institute for AINational Univ. of SingaporeTCS ResearchTehran PolytechnicUniv. of WashingtonStanford Univ.Factored AIArizona State Univ.Univ of AmsterdamSharif Univ. of Tech.PSG College of Tech.Indian Institute of Tech.Government Polytechnic CollegeZycus InfotechNational Institute of Tech. KarnatakaUniv. of Massachusetts, AmherstResearchers at the Allen Institute for AI and the University of Washington created SUPER-NATURALINSTRUCTIONS, a public meta-dataset of 1,616 diverse NLP tasks, to advance instruction-following capabilities. Their Tk-INSTRUCT model, trained on this benchmark, outperformed the 175B-parameter InstructGPT by 9.9 ROUGE-L points on unseen English tasks and by 13.3 ROUGE-L points on unseen non-English tasks.

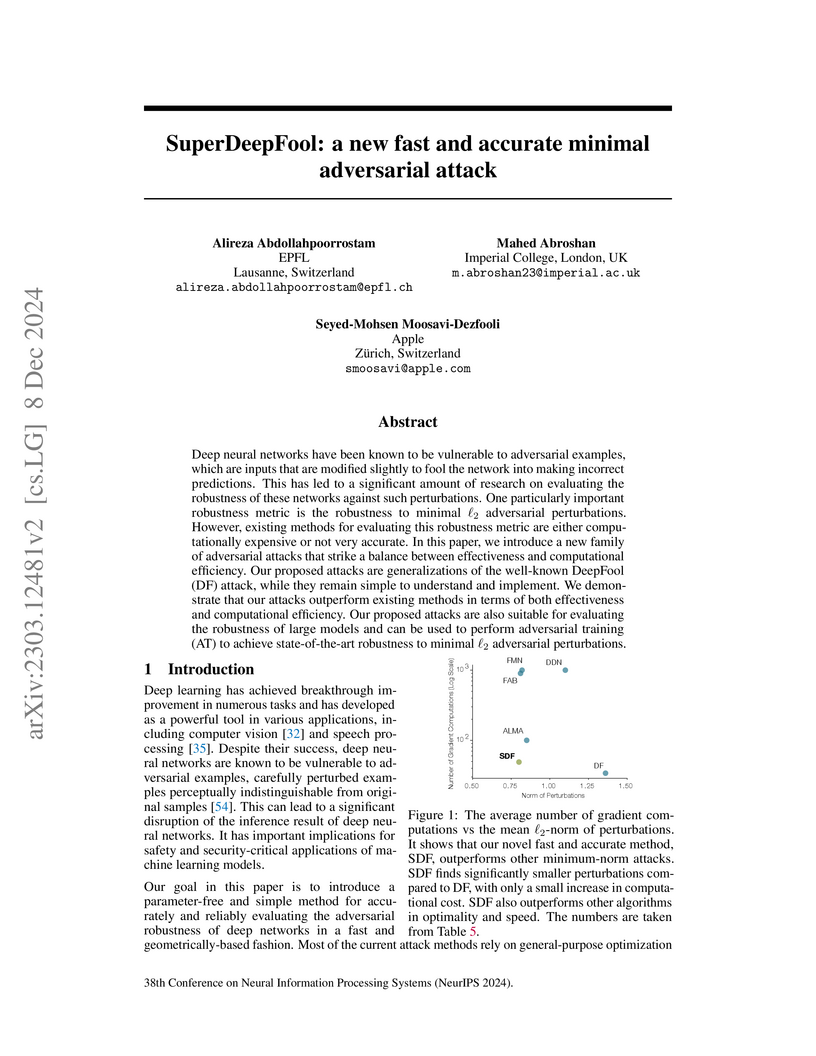

Deep neural networks have been known to be vulnerable to adversarial examples, which are inputs that are modified slightly to fool the network into making incorrect predictions. This has led to a significant amount of research on evaluating the robustness of these networks against such perturbations. One particularly important robustness metric is the robustness to minimal ℓ2 adversarial perturbations. However, existing methods for evaluating this robustness metric are either computationally expensive or not very accurate. In this paper, we introduce a new family of adversarial attacks that strike a balance between effectiveness and computational efficiency. Our proposed attacks are generalizations of the well-known DeepFool (DF) attack, while they remain simple to understand and implement. We demonstrate that our attacks outperform existing methods in terms of both effectiveness and computational efficiency. Our proposed attacks are also suitable for evaluating the robustness of large models and can be used to perform adversarial training (AT) to achieve state-of-the-art robustness to minimal ℓ2 adversarial perturbations.

We present UnifiedQA-v2, a QA model built with the same process as UnifiedQA, except that it utilizes more supervision -- roughly 3x the number of datasets used for UnifiedQA. This generally leads to better in-domain and cross-domain results.

There are no more papers matching your filters at the moment.