CNR-ILC

11 Jun 2024

Automatic methods for generating and gathering linguistic data have proven

effective for fine-tuning Language Models (LMs) in languages less resourced

than English. Still, while there has been emphasis on data quantity, less

attention has been given to its quality. In this work, we investigate the

impact of human intervention on machine-generated data when fine-tuning

dialogical models. In particular, we study (1) whether post-edited dialogues

exhibit higher perceived quality compared to the originals that were

automatically generated; (2) whether fine-tuning with post-edited dialogues

results in noticeable differences in the generated outputs; and (3) whether

post-edited dialogues influence the outcomes when considering the parameter

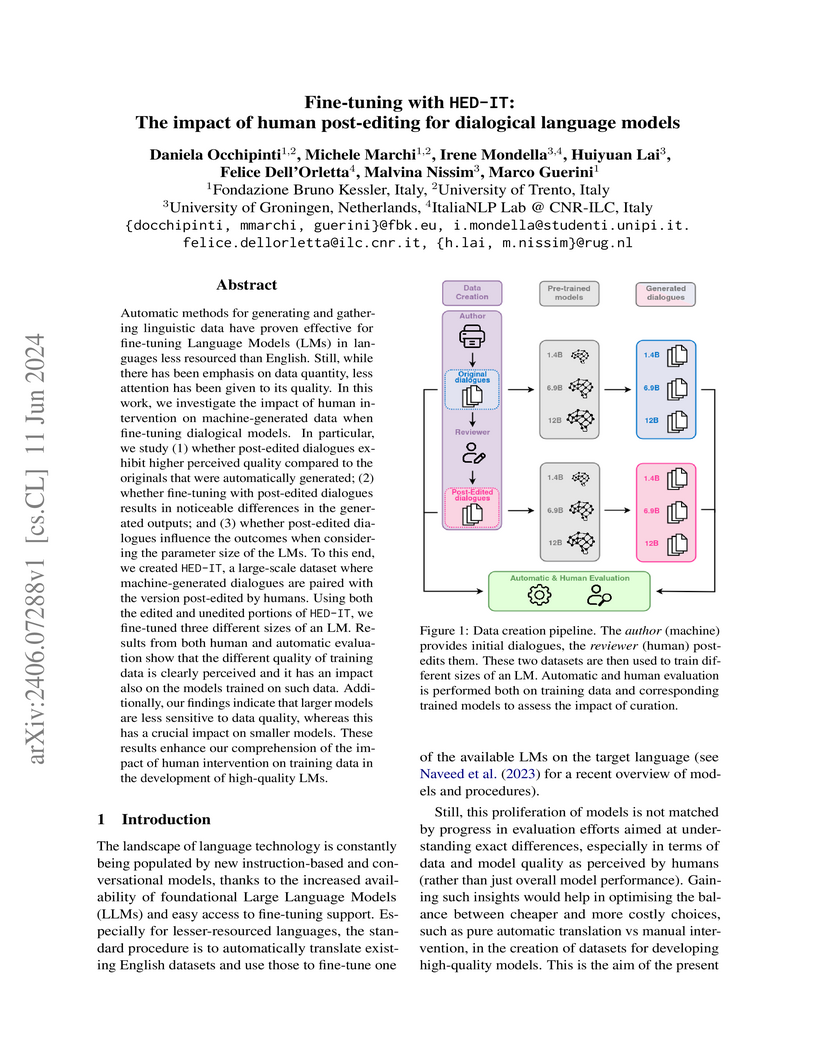

size of the LMs. To this end we created HED-IT, a large-scale dataset where

machine-generated dialogues are paired with the version post-edited by humans.

Using both the edited and unedited portions of HED-IT, we fine-tuned three

different sizes of an LM. Results from both human and automatic evaluation show

that the different quality of training data is clearly perceived and it has an

impact also on the models trained on such data. Additionally, our findings

indicate that larger models are less sensitive to data quality, whereas this

has a crucial impact on smaller models. These results enhance our comprehension

of the impact of human intervention on training data in the development of

high-quality LMs.

OWL ontologies are a quite popular way to describe structured knowledge in terms of classes, relations among classes and class instances. In this paper, given a target class T of an OWL ontology, with a focus on ontologies with real- and boolean-valued data properties, we address the problem of learning graded fuzzy concept inclusion axioms with the aim of describing enough conditions for being an individual classified as instance of the class T. To do so, we present PN-OWL that is a two-stage learning algorithm made of a P-stage and an N-stage. Roughly, in the P-stage the algorithm tries to cover as many positive examples as possible (increase recall), without compromising too much precision, while in the N-stage, the algorithm tries to rule out as many false positives, covered by the P-stage, as possible. PN-OWL then aggregates the fuzzy inclusion axioms learnt at the P-stage and the N-stage by combining them via aggregation functions to allow for a final decision whether an individual is instance of T or not. We also illustrate its effectiveness by means of an experimentation. An interesting feature is that fuzzy datatypes are built automatically, the learnt fuzzy concept inclusions can be represented directly into Fuzzy OWL 2 and, thus, any Fuzzy OWL 2 reasoner can then be used to automatically determine/classify (and to which degree) whether an individual belongs to the target class T or not.

There are no more papers matching your filters at the moment.