Hainan Institute of China University of Petroleum (Beijing)

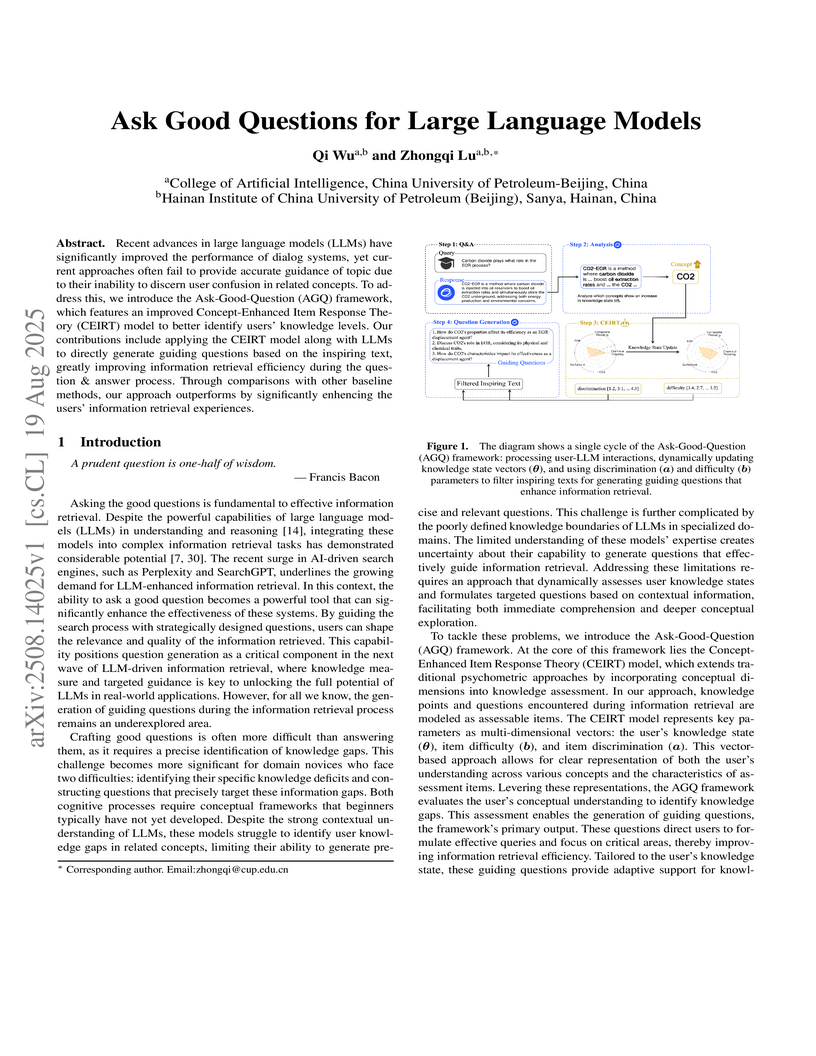

The Ask-Good-Question (AGQ) framework integrates a Concept-Enhanced Item Response Theory (CEIRT) model with Large Language Models (LLMs) to dynamically assess user knowledge gaps and generate tailored guiding questions. This system enhances information retrieval efficiency and user understanding in specialized domains by providing adaptive, personalized guidance.

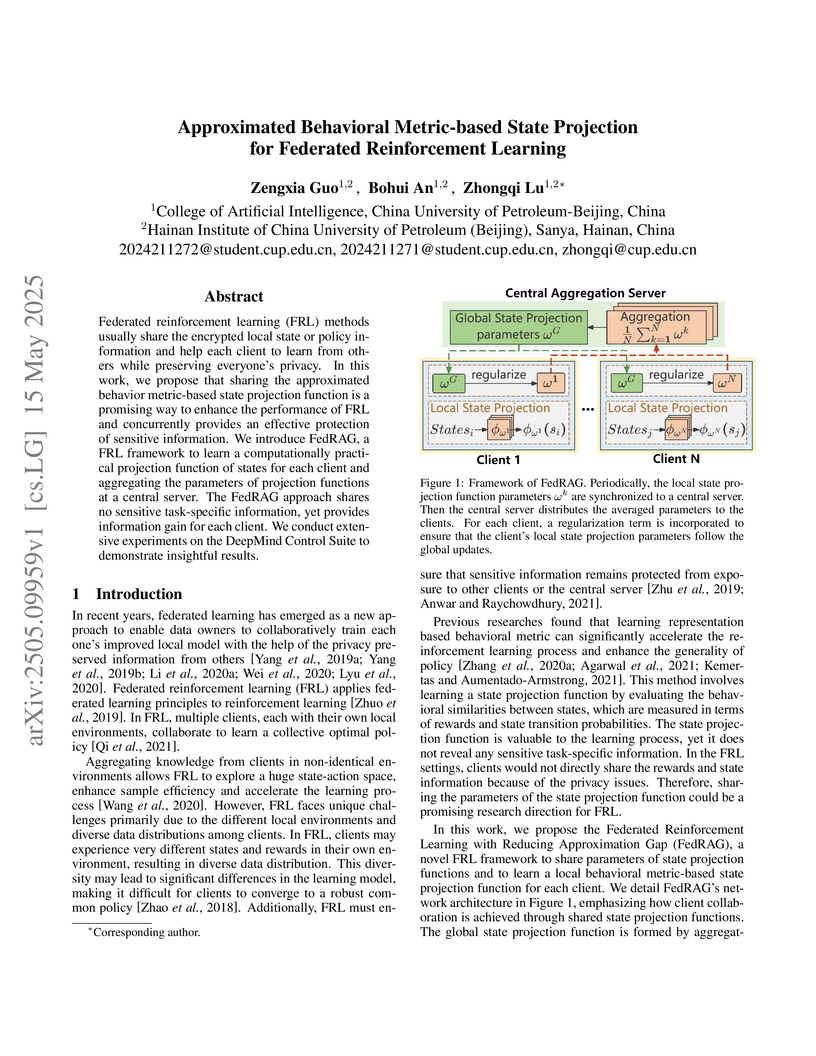

Federated reinforcement learning (FRL) methods usually share the encrypted

local state or policy information and help each client to learn from others

while preserving everyone's privacy. In this work, we propose that sharing the

approximated behavior metric-based state projection function is a promising way

to enhance the performance of FRL and concurrently provides an effective

protection of sensitive information. We introduce FedRAG, a FRL framework to

learn a computationally practical projection function of states for each client

and aggregating the parameters of projection functions at a central server. The

FedRAG approach shares no sensitive task-specific information, yet provides

information gain for each client. We conduct extensive experiments on the

DeepMind Control Suite to demonstrate insightful results.

There are no more papers matching your filters at the moment.