Institute of Computational Perception

Deep neural networks are known to be very demanding in terms of computing and memory requirements. Due to the ever-increasing use of embedded systems and mobile devices with a limited resource budget, designing low-complexity models without sacrificing too much of their predictive performance has gained great importance. In this work, we investigate and compare several well-known methods to reduce the number of parameters in neural networks. We further put these into the context of a recent study on the effect of the Receptive Field (RF) on a model's performance, and empirically show that we can achieve high-performing low-complexity models by applying specific restrictions on the RFs, in combination with parameter reduction methods. Additionally, we propose a filter-damping technique for regularizing the RF of models, without altering their architecture or changing their parameter counts. We show that incorporating this technique improves the performance in various low-complexity settings such as pruning and decomposed convolution. Using our proposed filter damping, we achieved the 1st rank at the DCASE-2020 Challenge in the task of Low-Complexity Acoustic Scene Classification.
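
To make the two quantities in this abstract concrete, here is a minimal sketch of how a receptive field and a parameter count can be computed for a stack of convolution layers. It is not taken from the paper: the function names are hypothetical, and the depthwise-separable factorization is only one common form of decomposed convolution, assumed here for illustration.

```python
def receptive_field(layers):
    """Receptive field (along one axis) of a stack of conv/pool layers.

    `layers` is a list of (kernel_size, stride) pairs, ordered input to output.
    """
    rf, jump = 1, 1  # jump: spacing, in input samples, between adjacent outputs
    for kernel, stride in layers:
        rf += (kernel - 1) * jump
        jump *= stride
    return rf


def conv_params(c_in, c_out, kernel):
    """Weights in a standard kernel x kernel convolution (bias ignored)."""
    return c_in * c_out * kernel * kernel


def separable_conv_params(c_in, c_out, kernel):
    """Weights in a depthwise (kernel x kernel) plus pointwise (1 x 1) pair.

    Assumption: this is one common decomposition, not necessarily the paper's.
    """
    return c_in * kernel * kernel + c_in * c_out


if __name__ == "__main__":
    # Three 3x3 layers, the middle one with stride 2.
    print(receptive_field([(3, 1), (3, 2), (3, 1)]))  # 9
    # 64 -> 128 channels with 3x3 kernels.
    print(conv_params(64, 128, 3), separable_conv_params(64, 128, 3))  # 73728 8768
```

Under these assumptions, smaller kernels or fewer strided layers shrink the receptive field, while the factorized layer cuts the weight count without changing the kernel size.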

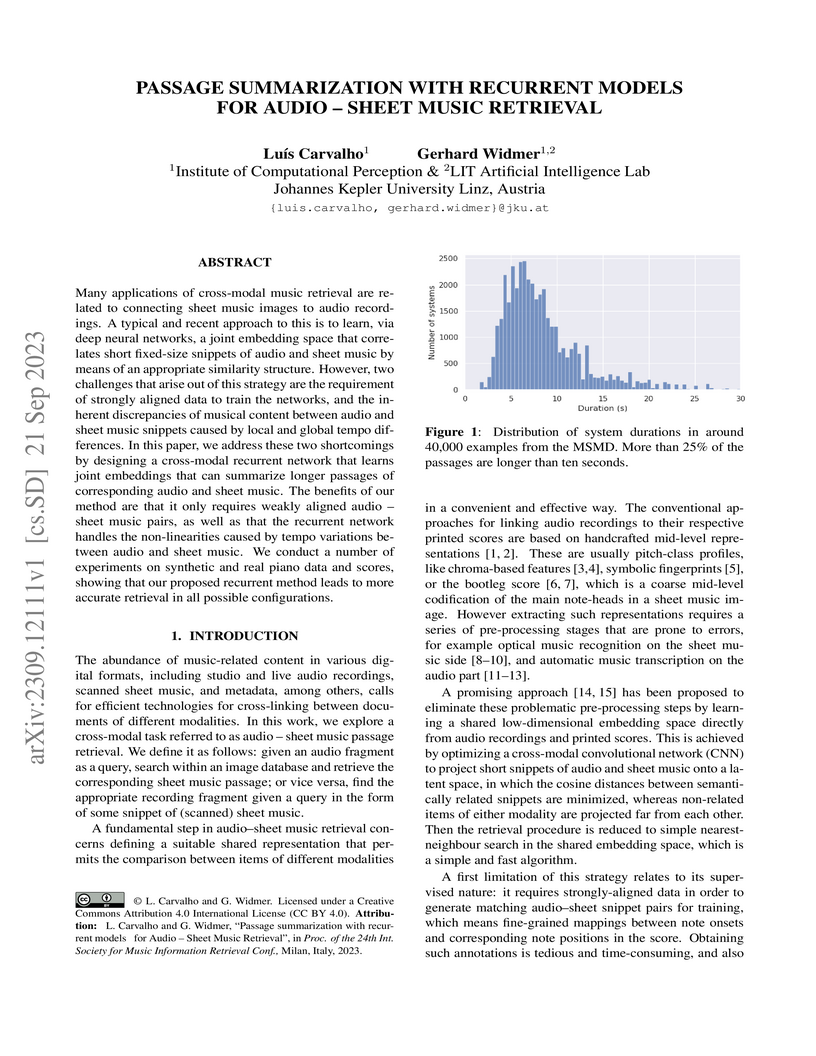

Many applications of cross-modal music retrieval are related to connecting sheet music images to audio recordings. A typical and recent approach to this is to learn, via deep neural networks, a joint embedding space that correlates short fixed-size snippets of audio and sheet music by means of an appropriate similarity structure. However, two challenges that arise out of this strategy are the requirement of strongly aligned data to train the networks, and the inherent discrepancies of musical content between audio and sheet music snippets caused by local and global tempo differences. In this paper, we address these two shortcomings by designing a cross-modal recurrent network that learns joint embeddings that can summarize longer passages of corresponding audio and sheet music. The benefits of our method are that it only requires weakly aligned audio-sheet music pairs, and that the recurrent network handles the non-linearities caused by tempo variations between audio and sheet music. We conduct a number of experiments on synthetic and real piano data and scores, showing that our proposed recurrent method leads to more accurate retrieval in all possible configurations.
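
Once both modalities live in a joint embedding space, retrieval reduces to a nearest-neighbor search. The sketch below assumes cosine similarity as the similarity measure and uses made-up two-dimensional embeddings; the function names and data are hypothetical, and the networks that would produce the embeddings are not shown.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_candidates(query, candidates):
    """Return candidate ids sorted from most to least similar to the query.

    `candidates` maps an id (e.g. a sheet music passage) to its embedding.
    """
    return sorted(
        candidates,
        key=lambda cid: cosine_similarity(query, candidates[cid]),
        reverse=True,
    )


if __name__ == "__main__":
    audio_query = [1.0, 0.0]  # hypothetical embedding of an audio passage
    scores = {"score_a": [0.9, 0.1], "score_b": [0.0, 1.0]}
    print(rank_candidates(audio_query, scores))  # ['score_a', 'score_b']
```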

02 Jul 2024

Matching raw audio signals with textual descriptions requires understanding the audio's content and the description's semantics and then drawing connections between the two modalities. This paper investigates a hybrid retrieval system that utilizes audio metadata as an additional clue to understand the content of audio signals before matching them with textual queries. We experimented with metadata often attached to audio recordings, such as keywords and natural-language descriptions, and we investigated late and mid-level fusion strategies to merge audio and metadata. Our hybrid approach with keyword metadata and late fusion improved the retrieval performance over a content-based baseline by 2.36 and 3.69 percentage points in mAP@10 on the ClothoV2 and AudioCaps benchmarks, respectively.
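
As a rough illustration of late fusion and of the mAP@10 metric, the sketch below combines two per-item similarity scores with a weighted sum and then scores the resulting ranking. The weight `alpha`, the function names, and the toy scores are all assumptions for illustration; the paper's actual fusion rule is not given in this abstract, and the average-precision formula shown is one common definition.

```python
def late_fusion(audio_scores, metadata_scores, alpha=0.5):
    """Weighted sum of query-to-audio and query-to-metadata similarities.

    Both inputs map item id -> similarity to the text query.
    `alpha` is a hypothetical mixing weight in [0, 1].
    """
    return {
        item: alpha * audio_scores[item] + (1.0 - alpha) * metadata_scores[item]
        for item in audio_scores
    }


def average_precision_at_k(ranked, relevant, k=10):
    """AP@k: precision at each relevant hit in the top k, averaged."""
    if not relevant:
        return 0.0
    hits, total = 0, 0.0
    for position, item in enumerate(ranked[:k], start=1):
        if item in relevant:
            hits += 1
            total += hits / position
    return total / min(len(relevant), k)


def mean_average_precision_at_k(rankings, relevants, k=10):
    """Mean of AP@k over all queries."""
    aps = [average_precision_at_k(r, rel, k) for r, rel in zip(rankings, relevants)]
    return sum(aps) / len(aps)


if __name__ == "__main__":
    audio = {"clip_a": 0.2, "clip_b": 0.6}     # toy content-based scores
    metadata = {"clip_a": 0.9, "clip_b": 0.1}  # toy keyword-based scores
    fused = late_fusion(audio, metadata)
    ranking = sorted(fused, key=fused.get, reverse=True)
    print(ranking, average_precision_at_k(ranking, {"clip_a"}))
```

In this toy case the audio-only ranking would place `clip_b` first, while the fused ranking places the relevant `clip_a` first, which is the kind of effect the reported mAP@10 gain measures.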
