neural-and-evolutionary-computing

A Conditional Neural Cellular Automata (c-NCA) framework is introduced, enabling a single set of local rules to grow diverse structural digits from a single pixel, guided by a broadcasted class vector. The lightweight model achieves 96.30% recognition accuracy on generated digits by an external classifier and demonstrates robust emergent self-repair from significant damage without specific training.

Spiking neural networks excel at event-driven sensing, yet maintaining task-relevant context over long timescales and building these networks in hardware within tight energy and memory budgets remain core challenges in the field. We address these challenges through a novel algorithm-hardware co-design effort. At the algorithm level, inspired by the cortical fast-slow organization in the brain, we introduce a neural network with an explicit slow memory pathway that, combined with fast spiking activity, enables a dual memory pathway (DMP) architecture in which each layer maintains a compact low-dimensional state that summarizes recent activity and modulates spiking dynamics. This explicit memory stabilizes learning while preserving event-driven sparsity, achieving competitive accuracy on long-sequence benchmarks with 40-60% fewer parameters than equivalent state-of-the-art spiking neural networks. At the hardware level, we introduce a near-memory-compute architecture that fully leverages the advantages of the DMP architecture by retaining its compact shared state while optimizing dataflow across heterogeneous sparse-spike and dense-memory pathways. We show experimental results that demonstrate more than a 4x increase in throughput and over a 5x improvement in energy efficiency compared with state-of-the-art implementations. Together, these contributions demonstrate that biological principles can guide functional abstractions that are both algorithmically effective and hardware-efficient, establishing a scalable co-design paradigm for real-time neuromorphic computation and learning.

Medical decision-making makes frequent use of algorithms that combine risk equations with rules, providing clear and standardized treatment pathways. Symbolic regression (SR) traditionally limits its search space to continuous function forms and their parameters, making it difficult to model this decision-making. However, due to its ability to derive data-driven, interpretable models, SR holds promise for developing data-driven clinical risk scores. To that end we introduce Brush, an SR algorithm that combines decision-tree-like splitting algorithms with non-linear constant optimization, allowing for seamless integration of rule-based logic into symbolic regression and classification models. Brush achieves Pareto-optimal performance on SRBench, and was applied to recapitulate two widely used clinical scoring systems, achieving high accuracy and interpretable models. Compared to decision trees, random forests, and other SR methods, Brush achieves comparable or superior predictive performance while producing simpler models.

This work introduces Concrete Ticket Search (CTS), an algorithm that frames lottery ticket discovery as a holistic combinatorial optimization problem, efficiently finding sparse subnetworks that preserve the training dynamics of their dense counterparts. CTS, particularly with a knowledge distillation-inspired objective, achieves accuracy comparable to or better than Iterative Magnitude Pruning (LTR) at high sparsities while drastically reducing computational overhead and robustly passing sanity checks.

10 Dec 2025

Diverse scientific and engineering research areas deal with discrete, time-stamped changes in large systems of interacting delay differential equations. Simulating such complex systems at scale on high-performance computing clusters demands efficient management of communication and memory. Inspired by the human cerebral cortex -- a sparsely connected network of O(10^10) neurons, each forming O(10^3)--O(10^4) synapses and communicating via short electrical pulses called spikes -- we study the simulation of large-scale spiking neural networks for computational neuroscience research. This work presents a novel network construction method for multi-GPU clusters and upcoming exascale supercomputers using the Message Passing Interface (MPI), where each process builds its local connectivity and prepares the data structures for efficient spike exchange across the cluster during state propagation. We demonstrate scaling performance of two cortical models using point-to-point and collective communication, respectively.

GraphBench is introduced as a comprehensive benchmarking suite that unifies diverse tasks and domains in graph learning, addressing the fragmented nature of existing evaluation practices. It provides standardized datasets and protocols to rigorously assess graph neural networks and graph transformers, revealing their varying capabilities and persistent challenges across real-world applications and out-of-distribution generalization scenarios.

Understanding how protein mutations affect protein structure is essential for advancements in computational biology and bioinformatics. We introduce PRIMRose, a novel approach that predicts energy values for each residue given a mutated protein sequence. Unlike previous models that assess global energy shifts, our method analyzes the localized energetic impact of double amino acid insertions or deletions (InDels) at the individual residue level, enabling residue-specific insights into structural and functional disruption. We implement a Convolutional Neural Network architecture to predict the energy changes of each residue in a protein mutation. We train our model on datasets constructed from nine proteins, grouped into three categories: one set with exhaustive double InDel mutations, another with approximately 145k randomly sampled double InDel mutations, and a third with approximately 80k randomly sampled double InDel mutations. Our model achieves high predictive accuracy across a range of energy metrics as calculated by the Rosetta molecular modeling suite and reveals localized patterns that influence model performance, such as solvent accessibility and secondary structure context. This per-residue analysis offers new insights into the mutational tolerance of specific regions within proteins and provides more interpretable and biologically meaningful predictions of InDels' effects.

In this work, we propose a novel energy-efficient spiking neural network (SNN)-based receiver for 5G-NR OFDM systems, called the neuromorphic receiver (NeuromorphicRx), which replaces the channel estimation, equalization, and symbol demapping blocks. We leverage domain knowledge to design the input with spiking encoding and propose a deep convolutional SNN with spike-element-wise residual connections. We integrate the SNN with an artificial neural network (ANN) in a hybrid architecture to obtain soft outputs and employ surrogate gradient descent for training. We focus on generalization across diverse scenarios and on robustness through quantization-aware training. We also examine the interpretability of NeuromorphicRx and perform a detailed ablation study for 5G-NR signals. Our extensive numerical simulations show that NeuromorphicRx achieves a significant block error rate performance gain compared to 5G-NR receivers and performance similar to its ANN-based counterparts with 7.6x less energy consumption.

Neural architecture search (NAS) in expressive search spaces is a computationally hard problem, but it also holds the potential to automatically discover completely novel and performant architectures. To achieve this we need effective search algorithms that can identify powerful components and reuse them in new candidate architectures. In this paper, we introduce two adapted variants of the Smith-Waterman algorithm for local sequence alignment and use them to compute the edit distance in a grammar-based evolutionary architecture search. These algorithms enable us to efficiently calculate a distance metric for neural architectures and to generate a set of hybrid offspring from two parent models. This facilitates the deployment of crossover-based search heuristics, allows us to perform a thorough analysis on the architectural loss landscape, and track population diversity during search. We highlight how our method vastly improves computational complexity over previous work and enables us to efficiently compute shortest paths between architectures. When instantiating the crossover in evolutionary searches, we achieve competitive results, outperforming competing methods. Future work can build upon this new tool, discovering novel components that can be used more broadly across neural architecture design, and broadening its applications beyond NAS.
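For readers unfamiliar with the underlying technique, the following is a minimal sketch of the standard Smith-Waterman local alignment algorithm, applied to sequences of layer tokens. It is not the adapted variants from the paper: the token names, the scoring values (`match`, `mismatch`, `gap`), and the function name `smith_waterman` are all assumptions chosen for illustration.

```python
def smith_waterman(a, b, match=2, mismatch=-1, gap=-1):
    """Return (best_score, aligned_a, aligned_b) for the best local alignment.

    Gaps in either aligned sequence are represented by None.
    Scoring values are illustrative assumptions, not taken from the paper.
    """
    rows, cols = len(a) + 1, len(b) + 1
    # H[i][j] = best score of a local alignment ending at a[i-1], b[j-1]
    H = [[0] * cols for _ in range(rows)]
    best, best_pos = 0, (0, 0)

    for i in range(1, rows):
        for j in range(1, cols):
            sub = match if a[i - 1] == b[j - 1] else mismatch
            H[i][j] = max(
                0,                      # start a fresh local alignment
                H[i - 1][j - 1] + sub,  # align a[i-1] with b[j-1]
                H[i - 1][j] + gap,      # gap in b
                H[i][j - 1] + gap,      # gap in a
            )
            if H[i][j] > best:
                best, best_pos = H[i][j], (i, j)

    # Trace back from the best cell until the score drops to zero.
    i, j = best_pos
    out_a, out_b = [], []
    while i > 0 and j > 0 and H[i][j] > 0:
        sub = match if a[i - 1] == b[j - 1] else mismatch
        if H[i][j] == H[i - 1][j - 1] + sub:
            out_a.append(a[i - 1])
            out_b.append(b[j - 1])
            i, j = i - 1, j - 1
        elif H[i][j] == H[i - 1][j] + gap:
            out_a.append(a[i - 1])
            out_b.append(None)
            i -= 1
        else:
            out_a.append(None)
            out_b.append(b[j - 1])
            j -= 1
    return best, out_a[::-1], out_b[::-1]


# Two hypothetical architectures written as token sequences.
parent_a = ["conv", "relu", "pool", "conv", "relu", "linear"]
parent_b = ["linear", "conv", "relu", "linear"]
score, seg_a, seg_b = smith_waterman(parent_a, parent_b)
print(score, seg_a, seg_b)
```

The aligned segment identifies a shared sub-structure of the two parents, which is the kind of information a crossover operator or a distance measure could build on.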

The rapid advancement of artificial intelligence (AI) and deep learning (DL) has catalyzed the emergence of several optimization-driven subfields, notably neuromorphic computing and quantum machine learning. Leveraging the differentiable nature of hybrid models, researchers have explored their potential to address complex problems through unified optimization strategies. One such development is the Spiking Quantum Neural Network (SQNN), which combines principles from spiking neural networks (SNNs) and quantum computing. However, existing SQNN implementations often depend on pretrained SNNs due to the non-differentiable nature of spiking activity and the limited scalability of current SNN encoders. In this work, we propose a novel architecture, Spiking-Quantum Data Re-upload Convolutional Neural Network (SQDR-CNN), that enables joint training of convolutional SNNs and quantum circuits within a single backpropagation framework. Unlike its predecessor, SQDR-CNN converges to reasonable performance without relying on a pretrained spiking encoder or on dataset subsetting. We also clarify some theoretical foundations, test new designs using quantum data re-upload with different training algorithms and initializations, and evaluate the performance of the proposed model under noisy simulated quantum environments. As a result, we achieve 86% of the mean top-performing accuracy of the SOTA SNN baselines while using only 0.5% of the smallest spiking model's parameters. Through this integration of neuromorphic and quantum paradigms, we aim to open new research directions and foster technological progress in multi-modal, learnable systems.

We liberate Equilibrium Propagation (EP) from the limit of infinitesimal perturbations by establishing a finite-nudge foundation for local credit assignment. By modeling network states as Gibbs-Boltzmann distributions rather than deterministic points, we prove that the gradient of the difference in Helmholtz free energy between a nudged and free phase is exactly the difference in expected local energy derivatives. This validates the classic Contrastive Hebbian Learning update as an exact gradient estimator for arbitrary finite nudging, requiring neither infinitesimal approximations nor convexity. Furthermore, we derive a generalized EP algorithm based on the path integral of loss-energy covariances, enabling learning with strong error signals that standard infinitesimal approximations cannot support.
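The central identity can be written out as follows. This is a sketch in assumed notation, not the paper's own: $E(s,\theta)$ is the network energy, $L(s)$ is a loss that does not depend on $\theta$, $\beta$ is the nudging strength, and $T$ is the temperature.

```latex
\begin{align}
p_\beta(s) &= \frac{1}{Z_\beta}
  \exp\!\left(-\frac{E(s,\theta) + \beta\, L(s)}{T}\right),
  \qquad F_\beta(\theta) = -T \log Z_\beta, \\
\nabla_\theta F_\beta
  &= -\frac{T}{Z_\beta} \int
     \exp\!\left(-\frac{E(s,\theta) + \beta\, L(s)}{T}\right)
     \left(-\frac{\nabla_\theta E(s,\theta)}{T}\right) ds
   = \mathbb{E}_{p_\beta}\!\left[\nabla_\theta E\right], \\
\nabla_\theta \left(F_\beta - F_0\right)
  &= \mathbb{E}_{p_\beta}\!\left[\nabla_\theta E\right]
   - \mathbb{E}_{p_0}\!\left[\nabla_\theta E\right].
\end{align}
```

No step above requires $\beta$ to be small, which is why the contrast between nudged-phase and free-phase expectations is an exact gradient of the free-energy difference at any finite nudge.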

A tutorial provides a comprehensive framework for understanding the design principles, historical context, and future challenges of integrating speech modalities with Large Language Models to create spoken conversational agents. It synthesizes advancements and identifies key research directions while emphasizing ethical considerations.

Shogo Ohmae and Keiko Ohmae's perspective identifies predictive and generative world models as a common computational foundation underlying diverse functions in the neocortex, cerebellum, and modern attention-based AI systems. Their analysis reveals a shared principle where these models, acquired through prediction-error learning, are repurposed for prediction, understanding, and generation across various domains.

Quality-Diversity (QD) algorithms constitute a branch of optimization that is concerned with discovering a diverse and high-quality set of solutions to an optimization problem. Current QD methods commonly maintain diversity by dividing the behavior space into discrete regions, ensuring that solutions are distributed across different parts of the space. The QD problem is then solved by searching for the best solution in each region. This approach to QD optimization poses challenges in large solution spaces, where storing many solutions is impractical, and in high-dimensional behavior spaces, where discretization becomes ineffective due to the curse of dimensionality. We present an alternative framing of the QD problem, called Soft QD, that sidesteps the need for discretizations. We validate this formulation by demonstrating its desirable properties, such as monotonicity, and by relating its limiting behavior to the widely used QD Score metric. Furthermore, we leverage it to derive a novel differentiable QD algorithm, Soft QD Using Approximated Diversity (SQUAD), and demonstrate empirically that it is competitive with current state-of-the-art methods on standard benchmarks while offering better scalability to higher-dimensional problems.
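The discretized approach that Soft QD moves away from can be sketched as a grid archive that keeps the best solution per cell. This is an illustration of that conventional baseline, not of Soft QD or SQUAD. The helper names (`cell_index`, `insert`, `qd_score`), the bounds, and the bin counts are hypothetical, and the QD Score is taken here as the sum of fitness values over occupied cells, assuming non-negative fitness.

```python
def cell_index(behavior, lows, highs, bins):
    """Map a behavior descriptor to the index of its grid cell."""
    idx = []
    for x, lo, hi, n in zip(behavior, lows, highs, bins):
        frac = (x - lo) / (hi - lo)
        # Clamp so out-of-range descriptors land in a boundary cell.
        idx.append(min(n - 1, max(0, int(frac * n))))
    return tuple(idx)


def insert(archive, solution, fitness, behavior, lows, highs, bins):
    """Keep the solution only if its cell is empty or it beats the occupant."""
    key = cell_index(behavior, lows, highs, bins)
    if key not in archive or fitness > archive[key][0]:
        archive[key] = (fitness, solution)
        return True
    return False


def qd_score(archive):
    """Sum of the best fitness in every occupied cell."""
    return sum(fitness for fitness, _ in archive.values())


# A 2-D behavior space on [0, 1]^2 split into a 5 x 5 grid.
lows, highs, bins = (0.0, 0.0), (1.0, 1.0), (5, 5)
archive = {}
insert(archive, "s1", 1.0, (0.10, 0.10), lows, highs, bins)
insert(archive, "s2", 2.0, (0.15, 0.12), lows, highs, bins)  # same cell, better
insert(archive, "s3", 0.5, (0.90, 0.90), lows, highs, bins)
print(len(archive), qd_score(archive))
```

The number of cells is the product of the entries in `bins`, so it grows exponentially with the behavior dimension, which is the scaling problem the abstract attributes to discretization.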

We present Conformer-based decoders for the LibriBrain 2025 PNPL competition, targeting two foundational MEG tasks: Speech Detection and Phoneme Classification. Our approach adapts a compact Conformer to raw 306-channel MEG signals, with a lightweight convolutional projection layer and task-specific heads. For Speech Detection, a MEG-oriented SpecAugment provided a first exploration of MEG-specific augmentation. For Phoneme Classification, we used inverse-square-root class weighting and a dynamic grouping loader to handle 100-sample averaged examples. In addition, a simple instance-level normalization proved critical to mitigate distribution shifts on the holdout split. Using the official Standard track splits and F1-macro for model selection, our best systems achieved 88.9% (Speech) and 65.8% (Phoneme) on the leaderboard, surpassing the competition baselines and ranking within the top-10 in both tasks. For further implementation details, the technical documentation, source code, and checkpoints are available at this https URL.
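Inverse-square-root class weighting can be sketched as below. The abstract names the technique but not its exact form, so the normalization to a mean weight of 1 and the function name `inv_sqrt_class_weights` are assumptions made for illustration.

```python
import math
from collections import Counter


def inv_sqrt_class_weights(labels):
    """Weight each class by 1 / sqrt(count), rescaled so the mean weight is 1.

    The rescaling is an assumed convention; only the 1 / sqrt(count)
    proportionality is implied by the technique's name.
    """
    counts = Counter(labels)
    raw = {c: 1.0 / math.sqrt(n) for c, n in counts.items()}
    mean = sum(raw.values()) / len(raw)
    return {c: w / mean for c, w in raw.items()}


# Hypothetical imbalanced phoneme labels.
labels = ["aa"] * 100 + ["th"] * 25 + ["zh"] * 4
weights = inv_sqrt_class_weights(labels)
print(weights)
```

Compared with plain inverse-frequency weighting, the square root dampens the correction, so rare classes are boosted without dominating the loss.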

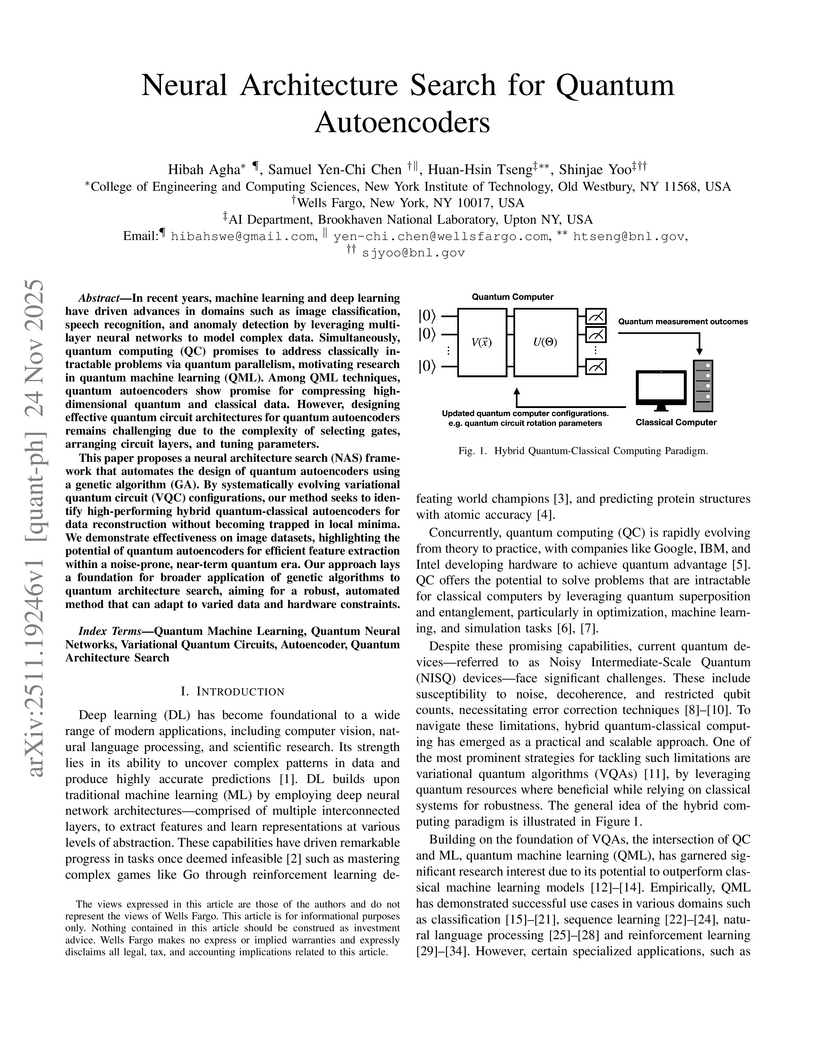

In recent years, machine learning and deep learning have driven advances in domains such as image classification, speech recognition, and anomaly detection by leveraging multi-layer neural networks to model complex data. Simultaneously, quantum computing (QC) promises to address classically intractable problems via quantum parallelism, motivating research in quantum machine learning (QML). Among QML techniques, quantum autoencoders show promise for compressing high-dimensional quantum and classical data. However, designing effective quantum circuit architectures for quantum autoencoders remains challenging due to the complexity of selecting gates, arranging circuit layers, and tuning parameters.

This paper proposes a neural architecture search (NAS) framework that automates the design of quantum autoencoders using a genetic algorithm (GA). By systematically evolving variational quantum circuit (VQC) configurations, our method seeks to identify high-performing hybrid quantum-classical autoencoders for data reconstruction without becoming trapped in local minima. We demonstrate effectiveness on image datasets, highlighting the potential of quantum autoencoders for efficient feature extraction within a noise-prone, near-term quantum era. Our approach lays a foundation for broader application of genetic algorithms to quantum architecture search, aiming for a robust, automated method that can adapt to varied data and hardware constraints.

Building self-improving AI systems remains a fundamental challenge in the AI domain. We present NNGPT, an open-source framework that turns a large language model (LLM) into a self-improving AutoML engine for neural network development, primarily for computer vision. Unlike previous frameworks, NNGPT extends the dataset of neural networks by generating new models, enabling continuous fine-tuning of LLMs based on a closed-loop system of generation, assessment, and self-improvement. It integrates five synergistic LLM-based pipelines within one unified workflow: zero-shot architecture synthesis, hyperparameter optimization (HPO), code-aware accuracy/early-stop prediction, retrieval-augmented synthesis of scope-closed PyTorch blocks (NN-RAG), and reinforcement learning. Built on the LEMUR dataset as an audited corpus with reproducible metrics, NNGPT emits and validates the network architecture, preprocessing code, and hyperparameters from a single prompt, executes them end-to-end, and learns from the results. The PyTorch adapter makes NNGPT framework-agnostic, enabling strong performance: NN-RAG achieves 73% executability on 1,289 targets, 3-shot prompting boosts accuracy on common datasets, and hash-based deduplication saves hundreds of runs. One-shot prediction matches search-based AutoML, reducing the need for numerous trials. HPO on LEMUR achieves RMSE 0.60, outperforming Optuna (0.64), while the code-aware predictor reaches RMSE 0.14 with Pearson r=0.78. The system has already generated over 5K validated models, demonstrating NNGPT as an autonomous AutoML engine. Upon acceptance, the code, prompts, and checkpoints will be released for public access to enable reproducibility and facilitate community usage.

Google DeepMind, in collaboration with mathematicians from Brown University and UCLA, developed AlphaEvolve, an AI system that autonomously discovers and improves mathematical constructions across various domains. The system achieved new state-of-the-art results in problems like finite field Kakeya sets, autocorrelation inequalities, and kissing numbers, inspiring new theoretical work.

Researchers from UC Berkeley's School of Information enhanced the Spectral Neuro-Symbolic Reasoning framework by integrating neural-linguistic models for graph refinement, leading to consistent accuracy improvements across reasoning benchmarks while maintaining sublinear inference complexity. The framework now semantically merges nodes, filters entailments, and aligns with external knowledge graphs to address real-world semantic noise and incompleteness.

The rapid evolution of generative adversarial networks (GANs) and diffusion models has made synthetic media increasingly realistic, raising societal concerns around misinformation, identity fraud, and digital trust. Existing deepfake detection methods either rely on deep learning, which suffers from poor generalization and vulnerability to distortions, or forensic analysis, which is interpretable but limited against new manipulation techniques. This study proposes a hybrid framework that fuses forensic features, including noise residuals, JPEG compression traces, and frequency-domain descriptors, with deep learning representations from convolutional neural networks (CNNs) and vision transformers (ViTs). Evaluated on benchmark datasets (FaceForensics++, Celeb-DF v2, DFDC), the proposed model consistently outperformed single-method baselines and demonstrated superior performance compared to existing state-of-the-art hybrid approaches, achieving F1-scores of 0.96, 0.82, and 0.77, respectively. Robustness tests demonstrated stable performance under compression (F1 = 0.87 at QF = 50), adversarial perturbations (AUC = 0.84), and unseen manipulations (F1 = 0.79). Importantly, explainability analysis showed that Grad-CAM and forensic heatmaps overlapped with ground-truth manipulated regions in 82 percent of cases, enhancing transparency and user trust. These findings confirm that hybrid approaches provide a balanced solution, combining the adaptability of deep models with the interpretability of forensic cues, to develop resilient and trustworthy deepfake detection systems.
