Ask or search anything...

Jina AI's `jina-embeddings-v4` is a 3.8 billion-parameter model designed for universal multimodal and multilingual retrieval, featuring a unified VLM backbone that minimizes the modality gap between text and images. The model achieves state-of-the-art results on the Jina-VDR and ViDoRe benchmarks for visually rich document retrieval and shows competitive performance across diverse text and multimodal tasks.

View blogJina AI's jina-reranker-v3 model implements a "last but not late interaction" (LBNL) mechanism, enabling a Qwen3-0.6B transformer to concurrently process queries and multiple documents for listwise reranking. This method achieved a 61.85 nDCG@10 on the BEIR benchmark, exceeding the performance of significantly larger rerankers, and showed strong capabilities across diverse languages and specialized domains.

View blogThe research from Jina AI and Weaviate introduces "Late Chunking," a method that generates contextual chunk embeddings by first encoding an entire document with a long-context embedding model and then applying chunking and pooling. This approach consistently improves retrieval accuracy (nDCG@10 by 2.70-3.63% on BeIR benchmarks) for neural search and RAG systems while avoiding the computational costs of LLM-based contextualization.

View blogJina AI introduces jina-embeddings-v3, a 570-million-parameter multilingual text embedding model that leverages Task LoRA for efficient adaptation to diverse tasks. It achieves superior performance on MTEB English and multilingual benchmarks, especially in long-context retrieval up to 8192 tokens, surpassing larger LLM-based embeddings.

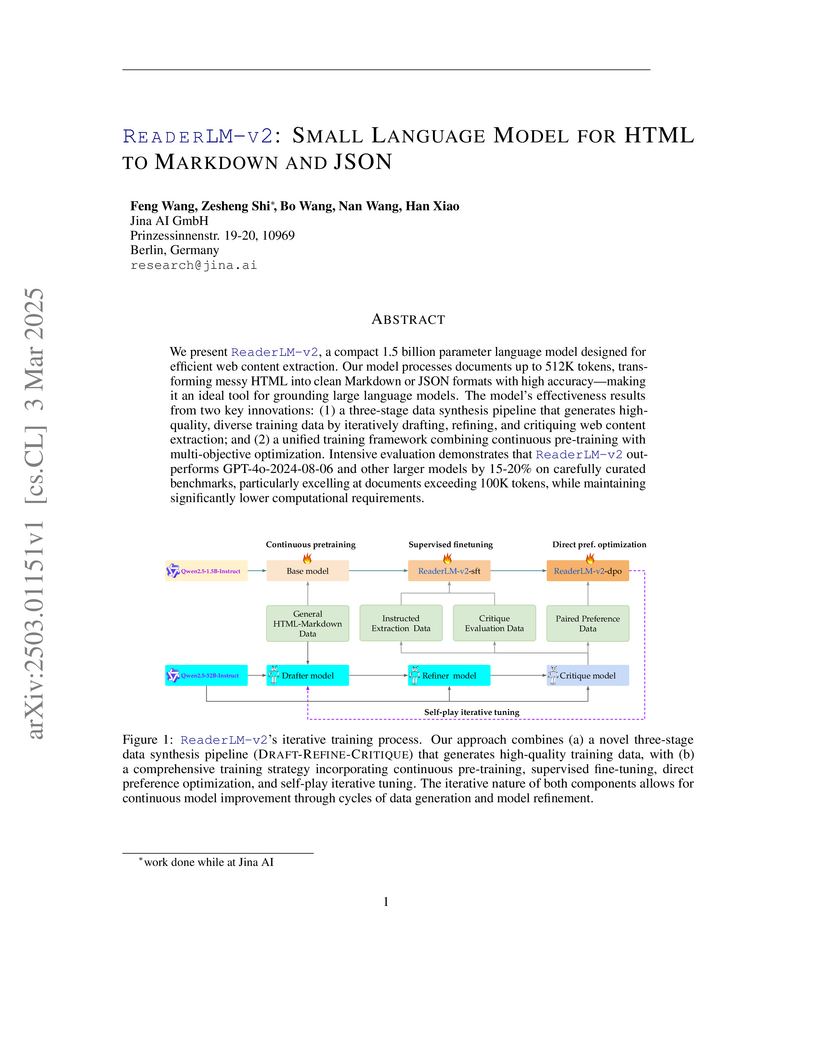

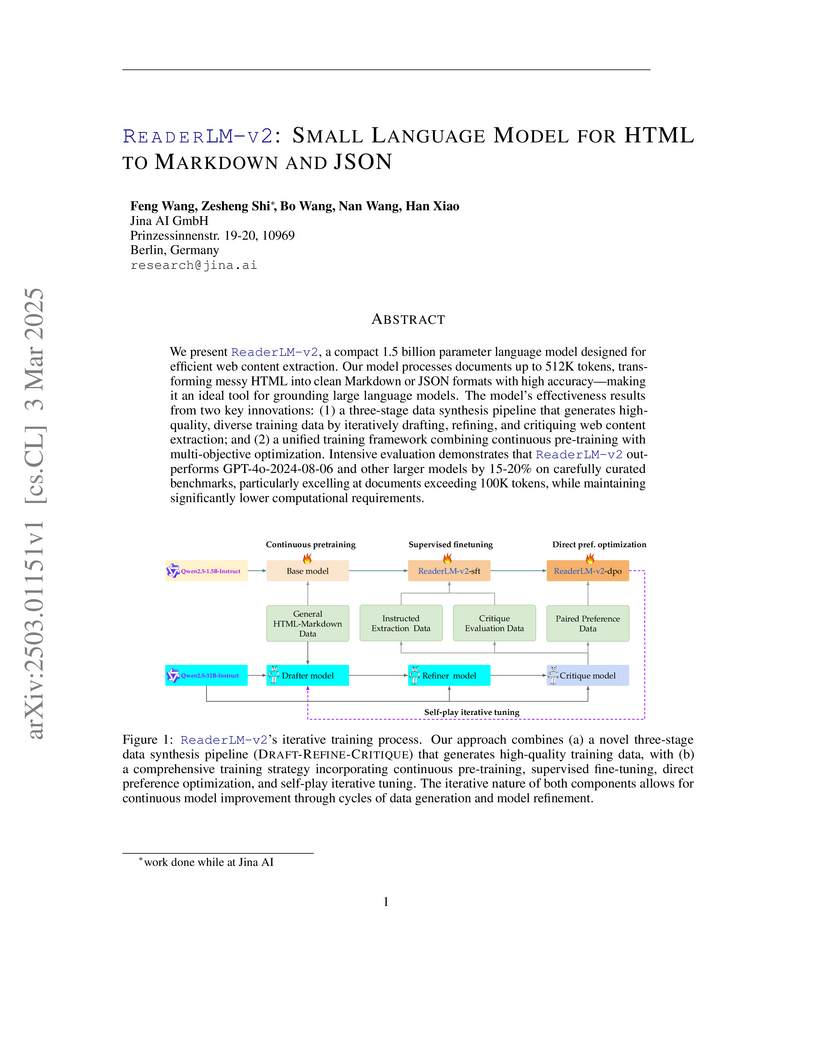

View blogJina AI researchers introduce ReaderLM-v2, a compact 1.5B parameter language model that outperforms GPT-4 by 15-20% in HTML-to-Markdown/JSON conversion tasks while handling documents up to 512K tokens, demonstrating that specialized smaller models can excel at structured content extraction through innovative three-stage training.

View blogJina AI introduced Jina Embeddings v2, the first open-source general-purpose text embedding model designed to process documents up to 8192 tokens, a 16x increase over typical limits. The model achieves competitive performance on standard benchmarks and demonstrates superior results on long-document specific tasks, outperforming proprietary models like OpenAI's `ada-embeddings-002` on the LoCo benchmark.

View blog

University of Pittsburgh

University of Pittsburgh

The University of Texas at Austin

The University of Texas at Austin