Lenovo Corporation

12 Nov 2025

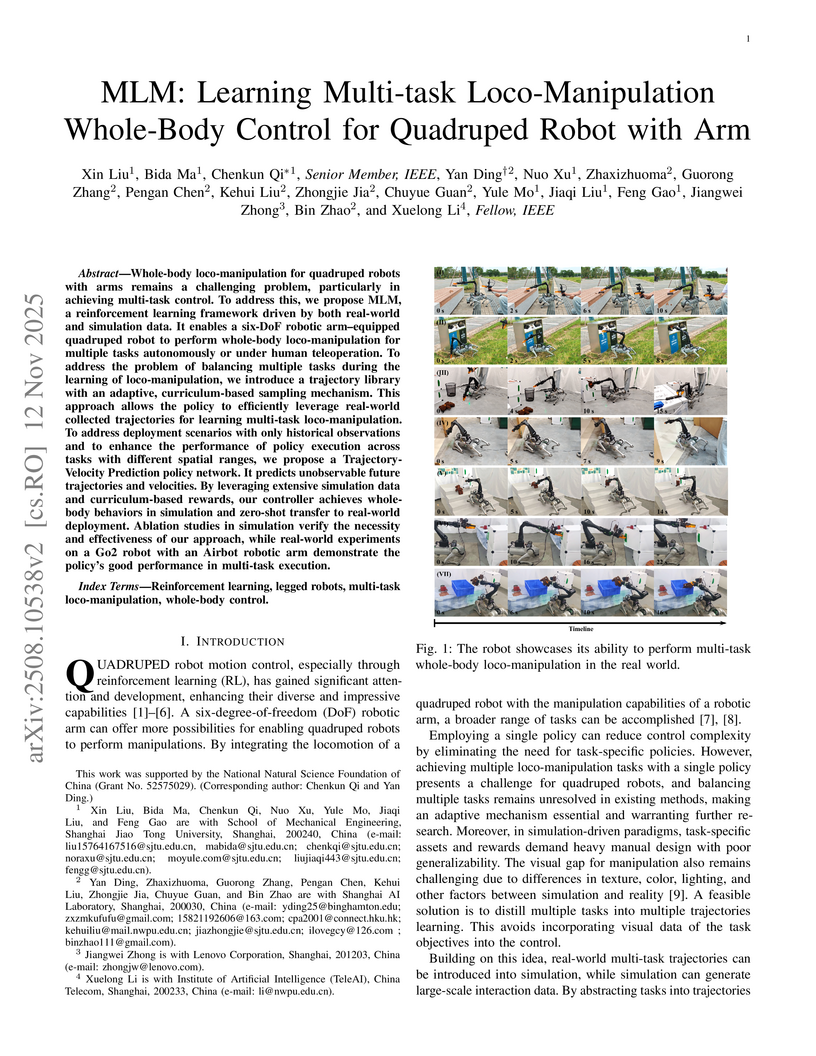

Whole-body loco-manipulation for quadruped robots with arms remains a challenging problem, particularly in achieving multi-task control. To address this, we propose MLM, a reinforcement learning framework driven by both real-world and simulation data. It enables a quadruped robot equipped with a six-DoF robotic arm to perform whole-body loco-manipulation across multiple tasks, either autonomously or under human teleoperation. To balance multiple tasks while learning loco-manipulation, we introduce a trajectory library with an adaptive, curriculum-based sampling mechanism, which allows the policy to efficiently leverage trajectories collected in the real world. To handle deployment scenarios in which only historical observations are available, and to improve policy execution across tasks with different spatial ranges, we propose a Trajectory-Velocity Prediction policy network that predicts unobservable future trajectories and velocities. By leveraging extensive simulation data and curriculum-based rewards, our controller achieves whole-body behaviors in simulation and transfers zero-shot to real-world deployment. Ablation studies in simulation verify the necessity and effectiveness of our approach, while real-world experiments on a Go2 robot with an Airbot robotic arm show that the policy performs well in multi-task execution.
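
The adaptive, curriculum-based sampling idea can be illustrated with a minimal sketch. The abstract does not specify the sampling rule, so everything below is an assumption made for illustration: the class name `TrajectoryLibrary`, the normalized tracking-error score, the exponential smoothing factor, and the probability floor are all hypothetical. The sketch simply samples trajectories that the policy currently tracks poorly more often than those it tracks well.

```python
import random


class TrajectoryLibrary:
    """Hypothetical trajectory library with error-weighted sampling (not the paper's rule)."""

    def __init__(self, names, smoothing=0.9, floor=0.05):
        self.names = list(names)
        self.smoothing = smoothing  # assumed exponential-smoothing factor
        self.floor = floor          # assumed floor so mastered trajectories are still revisited
        # Start every trajectory at the maximum score so all are sampled early on.
        self.error = {name: 1.0 for name in self.names}

    def update(self, name, tracking_error):
        """Blend a normalized tracking error (0 = perfect, 1 = failed) into the running score."""
        clipped = min(max(tracking_error, 0.0), 1.0)
        self.error[name] = (
            self.smoothing * self.error[name] + (1.0 - self.smoothing) * clipped
        )

    def probabilities(self):
        """Sampling probability of each trajectory, proportional to score plus floor."""
        weights = [self.error[n] + self.floor for n in self.names]
        total = sum(weights)
        return {n: w / total for n, w in zip(self.names, weights)}

    def sample(self, rng=random):
        """Draw one trajectory name according to the current probabilities."""
        probs = self.probabilities()
        return rng.choices(self.names, weights=[probs[n] for n in self.names], k=1)[0]
```

In a training loop, `update` would be called after each episode with that episode's tracking error, and `sample` would pick the reference trajectory for the next episode.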

04 Dec 2025

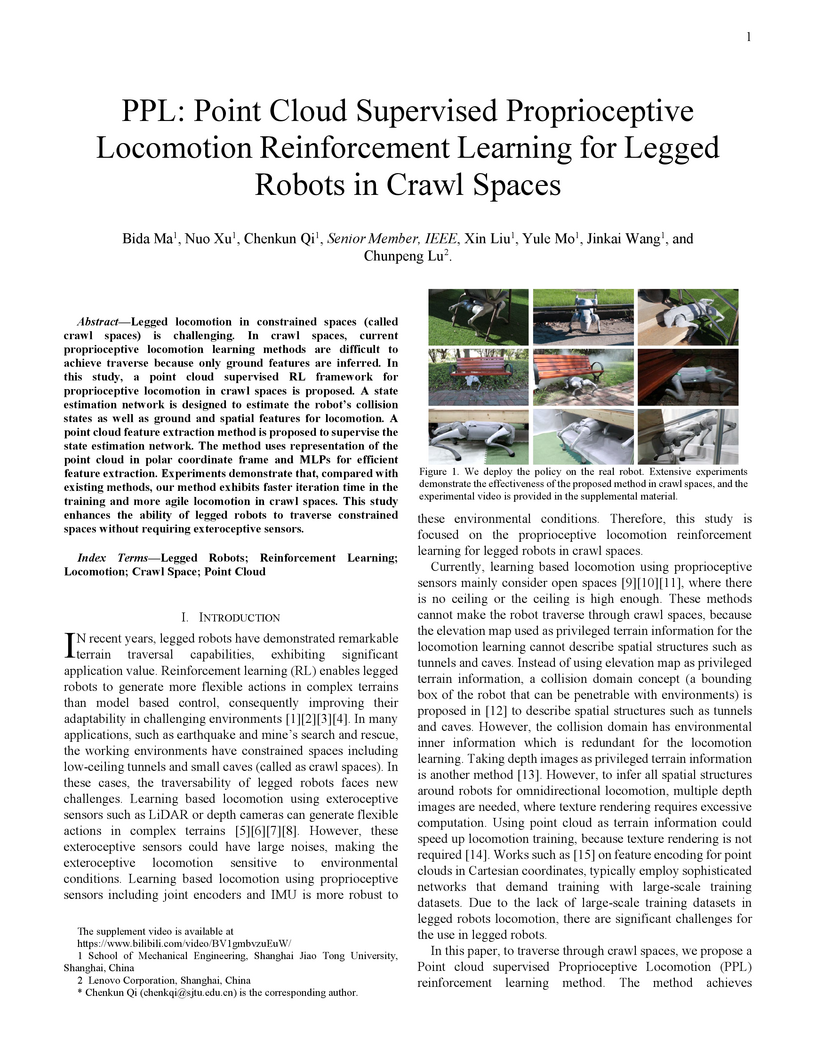

Legged locomotion in constrained spaces (called crawl spaces) is challenging. In crawl spaces, current proprioceptive locomotion learning methods struggle to traverse because they infer only ground features. In this study, a point cloud supervised RL framework for proprioceptive locomotion in crawl spaces is proposed. A state estimation network is designed to estimate the robot's collision states as well as ground and spatial features for locomotion. A point cloud feature extraction method is proposed to supervise the state estimation network. The method represents the point cloud in a polar coordinate frame and uses MLPs for efficient feature extraction. Experiments demonstrate that, compared with existing methods, our method achieves shorter iteration time during training and more agile locomotion in crawl spaces. This study enhances the ability of legged robots to traverse constrained spaces without requiring exteroceptive sensors.
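
To make the polar-coordinate representation concrete, here is a minimal sketch that bins a point cloud by azimuth and keeps the nearest range per sector, yielding a fixed-length vector that an MLP could consume. The abstract does not give the actual encoding, so the function name `polar_min_range`, the sector count, the range cap, and the choice to ignore height are all assumptions for illustration.

```python
import math


def polar_min_range(points, n_azimuth=8, max_range=2.0):
    """Nearest horizontal range per azimuth sector (hypothetical encoding).

    points: iterable of (x, y, z) tuples, assumed to be in the robot base frame.
    Sectors with no point closer than max_range keep the value max_range.
    """
    ranges = [max_range] * n_azimuth
    sector = 2.0 * math.pi / n_azimuth
    for x, y, _z in points:  # height is ignored in this simplified sketch
        r = math.hypot(x, y)
        if r >= max_range:
            continue
        theta = math.atan2(y, x) % (2.0 * math.pi)  # map angle into [0, 2*pi)
        # Clamp guards against theta rounding up to exactly 2*pi.
        idx = min(int(theta / sector), n_azimuth - 1)
        ranges[idx] = min(ranges[idx], r)
    return ranges
```

A fixed-size polar grid like this avoids per-point processing at inference time, which is one plausible reason such a representation pairs well with MLPs.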

Gait recognition captures gait patterns from the walking sequence of an individual for identification. Most existing gait recognition methods learn features from silhouettes or skeletons for robustness to clothing, carrying, and other exterior factors. The combination of the two data modalities, however, is not fully exploited. Previous multimodal gait recognition methods mainly employ the skeleton to assist local feature extraction, ignoring the intrinsic discriminative power of the skeleton data. This paper proposes a simple yet effective Bimodal Fusion (BiFusion) network which mines discriminative gait patterns in skeletons and integrates them with silhouette representations to learn rich features for identification. In particular, the inherent hierarchical semantics of body joints in a skeleton are leveraged to design a novel Multi-Scale Gait Graph (MSGG) network for skeleton feature extraction. Extensive experiments on CASIA-B and OUMVLP demonstrate both the superiority of the proposed MSGG network in modeling skeletons and the effectiveness of the bimodal fusion for gait recognition. Under the most challenging condition of walking in different clothes on CASIA-B, our method achieves a rank-1 accuracy of 92.1%.
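
For readers unfamiliar with the metric, rank-1 accuracy is the fraction of probe samples whose nearest gallery sample carries the same identity. The sketch below is a generic illustration, not the paper's evaluation code: the function name and the use of Euclidean distance are assumptions, and dataset-specific protocol details (such as excluding identical-view pairs on CASIA-B) are not modeled.

```python
import math


def rank1_accuracy(probe_feats, probe_ids, gallery_feats, gallery_ids):
    """Fraction of probes whose nearest gallery feature shares their identity."""
    if not probe_feats:
        raise ValueError("need at least one probe")
    hits = 0
    for feat, pid in zip(probe_feats, probe_ids):
        # Index of the gallery feature closest to this probe (Euclidean distance).
        nearest = min(
            range(len(gallery_feats)),
            key=lambda j: math.dist(feat, gallery_feats[j]),
        )
        hits += gallery_ids[nearest] == pid
    return hits / len(probe_feats)
```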

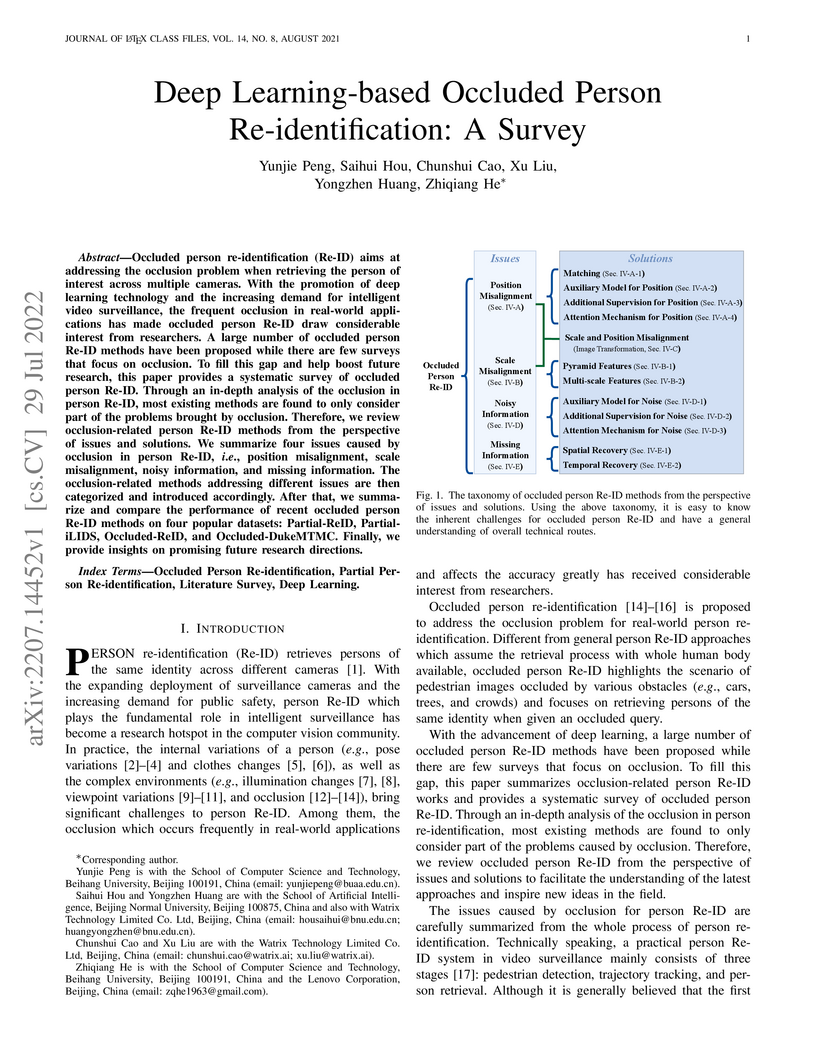

This survey provides the first systematic review of deep learning-based occluded person re-identification, categorizing existing methods by how they address four distinct issues caused by occlusion: position misalignment, scale misalignment, noisy information, and missing information. It also summarizes and compares the performance of mainstream approaches on challenging datasets like Partial-ReID and Occluded-DukeMTMC.
