Lossfunk

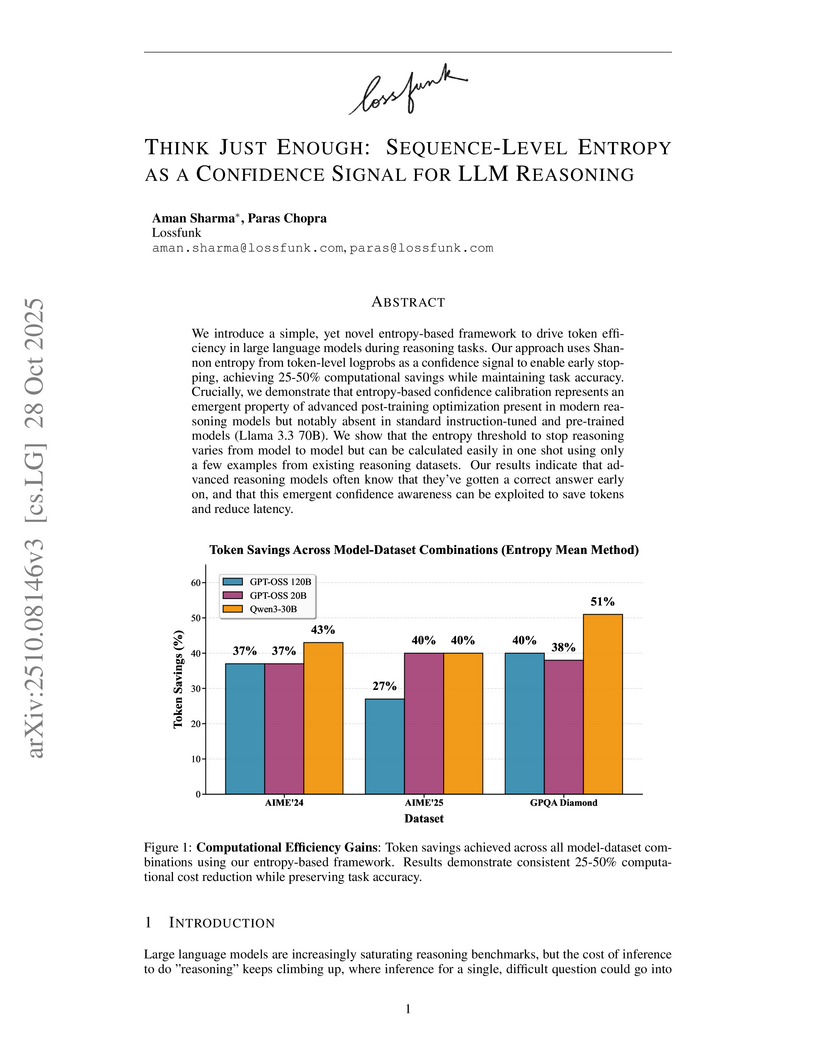

Researchers at Lossfunk introduced a framework that uses sequence-level Shannon entropy as an emergent confidence signal to enable early stopping in large language model (LLM) reasoning. This approach achieves 25-50% token savings without accuracy loss and identifies confidence calibration as an emergent property of advanced post-training optimized models.

20 Mar 2025

The Implicit Preference Optimization (IPO) framework introduces a method for Large Language Model (LLM) alignment by leveraging the LLM itself as a probabilistic preference classifier, significantly reducing the reliance on costly human annotation or external reward models. Developed by researchers from IIT Roorkee and Lossfunk, it enables LLMs to self-align through Direct Preference Optimization (DPO) using internally generated preference signals, achieving performance comparable to dedicated reward models.

Generating diverse solutions is key to human-like reasoning, yet

autoregressive language models focus on single accurate responses, limiting

creativity. GFlowNets optimize solution generation as a flow network, promising

greater diversity. Our case study shows their limited zero-shot transferability

by fine-tuning small and medium-sized large language models on the Game of 24

and testing them on the Game of 42 datasets. Results revealed that GFlowNets

struggle to maintain solution diversity and accuracy, highlighting key

limitations in their cross-task generalization and the need for future research

in improved transfer learning capabilities.

We introduce a simple, yet novel entropy-based framework to drive token efficiency in large language models during reasoning tasks. Our approach uses Shannon entropy from token-level logprobs as a confidence signal to enable early stopping, achieving 25-50% computational savings while maintaining task accuracy. Crucially, we demonstrate that entropy-based confidence calibration represents an emergent property of advanced post-training optimization present in modern reasoning models but notably absent in standard instruction-tuned and pre-trained models (Llama 3.3 70B). We show that the entropy threshold to stop reasoning varies from model to model but can be calculated easily in one shot using only a few examples from existing reasoning datasets. Our results indicate that advanced reasoning models often know that they've gotten a correct answer early on, and that this emergent confidence awareness can be exploited to save tokens and reduce latency. The framework demonstrates consistent performance across reasoning-optimized model families with 25-50% computational cost reduction while preserving accuracy, revealing that confidence mechanisms represent a distinguishing characteristic of modern post-trained reasoning systems versus their predecessors.

There are no more papers matching your filters at the moment.