National Center for Geriatrics and Gerontology

Large Language Models (LLMs) are gaining popularity in the field of robotics.

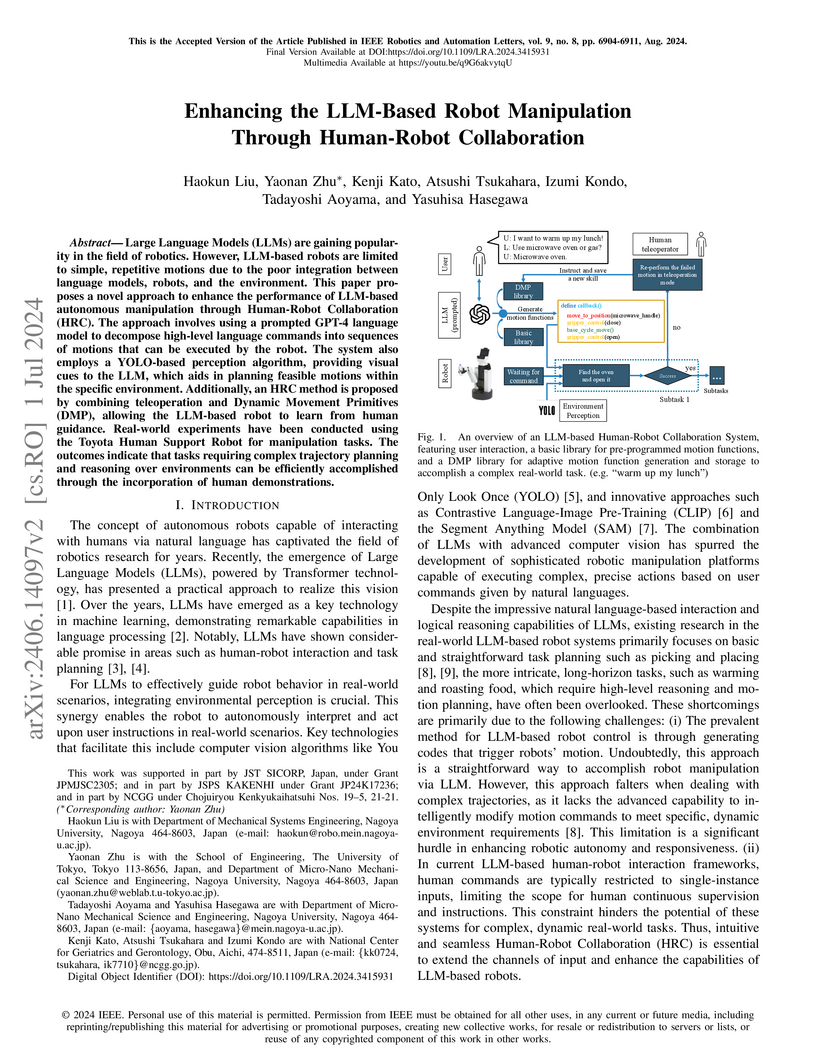

However, LLM-based robots are limited to simple, repetitive motions due to the

poor integration between language models, robots, and the environment. This

paper proposes a novel approach to enhance the performance of LLM-based

autonomous manipulation through Human-Robot Collaboration (HRC). The approach

involves using a prompted GPT-4 language model to decompose high-level language

commands into sequences of motions that can be executed by the robot. The

system also employs a YOLO-based perception algorithm, providing visual cues to

the LLM, which aids in planning feasible motions within the specific

environment. Additionally, an HRC method is proposed by combining teleoperation

and Dynamic Movement Primitives (DMP), allowing the LLM-based robot to learn

from human guidance. Real-world experiments have been conducted using the

Toyota Human Support Robot for manipulation tasks. The outcomes indicate that

tasks requiring complex trajectory planning and reasoning over environments can

be efficiently accomplished through the incorporation of human demonstrations.
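The abstract states that GPT-4 turns a high-level command into a sequence of executable motions and that YOLO detections give it visual context, but it does not show that interface. The sketch below is one hypothetical way to consume such output. It assumes the LLM has been prompted to emit one call per line in the form `name(object)`; the primitives `move_to`, `grasp` and `release`, the line format, and the scene labels are illustrative assumptions, not the paper's API. Each plan is checked against the motion library and the detected objects before anything is dispatched.

```python
import re
from typing import Callable, Dict, List, Set, Tuple

# Hypothetical motion primitives: placeholders for calls into a robot's motion API.
def move_to(target: str) -> str:
    return f"moving to {target}"

def grasp(target: str) -> str:
    return f"grasping {target}"

def release(target: str) -> str:
    return f"releasing {target}"

MOTION_LIBRARY: Dict[str, Callable[[str], str]] = {
    "move_to": move_to,
    "grasp": grasp,
    "release": release,
}

# Assumed LLM output format: one call per line, e.g. "grasp(cup)".
CALL_PATTERN = re.compile(r"^\s*(\w+)\((\w+)\)\s*$")

def parse_plan(llm_output: str) -> List[Tuple[str, str]]:
    """Turn the LLM's text plan into (function name, argument) pairs."""
    steps: List[Tuple[str, str]] = []
    for line in llm_output.splitlines():
        if not line.strip():
            continue  # skip blank lines
        match = CALL_PATTERN.match(line)
        if match is None:
            raise ValueError(f"unparseable step: {line!r}")
        steps.append((match.group(1), match.group(2)))
    return steps

def validate_plan(steps: List[Tuple[str, str]], detected: Set[str]) -> None:
    """Reject unknown motion functions and objects the detector did not report."""
    for name, arg in steps:
        if name not in MOTION_LIBRARY:
            raise ValueError(f"unknown motion function: {name}")
        if arg not in detected:
            raise ValueError(f"object not in scene: {arg}")

def execute_plan(steps: List[Tuple[str, str]]) -> List[str]:
    """Dispatch each step to its motion primitive, in order."""
    return [MOTION_LIBRARY[name](arg) for name, arg in steps]

# Usage: labels as a YOLO-style detector might report them (hypothetical scene).
detected_objects = {"cup", "table", "shelf"}
plan_text = """
move_to(cup)
grasp(cup)
move_to(shelf)
release(cup)
"""
steps = parse_plan(plan_text)
validate_plan(steps, detected_objects)
log = execute_plan(steps)
```

Validating the whole plan before executing it means a hallucinated function or an object the detector never saw fails early rather than mid-motion, which is one plausible point at which a collaborative system could ask a human for help.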

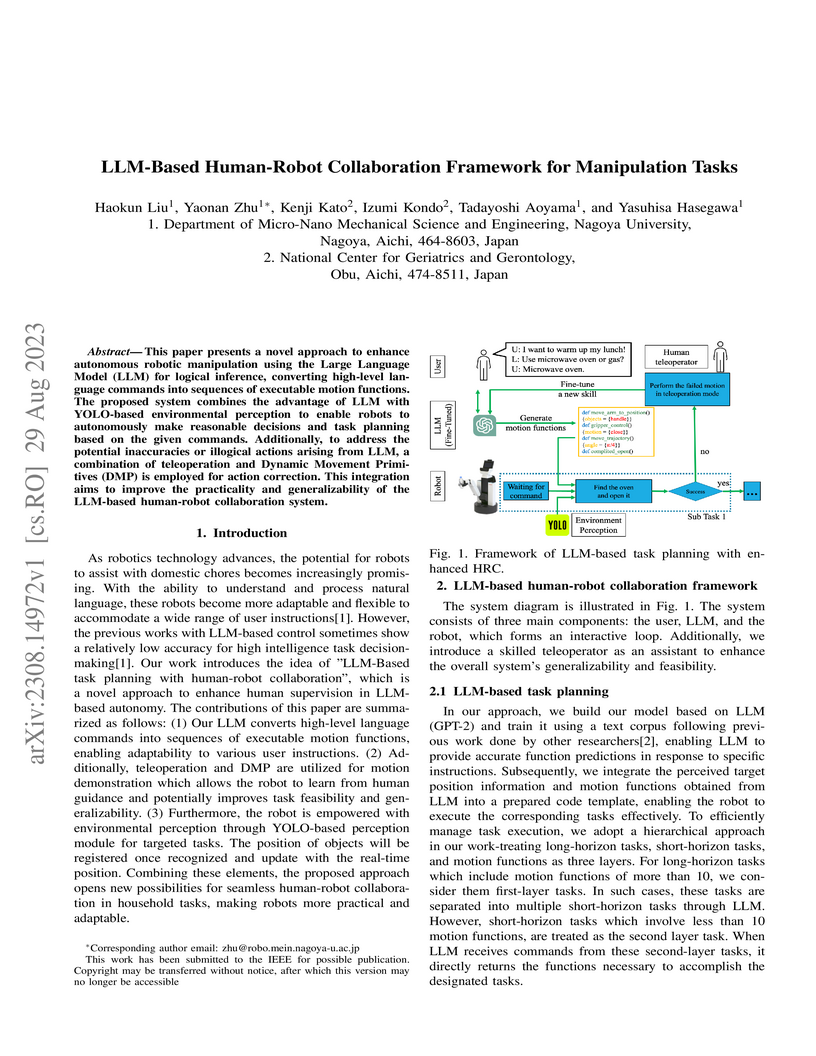

This paper presents a novel approach to enhance autonomous robotic manipulation by using a Large Language Model (LLM) for logical inference, converting high-level language commands into sequences of executable motion functions. The proposed system combines the reasoning of the LLM with YOLO-based environmental perception, enabling robots to make reasonable decisions and plan tasks autonomously based on the given commands. Additionally, to address potentially inaccurate or illogical actions generated by the LLM, a combination of teleoperation and Dynamic Movement Primitives (DMP) is employed for action correction. This integration aims to improve the practicality and generalizability of the LLM-based human-robot collaboration system.
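The abstract does not specify which DMP formulation is used. As a hedged illustration of how a teleoperated demonstration can become a reusable motion, the sketch below implements the widely used discrete DMP form (Ijspeert and Schaal style) for a single degree of freedom with NumPy: it fits forcing-term weights to one demonstration by locally weighted regression, then replays the motion, optionally toward a new goal. The class name `DMP1D`, the gains, the number of basis functions, and the minimum-jerk curve standing in for a human demonstration are all assumptions.

```python
import numpy as np

class DMP1D:
    """Minimal single-DOF discrete DMP in the common Ijspeert-style form.

    Transformation system: tau*dz/dt = alpha_z*(beta_z*(g - y) - z) + f(x),  tau*dy/dt = z
    Canonical system:      tau*dx/dt = -alpha_x*x
    Forcing term:          f(x) = sum_i(psi_i(x)*w_i) / sum_i(psi_i(x)) * x*(g - y0)
    """

    def __init__(self, n_basis=30, alpha_z=25.0, beta_z=6.25, alpha_x=3.0):
        self.alpha_z, self.beta_z, self.alpha_x = alpha_z, beta_z, alpha_x
        # Basis centres placed at evenly spaced times, expressed in phase x.
        self.c = np.exp(-alpha_x * np.linspace(0.0, 1.0, n_basis))
        d = np.diff(self.c)
        # Widths from the gap to the next centre (the last one reuses the final gap).
        self.h = np.append(1.0 / d**2, 1.0 / d[-1] ** 2)
        self.w = np.zeros(n_basis)

    def _psi(self, x):
        # Gaussian basis activations, shape (len(x), n_basis).
        x = np.atleast_1d(x)
        return np.exp(-self.h * (x[:, None] - self.c) ** 2)

    def fit(self, y_demo, dt):
        """Learn forcing-term weights from one demonstrated trajectory."""
        self.tau = (len(y_demo) - 1) * dt
        self.y0, self.g = y_demo[0], y_demo[-1]
        yd = np.gradient(y_demo, dt)
        ydd = np.gradient(yd, dt)
        t = np.arange(len(y_demo)) * dt
        x = np.exp(-self.alpha_x * t / self.tau)
        # Forcing term that makes the spring-damper reproduce the demonstration.
        f_target = self.tau**2 * ydd - self.alpha_z * (
            self.beta_z * (self.g - y_demo) - self.tau * yd)
        s = x * (self.g - self.y0)
        psi = self._psi(x)
        # Locally weighted regression: one independent weight per basis function.
        self.w = (psi.T @ (s * f_target)) / (psi.T @ (s * s) + 1e-10)
        return self

    def rollout(self, dt, goal=None):
        """Euler-integrate the DMP from the demo's start toward `goal`."""
        g = self.g if goal is None else goal
        y, z = float(self.y0), 0.0
        ys = [y]
        for k in range(int(round(self.tau / dt))):
            # Closed-form solution of the canonical system at time k*dt.
            x = np.exp(-self.alpha_x * k * dt / self.tau)
            psi = self._psi(x)[0]
            f = (psi @ self.w) / (psi.sum() + 1e-10) * x * (g - self.y0)
            dz = (self.alpha_z * (self.beta_z * (g - y) - z) + f) / self.tau
            dy = z / self.tau
            z += dz * dt
            y += dy * dt
            ys.append(y)
        return np.array(ys)

# Usage: a minimum-jerk reach from 0 to 1 stands in for a teleoperated demonstration.
dt = 0.01
t_demo = np.linspace(0.0, 1.0, 101)
y_demo = 10 * t_demo**3 - 15 * t_demo**4 + 6 * t_demo**5
dmp = DMP1D().fit(y_demo, dt)
traj = dmp.rollout(dt)                     # reproduce the demonstration
traj_new_goal = dmp.rollout(dt, goal=2.0)  # same motion shape, new goal
```

Because the forcing term is scaled by `g - y0`, changing the goal stretches the learned shape instead of requiring a new demonstration; a multi-joint or Cartesian motion would typically use one such system per dimension, all sharing a single phase variable.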

Progressive cognitive decline spanning decades is characteristic of Alzheimer's disease (AD). Various predictive models have been designed to detect its onset early and to study the long-term trajectories of cognitive test scores across populations of interest. Research efforts have focused on superimposing patients' cognitive test scores onto a long-term trajectory of gradual cognitive decline while accounting for the heterogeneity of AD. Multiple long-term trajectories of cognitive assessment scores have been developed based on various parameters, highlighting the importance of classifying patients into groups according to their disease progression patterns. In this study, we developed a novel method, based on statistical and differential equation modeling, that performs self-organized prediction, classification, and overlay of long-term cognitive trajectories from short-term individual data. We validated the predictive accuracy of the proposed method for long-term trajectories of cognitive test scores in two cohorts: the Alzheimer's Disease Neuroimaging Initiative (ADNI) study and the Japanese ADNI study. We also present two practical illustrations: the simultaneous evaluation of risk factors associated with both the onset and the longitudinal progression of AD, and an innovative randomized controlled trial design for AD that standardizes the heterogeneity of patients enrolled in a clinical trial. These applications could improve the power of statistical hypothesis testing and help evaluate therapeutic effects. Predicting the trajectory of longitudinal disease progression is applicable beyond AD and is especially relevant for progressive and neurodegenerative disorders.
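The abstract does not detail its statistical and differential equation model. One generic way to build a long-term curve from short-term data, shown here only as an illustration and not as the authors' method, is to summarize each patient by the mean score and slope over a few visits, fit the rate of change as a function of the score, dS/dt = f(S), integrate that ODE into a single long-term trajectory, and overlay each patient at the time the curve reaches their score. The synthetic cohort (a logistic ground truth for a hypothetical score that rises as cognition worsens, with visits at 0, 1 and 2 years), the quadratic form of f, and all function names are assumptions.

```python
import numpy as np

# Hypothetical logistic ground truth, used only to generate synthetic visits.
R_TRUE, K_TRUE = 0.3, 70.0

def logistic(t, s0, r=R_TRUE, k=K_TRUE):
    """Score at time t on a logistic curve that starts at s0."""
    return k / (1.0 + (k / s0 - 1.0) * np.exp(-r * t))

def short_term_summary(visit_times, scores):
    """Summarize a few visits as (mean score, least-squares slope per year)."""
    slope = np.polyfit(visit_times, scores, 1)[0]
    return scores.mean(), slope

def fit_rate_model(mean_scores, slopes, degree=2):
    """Fit the rate of change as a polynomial in the score: dS/dt = f(S)."""
    return np.poly1d(np.polyfit(mean_scores, slopes, degree))

def integrate_trajectory(rate, s0, t_max, dt=0.01):
    """Euler-integrate dS/dt = rate(S) from S(0) = s0 up to t_max."""
    n = int(round(t_max / dt))
    t = np.linspace(0.0, n * dt, n + 1)
    s = np.empty(n + 1)
    s[0] = s0
    for k in range(n):
        s[k + 1] = s[k] + rate(s[k]) * dt
    return t, s

def align_patient(t, s, score):
    """Time at which the long-term curve reaches `score` (curve assumed monotone)."""
    if s[-1] < s[0]:  # decreasing scores: np.interp needs increasing x values
        return float(np.interp(score, s[::-1], t[::-1]))
    return float(np.interp(score, s, t))

# Synthetic cohort: each patient is observed only at 0, 1 and 2 years.
rng = np.random.default_rng(0)
visits = np.array([0.0, 1.0, 2.0])
baselines = rng.uniform(10.0, 60.0, size=300)
summaries = [
    short_term_summary(visits, logistic(visits, b) + rng.normal(0.0, 0.3, visits.size))
    for b in baselines
]
means, slopes = map(np.array, zip(*summaries))

rate = fit_rate_model(means, slopes)
t, s = integrate_trajectory(rate, s0=10.0, t_max=20.0)

# Overlay: each patient's mean score is matched to their mean visit time,
# which places their first visit on the common long-term time axis.
offsets = np.array([align_patient(t, s, m) - visits.mean() for m in means])
```

The sketch fits a single rate curve for the whole cohort; classifying patients into progression subgroups, as the abstract describes, would need a separate rate model per group.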
