neurons-and-cognition

Diverse scientific and engineering research areas deal with discrete, time-stamped changes in large systems of interacting delay differential equations. Simulating such complex systems at scale on high-performance computing clusters demands efficient management of communication and memory. Inspired by the human cerebral cortex -- a sparsely connected network of O(10^10) neurons, each forming O(10^3)--O(10^4) synapses and communicating via short electrical pulses called spikes -- we study the simulation of large-scale spiking neural networks for computational neuroscience research. This work presents a novel network construction method for multi-GPU clusters and upcoming exascale supercomputers using the Message Passing Interface (MPI), where each process builds its local connectivity and prepares the data structures for efficient spike exchange across the cluster during state propagation. We demonstrate scaling performance of two cortical models using point-to-point and collective communication, respectively.

Accurately predicting individual neurons' responses and spatial functional properties in complex visual tasks remains a key challenge in understanding neural computation. Existing whole-brain connectome models of Drosophila often rely on parameter assumptions or deep learning approaches, yet remain limited in their ability to reliably predict dynamic neuronal responses. We introduce a Multi-Path Aggregation (MPA) framework, based on neural network steady-state theory, to build whole-brain Visual Function Profiles (VFPs) of Drosophila neurons and predict their responses under diverse visual tasks. Unlike conventional methods relying on redundant parameters, MPA combines visual input features with the whole-brain connectome topology. It uses adjacency matrix powers and finite-path optimization to efficiently predict neuronal function, including ON/OFF polarity, direction selectivity, and responses to complex visual stimuli. Our model achieves a Pearson correlation of 0.84 ± 0.12 for ON/OFF responses, outperforming existing methods (0.33 ± 0.59), and accurately captures neuron functional properties, including luminance and direction preferences, while allowing single-neuron or population-level blockade simulations. Replacing CNN modules with VFP-derived Lobula Columnar (LC) population responses in a Drosophila simulation enables successful navigation and obstacle avoidance, demonstrating the model's effectiveness in guiding embodied behavior. This study establishes a "connectome-functional profile-behavior" framework, offering a whole-brain quantitative tool to study Drosophila visual computation and a neuron-level guide for brain-inspired intelligence.
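The idea of predicting responses from adjacency matrix powers over a finite number of path lengths can be illustrated with a toy example. The sketch below is not the authors' MPA implementation: the function names `propagate` and `path_aggregate`, the geometric `decay` weighting, and the 4-neuron signed connectome are all assumptions chosen for illustration. It sums the stimulus contribution arriving along paths of length 1 to `max_path_len`, which is equivalent to applying successive powers of the weight matrix.

```python
def propagate(weights, activity):
    """One synaptic step: out[j] = sum_i activity[i] * weights[i][j]."""
    n = len(weights)
    return [sum(activity[i] * weights[i][j] for i in range(n)) for j in range(n)]


def path_aggregate(weights, stimulus, max_path_len, decay=0.5):
    """Sum stimulus contributions over all paths of length 1..max_path_len.

    Hypothetical weighting: a path of length k is scaled by decay**k.
    """
    n = len(weights)
    total = [0.0] * n
    current = list(stimulus)
    for k in range(1, max_path_len + 1):
        # After k steps, `current` equals the stimulus pushed through W^k.
        current = propagate(weights, current)
        for j in range(n):
            total[j] += (decay ** k) * current[j]
    return total


# Toy signed connectome (assumed): weights[i][j] is the synapse from i to j.
# Neuron 0 excites neuron 1 and inhibits neuron 2; both project to neuron 3.
W = [
    [0.0, 1.0, -1.0, 0.0],
    [0.0, 0.0, 0.0, 1.0],
    [0.0, 0.0, 0.0, 0.5],
    [0.0, 0.0, 0.0, 0.0],
]
stimulus = [1.0, 0.0, 0.0, 0.0]  # drive only the input neuron
response = path_aggregate(W, stimulus, max_path_len=2)
print(response)
```

In this toy network, neuron 3 receives no direct input and its predicted response comes entirely from the two length-2 paths, whose excitatory and inhibitory contributions partially cancel.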

Biological neural networks learn complex behaviors from sparse, delayed feedback using local synaptic plasticity, yet the mechanisms enabling structured credit assignment remain elusive. In contrast, artificial recurrent networks solving similar tasks typically rely on biologically implausible global learning rules or hand-crafted local updates. The space of local plasticity rules capable of supporting learning from delayed reinforcement remains largely unexplored. Here, we present a meta-learning framework that discovers local learning rules for structured credit assignment in recurrent networks trained with sparse feedback. Our approach interleaves local neo-Hebbian-like updates during task execution with an outer loop that optimizes plasticity parameters via **tangent-propagation through learning**. The resulting three-factor learning rules enable long-timescale credit assignment using only local information and delayed rewards, offering new insights into biologically grounded mechanisms for learning in recurrent circuits.
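A generic three-factor rule shows how a delayed reward can still credit an earlier pre/post coincidence. The sketch below is a textbook-style illustration, not the meta-learned rule from the abstract: the function name `three_factor_step`, the fixed `lr` and `trace_decay` values, and the event sequence are assumptions. A decaying eligibility trace stores the Hebbian coincidence (factors one and two), and the weight changes only when the reward (factor three) arrives.

```python
def three_factor_step(w, trace, pre, post, reward, lr=0.1, trace_decay=0.9):
    """One step of a generic three-factor rule for a single synapse.

    The eligibility trace accumulates pre * post and decays over time;
    the weight update is gated by the (possibly delayed) reward.
    """
    new_trace = trace_decay * trace + pre * post
    new_w = w + lr * reward * new_trace
    return new_w, new_trace


w, trace = 0.0, 0.0
# (pre, post, reward) per time step: coincident activity at t=0,
# silence at t=1 and t=2, reward delivered at t=3.
events = [
    (1.0, 1.0, 0.0),
    (0.0, 0.0, 0.0),
    (0.0, 0.0, 0.0),
    (0.0, 0.0, 1.0),
]
for pre, post, reward in events:
    w, trace = three_factor_step(w, trace, pre, post, reward)

print(w)  # lr * trace_decay**3, since the trace decayed for three steps
```

In the abstract's framework, quantities such as the learning rate and trace decay would be plasticity parameters optimized by the outer loop; here they are simply fixed by hand.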

Influential models of primate visual cortex describe two functionally distinct pathways: a ventral pathway for object recognition and a dorsal pathway for spatial and action processing. However, recent human and non-human primate research suggests the existence of a third visual pathway projecting from early visual cortex through the motion-selective area V5/MT into the superior temporal sulcus (STS). Here we integrate anatomical, neuroimaging, and neuropsychological evidence demonstrating that this pathway specializes in processing dynamic social cues such as facial expressions, eye gaze, and body movements. This third pathway supports social perception by computing the actions and intentions of other people. These findings enhance our understanding of visual cortical organization and highlight the STS's critical role in social cognition, suggesting that visual processing encompasses a dedicated neural circuit for interpreting socially relevant motion and behavior.

Reinforcement learning (RL) enables adaptive behavior across species via reward prediction errors (RPEs), but the neural origins of species-specific adaptability remain unknown. Integrating RL modeling, transcriptomics, and neuroimaging during reversal learning, we discovered convergent RPE signatures - shared monoaminergic/synaptic gene upregulation and neuroanatomical representations, yet humans outperformed macaques behaviorally. Single-trial decoding showed RPEs guided choices similarly in both species, but humans disproportionately recruited dorsal anterior cingulate (dACC) and dorsolateral prefrontal cortex (dlPFC). Cross-species alignment uncovered that macaque prefrontal circuits encode human-like optimal RPEs yet fail to translate them into action. Adaptability scaled not with RPE encoding fidelity, but with the areal extent of dACC/dlPFC recruitment governing RPE-to-action transformation. These findings resolve an evolutionary puzzle: behavioral performance gaps arise from executive cortical readout efficiency, not encoding capacity.
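For readers unfamiliar with reward prediction errors, a minimal delta-rule sketch shows how the RPE changes sign at a reversal. The abstract does not specify its RL model, so this is a standard Rescorla-Wagner-style update under assumed values: the function name `rpe_update`, the learning rate of 0.5, and the reward sequence are illustrative only.

```python
def rpe_update(value, reward, learning_rate=0.5):
    """Delta-rule update: RPE = reward - expected value."""
    rpe = reward - value
    return value + learning_rate * rpe, rpe


value = 0.0
rpes = []
# The chosen option is rewarded for three trials, then the contingency
# reverses and the same choice goes unrewarded.
for reward in [1, 1, 1, 0, 0]:
    value, rpe = rpe_update(value, reward)
    rpes.append(rpe)

print(rpes)   # positive and shrinking before the reversal, negative after
print(value)
```

The first unrewarded trial after the reversal produces a large negative RPE, which is the signal a learner can use to switch choices.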

Neural decoding, a critical component of Brain-Computer Interface (BCI), has recently attracted increasing research interest. Previous research has focused on leveraging signal processing and deep learning methods to enhance neural decoding performance. However, model architecture design remains underexplored, despite its proven effectiveness in other tasks such as energy forecasting and image classification. In this study, we propose NeuroSketch, an effective framework for neural decoding via systematic architecture optimization. Starting with the basic architecture study, we find that CNN-2D outperforms other architectures in neural decoding tasks and explore its effectiveness from temporal and spatial perspectives. Building on this, we optimize the architecture from macro- to micro-level, achieving improvements in performance at each step. The exploration process and model validations comprise over 5,000 experiments spanning three distinct modalities (visual, auditory, and speech), three types of brain signals (EEG, SEEG, and ECoG), and eight diverse decoding tasks. Experimental results indicate that NeuroSketch achieves state-of-the-art (SOTA) performance across all evaluated datasets, positioning it as a powerful tool for neural decoding. Our code and scripts are available at this https URL.

Researchers from TU Dresden and MIT developed fastDSA, a computationally optimized version of Dynamical Similarity Analysis (DSA), achieving up to 100 times faster computation while preserving the method's accuracy and sensitivity to subtle dynamic changes. This enhanced framework robustly distinguishes between systems with identical eigenvalues but distinct flow fields, enabling large-scale comparative studies of neural and artificial dynamics.

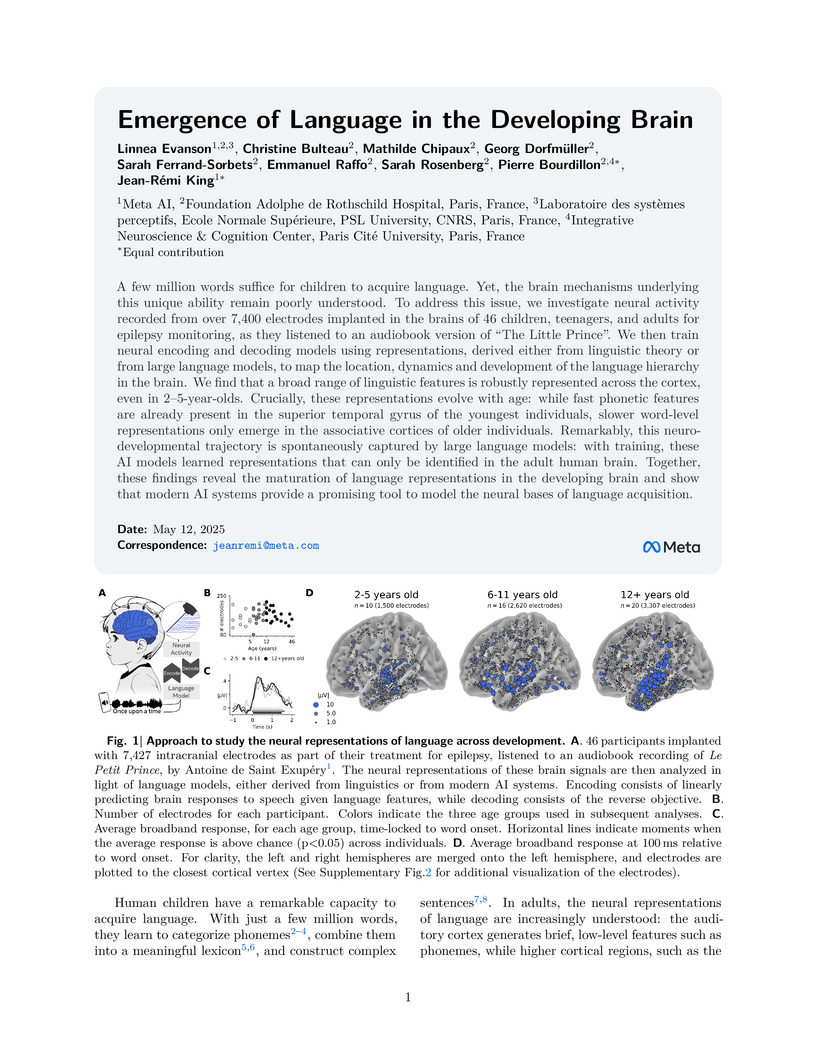

A few million words suffice for children to acquire language. Yet, the brain mechanisms underlying this unique ability remain poorly understood. To address this issue, we investigate neural activity recorded from over 7,400 electrodes implanted in the brains of 46 children, teenagers, and adults for epilepsy monitoring, as they listened to an audiobook version of "The Little Prince". We then train neural encoding and decoding models using representations, derived either from linguistic theory or from large language models, to map the location, dynamics and development of the language hierarchy in the brain. We find that a broad range of linguistic features is robustly represented across the cortex, even in 2-5-year-olds. Crucially, these representations evolve with age: while fast phonetic features are already present in the superior temporal gyrus of the youngest individuals, slower word-level representations only emerge in the associative cortices of older individuals. Remarkably, this neuro-developmental trajectory is spontaneously captured by large language models: with training, these AI models learned representations that can only be identified in the adult human brain. Together, these findings reveal the maturation of language representations in the developing brain and show that modern AI systems provide a promising tool to model the neural bases of language acquisition.

Energy-based models have become a central paradigm for understanding computation and stability in both theoretical neuroscience and machine learning. However, the energetic framework typically relies on symmetry in synaptic or weight matrices - a constraint that excludes biologically realistic systems such as excitatory-inhibitory (E-I) networks. When symmetry is relaxed, the classical notion of a global energy landscape fails, leaving the dynamics of asymmetric neural systems conceptually unanchored. In this work, we extend the energetic framework to asymmetric firing rate networks, revealing an underlying game-theoretic structure for the neural dynamics in which each neuron is an agent that seeks to minimize its own energy. In addition, we exploit rigorous stability principles from network theory to study regulation and balancing of neural activity in E-I networks. We combine the novel game-energetic interpretation and the stability results to revisit standard frameworks in theoretical neuroscience, such as the Wilson-Cowan and lateral inhibition models. These insights allow us to study cortical columns of lateral inhibition microcircuits as contrast enhancers, with the ability to selectively sharpen subtle differences in the environment through hierarchical excitation-inhibition interplay. Our results bridge energetic and game-theoretic views of neural computation, offering a pathway toward the systematic engineering of biologically grounded, dynamically stable neural architectures.

Studying learning-related plasticity is central to understanding the acquisition of complex skills, for example learning to master a musical instrument. Over the past three decades, conventional group-based functional magnetic resonance imaging (fMRI) studies have advanced our understanding of how humans' neural representations change during skill acquisition. However, group-based fMRI studies average across heterogeneous learners and often rely on coarse pre- versus post-training comparisons, limiting the spatial and temporal precision with which neural changes can be estimated. Here, we outline an individual-specific precision approach that tracks neural changes within individuals by collecting high-quality neuroimaging data frequently over the course of training, mapping brain function in each person's own anatomical space, and gathering detailed behavioral measures of learning, allowing neural trajectories to be directly linked to individual learning progress. Complementing fMRI with mobile neuroimaging methods, such as functional near-infrared spectroscopy (fNIRS), will enable researchers to track plasticity during naturalistic practice and across extended time scales. This multi-modal approach will enhance sensitivity to individual learning trajectories and will offer more nuanced insights into how neural representations change with training. We also discuss how findings can be generalized beyond individuals, including through statistical methods based on replication in additional individuals. Together, this approach allows researchers to design highly informative longitudinal training studies that advance a mechanistic, personalized account of skill learning in the human brain.

Shogo Ohmae and Keiko Ohmae's perspective identifies predictive and generative world models as a common computational foundation underlying diverse functions in the neocortex, cerebellum, and modern attention-based AI systems. Their analysis reveals a shared principle where these models, acquired through prediction-error learning, are repurposed for prediction, understanding, and generation across various domains.

Generating whole-brain 4D fMRI sequences conditioned on cognitive tasks remains challenging due to the high-dimensional, heterogeneous BOLD dynamics across subjects/acquisitions and the lack of neuroscience-grounded validation. We introduce the first diffusion transformer for voxelwise 4D fMRI conditional generation, combining 3D VQ-GAN latent compression with a CNN-Transformer backbone and strong task conditioning via AdaLN-Zero and cross-attention. On HCP task fMRI, our model reproduces task-evoked activation maps, preserves the inter-task representational structure observed in real data (RSA), achieves perfect condition specificity, and aligns ROI time-courses with canonical hemodynamic responses. Performance improves predictably with scale, reaching task-evoked map correlation of 0.83 and RSA of 0.98, consistently surpassing a U-Net baseline on all metrics. By coupling latent diffusion with a scalable backbone and strong conditioning, this work establishes a practical path to conditional 4D fMRI synthesis, paving the way for future applications such as virtual experiments, cross-site harmonization, and principled augmentation for downstream neuroimaging models.

The primary output of the nervous system is movement and behavior. While recent advances have democratized pose tracking during complex behavior, kinematic trajectories alone provide only indirect access to the underlying control processes. Here we present MIMIC-MJX, a framework for learning biologically-plausible neural control policies from kinematics. MIMIC-MJX models the generative process of motor control by training neural controllers that learn to actuate biomechanically-realistic body models in physics simulation to reproduce real kinematic trajectories. We demonstrate that our implementation is accurate, fast, data-efficient, and generalizable to diverse animal body models. Policies trained with MIMIC-MJX can be utilized to both analyze neural control strategies and simulate behavioral experiments, illustrating its potential as an integrative modeling framework for neuroscience.

Neural decoding from electroencephalography (EEG) remains fundamentally limited by poor generalization to unseen subjects, driven by high inter-subject variability and the lack of large-scale datasets to model it effectively. Existing methods often rely on synthetic subject generation or simplistic data augmentation, but these strategies fail to scale or generalize reliably. We introduce *MultiDiffNet*, a diffusion-based framework that bypasses generative augmentation entirely by learning a compact latent space optimized for multiple objectives. We decode directly from this space and achieve state-of-the-art generalization across various neural decoding tasks using subject- and session-disjoint evaluation. We also curate and release a unified benchmark suite spanning four EEG decoding tasks of increasing complexity (SSVEP, Motor Imagery, P300, and Imagined Speech) and an evaluation protocol that addresses inconsistent split practices in prior EEG research. Finally, we develop a statistical reporting framework tailored for low-trial EEG settings. Our work provides a reproducible and open-source foundation for subject-agnostic EEG decoding in real-world BCI systems.

24 Nov 2025

Accurate simulations of electric fields (E-fields) in brain stimulation depend on tissue conductivity representations that link macroscopic assumptions with underlying microscopic tissue structure. Mesoscale conductivity variations can produce meaningful changes in E-fields and neural activation thresholds but remain largely absent from standard macroscopic models. Recent microscopic models have suggested substantial local E-field perturbations and could, in principle, inform mesoscale conductivity. However, the quantitative validity of microscopic models is limited by fixation-related tissue distortion and incomplete extracellular-space reconstruction. We outline approaches that bridge macro- and microscales to derive consistent mesoscale conductivity distributions, providing a foundation for accurate multiscale models of E-fields and neural activation in brain stimulation.

Understanding the relationship between brain activity and behavior is a central goal of neuroscience. Despite significant advances, a fundamental dichotomy persists: neural activity manifests as both discrete spikes of individual neurons and collective waves of populations. Both neural codes correlate with behavior, yet correlation alone cannot determine whether waves exert a causal influence or merely reflect spiking dynamics without causal efficacy. According to the Causal Hierarchy Theorem, no amount of observational data--however extensive--can settle this question; causal conclusions require explicit structural assumptions or careful experimental designs that directly correspond to the causal effect of interest. We develop a formal framework that makes this limitation precise and constructive. Formalizing epiphenomenality via the invariance of interventional distributions in Structural Causal Models (SCMs), we derive a certificate of sufficiency from Pearl's do-calculus that specifies when variables can be removed from the model without loss of causal explainability and clarifies how interventions should be interpreted under different causal structures of spike-wave duality. The purpose of this work is not to resolve the spike-wave debate, but to reformulate it. We shift the problem from asking which signal matters most to asking under what conditions any signal can be shown to matter at all. This reframing distinguishes prediction from explanation and offers neuroscience a principled route for deciding when waves belong to mechanism and when they constitute a byproduct of underlying coordination.
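The claim that observational data cannot separate a causal wave from an epiphenomenal one can be made concrete with two toy structural causal models. This is an illustrative sketch, not the paper's formal certificate: the binary variables, the 50/50 spike prior, and the names `joint_distribution`, `epiphenomenal`, and `mediating` are assumptions. In both models the wave copies the spike; they differ only in whether behavior reads the spike or the wave.

```python
def joint_distribution(mechanism, do_wave=None):
    """Exact distribution over (spike, wave, behavior) for a toy SCM.

    spike ~ Bernoulli(0.5); wave := spike unless overridden by do(wave);
    behavior := mechanism(spike, wave).
    """
    dist = {}
    for spike in (0, 1):
        wave = spike if do_wave is None else do_wave
        outcome = (spike, wave, mechanism(spike, wave))
        dist[outcome] = dist.get(outcome, 0.0) + 0.5
    return dist


def p_behavior(dist):
    """Marginal probability that behavior equals 1."""
    return sum(p for (_, _, b), p in dist.items() if b == 1)


def epiphenomenal(spike, wave):
    return spike  # behavior is driven by spikes; the wave is a byproduct


def mediating(spike, wave):
    return wave   # behavior is driven by the wave


# Observationally, the two models are indistinguishable.
same_observations = joint_distribution(epiphenomenal) == joint_distribution(mediating)

# Under the intervention do(wave = 1), they come apart.
p_epi = p_behavior(joint_distribution(epiphenomenal, do_wave=1))
p_med = p_behavior(joint_distribution(mediating, do_wave=1))
print(same_observations, p_epi, p_med)
```

In the epiphenomenal model the behavior distribution is invariant to intervening on the wave, which is the kind of invariance the abstract uses to formalize epiphenomenality.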

Neural dynamics underlie behaviors from memory to sleep, yet identifying mechanisms for higher-order phenomena (e.g., social interaction) is experimentally challenging. Existing whole-brain models often fail to scale to single-neuron resolution, omit behavioral readouts, or rely on PCA/conv pipelines that miss long-range, non-linear interactions. We introduce a sparse-attention whole-brain foundation model (SBM) for larval zebrafish that forecasts neuron spike probabilities conditioned on sensory stimuli and links brain state to behavior. SBM factorizes attention across neurons and along time, enabling whole-brain scale and interpretability. On a held-out subject, it achieves mean absolute error <0.02 with calibrated predictions and stable autoregressive rollouts. Coupled to a permutation-invariant behavior head, SBM enables gradient-based synthesis of neural patterns that elicit target behaviors. This framework supports rapid, behavior-grounded exploration of complex neural phenomena.

BRAIN-IT presents a framework for reconstructing images from fMRI, achieving state-of-the-art performance by producing reconstructions that are both semantically accurate and structurally faithful to the perceived images. The method also demonstrates highly efficient transfer learning, enabling high-quality reconstructions from as little as 1 hour of fMRI data from a new subject, significantly reducing data requirements.

Large language models develop the "lost-in-the-middle" effect as an emergent property from adapting to specific information retrieval demands during training, similar to human memory biases. Researchers at Rutgers University showed that training on mixed memory tasks yields U-shaped recall curves, driven by autoregressive architectures and attention sinks.

The computational role of imagination remains debated. While classical accounts emphasize reward maximization, emerging evidence suggests imagination serves a broader function: accessing internal world models (IWMs). Here, we employ psychological network analysis to compare IWMs in humans and large language models (LLMs) through imagination vividness ratings. Using the Vividness of Visual Imagery Questionnaire (VVIQ-2) and Plymouth Sensory Imagery Questionnaire (PSIQ), we construct imagination networks from three human populations (Florida, Poland, London; N=2,743) and six LLM variants in two conversation conditions. Human imagination networks demonstrate robust correlations across centrality measures (expected influence, strength, closeness) and consistent clustering patterns, indicating shared structural organization of IWMs across populations. In contrast, LLM-derived networks show minimal clustering and weak centrality correlations, even when manipulating conversational memory. These systematic differences persist across environmental scenes (VVIQ-2) and sensory modalities (PSIQ), revealing fundamental disparities between human and artificial world models. Our network-based approach provides a quantitative framework for comparing internally-generated representations across cognitive agents, with implications for developing human-like imagination in artificial intelligence systems.
