Umbo Computer Vision

Despite many advances in deep-learning based semantic segmentation,

performance drop due to distribution mismatch is often encountered in the real

world. Recently, a few domain adaptation and active learning approaches have

been proposed to mitigate the performance drop. However, very little attention

has been made toward leveraging information in videos which are naturally

captured in most camera systems. In this work, we propose to leverage "motion

prior" in videos for improving human segmentation in a weakly-supervised active

learning setting. By extracting motion information using optical flow in

videos, we can extract candidate foreground motion segments (referred to as

motion prior) potentially corresponding to human segments. We propose to learn

a memory-network-based policy model to select strong candidate segments

(referred to as strong motion prior) through reinforcement learning. The

selected segments have high precision and are directly used to finetune the

model. In a newly collected surveillance camera dataset and a publicly

available UrbanStreet dataset, our proposed method improves the performance of

human segmentation across multiple scenes and modalities (i.e., RGB to Infrared

(IR)). Last but not least, our method is empirically complementary to existing

domain adaptation approaches such that additional performance gain is achieved

by combining our weakly-supervised active learning approach with domain

adaptation approaches.

Reflections in natural images commonly cause false positives in automated

detection systems. These false positives can lead to significant impairment of

accuracy in the tasks of detection, counting and segmentation. Here, inspired

by the recent panoptic approach to segmentation, we show how fusing instance

and semantic segmentation can automatically identify reflection false

positives, without explicitly needing to have the reflective regions labelled.

We explore in detail how state of the art two-stage detectors suffer a loss of

broader contextual features, and hence are unable to learn to ignore these

reflections. We then present an approach to fuse instance and semantic

segmentations for this application, and subsequently show how this reduces

false positive detections in a real world surveillance data with a large number

of reflective surfaces. This demonstrates how panoptic segmentation and related

work, despite being in its infancy, can already be useful in real world

computer vision problems.

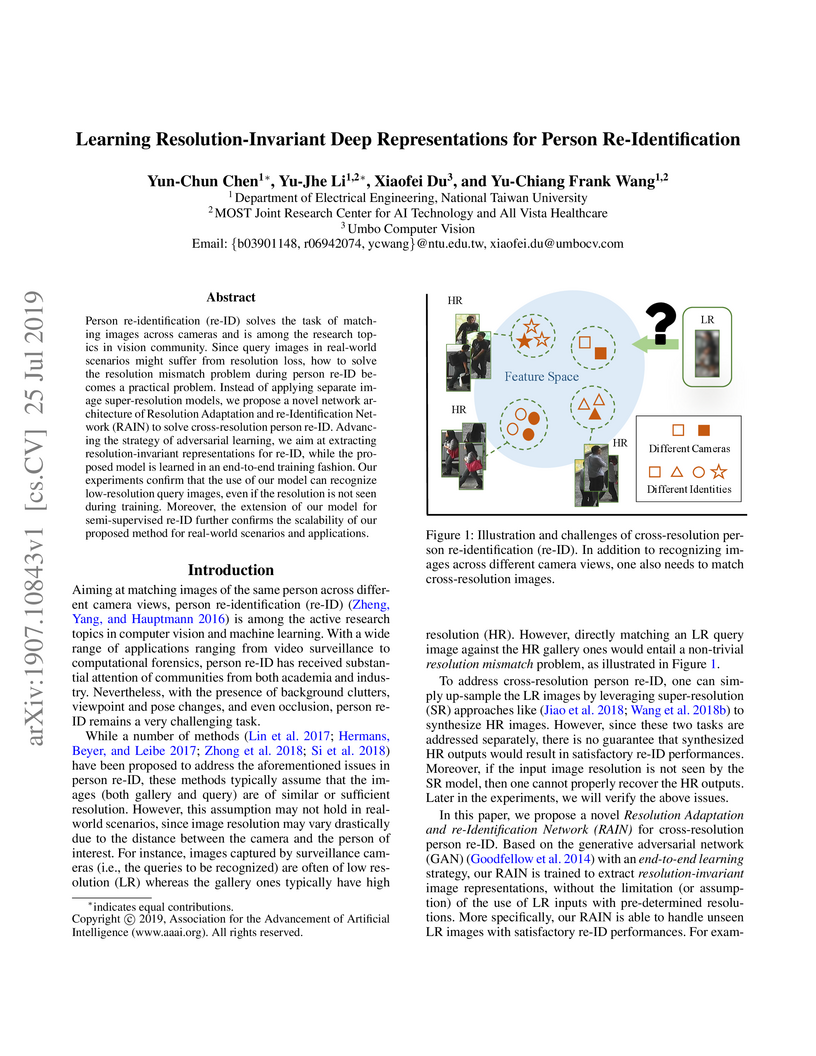

Person re-identification (re-ID) solves the task of matching images across

cameras and is among the research topics in vision community. Since query

images in real-world scenarios might suffer from resolution loss, how to solve

the resolution mismatch problem during person re-ID becomes a practical

problem. Instead of applying separate image super-resolution models, we propose

a novel network architecture of Resolution Adaptation and re-Identification

Network (RAIN) to solve cross-resolution person re-ID. Advancing the strategy

of adversarial learning, we aim at extracting resolution-invariant

representations for re-ID, while the proposed model is learned in an end-to-end

training fashion. Our experiments confirm that the use of our model can

recognize low-resolution query images, even if the resolution is not seen

during training. Moreover, the extension of our model for semi-supervised re-ID

further confirms the scalability of our proposed method for real-world

scenarios and applications.

Person re-identification (re-ID) aims at matching images of the same identity

across camera views. Due to varying distances between cameras and persons of

interest, resolution mismatch can be expected, which would degrade person re-ID

performance in real-world scenarios. To overcome this problem, we propose a

novel generative adversarial network to address cross-resolution person re-ID,

allowing query images with varying resolutions. By advancing adversarial

learning techniques, our proposed model learns resolution-invariant image

representations while being able to recover the missing details in

low-resolution input images. The resulting features can be jointly applied for

improving person re-ID performance due to preserving resolution invariance and

recovering re-ID oriented discriminative details. Our experiments on five

benchmark datasets confirm the effectiveness of our approach and its

superiority over the state-of-the-art methods, especially when the input

resolutions are unseen during training.

There are no more papers matching your filters at the moment.