active-learning

05 Dec 2025

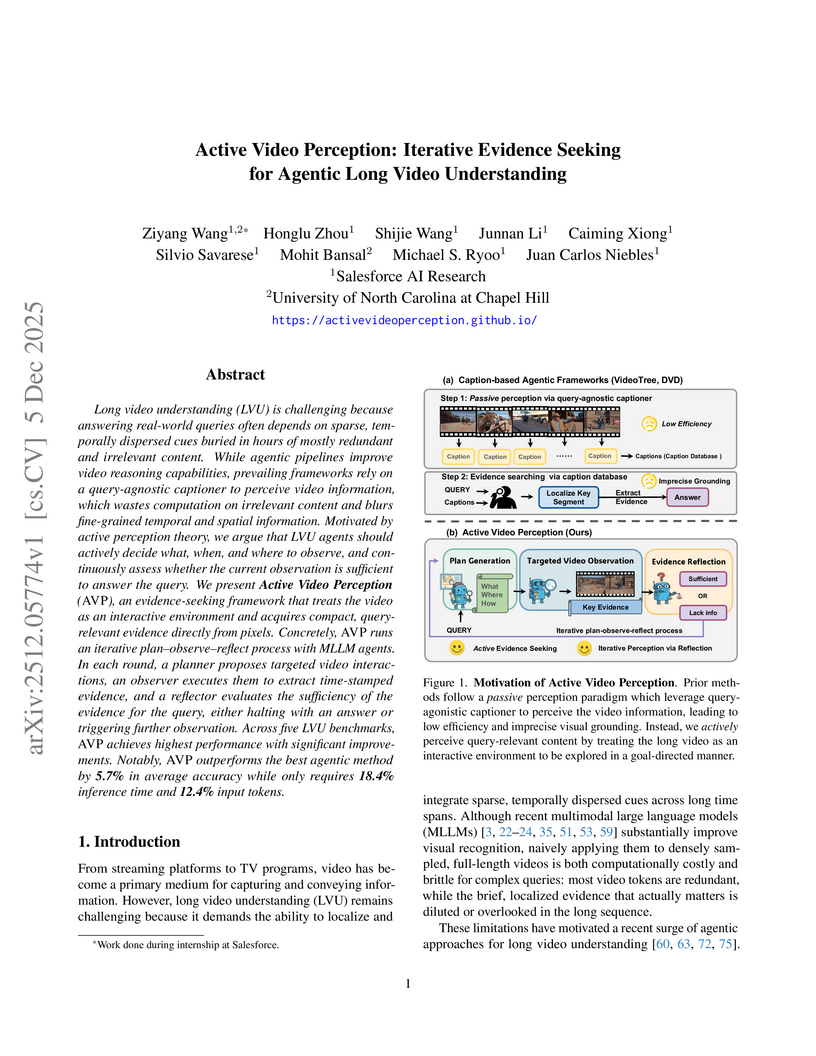

Salesforce AI Research and UNC Chapel Hill developed Active Video Perception (AVP), an iterative evidence-seeking framework for long video understanding that leverages MLLMs in a "Plan–Observe–Reflect" loop. AVP achieves state-of-the-art accuracy across five benchmarks while dramatically reducing inference time by 81.6% and token usage by 87.6% compared to prior agentic methods.

03 Dec 2025

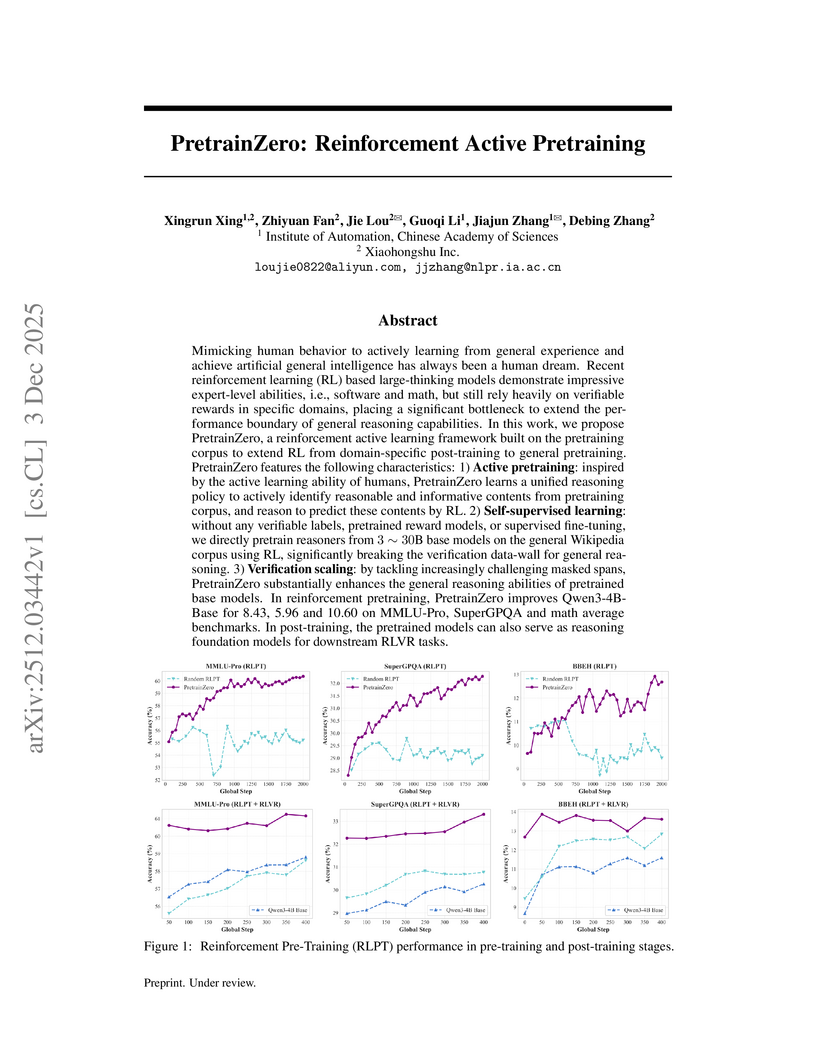

PretrainZero introduces a self-supervised reinforcement active pretraining framework that enables large language models to acquire general reasoning capabilities directly from real-world, noisy data like Wikipedia. This method achieved substantial performance improvements across diverse reasoning benchmarks, including MMLU-Pro and various math tasks, by allowing models to actively learn from self-generated, verifiable challenges.

Meyer et al. introduced a simplified active learning pipeline for 3D point cloud semantic segmentation, employing spatial columns for region separation and deep ensemble uncertainty for selection. This methodology reduces human annotation effort, quantified by a novel "annotated area" metric, and achieves competitive or improved mean Intersection-over-Union performance on urban and indoor datasets.

08 Dec 2025

When should an autonomous agent commit resources to a task? We introduce the Agent Capability Problem (ACP), a framework for predicting whether an agent can solve a problem under resource constraints. Rather than relying on empirical heuristics, ACP frames problem-solving as information acquisition: an agent requires $I_{\text{total}}$ bits to identify a solution and gains $I_{\text{step}}$ bits per action at cost $C_{\text{step}}$, yielding an effective cost $C_{\text{eff}} = (I_{\text{total}}/I_{\text{step}})\,C_{\text{step}}$ that predicts resource requirements before search. We prove that $C_{\text{eff}}$ lower-bounds expected cost and provide tight probabilistic upper bounds. Experimental validation shows that ACP predictions closely track actual agent performance, consistently bounding search effort while improving efficiency over greedy and random strategies. The framework generalizes across LLM-based and agentic workflows, linking principles from active learning, Bayesian optimization, and reinforcement learning through a unified information-theoretic lens.
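As a rough illustration of the effective-cost formula in the abstract above (total information needed, divided by information gained per action, times the cost per action), the arithmetic can be sketched as follows. The function name, the 1024-candidate scenario, and the numeric values are hypothetical and are not taken from the paper.

```python
import math


def effective_cost(i_total_bits: float, i_step_bits: float, c_step: float) -> float:
    """Effective cost: (bits needed / bits gained per action) * cost per action."""
    if i_step_bits <= 0:
        raise ValueError("information gain per action must be positive")
    return (i_total_bits / i_step_bits) * c_step


# Hypothetical scenario: one solution among 1024 equally likely candidates,
# so identifying it takes log2(1024) = 10 bits.
i_total = math.log2(1024)

# Assumed values: each action yields 0.5 bits and costs 2.0 units.
cost = effective_cost(i_total, i_step_bits=0.5, c_step=2.0)
print(cost)  # 40.0
```

Under these assumed numbers the agent would need about 20 actions at 2.0 units each, which is the kind of estimate the abstract says can be made before search begins.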

08 Dec 2025

Active learning improves annotation efficiency by selecting the most informative samples for annotation and model training. While most prior work has focused on selecting informative images for classification tasks, we investigate the more challenging setting of dense prediction, where annotations are more costly and time-intensive, especially in medical imaging. Region-level annotation has been shown to be more efficient than image-level annotation for these tasks. However, existing methods for representative annotation region selection suffer from high computational and memory costs, irrelevant region choices, and heavy reliance on uncertainty sampling. We propose decomposition sampling (DECOMP), a new active learning sampling strategy that addresses these limitations. It enhances annotation diversity by decomposing images into class-specific components using pseudo-labels and sampling regions from each class. Class-wise predictive confidence further guides the sampling process, ensuring that difficult classes receive additional annotations. Across ROI classification, 2-D segmentation, and 3-D segmentation, DECOMP consistently surpasses baseline methods by better sampling minority-class regions and boosting performance on these challenging classes. Code is in this https URL.

The continuous growth of the global human population is leading to the expansion of human habitats, resulting in decreasing wildlife spaces and increasing human-wildlife interactions. These interactions can range from minor disturbances, such as raccoons in urban waste bins, to more severe consequences, including species extinction. As a result, the monitoring of wildlife is gaining significance in various contexts. Artificial intelligence (AI) offers a solution by automating the recognition of animals in images and videos, thereby reducing the manual effort required for wildlife monitoring. Traditional AI training involves three main stages: image collection, labelling, and model training. However, the variability, for example, in the landscape (e.g., mountains, open fields, forests), weather (e.g., rain, fog, sunshine), lighting (e.g., day, night), and camera-animal distances presents significant challenges to model robustness and adaptability in real-world scenarios.

In this work, we propose a unified framework, called ShadowWolf, designed to address these challenges by integrating and optimizing the stages of AI model training and evaluation. The proposed framework enables dynamic model retraining to adjust to changes in environmental conditions and application requirements, thereby reducing labelling efforts and allowing for on-site model adaptation. This adaptive and unified approach enhances the accuracy and efficiency of wildlife monitoring systems, promoting more effective and scalable conservation efforts.

We consider an adaptive experiment for treatment choice and design a minimax and Bayes optimal adaptive experiment with respect to regret. Given binary treatments, the experimenter's goal is to choose the treatment with the highest expected outcome through an adaptive experiment, in order to maximize welfare. We consider adaptive experiments that consist of two phases, the treatment allocation phase and the treatment choice phase. The experiment starts with the treatment allocation phase, where the experimenter allocates treatments to experimental subjects to gather observations. During this phase, the experimenter can adaptively update the allocation probabilities using the observations obtained in the experiment. After the allocation phase, the experimenter proceeds to the treatment choice phase, where one of the treatments is selected as the best. For this adaptive experimental procedure, we propose an adaptive experiment that splits the treatment allocation phase into two stages, where we first estimate the standard deviations and then allocate each treatment proportionally to its standard deviation. We show that this experiment, often referred to as Neyman allocation, is minimax and Bayes optimal in the sense that its regret upper bounds exactly match the lower bounds that we derive. To show this optimality, we derive minimax and Bayes lower bounds for the regret using change-of-measure arguments. Then, we evaluate the corresponding upper bounds using the central limit theorem and large deviation bounds.
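The two-stage procedure described above (estimate standard deviations, then allocate in proportion to them) can be sketched in a few lines. This is a minimal sketch, not the paper's estimator: the function name, the pilot samples, the budget, and the rounding rule are all assumptions made for illustration.

```python
import statistics


def neyman_allocation(pilot_a, pilot_b, remaining_budget):
    """Split the remaining budget between two treatments in proportion
    to the sample standard deviations estimated from pilot observations."""
    sd_a = statistics.stdev(pilot_a)
    sd_b = statistics.stdev(pilot_b)
    total = sd_a + sd_b
    # If neither arm shows any variation, fall back to an even split.
    share_a = 0.5 if total == 0 else sd_a / total
    n_a = round(share_a * remaining_budget)
    return n_a, remaining_budget - n_a


# Stage 1 (hypothetical pilot outcomes): treatment A is noisier than B.
pilot_a = [1.0, 3.0, 5.0]   # sample standard deviation 2.0
pilot_b = [2.0, 2.5, 3.0]   # sample standard deviation 0.5

# Stage 2: allocate the remaining 100 subjects proportionally.
n_a, n_b = neyman_allocation(pilot_a, pilot_b, remaining_budget=100)
print(n_a, n_b)  # 80 20
```

The noisier treatment receives more subjects because its mean is harder to estimate at a given sample size.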

Despite advancements in machine learning for security, rule-based detection remains prevalent in Security Operations Centers due to the resource intensiveness and skill gap associated with ML solutions. While traditional rule-based methods offer efficiency, their rigidity leads to high false positives or negatives and requires continuous manual maintenance. This paper proposes a novel, two-stage hybrid framework to democratize ML-based threat detection. The first stage employs intentionally loose YARA rules for coarse-grained filtering, optimized for high recall. The second stage utilizes an ML classifier to filter out false positives from the first stage's output. To overcome data scarcity, the system leverages Simula, a seedless synthetic data generation framework, enabling security analysts to create high-quality training datasets without extensive data science expertise or pre-labeled examples. A continuous feedback loop incorporates real-time investigation results to adaptively tune the ML model, preventing rule degradation.

This proposed model with active learning has been rigorously tested for a prolonged time in a production environment spanning tens of thousands of systems. The system handles initial raw log volumes often reaching 250 billion events per day, significantly reducing them through filtering and ML inference to a handful of daily tickets for human investigation. Live experiments over an extended timeline demonstrate a general improvement in the model's precision over time due to the active learning feature. This approach offers a self-sustained, low-overhead, and low-maintenance solution, allowing security professionals to guide model learning as expert "teachers".

A common and effective means for improving language model capabilities involves finetuning a "student" language model's parameters on generations from a more proficient "teacher" model. Termed "synthetic data", these generations are often produced before any student finetuning, but some work has considered generating new synthetic samples as training progresses. This paper studies and advocates for the latter case, where data are generated in an iterative, closed-loop fashion that is guided by the current state of the student model. For a fixed budget of generated samples, or a budget in terms of compute spent querying a teacher, we show that this curation of finetuning data affords improved student performance over static generation. Further, while there have been several LLM-specific methods proposed that operate in this regime, we find that simple, inexpensive selection criteria from the active learning literature tend to be most performant. We validate these claims across four mathematical and logical reasoning datasets using four different small language models.

Despite the notable success of graph convolutional networks (GCNs) in skeleton-based action recognition, their performance often depends on large volumes of labeled data, which are frequently scarce in practical settings. To address this limitation, we propose a novel label-efficient GCN model. Our work makes two primary contributions. First, we develop a novel acquisition function that employs an adversarial strategy to identify a compact set of informative exemplars for labeling. This selection process balances representativeness, diversity, and uncertainty. Second, we introduce bidirectional and stable GCN architectures. These enhanced networks facilitate a more effective mapping between the ambient and latent data spaces, enabling a better understanding of the learned exemplar distribution. Extensive evaluations on two challenging skeleton-based action recognition benchmarks reveal significant improvements achieved by our label-efficient GCNs compared to prior work.

This research introduces DEAL-300K, a 300K-scale dataset specifically for localizing mask-free diffusion-based image manipulations, and proposes the Multi-Frequency Prompt Tuning (MFPT) framework. MFPT, which adapts frozen Vision Foundation Models with frequency-domain prompts, establishes new state-of-the-art performance, achieving 70.30% IoU on single-round edits and strong robustness against image degradations.

04 Dec 2025

Active learning (AL) accelerates scientific discovery by prioritizing the most informative experiments, but traditional machine learning (ML) models used in AL suffer from cold-start limitations and domain-specific feature engineering, restricting their generalizability. Large language models (LLMs) offer a new paradigm by leveraging their pretrained knowledge and universal token-based representations to propose experiments directly from text-based descriptions. Here, we introduce an LLM-based active learning framework (LLM-AL) that operates in an iterative few-shot setting and benchmark it against conventional ML models across four diverse materials science datasets. We explored two prompting strategies: one using concise numerical inputs suited for datasets with more compositional and structured features, and another using expanded descriptive text suited for datasets with more experimental and procedural features to provide additional context. Across all datasets, LLM-AL could reduce the number of experiments needed to reach top-performing candidates by over 70% and consistently outperformed traditional ML models. We found that LLM-AL performs broader and more exploratory searches while still reaching the optima with fewer iterations. We further examined the stability boundaries of LLM-AL given the inherent non-determinism of LLMs and found its performance to be broadly consistent across runs, within the variability range typically observed for traditional ML approaches. These results demonstrate that LLM-AL can serve as a generalizable alternative to conventional AL pipelines for more efficient and interpretable experiment selection and potential LLM-driven autonomous discovery.

We study the problem of learning hypergraphs with shortest-path queries (SP-queries), and present the first provably optimal online algorithm for a broad and natural class of hypertrees that we call orderly hypertrees. Our online algorithm can be transformed into a provably optimal offline algorithm. Orderly hypertrees can be positioned within the Fagin hierarchy of acyclic hypergraphs (well studied in database theory), and strictly encompass the broadest class in this hierarchy that is learnable with subquadratic SP-query complexity.

Recognizing that in some contexts, such as evolutionary tree reconstruction, distance measurements can degrade with increased distance, we also consider a learning model that uses bounded distance queries. In this model, we demonstrate asymptotically tight complexity bounds for learning general hypertrees.

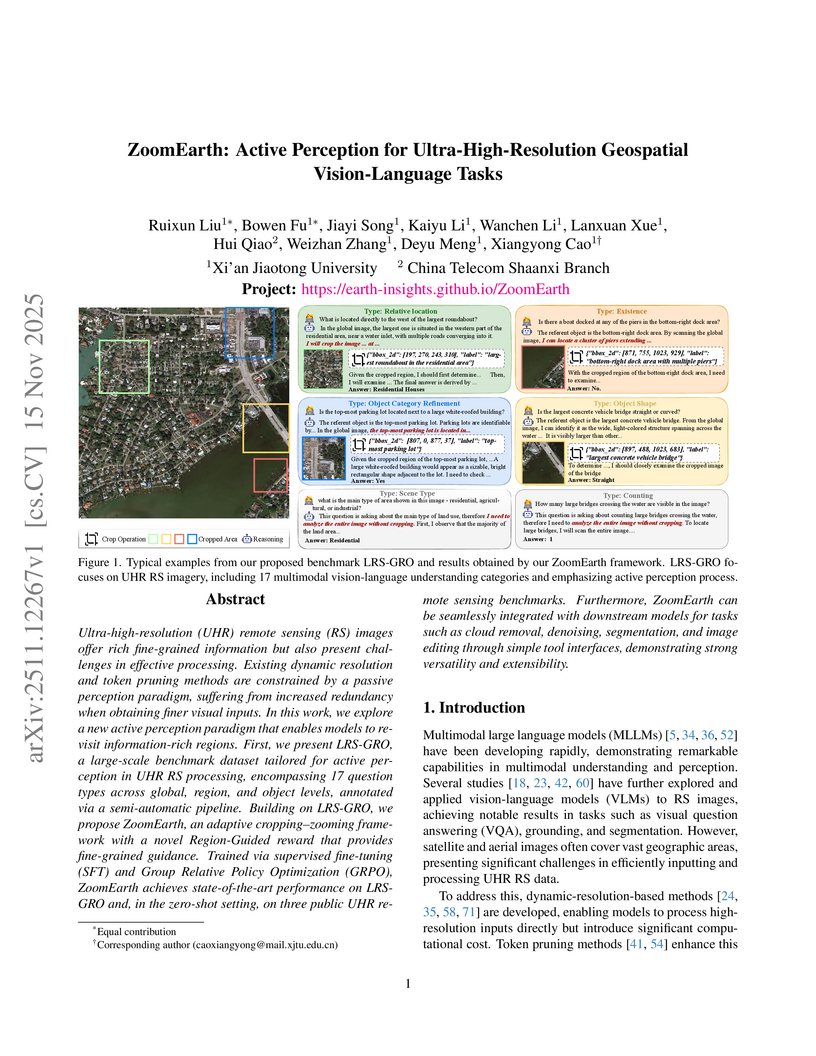

ZoomEarth presents an active perception framework enabling Vision-Language Models to dynamically identify and zoom into relevant regions within ultra-high-resolution remote sensing images. This approach achieves state-of-the-art performance on geospatial visual question answering tasks while enhancing processing efficiency by reducing redundant information handling.

29 Oct 2025

Skeleton-based human action recognition aims to classify human skeletal sequences, which are spatiotemporal representations of actions, into predefined categories. To reduce the reliance on costly annotations of skeletal sequences while maintaining competitive recognition accuracy, the task of 3D Action Recognition with Limited Training Samples, also known as semi-supervised 3D Action Recognition, has been proposed. In addition, active learning, which aims to proactively select the most informative unlabeled samples for annotation, has been explored in semi-supervised 3D Action Recognition for training sample selection. Specifically, researchers adopt an encoder-decoder framework to embed skeleton sequences into a latent space, where clustering information, combined with a margin-based selection strategy using a multi-head mechanism, is utilized to identify the most informative sequences in the unlabeled set for annotation. However, the most representative skeleton sequences may not necessarily be the most informative for the action recognizer, as the model may have already acquired similar knowledge from previously seen skeleton samples. To address this, we reformulate semi-supervised 3D action recognition via active learning from a novel perspective by casting it as a Markov Decision Process (MDP). Built upon the MDP framework and its training paradigm, we train an informative sample selection model to intelligently guide the selection of skeleton sequences for annotation. To enhance the representational capacity of the factors in the state-action pairs within our method, we project them from Euclidean space to hyperbolic space. Furthermore, we introduce a meta tuning strategy to accelerate the deployment of our method in real-world scenarios. Extensive experiments on three 3D action recognition benchmarks demonstrate the effectiveness of our method.

Preference learning from pairwise feedback is a widely adopted framework in applications such as reinforcement learning with human feedback and recommendations. In many practical settings, however, user interactions are limited or costly, making offline preference learning necessary. Moreover, real-world preference learning often involves users with different preferences. For example, annotators from different backgrounds may rank the same responses differently. This setting presents two central challenges: (1) identifying similarity across users to effectively aggregate data, especially under scenarios where offline data is imbalanced across dimensions, and (2) handling the imbalanced offline data where some preference dimensions are underrepresented. To address these challenges, we study the Offline Clustering of Preference Learning problem, where the learner has access to fixed datasets from multiple users with potentially different preferences and aims to maximize utility for a test user. To tackle the first challenge, we first propose Off-C2PL for the pure offline setting, where the learner relies solely on offline data. Our theoretical analysis provides a suboptimality bound that explicitly captures the tradeoff between sample noise and bias. To address the second challenge of imbalanced data, we extend our framework to the setting with active-data augmentation, where the learner is allowed to select a limited number of additional active-data samples for the test user based on the cluster structure learned by Off-C2PL. In this setting, our second algorithm, A2-Off-C2PL, actively selects samples that target the least-informative dimensions of the test user's preference. We prove that these actively collected samples contribute more effectively than offline ones. Finally, we validate our theoretical results through simulations on synthetic and real-world datasets.

We propose an adaptive sampling method for the training of Physics Informed Neural Networks (PINNs) which allows for sampling based on an arbitrary problem-specific heuristic which may depend on the network and its gradients. In particular we focus our analysis on the Allen-Cahn equations, attempting to accurately resolve the characteristic interfacial regions using a PINN without any post-hoc resampling. In experiments, we show the effectiveness of these methods over residual-adaptive frameworks.

We introduce active generation of Pareto sets (A-GPS), a new framework for online discrete black-box multi-objective optimization (MOO). A-GPS learns a generative model of the Pareto set that supports a-posteriori conditioning on user preferences. The method employs a class probability estimator (CPE) to predict non-dominance relations and to condition the generative model toward high-performing regions of the search space. We also show that this non-dominance CPE implicitly estimates the probability of hypervolume improvement (PHVI). To incorporate subjective trade-offs, A-GPS introduces preference direction vectors that encode user-specified preferences in objective space. At each iteration, the model is updated using both Pareto membership and alignment with these preference directions, producing an amortized generative model capable of sampling across the Pareto front without retraining. The result is a simple yet powerful approach that achieves high-quality Pareto set approximations, avoids explicit hypervolume computation, and flexibly captures user preferences. Empirical results on synthetic benchmarks and protein design tasks demonstrate strong sample efficiency and effective preference incorporation.
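The non-dominance relation that the abstract's class probability estimator is said to predict can be made concrete with a small helper. This sketch only computes non-dominance labels for a finite set of objective vectors. It assumes all objectives are maximized, and both the function name and the sample points are hypothetical. How A-GPS actually builds its training labels is not specified in the abstract.

```python
def non_dominated_mask(points):
    """Return one boolean per point: True if no other point dominates it.

    Assumes every objective is to be maximized. Point a dominates point b
    when a is at least as good in every objective and strictly better in one.
    """
    def dominates(a, b):
        return (all(x >= y for x, y in zip(a, b))
                and any(x > y for x, y in zip(a, b)))

    return [not any(dominates(q, p) for q in points) for p in points]


# Hypothetical evaluations of five candidates on two objectives.
points = [(1, 5), (2, 4), (1, 3), (3, 1), (2, 2)]
mask = non_dominated_mask(points)
print(mask)  # [True, True, False, True, False]
```

Here (1, 3) and (2, 2) are both dominated by (2, 4), so only the other three points lie on the empirical Pareto front.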

Obtaining high-quality labels for large datasets is expensive, requiring massive annotations from human experts. While AI models offer a cost-effective alternative by predicting labels, their label quality is compromised by the unavoidable labeling errors. Existing methods mitigate this issue through selective labeling, where AI labels a subset and humans label the remainder. However, these methods lack theoretical guarantees on the quality of AI-assigned labels, often resulting in unacceptably high labeling error within the AI-labeled subset. To address this, we introduce Conformal Labeling, a novel method to identify instances where AI predictions can be provably trusted. This is achieved by controlling the false discovery rate (FDR), the proportion of incorrect labels within the selected subset. In particular, we construct a conformal p-value for each test instance by comparing AI models' predicted confidence to those of calibration instances mislabeled by AI models. Then, we select test instances whose p-values are below a data-dependent threshold, certifying AI models' predictions as trustworthy. We provide theoretical guarantees that Conformal Labeling controls the FDR below the nominal level, ensuring that a predefined fraction of AI-assigned labels is correct on average. Extensive experiments demonstrate that our method achieves tight FDR control with high power across various tasks, including image and text labeling, and LLM QA.
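One plausible reading of the selection step above is sketched below. It is not the paper's exact construction. It assumes that the p-value for a test instance counts mislabeled calibration instances with confidence at least as high, normalized by the calibration size plus one, and that the data-dependent threshold is the Benjamini-Hochberg step-up rule. All names and numbers are hypothetical.

```python
def conformal_p_values(cal_conf, cal_correct, test_conf):
    """Assumed p-value: (1 + number of mislabeled calibration instances whose
    confidence is at least the test confidence) / (calibration size + 1)."""
    n = len(cal_conf)
    wrong = [c for c, ok in zip(cal_conf, cal_correct) if not ok]
    return [(1 + sum(1 for w in wrong if w >= s)) / (n + 1) for s in test_conf]


def benjamini_hochberg(p_values, alpha):
    """Indices selected by the Benjamini-Hochberg step-up rule at level alpha."""
    m = len(p_values)
    order = sorted(range(m), key=lambda j: p_values[j])
    k = 0
    for rank, j in enumerate(order, start=1):
        if p_values[j] <= alpha * rank / m:
            k = rank  # keep the largest rank that passes
    return sorted(order[:k])


# Hypothetical calibration data: AI confidence and whether its label was right.
cal_conf = [0.95, 0.9, 0.85, 0.8, 0.7, 0.6, 0.55, 0.5, 0.4]
cal_correct = [True, True, True, True, False, True, False, False, False]
test_conf = [0.99, 0.75, 0.65, 0.45]

p = conformal_p_values(cal_conf, cal_correct, test_conf)
trusted = benjamini_hochberg(p, alpha=0.25)
print(p)        # [0.1, 0.1, 0.2, 0.4]
print(trusted)  # [0, 1]
```

In this toy run the two most confident test instances keep their AI labels and the other two would go to human annotators.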

We develop an active inference route-planning method for the autonomous control of intelligent agents. The aim is to reconnoiter a geographical area to maintain a common operational picture. To achieve this, we construct an evidence map that reflects our current understanding of the situation, incorporating both positive and "negative" sensor observations of possible target objects collected over time, and diffusing the evidence across the map as time progresses. The generative model of active inference uses Dempster-Shafer theory and a Gaussian sensor model, which provides input to the agent. The generative process employs a Bayesian approach to update a posterior probability distribution. We calculate the variational free energy for all positions within the area by assessing the divergence between a pignistic probability distribution of the evidence map and a posterior probability distribution of a target object based on the observations, including the level of surprise associated with receiving new observations. Using the free energy, we direct the agents' movements in a simulation by taking an incremental step toward a position that minimizes the free energy. This approach addresses the challenge of exploration and exploitation, allowing agents to balance searching extensive areas of the geographical map while tracking identified target objects.
