string

02 Sep 2025

California Institute of Technology

California Institute of Technology University of VirginiaNational Radio Astronomy ObservatoryJet Propulsion LaboratoryCentro de Astrobiología (CAB)IPACCSIC-INTAUniversity of ToledoitemstypedescriptionCentro de Astrobiolog

I have to be careful with the context provided, which seems to include a schema definition twice. The critical instruction isarrayArray of organization names where the research was directly involved by the organizationstring

University of VirginiaNational Radio Astronomy ObservatoryJet Propulsion LaboratoryCentro de Astrobiología (CAB)IPACCSIC-INTAUniversity of ToledoitemstypedescriptionCentro de Astrobiolog

I have to be careful with the context provided, which seems to include a schema definition twice. The critical instruction isarrayArray of organization names where the research was directly involved by the organizationstringThe integrated luminosity from the features of the polycyclic aromatic hydrocarbons (PAHs) exceeds the luminosity from atomic and molecular emission lines in the star-forming regions in galaxies and is a potential tracer of galaxy-scale star formation and molecular gas content of the high-redshift universe. We simulate the observable PAH spectra using the PRobe far-Infrared Mission for Astrophysics far-infrared enhanced survey spectrometer (FIRESS) and investigate the capability of the FIRESS low-resolution spectroscopy for observing PAH emission spectrum from high-redshift galaxies. Our investigation suggests that (1) PRIMA observations of PAH emission are ≳10 times more efficient at detecting galaxies than the VLA observations of CO(1-0) for galaxies with the same infrared luminosity, (2) PRIMA/FIRESS can detect the PAH emission from galaxies with LIR∼1012L⊙ up to the end of reionization (and possibly beyond, if LIR∼1013L⊙), (3) the PAH band ratios measured from a full spectral fitting and from a simple flux "clipping" method are different and vary depending on the interstellar radiation field strength, and (4) PRIMA/FIRESS can also be used as the PAH mapping instrument to measure star formation and redshift of the galaxies in high-redshift protoclusters.

03 Mar 2025

Renmin University of China

Renmin University of China Peking UniversityitemstypedescriptionpropertiesarrayArray of organization names where the research was directly involved by the organizationstringHuawei Noah

About the tutorial on improving retrospective language agents via joint policy gradient optimization: The research paperdescriptionSchemaexample

Peking UniversityitemstypedescriptionpropertiesarrayArray of organization names where the research was directly involved by the organizationstringHuawei Noah

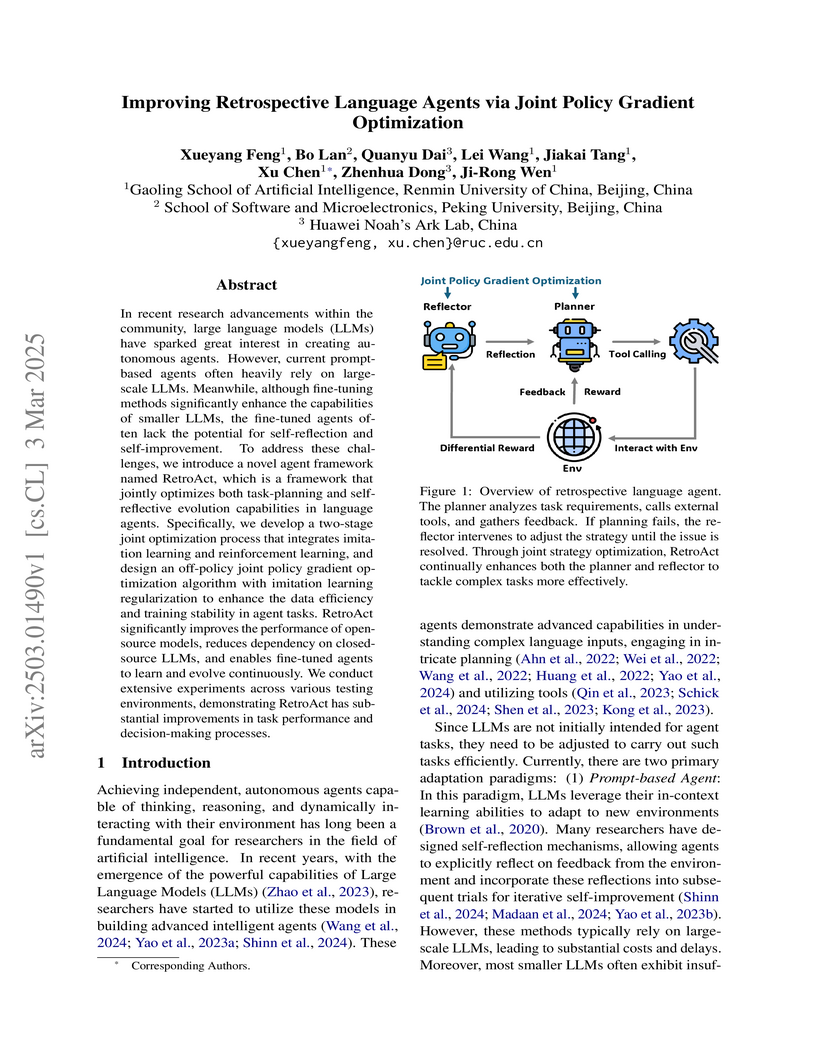

About the tutorial on improving retrospective language agents via joint policy gradient optimization: The research paperdescriptionSchemaexampleIn recent research advancements within the community, large language models (LLMs) have sparked great interest in creating autonomous agents. However, current prompt-based agents often heavily rely on large-scale LLMs. Meanwhile, although fine-tuning methods significantly enhance the capabilities of smaller LLMs, the fine-tuned agents often lack the potential for self-reflection and self-improvement. To address these challenges, we introduce a novel agent framework named RetroAct, which is a framework that jointly optimizes both task-planning and self-reflective evolution capabilities in language agents. Specifically, we develop a two-stage joint optimization process that integrates imitation learning and reinforcement learning, and design an off-policy joint policy gradient optimization algorithm with imitation learning regularization to enhance the data efficiency and training stability in agent tasks. RetroAct significantly improves the performance of open-source models, reduces dependency on closed-source LLMs, and enables fine-tuned agents to learn and evolve continuously. We conduct extensive experiments across various testing environments, demonstrating RetroAct has substantial improvements in task performance and decision-making processes.

There are no more papers matching your filters at the moment.