Building 4.0 CRC

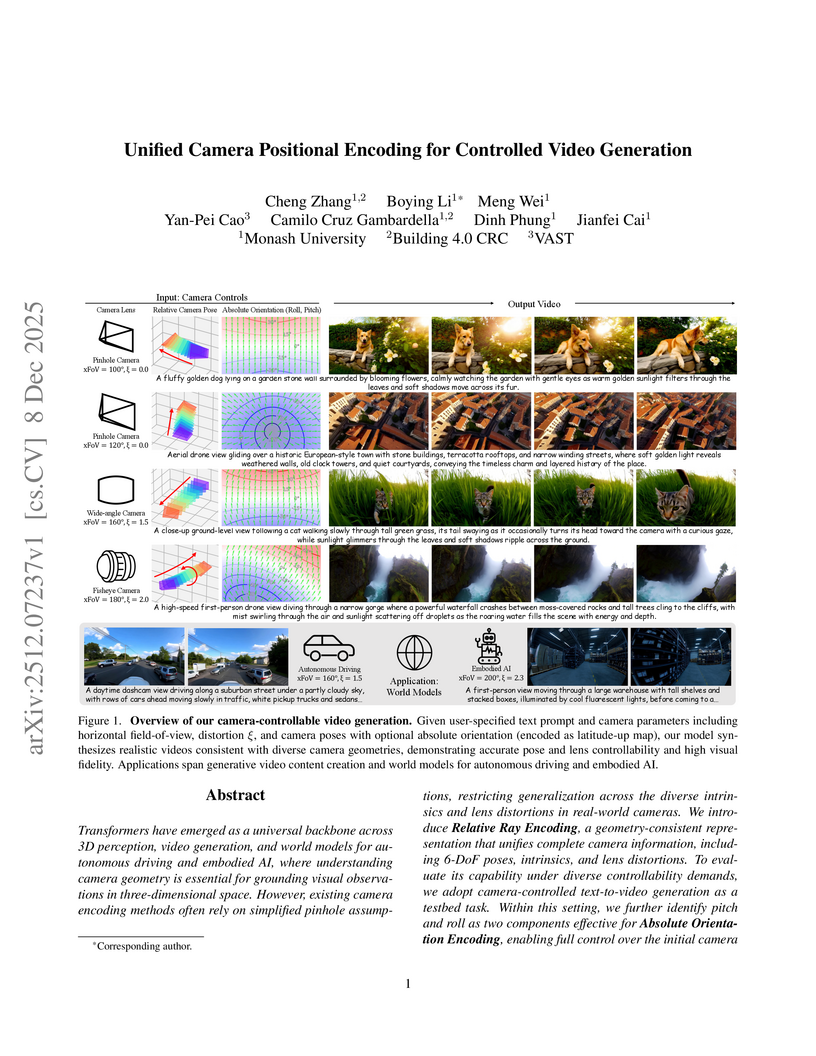

This work from Monash University introduces Unified Camera Positional Encoding (UCPE), a framework that enables fine-grained control over diverse camera geometries, including 6-DoF poses, intrinsics, and lens distortions, in video generation. UCPE integrates into Diffusion Transformers using a lightweight adapter, leading to superior control accuracy in lens, absolute orientation, and relative pose, while maintaining high visual fidelity.

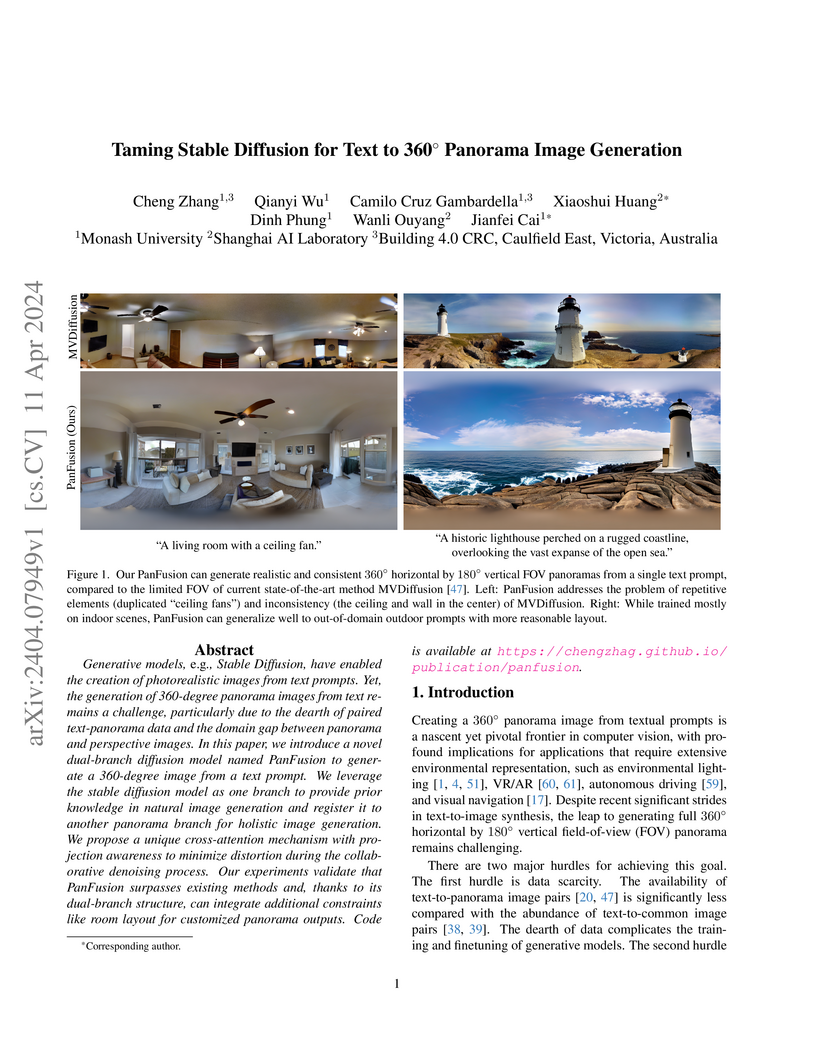

PanFusion, a dual-branch diffusion model from Monash University, Shanghai AI Laboratory, and Building 4.0 CRC, generates 360-degree panoramic images from text by integrating global panoramic context with local perspective view generation, addressing issues like loop inconsistency and repetitive elements. The model demonstrates superior consistency and quality, achieving a FAED of 6.04 and improving 3D IoU to 68.46 for layout conditioning.

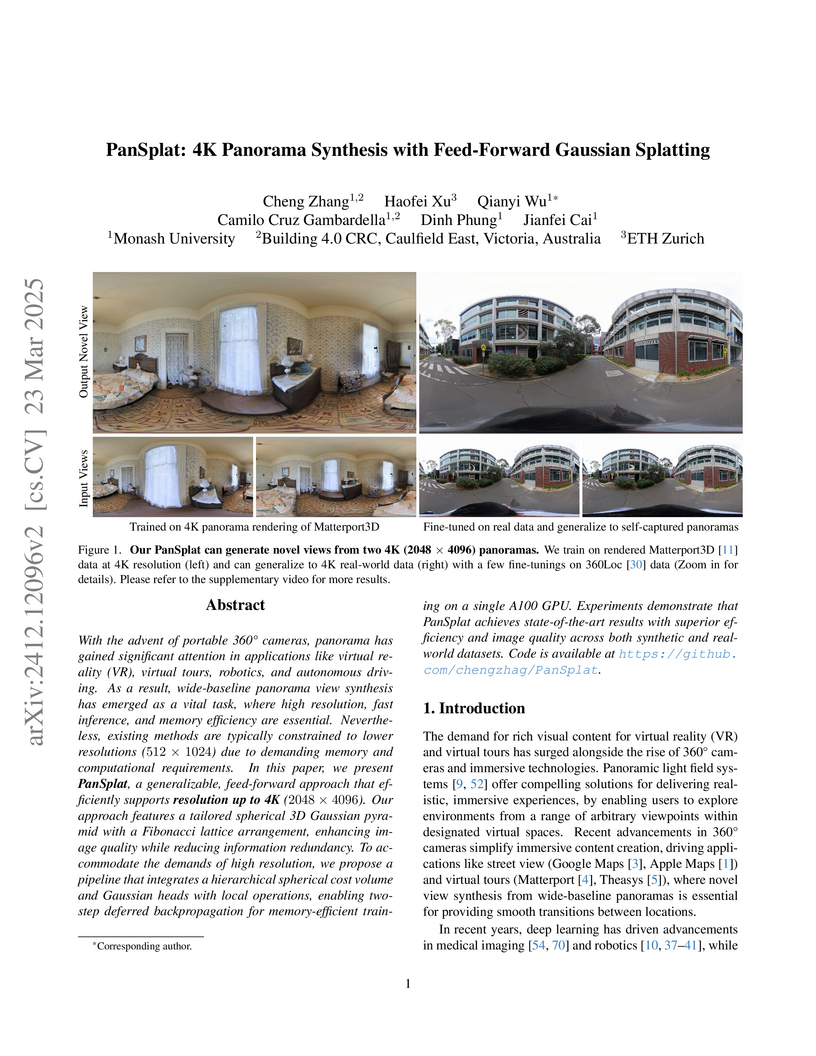

With the advent of portable 360° cameras, panorama has gained significant attention in applications like virtual reality (VR), virtual tours, robotics, and autonomous driving. As a result, wide-baseline panorama view synthesis has emerged as a vital task, where high resolution, fast inference, and memory efficiency are essential. Nevertheless, existing methods are typically constrained to lower resolutions (512 × 1024) due to demanding memory and computational requirements. In this paper, we present PanSplat, a generalizable, feed-forward approach that efficiently supports resolution up to 4K (2048 × 4096). Our approach features a tailored spherical 3D Gaussian pyramid with a Fibonacci lattice arrangement, enhancing image quality while reducing information redundancy. To accommodate the demands of high resolution, we propose a pipeline that integrates a hierarchical spherical cost volume and Gaussian heads with local operations, enabling two-step deferred backpropagation for memory-efficient training on a single A100 GPU. Experiments demonstrate that PanSplat achieves state-of-the-art results with superior efficiency and image quality across both synthetic and real-world datasets.

Code is available at this https URL
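To illustrate the Fibonacci lattice arrangement mentioned in the PanSplat abstract, here is a minimal sketch of the standard spherical Fibonacci lattice, which spreads points nearly uniformly over a unit sphere. It is not taken from the PanSplat code; the function name `fibonacci_sphere` and the half-step offset used for the heights are assumptions made for illustration only.

```python
import math


def fibonacci_sphere(n):
    """Return n roughly evenly spaced unit vectors (x, y, z) on a sphere.

    Hypothetical helper for illustration; not PanSplat's implementation.
    """
    if n <= 0:
        raise ValueError("n must be positive")
    # Golden angle in radians: successive points rotate by this much in azimuth.
    golden_angle = math.pi * (3.0 - math.sqrt(5.0))
    points = []
    for i in range(n):
        # Heights are evenly spaced strictly inside (-1, 1), so every point
        # covers an equal-area band of the sphere.
        z = 1.0 - (2.0 * i + 1.0) / n
        radius = math.sqrt(1.0 - z * z)  # radius of the circle at height z
        theta = golden_angle * i
        points.append((radius * math.cos(theta), radius * math.sin(theta), z))
    return points


if __name__ == "__main__":
    for p in fibonacci_sphere(5):
        print(tuple(round(c, 3) for c in p))
```

Unlike a regular equirectangular grid, which packs samples densely near the poles, this layout gives each point about the same share of the sphere's surface. That matches the abstract's stated aim of reducing information redundancy.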

PanFlow presents a framework that generates 360-degree videos from a single input image with precise optical flow-conditioned motion control. The method employs a decoupled motion control mechanism and specialized spherical consistency techniques, resulting in improved video quality and motion fidelity compared to existing approaches.

Together with industry experts, we are exploring the potential of head-mounted augmented reality to facilitate safety inspections on high-rise construction sites. A particular concern in the industry is inspecting perimeter safety screens on higher levels of construction sites, intended to prevent falls of people and objects. We aim to support workers performing this inspection task by tracking which parts of the safety screens have been inspected. We use machine learning to automatically detect gaps in the perimeter screens that require closer inspection and remediation and to automate reporting. This work-in-progress paper describes the problem, our early progress, concerns around worker privacy, and the possibilities to mitigate these.
