Centre for RoboticsMines Paris - PSL

23 Feb 2018

The Simultaneous Localization And Mapping (SLAM) problem has been well

studied in the robotics community, especially using mono, stereo cameras or

depth sensors. 3D depth sensors, such as Velodyne LiDAR, have proved in the

last 10 years to be very useful to perceive the environment in autonomous

driving, but few methods exist that directly use these 3D data for odometry. We

present a new low-drift SLAM algorithm based only on 3D LiDAR data. Our method

relies on a scan-to-model matching framework. We first have a specific sampling

strategy based on the LiDAR scans. We then define our model as the previous

localized LiDAR sweeps and use the Implicit Moving Least Squares (IMLS) surface

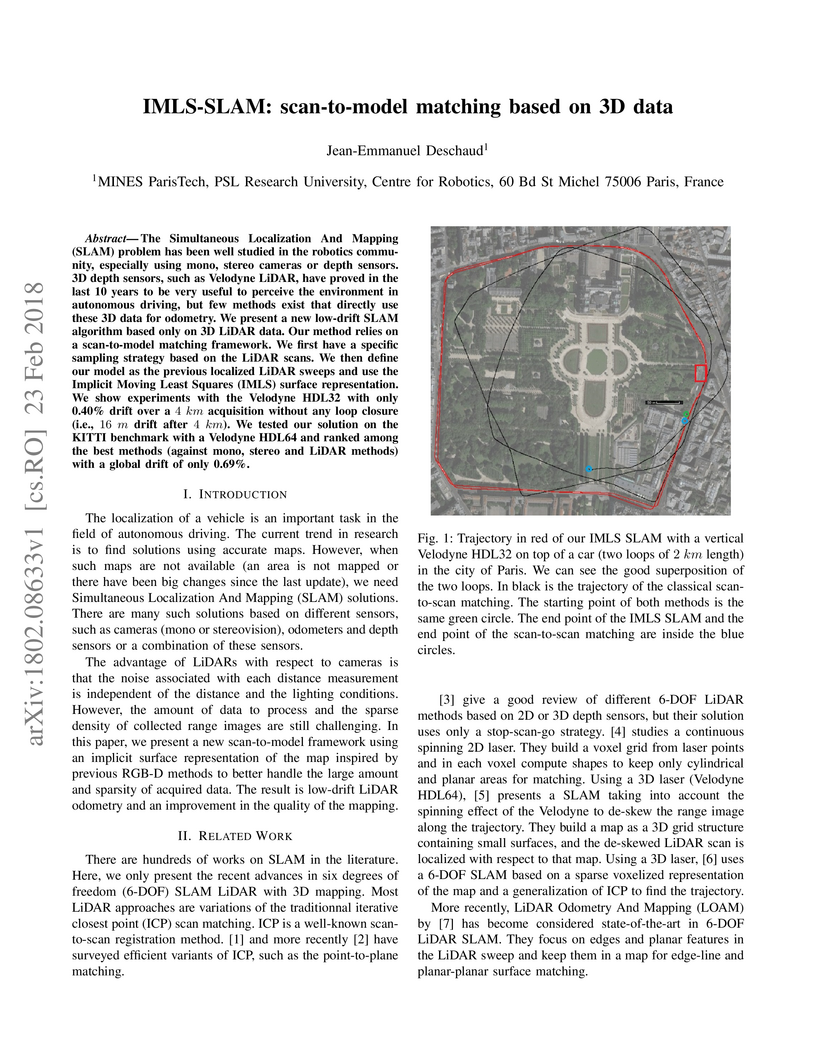

representation. We show experiments with the Velodyne HDL32 with only 0.40%

drift over a 4 km acquisition without any loop closure (i.e., 16 m drift after

4 km). We tested our solution on the KITTI benchmark with a Velodyne HDL64 and

ranked among the best methods (against mono, stereo and LiDAR methods) with a

global drift of only 0.69%.

Transfer learning is a proven technique in 2D computer vision to leverage the

large amount of data available and achieve high performance with datasets

limited in size due to the cost of acquisition or annotation. In 3D, annotation

is known to be a costly task; nevertheless, pre-training methods have only

recently been investigated. Due to this cost, unsupervised pre-training has

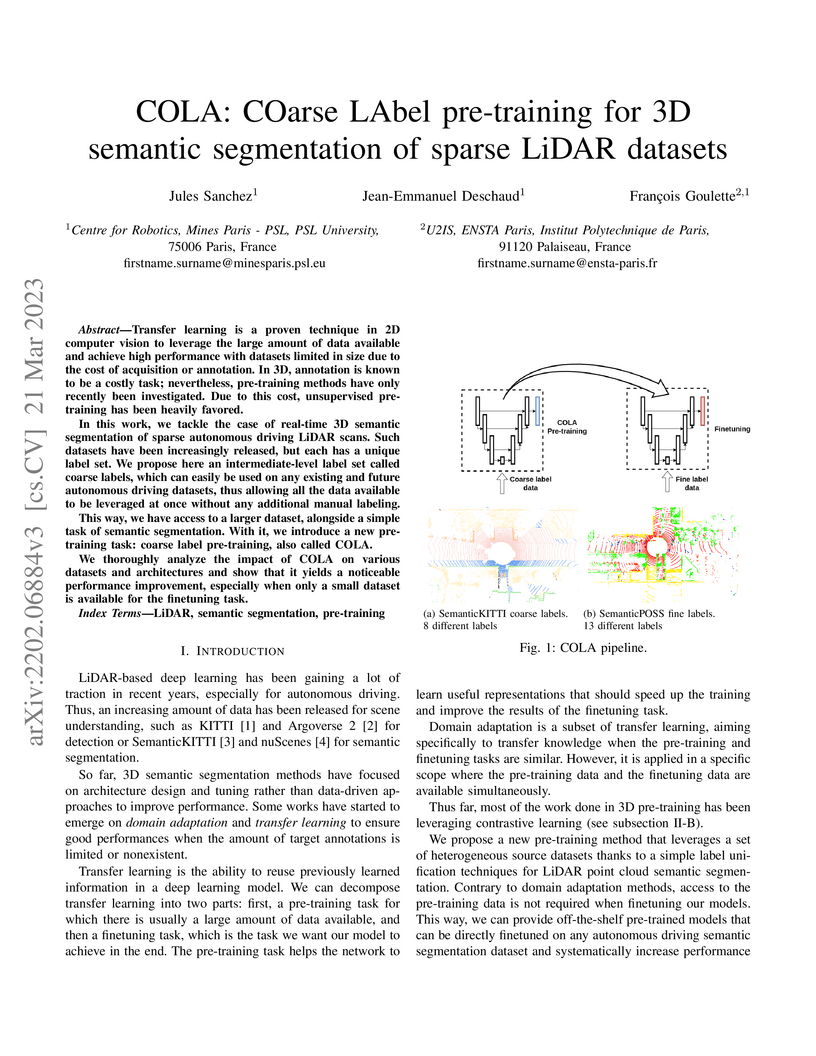

been heavily favored. In this work, we tackle the case of real-time 3D semantic

segmentation of sparse autonomous driving LiDAR scans. Such datasets have been

increasingly released, but each has a unique label set. We propose here an

intermediate-level label set called coarse labels, which can easily be used on

any existing and future autonomous driving datasets, thus allowing all the data

available to be leveraged at once without any additional manual labeling. This

way, we have access to a larger dataset, alongside a simple task of semantic

segmentation. With it, we introduce a new pre-training task: coarse label

pre-training, also called COLA. We thoroughly analyze the impact of COLA on

various datasets and architectures and show that it yields a noticeable

performance improvement, especially when only a small dataset is available for

the finetuning task.

This paper develops probabilistic PV forecasters by taking advantage of recent breakthroughs in deep learning. It tailored forecasting tool, named encoder-decoder, is implemented to compute intraday multi-output PV quantiles forecasts to efficiently capture the time correlation. The models are trained using quantile regression, a non-parametric approach that assumes no prior knowledge of the probabilistic forecasting distribution. The case study is composed of PV production monitored on-site at the University of Liège (ULiège), Belgium. The weather forecasts from the regional climate model provided by the Laboratory of Climatology are used as inputs of the deep learning models. The forecast quality is quantitatively assessed by the continuous ranked probability and interval scores. The results indicate this architecture improves the forecast quality and is computationally efficient to be incorporated in an intraday decision-making tool for robust optimization.

There are no more papers matching your filters at the moment.