Researchers at ETH Zürich used a reinforcement learning agent to investigate how feedback influences skill acquisition in a complex physical fluid system. Their work demonstrated that learning high-performance skills, particularly those involving non-minimum phase dynamics, can require substantially richer sensory information during training than is necessary for their execution.

Lightweight, real-time text-to-speech systems are crucial for accessibility. However, the most efficient TTS models often rely on lightweight phonemizers that struggle with context-dependent challenges. In contrast, more advanced phonemizers with a deeper linguistic understanding typically incur high computational costs, which prevents real-time performance.

This paper examines the trade-off between phonemization quality and inference speed in G2P-aided TTS systems, introducing a practical framework to bridge this gap. We propose lightweight strategies for context-aware phonemization and a service-oriented TTS architecture that executes these modules as independent services. This design decouples heavy context-aware components from the core TTS engine, effectively breaking the latency barrier and enabling real-time use of high-quality phonemization models. Experimental results confirm that the proposed system improves pronunciation soundness and linguistic accuracy while maintaining real-time responsiveness, making it well-suited for offline and end-device TTS applications.

A two-stage self-supervised framework integrates the Joint-Embedding Predictive Architecture (JEPA) with Density Adaptive Attention Mechanisms (DAAM) to learn robust speech representations. This approach generates efficient, reversible discrete speech tokens at an ultra-low rate of 47.5 tokens/sec, designed for seamless integration with large language models.

08 Dec 2025

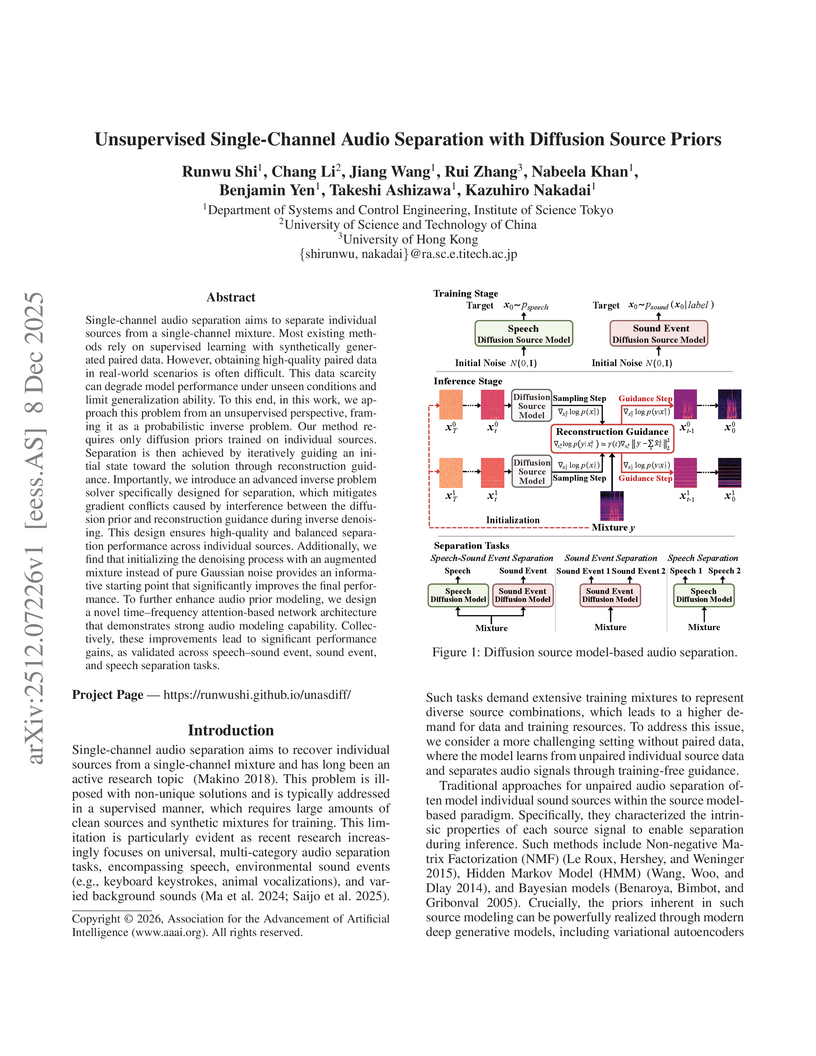

An unsupervised framework for single-channel audio separation, developed at the Institute of Science Tokyo, employs diffusion models as source priors for disentangling sound sources from a single-microphone recording. The method incorporates a novel hybrid guidance schedule and noise-augmented mixture initialization, achieving separation quality comparable to the supervised Conv-TasNet model and outperforming previous unsupervised methods without requiring paired training data.

MPDiffuser is a model-based diffusion framework that addresses dynamic infeasibility in offline decision-making by employing an alternating sampling scheme between a planner and a forward dynamics model. This approach generates dynamically feasible, task-aligned, and constraint-compliant trajectories, demonstrating improved performance across D4RL and DSRL benchmarks and successful real-world robot deployment.

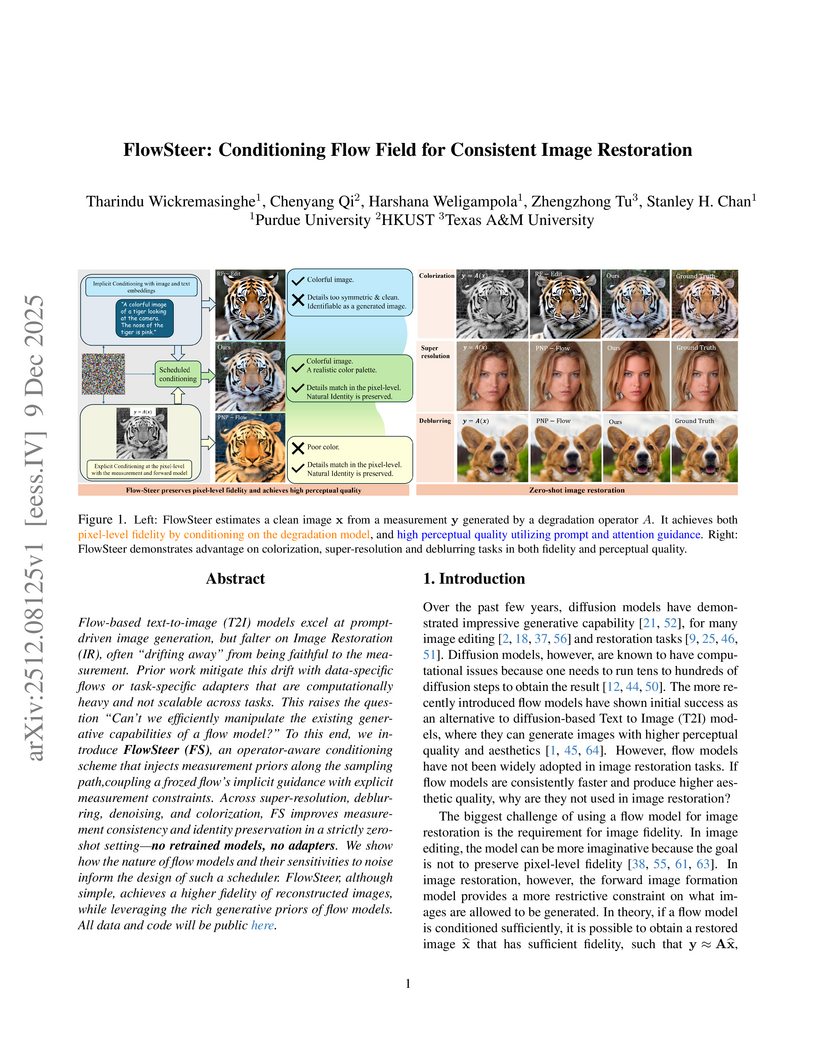

Flow-based text-to-image (T2I) models excel at prompt-driven image generation but falter on Image Restoration (IR), often "drifting away" from being faithful to the measurement. Prior work mitigates this drift with data-specific flows or task-specific adapters that are computationally heavy and not scalable across tasks. This raises the question "Can't we efficiently manipulate the existing generative capabilities of a flow model?" To this end, we introduce FlowSteer (FS), an operator-aware conditioning scheme that injects measurement priors along the sampling path, coupling a frozen flow's implicit guidance with explicit measurement constraints. Across super-resolution, deblurring, denoising, and colorization, FS improves measurement consistency and identity preservation in a strictly zero-shot setting: no retrained models, no adapters. We show how the nature of flow models and their sensitivities to noise inform the design of such a scheduler. FlowSteer, although simple, achieves a higher fidelity of reconstructed images, while leveraging the rich generative priors of flow models.

Audio deepfakes have reached a level of realism that makes it increasingly difficult to distinguish between human and artificial voices, which poses risks such as identity theft or the spread of disinformation. Despite these concerns, research on humans' ability to identify deepfakes is limited, with most studies focusing on English and very few exploring the reasons behind listeners' perceptual decisions. This study addresses this gap through a perceptual experiment in which 54 listeners (28 native Spanish speakers and 26 native Japanese speakers) classified voices as natural or synthetic and justified their choices. The experiment included 80 stimuli (50% artificial), organized according to three variables: language (Spanish/Japanese), speech style (audiobooks/interviews), and familiarity with the voice (familiar/unfamiliar). The goal was to examine how these variables influence detection and to analyze qualitatively the reasoning behind listeners' perceptual decisions. Results indicate an average accuracy of 59.11%, with higher performance on authentic samples. Judgments of vocal naturalness rely on a combination of linguistic and non-linguistic cues. Comparing Japanese and Spanish listeners, our qualitative analysis further reveals both shared cues and notable cross-linguistic differences in how listeners conceptualize the "humanness" of speech. Overall, participants relied primarily on suprasegmental and higher-level or extralinguistic characteristics (such as intonation, rhythm, fluency, pauses, speed, breathing, and laughter) over segmental features. These findings underscore the complexity of human perceptual strategies in distinguishing natural from artificial speech and align partly with prior research emphasizing the importance of prosody and phenomena typical of spontaneous speech, such as disfluencies.

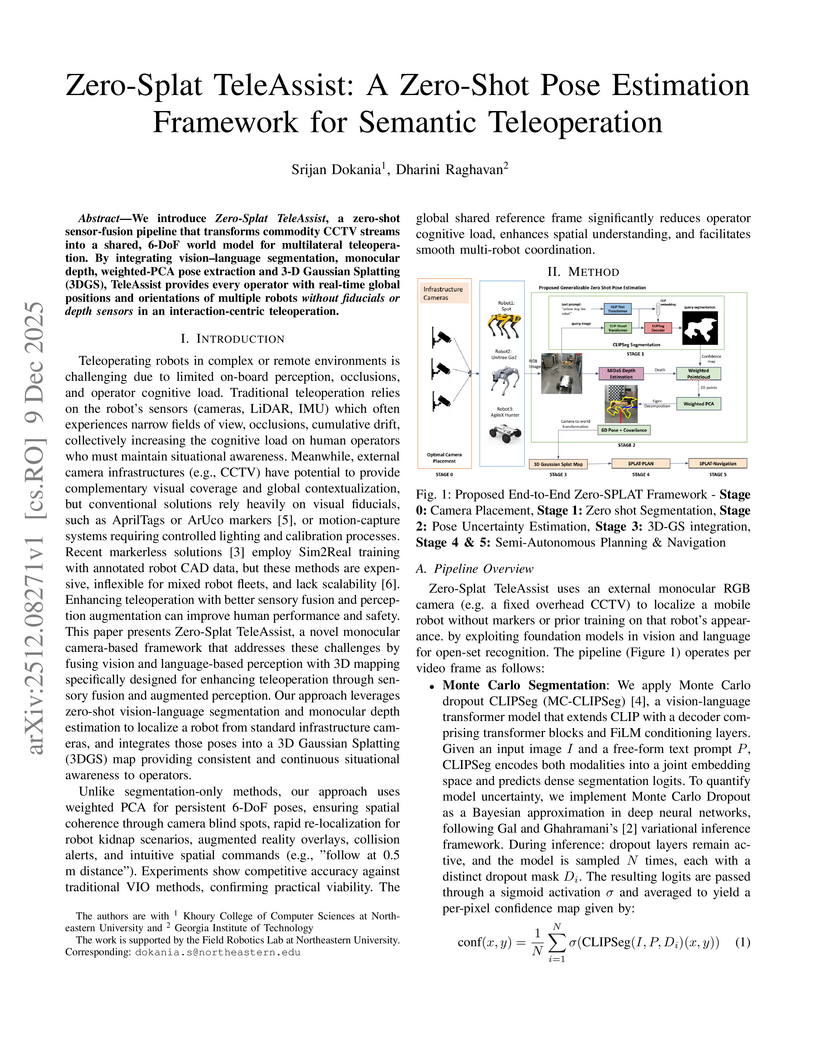

Zero-Splat TeleAssist introduces a zero-shot pose estimation framework for semantic teleoperation that utilizes commodity CCTV streams and advanced AI models to provide real-time 6-DoF robot poses. This system reduces operator cognitive load by 27% and task completion time by 32% in human-robot interaction tasks.

Researchers at HTWG Konstanz and the University of Freiburg developed a Gauss-Newton accelerated Model Predictive Path Integral (MPPI) control method that enhances the scalability and convergence speed of sampling-based optimal control. This approach integrates Jacobian reconstruction via Gaussian smoothing with a Generalized Gauss-Newton method, resulting in up to 9 times fewer iterations in numerical experiments while maintaining robustness for black-box and nonsmooth dynamics.
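The Jacobian-reconstruction idea mentioned above can be illustrated with a generic Gaussian-smoothing estimator. This is a minimal sketch, not the authors' implementation: the function name `smoothed_jacobian`, the smoothing width `sigma`, and the sample count are all assumptions chosen for illustration. It only queries the function values, so it also applies to black-box or nonsmooth maps.

```python
import numpy as np

def smoothed_jacobian(f, x, sigma=0.1, num_samples=2000, seed=0):
    """Monte Carlo estimate of the Jacobian of the Gaussian-smoothed f at x.

    Hypothetical helper for illustration; uses only evaluations of f.
    """
    rng = np.random.default_rng(seed)
    x = np.asarray(x, dtype=float)
    eps = rng.standard_normal((num_samples, x.size))
    f0 = np.asarray(f(x), dtype=float)
    # Subtracting f(x) acts as a baseline: it lowers variance without adding bias.
    diffs = np.stack([np.asarray(f(x + sigma * e), dtype=float) - f0 for e in eps])
    # J is approximated by E[(f(x + sigma*eps) - f(x)) eps^T] / sigma
    return diffs.T @ eps / (num_samples * sigma)

# A map with a nonsmooth term (absolute value), evaluated away from the kink.
f = lambda x: np.array([np.abs(x[0]) + x[1], x[0] * x[1]])
print(smoothed_jacobian(f, [1.0, 2.0]))
```

For comparison, the analytic Jacobian of this toy map at (1, 2) is [[1, 1], [2, 1]]; the estimate is noisy and approaches it only as the number of samples grows.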

We present a novel approach to EEG decoding for non-invasive brain-machine interfaces (BMIs), with a focus on motor-behavior classification. While conventional convolutional architectures such as EEGNet and DeepConvNet are effective in capturing local spatial patterns, they are markedly less suited for modeling long-range temporal dependencies and nonlinear dynamics. To address this limitation, we integrate an Echo State Network (ESN), a prominent paradigm in reservoir computing, into the decoding pipeline. ESNs construct a high-dimensional, sparsely connected recurrent reservoir that excels at tracking temporal dynamics, thereby complementing the spatial representational power of CNNs. Evaluated on a skateboard-trick EEG dataset preprocessed via the PREP pipeline and implemented in MNE-Python, our ESNNet achieves 83.2% within-subject and 51.3% leave-one-subject-out (LOSO) accuracies, surpassing widely used CNN-based baselines. Code is available at this https URL
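A sparsely connected recurrent reservoir of the kind described above can be sketched in a few lines. This is a generic leaky echo state network under assumed hyperparameters (`n_units`, `spectral_radius`, `density`, `leak` are illustrative choices), not the ESNNet architecture itself; in a typical ESN only a readout trained on the collected states is learned, while the reservoir weights stay fixed.

```python
import numpy as np

def make_reservoir(n_inputs, n_units=100, spectral_radius=0.9, density=0.1, seed=0):
    """Build fixed random input and recurrent weights (hypothetical settings)."""
    rng = np.random.default_rng(seed)
    w_in = rng.uniform(-0.5, 0.5, (n_units, n_inputs))
    w = rng.uniform(-0.5, 0.5, (n_units, n_units))
    w *= rng.random((n_units, n_units)) < density  # keep roughly `density` of the links
    # Rescale so the largest eigenvalue magnitude equals spectral_radius.
    w *= spectral_radius / np.max(np.abs(np.linalg.eigvals(w)))
    return w_in, w

def run_reservoir(w_in, w, inputs, leak=0.3):
    """Drive the reservoir with a (time, channels) array and collect its states."""
    state = np.zeros(w.shape[0])
    states = []
    for u in inputs:
        pre_activation = w_in @ u + w @ state
        state = (1 - leak) * state + leak * np.tanh(pre_activation)
        states.append(state.copy())
    return np.array(states)

# Toy input standing in for a short multichannel signal (random, not EEG data).
signal = np.random.default_rng(1).standard_normal((200, 8))
w_in, w = make_reservoir(n_inputs=8)
print(run_reservoir(w_in, w, signal).shape)
```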

Learned image reconstruction has become a pillar in computational imaging and inverse problems. Among the most successful approaches are learned iterative networks, which are formulated by unrolling classical iterative optimisation algorithms for solving variational problems. While the underlying algorithm is usually formulated in the functional analytic setting, learned approaches are often viewed as purely discrete. In this chapter we present a unified operator view for learned iterative networks. Specifically, we formulate a learned reconstruction operator, defining how to compute, and separately the learning problem, which defines what to compute. In this setting we present common approaches and show that many approaches are closely related in their core. We review linear as well as nonlinear inverse problems in this framework and present a short numerical study to conclude.

Expectation Propagation (EP) is a widely used message-passing algorithm that decomposes a global inference problem into multiple local ones. It approximates marginal distributions (beliefs) using intermediate functions (messages). While beliefs must be proper probability distributions that integrate to one, messages may have infinite integral values. In Gaussian-projected EP, such messages take a Gaussian form and appear as if they have "negative" variances. Although allowed within the EP framework, these negative-variance messages can impede algorithmic progress.

In this paper, we investigate EP in linear models and analyze the relationship between the corresponding beliefs. Based on the analysis, we propose both non-persistent and persistent approaches that prevent the algorithm from being blocked by messages with infinite integral values.

Furthermore, by examining the relationship between the EP messages in linear models, we develop an additional approach that avoids the occurrence of messages with infinite integral values.
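How a "negative-variance" message arises can be seen from the standard Gaussian division used in EP updates: the message is the ratio of the projected belief to the cavity distribution, so its precision is a difference of precisions. The sketch below is a generic illustration with made-up numbers; the function name `divide_gaussians` is an assumption and this is not the remedy proposed in the paper.

```python
def divide_gaussians(mean_a, var_a, mean_b, var_b):
    """Natural parameters (precision, precision * mean) of the ratio N(a) / N(b)."""
    precision = 1.0 / var_a - 1.0 / var_b
    precision_mean = mean_a / var_a - mean_b / var_b
    return precision, precision_mean

# Hypothetical case: the projected belief (variance 2.0) is broader than
# the cavity distribution (variance 1.0).
precision, precision_mean = divide_gaussians(0.0, 2.0, 0.0, 1.0)
print(precision)  # -0.5, i.e. a formal "variance" of -2.0
```

A negative precision means the message cannot be normalized, which matches the infinite integral value described above, even though the belief it came from is a proper distribution.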

Always-on sensors are increasingly expected to embed a variety of tiny neural networks and to continuously perform inference on time series of the data they sense. To meet lifetime and energy-consumption requirements when operating on battery, such hardware uses microcontrollers (MCUs) with a tiny memory budget, e.g., 128 kB of RAM. In this context, optimizing data flows across neural network layers becomes crucial. In this paper, we introduce TinyDéjàVu, a new framework and novel algorithms we designed to drastically reduce the RAM footprint required by inference using various tiny ML models for sensor data time series on typical microcontroller hardware. We publish the implementation of TinyDéjàVu as open source, and we perform reproducible benchmarks on hardware. We show that TinyDéjàVu can save more than 60% of RAM usage and eliminate up to 90% of redundant compute on overlapping sliding window inputs.
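Why overlapping sliding windows create redundant compute can be shown with simple counting. The sketch below is a toy model, not the TinyDéjàVu algorithm: it assumes a per-sample operation whose result could be cached, and the window length, stride, and function name `count_sample_evaluations` are hypothetical.

```python
def count_sample_evaluations(num_samples, window, stride):
    """Compare per-sample work with and without reuse across overlapping windows."""
    starts = range(0, num_samples - window + 1, stride)
    naive = len(starts) * window  # every window recomputes all of its samples
    seen = set()
    for start in starts:
        seen.update(range(start, start + window))
    cached = len(seen)  # each distinct sample is processed exactly once
    return naive, cached

naive, cached = count_sample_evaluations(num_samples=1000, window=100, stride=10)
print(naive, cached)  # 9100 1000
print(f"redundant share: {1 - cached / naive:.1%}")
```

In this toy setting each new window shares 90 of its 100 samples with the previous one, so most of the naive work repeats earlier results.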

Accurate segmentation of cancerous lesions from 3D computed tomography (CT) scans is essential for automated treatment planning and response assessment. However, even state-of-the-art models combining self-supervised learning (SSL) pretrained transformers with convolutional decoders are susceptible to out-of-distribution (OOD) inputs, generating confidently incorrect tumor segmentations, posing risks for safe clinical deployment. Existing logit-based methods suffer from task-specific model biases, while architectural enhancements to explicitly detect OOD increase parameters and computational costs. Hence, we introduce a plug-and-play and lightweight post-hoc random forests-based OOD detection framework called RF-Deep that leverages deep features with limited outlier exposure. RF-Deep enhances generalization to imaging variations by repurposing the hierarchical features from the pretrained-then-finetuned backbone encoder, providing task-relevant OOD detection by extracting the features from multiple regions of interest anchored to the predicted tumor segmentations. Hence, it scales to images of varying fields-of-view. We compared RF-Deep against existing OOD detection methods using 1,916 CT scans across near-OOD (pulmonary embolism, negative COVID-19) and far-OOD (kidney cancer, healthy pancreas) datasets. RF-Deep achieved AUROC > 93.50 for the challenging near-OOD datasets and near-perfect detection (AUROC > 99.00) for the far-OOD datasets, substantially outperforming logit-based and radiomics approaches. RF-Deep maintained similar performance consistency across networks of different depths and pretraining strategies, demonstrating its effectiveness as a lightweight, architecture-agnostic approach to enhance the reliability of tumor segmentation from CT volumes.

The Heyland circle diagram is a classical graphical method for representing the steady-state behavior of induction machines using no-load and blocked-rotor test data. Despite its long pedagogical history, the traditional geometric construction has not been formalized within a closed analytic framework. This note develops a complete Euclidean reconstruction of the diagram using only the two measured phasors and elementary geometric operations, yielding a unique circle, a torque chord, a slip scale, and a maximum-torque point. We prove that this constructed circle coincides precisely with the analytic steady-state current locus obtained from the per-phase equivalent circuit. A Möbius transformation interpretation reveals the complex-analytic origin of the diagram's circularity and offers a compact explanation of its geometric structure.
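The circularity of the current locus can be checked numerically on a simplified circuit. The sketch below assumes a per-phase model with stator impedance and core loss neglected and uses made-up per-unit values (`V`, `X`, `R2`, `Xm` are hypothetical); it is not the paper's construction from the two measured phasors. The map from R2/s to 1/(R2/s + jX) is a Möbius transformation, which sends the real line to a circle.

```python
import numpy as np

# Hypothetical per-unit parameters for a simplified equivalent circuit.
V, X, R2, Xm = 1.0, 0.2, 0.05, 3.0

slips = np.linspace(0.01, 1.0, 50)
rotor_current = V / (R2 / slips + 1j * X)         # Moebius image of the real axis
stator_current = V / (1j * Xm) + rotor_current    # shifted by the magnetizing current

# Expected circle for this simplified model: diameter V / X along the -j direction.
center = V / (1j * Xm) + V / (2j * X)
radius = V / (2 * X)
distance = np.abs(stator_current - center)
print(np.allclose(distance, radius))
```

Every operating point sits at the same distance from the center, whatever the slip, which is the property the circle diagram exploits.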

Estimation of differences in conditional independence graphs (CIGs) of two time series Gaussian graphical models (TSGGMs) is investigated where the two TSGGMs are known to have similar structure. The TSGGM structure is encoded in the inverse power spectral density (IPSD) of the time series. In several existing works, one is interested in estimating the difference in two precision matrices to characterize underlying changes in conditional dependencies of two sets of data consisting of independent and identically distributed (i.i.d.) observations. In this paper we consider estimation of the difference in two IPSDs to characterize the underlying changes in conditional dependencies of two sets of time-dependent data. Our approach accounts for data time dependencies unlike past work. We analyze a penalized D-trace loss function approach in the frequency domain for differential graph learning, using Wirtinger calculus. We consider both convex (group lasso) and non-convex (log-sum and SCAD group penalties) penalty/regularization functions. An alternating direction method of multipliers (ADMM) algorithm is presented to optimize the objective function. We establish sufficient conditions in a high-dimensional setting for consistency (convergence of the inverse power spectral density to true value in the Frobenius norm) and graph recovery. Both synthetic and real data examples are presented in support of the proposed approaches. In synthetic data examples, our log-sum-penalized differential time-series graph estimator significantly outperformed our lasso based differential time-series graph estimator which, in turn, significantly outperformed an existing lasso-penalized i.i.d. modeling approach, with F1 score as the performance metric.

This paper describes the BUT submission to the ESDD 2026 Challenge, specifically focusing on Track 1: Environmental Sound Deepfake Detection with Unseen Generators. To address the critical challenge of generalizing to audio generated by unseen synthesis algorithms, we propose a robust ensemble framework leveraging diverse Self-Supervised Learning (SSL) models. We conduct a comprehensive analysis of general audio SSL models (including BEATs, EAT, and Dasheng) and speech-specific SSLs. These front-ends are coupled with a lightweight Multi-Head Factorized Attention (MHFA) back-end to capture discriminative representations. Furthermore, we introduce a feature domain augmentation strategy based on distribution uncertainty modeling to enhance model robustness against unseen spectral distortions. All models are trained exclusively on the official EnvSDD data, without using any external resources. Experimental results demonstrate the effectiveness of our approach: our best single system achieved Equal Error Rates (EER) of 0.00%, 4.60%, and 4.80% on the Development, Progress (Track 1), and Final Evaluation sets, respectively. The fusion system further improved generalization, yielding EERs of 0.00%, 3.52%, and 4.38% across the same partitions.

Semantic Soft Bootstrapping (SSB), an RL-free self-distillation framework developed at the University of Maryland, enhances large language model reasoning by having the model act as both teacher and student. It boosted pass@1 accuracy on the MATH500 benchmark by 10.6% and on AIME2024 by 10% over a GRPO baseline, while utilizing a smaller dataset and maintaining concise response lengths.

Estimation of the conditional independence graph (CIG) of high-dimensional multivariate Gaussian time series from multi-attribute data is considered. Existing methods for graph estimation for such data are based on single-attribute models where one associates a scalar time series with each node. In multi-attribute graphical models, each node represents a random vector or vector time series. In this paper we provide a unified theoretical analysis of multi-attribute graph learning for dependent time series using a penalized log-likelihood objective function formulated in the frequency domain using the discrete Fourier transform of the time-domain data. We consider both convex (sparse-group lasso) and non-convex (log-sum and SCAD group penalties) penalty/regularization functions. We establish sufficient conditions in a high-dimensional setting for consistency (convergence of the inverse power spectral density to true value in the Frobenius norm), local convexity when using non-convex penalties, and graph recovery. We do not impose any incoherence or irrepresentability condition for our convergence results. We also empirically investigate selection of the tuning parameters based on the Bayesian information criterion, and illustrate our approach using numerical examples utilizing both synthetic and real data.

This paper provides a fundamental characterization of the discrete ambiguity functions (AFs) of random communication waveforms under arbitrary orthonormal modulation with random constellation symbols, which serve as a key metric for evaluating the delay-Doppler sensing performance in future ISAC applications. A unified analytical framework is developed for two types of AFs, namely the discrete periodic AF (DP-AF) and the fast-slow time AF (FST-AF), where the latter may be seen as a small-Doppler approximation of the DP-AF. By analyzing the expectation of squared AFs, we derive exact closed-form expressions for both the expected sidelobe level (ESL) and the expected integrated sidelobe level (EISL) under the DP-AF and FST-AF formulations. For the DP-AF, we prove that the normalized EISL is identical for all orthogonal waveforms. To gain structural insights, we introduce a matrix representation based on the finite Weyl-Heisenberg (WH) group, where each delay-Doppler shift corresponds to a WH operator acting on the ISAC signal. This WH-group viewpoint yields sharp geometric constraints on the lowest sidelobes: The minimum ESL can only occur along a one-dimensional cut or over a set of widely dispersed delay-Doppler bins. Consequently, no waveform can attain the minimum ESL over any compact two-dimensional region, leading to a no-optimality (no-go) result under the DP-AF framework. For the FST-AF, the closed-form ESL and EISL expressions reveal a constellation-dependent regime governed by its kurtosis: The OFDM modulation achieves the minimum ESL for sub-Gaussian constellations, whereas the OTFS waveform becomes optimal for super-Gaussian constellations. Finally, four representative waveforms, namely, SC, OFDM, OTFS, and AFDM, are examined under both frameworks, and all theoretical results are verified through numerical examples.
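A discrete periodic ambiguity function can be computed directly with an FFT per delay bin. The sketch below uses one common convention, A[k, q] = sum over n of x[n] conj(x[(n - k) mod N]) exp(-2πj q n / N); the paper's sign and normalization conventions may differ, and the QPSK test signal and the function name `periodic_ambiguity` are assumptions for illustration.

```python
import numpy as np

def periodic_ambiguity(x):
    """Discrete periodic ambiguity function, rows = delay k, columns = Doppler q."""
    x = np.asarray(x, dtype=complex)
    # np.roll(x, k)[n] equals x[(n - k) mod N]; the FFT sums over n for each q.
    return np.array([np.fft.fft(x * np.conj(np.roll(x, k))) for k in range(x.size)])

# Random unit-modulus QPSK symbols as a stand-in for a communication waveform.
rng = np.random.default_rng(0)
n = 64
symbols = (rng.choice([-1, 1], n) + 1j * rng.choice([-1, 1], n)) / np.sqrt(2)

af = periodic_ambiguity(symbols)
energy = np.sum(np.abs(symbols) ** 2)
print(np.isclose(af[0, 0], energy))                              # mainlobe equals energy
print(np.isclose(np.sum(np.abs(af) ** 2), n * energy ** 2))      # total volume is fixed
```

The second check follows from Parseval's relation: under this convention the total squared volume depends only on the signal energy, so lowering sidelobes in one region necessarily raises them elsewhere.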
