Gaussian Robotics

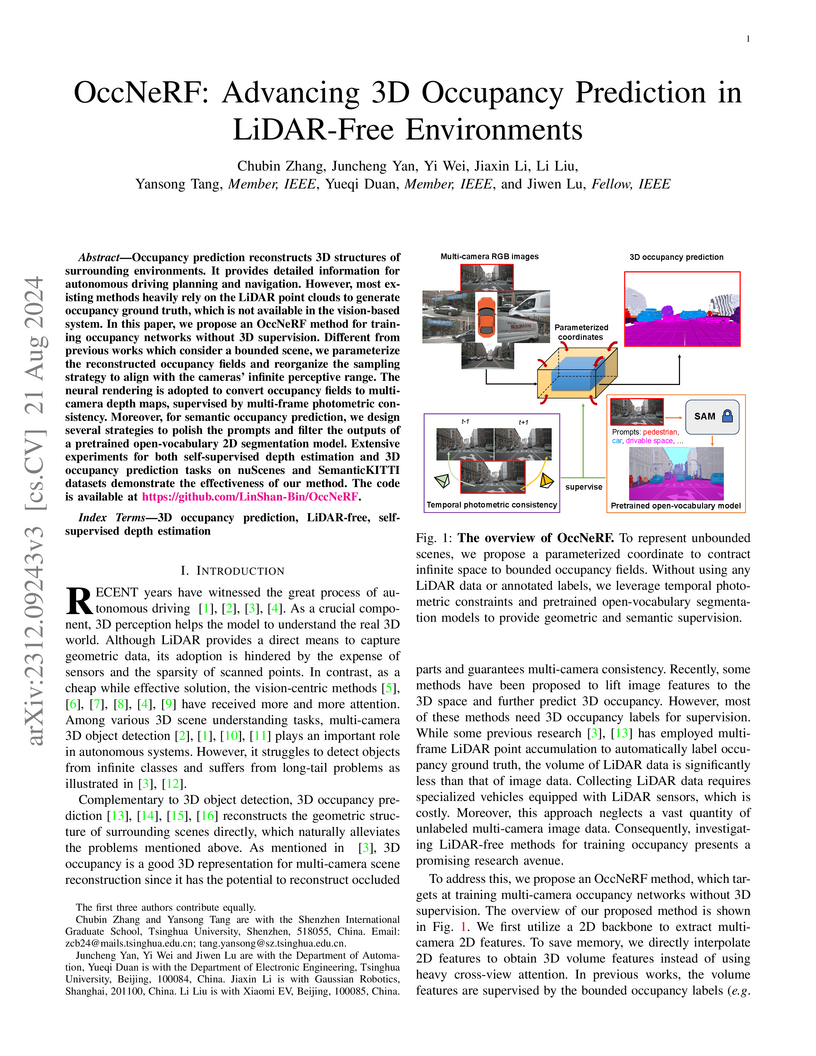

OccNeRF presents a LiDAR-free framework for multi-camera 3D occupancy prediction, leveraging self-supervised depth estimation and open-vocabulary 2D segmentation to reconstruct dense geometric and semantic scene information. The method achieves state-of-the-art results in self-supervised multi-camera depth estimation and competitive performance in 3D occupancy, particularly for large-scale scene elements.

22 Nov 2021

The environment in most real-world scenarios, such as malls and supermarkets, changes constantly. A pre-built map that does not account for these changes quickly becomes outdated. It is therefore necessary to maintain an up-to-date model of the environment to support long-term robot operation. To this end, this paper presents a general lifelong simultaneous localization and mapping (SLAM) framework. Our framework uses a multi-session map representation and an efficient map updating strategy that includes map building, pose graph refinement, and sparsification. To mitigate the unbounded growth of memory usage, we propose a map-trimming method based on the Chow-Liu maximum-mutual-information spanning tree. The proposed SLAM framework has been comprehensively validated by over a month of robot deployment in a real supermarket environment. Furthermore, we release the dataset collected from changing indoor and outdoor environments in the hope of accelerating lifelong SLAM research in the community. Our dataset is available at this https URL.
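For readers unfamiliar with the Chow-Liu construction named in the abstract above, the following is a minimal sketch of the generic technique on toy discrete data. It is not the paper's map-trimming code: the abstract gives no implementation details, and the function names `mutual_information` and `chow_liu_tree` are hypothetical. The idea is to weight every pair of variables by their mutual information and keep only the maximum-weight spanning tree.

```python
import math
from collections import Counter
from itertools import combinations


def mutual_information(xs, ys):
    """Empirical mutual information (in nats) of two discrete sequences."""
    n = len(xs)
    px, py = Counter(xs), Counter(ys)
    pxy = Counter(zip(xs, ys))
    mi = 0.0
    for (x, y), c in pxy.items():
        # p(x, y) * log(p(x, y) / (p(x) * p(y))), written with raw counts
        mi += (c / n) * math.log(c * n / (px[x] * py[y]))
    return mi


def chow_liu_tree(columns):
    """Edges of the maximum-mutual-information spanning tree.

    columns: list of equal-length sequences, one per variable.
    Uses Kruskal's algorithm on edges sorted by descending weight.
    """
    k = len(columns)
    weighted = [
        (mutual_information(columns[i], columns[j]), i, j)
        for i, j in combinations(range(k), 2)
    ]
    weighted.sort(reverse=True)

    parent = list(range(k))  # union-find forest

    def find(a):
        while parent[a] != a:
            parent[a] = parent[parent[a]]  # path halving
            a = parent[a]
        return a

    edges = []
    for _, i, j in weighted:
        ri, rj = find(i), find(j)
        if ri != rj:  # edge joins two components, so keep it
            parent[ri] = rj
            edges.append((i, j))
    return edges


# Toy data (assumed): b is a noisy copy of a, c is a noisy copy of b.
a = [0, 0, 0, 0, 1, 1, 1, 1]
b = [0, 0, 0, 1, 1, 1, 1, 0]
c = [0, 0, 1, 1, 1, 1, 0, 0]
tree = chow_liu_tree([a, b, c])
```

In this toy case `a` and `c` are empirically independent, so the tree keeps the `a`-`b` and `b`-`c` links and drops the direct `a`-`c` edge. A SLAM system would presumably compute the pairwise weights from the pose-graph distribution rather than from discrete samples.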

In this paper, we propose LiDAR Distillation to bridge the domain gap induced by different LiDAR beams for 3D object detection. In many real-world applications, the LiDAR sensors used by mass-produced robots and vehicles usually have fewer beams than those used in large-scale public datasets. Moreover, as LiDARs are upgraded to other product models with different beam counts, it becomes challenging to utilize the labeled data captured by previous versions' high-resolution sensors. Despite recent progress on domain adaptive 3D detection, most methods struggle to eliminate the beam-induced domain gap. We find that it is essential to align the point cloud density of the source domain with that of the target domain during training. Inspired by this finding, we propose a progressive framework to mitigate the beam-induced domain shift. In each iteration, we first generate low-beam pseudo LiDAR by downsampling the high-beam point clouds. A teacher-student framework is then employed to distill rich information from the data with more beams. Extensive experiments on the Waymo, nuScenes, and KITTI datasets with three different LiDAR-based detectors demonstrate the effectiveness of LiDAR Distillation. Notably, our approach adds no computation cost at inference.
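The downsampling step mentioned in the abstract above can be illustrated with a minimal sketch. This is one plausible approach, not necessarily the authors': it assumes beams are evenly spaced in elevation, and the field-of-view defaults and the names `beam_index` and `downsample_beams` are hypothetical. Each point is assigned to a beam by binning its elevation angle, and only every `keep_every`-th beam is kept.

```python
import math


def beam_index(point, num_beams, fov_down_deg, fov_up_deg):
    """Map an (x, y, z) point to a beam id by binning its elevation angle."""
    x, y, z = point
    elevation = math.degrees(math.atan2(z, math.hypot(x, y)))
    frac = (elevation - fov_down_deg) / (fov_up_deg - fov_down_deg)
    idx = int(frac * num_beams)
    # Clamp points that fall slightly outside the assumed field of view.
    return min(max(idx, 0), num_beams - 1)


def downsample_beams(points, num_beams=64, keep_every=2,
                     fov_down_deg=-25.0, fov_up_deg=3.0):
    """Keep only points on every `keep_every`-th beam (e.g. 64 -> 32 beams)."""
    return [
        p for p in points
        if beam_index(p, num_beams, fov_down_deg, fov_up_deg) % keep_every == 0
    ]


def point_at(elevation_deg):
    """Helper for the demo: a unit-range point at the given elevation."""
    e = math.radians(elevation_deg)
    return (math.cos(e), 0.0, math.sin(e))


# Four points, one per beam of a hypothetical 4-beam sensor with a +/-20 degree FOV.
cloud = [point_at(-15.0), point_at(-5.0), point_at(5.0), point_at(15.0)]
sparse = downsample_beams(cloud, num_beams=4, keep_every=2,
                          fov_down_deg=-20.0, fov_up_deg=20.0)
```

Real sensors often have non-uniform beam spacing, so a practical version might estimate beam membership from the data (for example by clustering elevation angles) instead of using fixed bins.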

Task allocation plays a vital role in multi-robot autonomous cleaning systems, where multiple robots work together to clean a large area. However, most current studies mainly focus on deterministic, single-task allocation for cleaning robots, without considering hybrid tasks in uncertain working environments. Moreover, there is a lack of datasets and benchmarks for relevant research. In this paper, to address these problems, we formulate multi-robot hybrid-task allocation under the uncertain cleaning environment as a robust optimization problem. Firstly, we propose a novel robust mixed-integer linear programming model with practical constraints including the task order constraint for different tasks and the ability constraints of hybrid robots. Secondly, we establish a dataset of 100 instances made from floor plans, each of which has 2D manually-labeled images and a 3D model. Thirdly, we provide comprehensive results on the collected dataset using three traditional optimization approaches and a deep reinforcement learning-based solver. The evaluation results show that our solution meets the needs of multi-robot cleaning task allocation and the robust solver can protect the system from worst-case scenarios with little additional cost. The benchmark will be available at this https URL.
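To make the idea of robust allocation concrete, here is a toy sketch. It is not the paper's mixed-integer model: it omits the task-order and robot-ability constraints, replaces the MILP solver with brute-force enumeration, and assumes a budgeted uncertainty set in which at most `gamma` tasks per robot run at their maximum delay. The names `worst_case_load` and `robust_allocate` and the task data are hypothetical.

```python
from itertools import product


def worst_case_load(tasks, gamma):
    """Worst-case total time when at most `gamma` tasks hit their max delay.

    tasks: list of (nominal_time, max_delay) pairs for one robot.
    """
    nominal = sum(t[0] for t in tasks)
    delays = sorted((t[1] for t in tasks), reverse=True)
    return nominal + sum(delays[:gamma])


def robust_allocate(tasks, num_robots, gamma):
    """Enumerate all assignments; return (cost, assignment) minimizing the
    worst-case makespan. Exponential in the task count, so toy sizes only."""
    best_cost, best_assign = float("inf"), None
    for assign in product(range(num_robots), repeat=len(tasks)):
        loads = [
            worst_case_load(
                [tasks[i] for i, r in enumerate(assign) if r == robot], gamma
            )
            for robot in range(num_robots)
        ]
        cost = max(loads)
        if cost < best_cost:
            best_cost, best_assign = cost, assign
    return best_cost, best_assign


# One predictable task and two short but highly uncertain tasks (assumed data).
tasks = [(5, 0), (3, 3), (3, 3)]
nominal_cost, nominal_plan = robust_allocate(tasks, num_robots=2, gamma=0)
robust_cost, robust_plan = robust_allocate(tasks, num_robots=2, gamma=2)
```

With `gamma=0` the best plan puts both uncertain tasks on one robot (makespan 6). With `gamma=2` that plan could take 12 in the worst case, so the robust plan splits the uncertain tasks across robots and guarantees 11 instead.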
