Gideon Brothers

Traditional Image Quality Assessment~(IQA) focuses on quantifying technical degradations such as noise, blur, or compression artifacts, using both full-reference and no-reference objective metrics. However, evaluation of rendering aesthetics, a growing domain relevant to photographic editing, content creation, and AI-generated imagery, remains underexplored due to the lack of datasets that reflect the inherently subjective nature of style preference. In this work, a novel benchmark dataset designed to model human aesthetic judgments of image rendering styles is introduced: the Dataset for Evaluating the Aesthetics of Rendering (DEAR). Built upon the MIT-Adobe FiveK dataset, DEAR incorporates pairwise human preference scores collected via large-scale crowdsourcing, with each image pair evaluated by 25 distinct human evaluators with a total of 13,648 of them participating overall. These annotations capture nuanced, context-sensitive aesthetic preferences, enabling the development and evaluation of models that go beyond traditional distortion-based IQA, focusing on a new task: Evaluation of Aesthetics of Rendering (EAR). The data collection pipeline is described, human voting patterns are analyzed, and multiple use cases are outlined, including style preference prediction, aesthetic benchmarking, and personalized aesthetic modeling. To the best of the authors' knowledge, DEAR is the first dataset to systematically address image aesthetics of rendering assessment grounded in subjective human preferences. A subset of 100 images with markup for them is published on HuggingFace (this http URL).

Illumination estimation is the essential step of computational color

constancy, one of the core parts of various image processing pipelines of

modern digital cameras. Having an accurate and reliable illumination estimation

is important for reducing the illumination influence on the image colors. To

motivate the generation of new ideas and the development of new algorithms in

this field, the 2nd Illumination estimation challenge~(IEC\#2) was conducted.

The main advantage of testing a method on a challenge over testing in on some

of the known datasets is the fact that the ground-truth illuminations for the

challenge test images are unknown up until the results have been submitted,

which prevents any potential hyperparameter tuning that may be biased.

The challenge had several tracks: general, indoor, and two-illuminant with

each of them focusing on different parameters of the scenes. Other main

features of it are a new large dataset of images (about 5000) taken with the

same camera sensor model, a manual markup accompanying each image, diverse

content with scenes taken in numerous countries under a huge variety of

illuminations extracted by using the SpyderCube calibration object, and a

contest-like markup for the images from the Cube+ dataset that was used in

IEC\#1.

This paper focuses on the description of the past two challenges, algorithms

which won in each track, and the conclusions that were drawn based on the

results obtained during the 1st and 2nd challenge that can be useful for

similar future developments.

Pearson's chi-squared test is widely used to assess the uniformity of discrete histograms, typically relying on a continuous chi-squared distribution to approximate the test statistic, since computing the exact distribution is computationally too costly. While effective in many cases, this approximation allegedly fails when expected bin counts are low or tail probabilities are needed. Here, Zero-disparity Distribution Synthesis is presented, a fast dynamic programming approach for computing the exact distribution, enabling detailed analysis of approximation errors. The results dispel some existing misunderstandings and also reveal subtle, but significant pitfalls in approximation that are only apparent with exact values. The Python source code is available at this https URL.

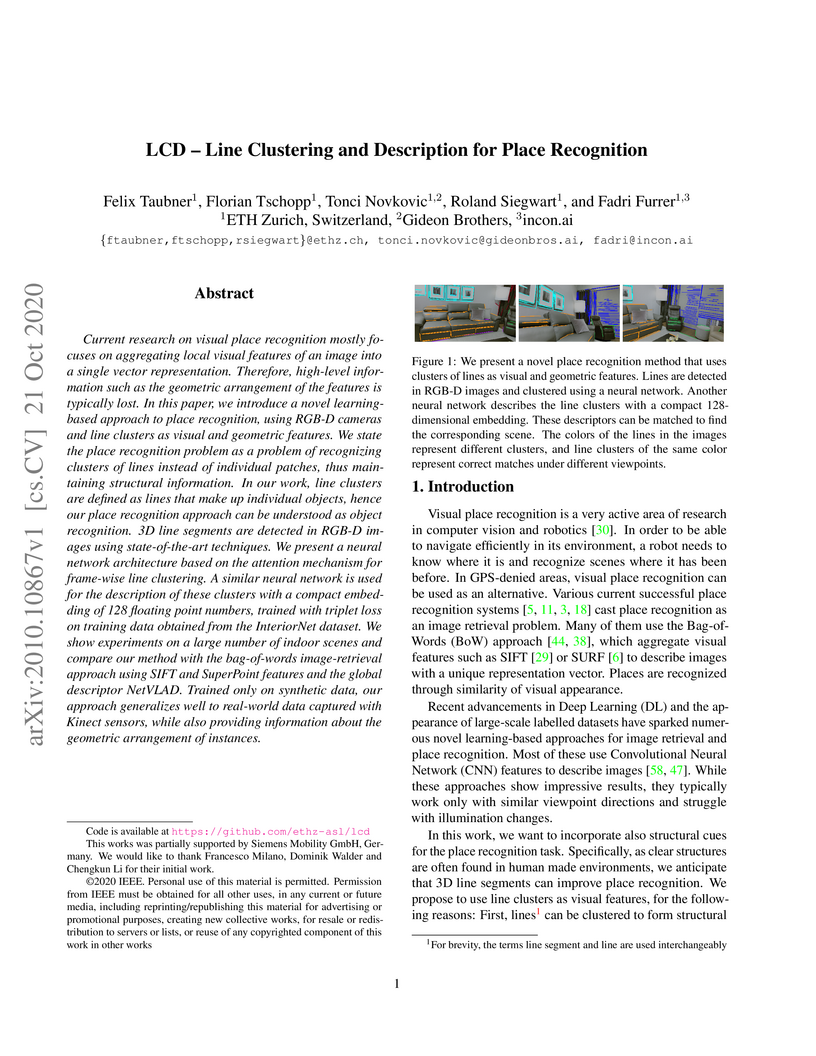

Current research on visual place recognition mostly focuses on aggregating local visual features of an image into a single vector representation. Therefore, high-level information such as the geometric arrangement of the features is typically lost. In this paper, we introduce a novel learning-based approach to place recognition, using RGB-D cameras and line clusters as visual and geometric features. We state the place recognition problem as a problem of recognizing clusters of lines instead of individual patches, thus maintaining structural information. In our work, line clusters are defined as lines that make up individual objects, hence our place recognition approach can be understood as object recognition. 3D line segments are detected in RGB-D images using state-of-the-art techniques. We present a neural network architecture based on the attention mechanism for frame-wise line clustering. A similar neural network is used for the description of these clusters with a compact embedding of 128 floating point numbers, trained with triplet loss on training data obtained from the InteriorNet dataset. We show experiments on a large number of indoor scenes and compare our method with the bag-of-words image-retrieval approach using SIFT and SuperPoint features and the global descriptor NetVLAD. Trained only on synthetic data, our approach generalizes well to real-world data captured with Kinect sensors, while also providing information about the geometric arrangement of instances.

There are no more papers matching your filters at the moment.