mathematical-software

Floating point arithmetic remains expensive on FPGA platforms due to wide datapaths and normalization logic, motivating alternative representations that preserve dynamic range at lower cost. This work introduces the Hybrid Residue Floating Numerical Architecture (HRFNA), a unified arithmetic system that combines carry free residue channels with a lightweight floating point scaling factor. We develop the full mathematical framework, derive bounded error normalization rules, and present FPGA optimized microarchitectures for modular multiplication, exponent management, and hybrid reconstruction. HRFNA is implemented on a Xilinx ZCU104, with Vitis simulation, RTL synthesis, and on chip ILA traces confirming cycle accurate correctness. The architecture achieves over 2.1 times throughput improvement and 38-52 percent LUT reduction compared to IEEE 754 single precision baselines while maintaining numerical stability across long iterative sequences. These results demonstrate that HRFNA offers an efficient and scalable alternative to floating point computation on modern FPGA devices.

Quantum circuit simulation remains essential for developing and validating quantum algorithms, especially as current quantum hardware is limited in scale and quality. However, the growing diversity of simulation methods and software tools creates a high barrier to selecting the most suitable backend for a given circuit. We introduce Maestro, a unified interface for quantum circuit simulation that integrates multiple simulation paradigms - state vector, MPS, tensor network, stabilizer, GPU-accelerated, and p-block methods - under a single API. Maestro includes a predictive runtime model that automatically selects the optimal simulator based on circuit structure and available hardware, and applies backend-specific optimizations such as multiprocessing, GPU execution, and improved sampling. Benchmarks across heterogeneous workloads demonstrate that Maestro outperforms individual simulators in both single-circuit and large batched settings, particularly in high-performance computing environments. Maestro provides a scalable, extensible platform for quantum algorithm research, hybrid quantum-classical workflows, and emerging distributed quantum computing architectures.

This paper discusses the challenges encountered when analyzing the energy efficiency of synthetic benchmarks and the Gromacs package on the Fritz and Alex HPC clusters. Experiments were conducted using MPI parallelism on full sockets of Intel Ice Lake and Sapphire Rapids CPUs, as well as Nvidia A40 and A100 GPUs. The metrics and measurements obtained with the Likwid and Nvidia profiling tools are presented, along with the results. The challenges and pitfalls encountered during experimentation and analysis are revealed and discussed. Best practices for future energy efficiency analysis studies are suggested.

CausalVerse: Benchmarking Causal Representation Learning with Configurable High-Fidelity Simulations

CausalVerse: Benchmarking Causal Representation Learning with Configurable High-Fidelity Simulations

Causal Representation Learning (CRL) aims to uncover the data-generating process and identify the underlying causal variables and relations, whose evaluation remains inherently challenging due to the requirement of known ground-truth causal variables and causal structure. Existing evaluations often rely on either simplistic synthetic datasets or downstream performance on real-world tasks, generally suffering a dilemma between realism and evaluative precision. In this paper, we introduce a new benchmark for CRL using high-fidelity simulated visual data that retains both realistic visual complexity and, more importantly, access to ground-truth causal generating processes. The dataset comprises around 200 thousand images and 3 million video frames across 24 sub-scenes in four domains: static image generation, dynamic physical simulations, robotic manipulations, and traffic situation analysis. These scenarios range from static to dynamic settings, simple to complex structures, and single to multi-agent interactions, offering a comprehensive testbed that hopefully bridges the gap between rigorous evaluation and real-world applicability. In addition, we provide flexible access to the underlying causal structures, allowing users to modify or configure them to align with the required assumptions in CRL, such as available domain labels, temporal dependencies, or intervention histories. Leveraging this benchmark, we evaluated representative CRL methods across diverse paradigms and offered empirical insights to assist practitioners and newcomers in choosing or extending appropriate CRL frameworks to properly address specific types of real problems that can benefit from the CRL perspective. Welcome to visit our: Project page:this https URL, Dataset:this https URL.

Signature-based methods have recently gained significant traction in machine learning for sequential data. In particular, signature kernels have emerged as powerful discriminators and training losses for generative models on time-series, notably in quantitative finance. However, existing implementations do not scale to the dataset sizes and sequence lengths encountered in practice. We present pySigLib, a high-performance Python library offering optimised implementations of signatures and signature kernels on CPU and GPU, fully compatible with PyTorch's automatic differentiation. Beyond an efficient software stack for large-scale signature-based computation, we introduce a novel differentiation scheme for signature kernels that delivers accurate gradients at a fraction of the runtime of existing libraries.

This work introduces ParamRF: a Python library for efficient, parametric modelling of radio frequency (RF) circuits. Built on top of the next-generation computational library JAX, as well as the object-oriented wrapper Equinox, the framework provides an easy-to-use, declarative modelling interface, without sacrificing performance. By representing circuits as JAX PyTrees and leveraging just-in-time compilation, models are compiled as pure functions into an optimized, algebraic graph. Since the resultant functions are JAX-native, this allows computation on CPUs, GPUs, or TPUs, providing integration with a wide range of solvers. Further, thanks to JAX's automatic differentiation, gradients with respect to both frequency and circuit parameters can be calculated for any circuit model outputs. This allows for more efficient optimization, as well as exciting new analysis opportunities. We showcase ParamRF's typical use-case of fitting a model to measured data via its built-in fitting engines, which include classical optimizers like L-BFGS and SLSQP, as well as modern Bayesian samplers such as PolyChord and BlackJAX. The result is a flexible framework for frequency-domain circuit modelling, fitting and analysis.

In this paper, a parallel symmetric eigensolver with very small matrices in

massively parallel processing is considered. We define very small matrices that

fit the sizes of caches per node in a supercomputer. We assume that the sizes

also fit the exa-scale computing requirements of current production runs of an

application. To minimize communication time, we added several communication

avoiding and communication reducing algorithms based on Message Passing

Interface (MPI) non-blocking implementations. A performance evaluation with up

to full nodes of the FX10 system indicates that (1) the MPI non-blocking

implementation is 3x as efficient as the baseline implementation, (2) the

hybrid MPI execution is 1.9x faster than the pure MPI execution, (3) our

proposed solver is 2.3x and 22x faster than a ScaLAPACK routine with optimized

blocking size and cyclic-cyclic distribution, respectively.

BlackJAX is a library implementing sampling and variational inference

algorithms commonly used in Bayesian computation. It is designed for ease of

use, speed, and modularity by taking a functional approach to the algorithms'

implementation. BlackJAX is written in Python, using JAX to compile and run

NumpPy-like samplers and variational methods on CPUs, GPUs, and TPUs. The

library integrates well with probabilistic programming languages by working

directly with the (un-normalized) target log density function. BlackJAX is

intended as a collection of low-level, composable implementations of basic

statistical 'atoms' that can be combined to perform well-defined Bayesian

inference, but also provides high-level routines for ease of use. It is

designed for users who need cutting-edge methods, researchers who want to

create complex sampling methods, and people who want to learn how these work.

Differentiable numerical simulations of physical systems have gained rising

attention in the past few years with the development of automatic

differentiation tools. This paper presents JAX-SSO, a differentiable finite

element analysis solver built with JAX, Google's high-performance computing

library, to assist efficient structural design in the built environment. With

the adjoint method and automatic differentiation feature, JAX-SSO can

efficiently evaluate gradients of physical quantities in an automatic way,

enabling accurate sensitivity calculation in structural optimization problems.

Written in Python and JAX, JAX-SSO is naturally within the machine learning

ecosystem so it can be seamlessly integrated with neural networks to train

machine learning models with inclusion of physics. Moreover, JAX-SSO supports

GPU acceleration to further boost finite element analysis. Several examples are

presented to showcase the capabilities and efficiency of JAX-SSO: i) shape

optimization of grid-shells and continuous shells; ii) size (thickness)

optimization of continuous shells; iii) simultaneous shape and topology

optimization of continuous shells; and iv) training of physics-informed neural

networks for structural optimization. We believe that JAX-SSO can facilitate

research related to differentiable physics and machine learning to further

address problems in structural and architectural design.

When estimating quantities and fields that are difficult to measure directly,

such as the fluidity of ice, from point data sources, such as satellite

altimetry, it is important to solve a numerical inverse problem that is

formulated with Bayesian consistency. Otherwise, the resultant probability

density function for the difficult to measure quantity or field will not be

appropriately clustered around the truth. In particular, the inverse problem

should be formulated by evaluating the numerical solution at the true point

locations for direct comparison with the point data source. If the data are

first fitted to a gridded or meshed field on the computational grid or mesh,

and the inverse problem formulated by comparing the numerical solution to the

fitted field, the benefits of additional point data values below the grid

density will be lost. We demonstrate, with examples in the fields of

groundwater hydrology and glaciology, that a consistent formulation can

increase the accuracy of results and aid discourse between modellers and

observationalists.

To do this, we bring point data into the finite element method ecosystem as

discontinuous fields on meshes of disconnected vertices. Point evaluation can

then be formulated as a finite element interpolation operation

(dual-evaluation). This new abstraction is well-suited to automation, including

automatic differentiation. We demonstrate this through implementation in

Firedrake, which generates highly optimised code for solving Partial

Differential Equations (PDEs) with the finite element method. Our solution

integrates with dolfin-adjoint/pyadjoint, allowing PDE-constrained optimisation

problems, such as data assimilation, to be solved through forward and adjoint

mode automatic differentiation.

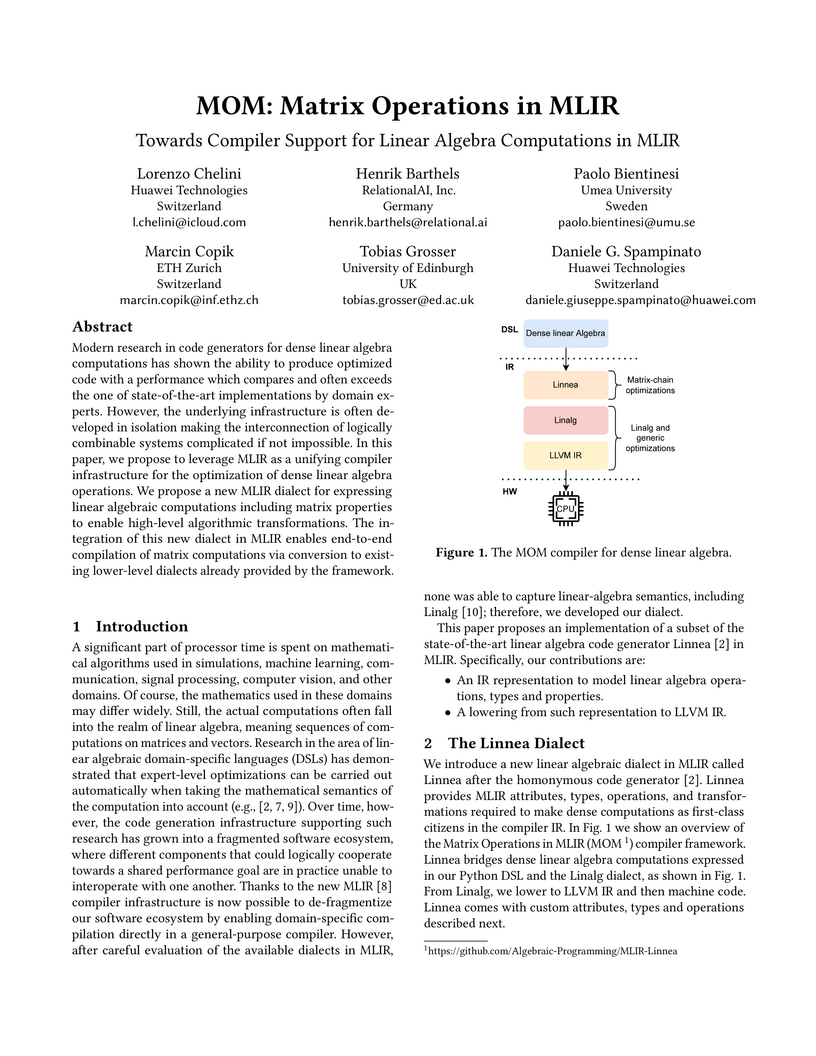

Modern research in code generators for dense linear algebra computations has

shown the ability to produce optimized code with a performance which compares

and often exceeds the one of state-of-the-art implementations by domain

experts. However, the underlying infrastructure is often developed in isolation

making the interconnection of logically combinable systems complicated if not

impossible. In this paper, we propose to leverage MLIR as a unifying compiler

infrastructure for the optimization of dense linear algebra operations. We

propose a new MLIR dialect for expressing linear algebraic computations

including matrix properties to enable high-level algorithmic transformations.

The integration of this new dialect in MLIR enables end-to-end compilation of

matrix computations via conversion to existing lower-level dialects already

provided by the framework.

RamanSPy is presented as an open-source Python package designed for integrative Raman spectroscopy data analysis, aiming to standardize workflows and facilitate the adoption of AI/ML. The package successfully streamlines data processing, enables label-free cell phenotyping through spectral unmixing, and seamlessly integrates deep learning denoisers and AI classification models, achieving high accuracy in bacterial identification and outperforming traditional methods.

Scientific Computing relies on executing computer algorithms coded in some

programming languages. Given a particular available hardware, algorithms speed

is a crucial factor. There are many scientific computing environments used to

code such algorithms. Matlab is one of the most tremendously successful and

widespread scientific computing environments that is rich of toolboxes,

libraries, and data visualization tools. OpenCV is a (C++)-based library

written primarily for Computer Vision and its related areas. This paper

presents a comparative study using 20 different real datasets to compare the

speed of Matlab and OpenCV for some Machine Learning algorithms. Although

Matlab is more convenient in developing and data presentation, OpenCV is much

faster in execution, where the speed ratio reaches more than 80 in some cases.

The best of two worlds can be achieved by exploring using Matlab or similar

environments to select the most successful algorithm; then, implementing the

selected algorithm using OpenCV or similar environments to gain a speed factor.

Iterative methods on irregular grids have been used widely in all areas of

comptational science and engineering for solving partial differential equations

with complex geometry. They provide the flexibility to express complex shapes

with relatively low computational cost. However, the direction of the evolution

of high-performance processors in the last two decades have caused serious

degradation of the computational efficiency of iterative methods on irregular

grids, because of relatively low memory bandwidth. Data compression can in

principle reduce the necessary memory memory bandwidth of iterative methods and

thus improve the efficiency. We have implemented several data compression

algorithms on the PEZY-SC processor, using the matrix generated for the HPCG

benchmark as an example. For the SpMV (Sparse Matrix-Vector multiplication)

part of the HPCG benchmark, the best implementation without data compression

achieved 11.6Gflops/chip, close to the theoretical limit due to the memory

bandwidth. Our implementation with data compression has achieved 32.4Gflops.

This is of course rather extreme case, since the grid used in HPCG is

geometrically regular and thus its compression efficiency is very high.

However, in real applications, it is in many cases possible to make a large

part of the grid to have regular geometry, in particular when the resolution is

high. Note that we do not need to change the structure of the program, except

for the addition of the data compression/decompression subroutines. Thus, we

believe the data compression will be very useful way to improve the performance

of many applications which rely on the use of irregular grids.

Recently, there has been growing interest in using standard language

constructs (e.g. C++'s Parallel Algorithms and Fortran's do concurrent) for

accelerated computing as an alternative to directive-based APIs (e.g. OpenMP

and OpenACC). These constructs have the potential to be more portable, and some

compilers already (or have plans to) support such standards. Here, we look at

the current capabilities, portability, and performance of replacing directives

with Fortran's do concurrent using a mini-app that currently implements OpenACC

for GPU-acceleration and OpenMP for multi-core CPU parallelism. We replace as

many directives as possible with do concurrent, testing various configurations

and compiler options within three major compilers: GNU's gfortran, NVIDIA's

nvfortran, and Intel's ifort. We find that with the right compiler versions and

flags, many directives can be replaced without loss of performance or

portability, and, in the case of nvfortran, they can all be replaced. We

discuss limitations that may apply to more complicated codes and future

language additions that may mitigate them. The software and Singularity

containers are publicly provided to allow the results to be reproduced.

The Python programming language is becoming increasingly popular for

scientific applications due to its simplicity, versatility, and the broad range

of its libraries. A drawback of this dynamic language, however, is its low

runtime performance which limits its applicability for large simulations and

for the analysis of large data sets, as is common in astrophysics and

cosmology. While various frameworks have been developed to address this

limitation, most focus on covering the complete language set, and either force

the user to alter the code or are not able to reach the full speed of an

optimised native compiled language. In order to combine the ease of Python and

the speed of C++, we developed HOPE, a specialised Python just-in-time (JIT)

compiler designed for numerical astrophysical applications. HOPE focuses on a

subset of the language and is able to translate Python code into C++ while

performing numerical optimisation on mathematical expressions at runtime. To

enable the JIT compilation, the user only needs to add a decorator to the

function definition. We assess the performance of HOPE by performing a series

of benchmarks and compare its execution speed with that of plain Python, C++

and the other existing frameworks. We find that HOPE improves the performance

compared to plain Python by a factor of 2 to 120, achieves speeds comparable to

that of C++, and often exceeds the speed of the existing solutions. We discuss

the differences between HOPE and the other frameworks, as well as future

extensions of its capabilities. The fully documented HOPE package is available

at http://hope.phys.ethz.ch and is published under the GPLv3 license on PyPI

and GitHub.

Computational fluid dynamics (CFD) is an important tool for the simulation of

the cardiovascular function and dysfunction. Due to the complexity of the

anatomy, the transitional regime of blood flow in the heart, and the strong

mutual influence between the flow and the physical processes involved in the

heart function, the development of accurate and efficient CFD solvers for

cardiovascular flows is still a challenging task. In this paper we present

lifex-cfd, an open-source CFD solver for cardiovascular simulations based on

the lifex finite element library, written in modern C++ and exploiting

distributed memory parallelism. We model blood flow in both physiological and

pathological conditions via the incompressible Navier-Stokes equations,

accounting for moving cardiac valves, moving domains, and

transition-to-turbulence regimes. In this paper, we provide an overview of the

underlying mathematical formulation, numerical discretization, implementation

details and examples on how to use lifex-cfd. We verify the code through

rigorous convergence analyses, and we show its almost ideal parallel speedup.

We demonstrate the accuracy and reliability of the numerical methods

implemented through a series of idealized and patient-specific vascular and

cardiac simulations, in different physiological flow regimes. The lifex-cfd

source code is available under the LGPLv3 license, to ensure its accessibility

and transparency to the scientific community, and to facilitate collaboration

and further developments.

Carnegie Mellon University

Carnegie Mellon University New York University

New York University University of Oxford

University of Oxford Cornell University

Cornell University NVIDIAIntel LabsSanta Fe InstituteSalesforce ResearchIntelUniversity of TübingenAlan Turing InstituteLawrence Berkeley National LabOak Ridge National LabInstitute for Simulation IntelligenceSLAC National Accelerator LabCharles River AnalyticsNeuralinkJPM AI ResearchU.S. Bank AI Innovation

NVIDIAIntel LabsSanta Fe InstituteSalesforce ResearchIntelUniversity of TübingenAlan Turing InstituteLawrence Berkeley National LabOak Ridge National LabInstitute for Simulation IntelligenceSLAC National Accelerator LabCharles River AnalyticsNeuralinkJPM AI ResearchU.S. Bank AI InnovationThe paper introduces "Simulation Intelligence (SI)" as a new interdisciplinary field that merges scientific computing, scientific simulation, and artificial intelligence. It proposes "Nine Motifs of Simulation Intelligence" as a roadmap for developing advanced methods, demonstrating capabilities such as accelerating complex simulations by orders of magnitude and enabling new forms of scientific inquiry like automated causal discovery.

There is a continuing interest in using standard language constructs for

accelerated computing in order to avoid (sometimes vendor-specific) external

APIs. For Fortran codes, the {\tt do concurrent} (DC) loop has been

successfully demonstrated on the NVIDIA platform. However, support for DC on

other platforms has taken longer to implement. Recently, Intel has added DC GPU

offload support to its compiler, as has HPE for AMD GPUs. In this paper, we

explore the current portability of using DC across GPU vendors using the

in-production solar surface flux evolution code, HipFT. We discuss

implementation and compilation details, including when/where using directive

APIs for data movement is needed/desired compared to using a unified memory

system. The performance achieved on both data center and consumer platforms is

shown.

Randomized numerical linear algebra - RandNLA, for short - concerns the use of randomization as a resource to develop improved algorithms for large-scale linear algebra computations.

The origins of contemporary RandNLA lay in theoretical computer science, where it blossomed from a simple idea: randomization provides an avenue for computing approximate solutions to linear algebra problems more efficiently than deterministic algorithms. This idea proved fruitful in the development of scalable algorithms for machine learning and statistical data analysis applications. However, RandNLA's true potential only came into focus upon integration with the fields of numerical analysis and "classical" numerical linear algebra. Through the efforts of many individuals, randomized algorithms have been developed that provide full control over the accuracy of their solutions and that can be every bit as reliable as algorithms that might be found in libraries such as LAPACK. Recent years have even seen the incorporation of certain RandNLA methods into MATLAB, the NAG Library, NVIDIA's cuSOLVER, and SciKit-Learn.

For all its success, we believe that RandNLA has yet to realize its full potential. In particular, we believe the scientific community stands to benefit significantly from suitably defined "RandBLAS" and "RandLAPACK" libraries, to serve as standards conceptually analogous to BLAS and LAPACK. This 200-page monograph represents a step toward defining such standards. In it, we cover topics spanning basic sketching, least squares and optimization, low-rank approximation, full matrix decompositions, leverage score sampling, and sketching data with tensor product structures (among others). Much of the provided pseudo-code has been tested via publicly available MATLAB and Python implementations.

There are no more papers matching your filters at the moment.