Institute for Quantum Computing

14 Oct 2025

A central aspect of quantum information is that correlations between spacelike separated observers sharing entangled states cannot be reproduced by local hidden variable (LHV) models, a phenomenon known as Bell nonlocality. If one wishes to explain such correlations by classical means, a natural possibility is to allow communication between the parties. In particular, LHV models augmented with two bits of classical communication can explain the correlations of any two-qubit state. Would this still hold if communication is restricted to measurement outcomes? While in certain scenarios with a finite number of inputs the answer is yes, we prove that if a model must reproduce all projective measurements, then for any qubit-qudit state the answer is no. In fact, a qubit-qudit state under projective measurements admits an LHV model with outcome communication if and only if it already admits an LHV model without communication. On the other hand, we also show that when restricted sets of measurements are considered (for instance, when the qubit measurements are in the upper hemisphere of the Bloch ball), outcome communication does offer an advantage. This shows that properties that are trivial in standard LHV scenarios, such as deterministic measurements and outcome relabelling, play a crucial role in the outcome communication scenario.

27 Sep 2025

This research introduces a robust framework using chain complexes to define fault-tolerant equivalence between spacetime codes, enabling the rigorous transformation of any Clifford circuit into an equivalent measurement-based quantum computing protocol. The method ensures preservation of encoded qubits, fault distance, and decoding properties, broadening the applicability of MBQC to various quantum error-correcting codes.

17 Nov 2024

University of Amsterdam; University of Waterloo; QuSoft; University of Zurich; University of Southern California; National University of Singapore; IBM Research; IBM Quantum; Los Alamos National Laboratory; Tilburg University; Perimeter Institute for Theoretical Physics; University of Latvia; Leiden University; Zuse Institute Berlin; Technische Universität Berlin; IBM Research Europe - Dublin; Czech Technical University in Prague; IBM T.J. Watson Research Center; École Polytechnique Fédérale de Lausanne; Institute for Quantum Computing; Wells Fargo; University of the Bundeswehr Munich; E.ON Digital Technology GmbH; Fraunhofer ITWM; Fraunhofer IKS; HSBC; USRA Research Institute for Advanced Computer Science; STFC; The Hartree Centre; Volkswagen AG; Erste Group Bank; Quantagonia; NASA, Ames Research Center; IBM Research Europe - Zurich

Recent advances in quantum computers are demonstrating the ability to solve problems at a scale beyond brute force classical simulation. As such, a widespread interest in quantum algorithms has developed in many areas, with optimization being one of the most pronounced domains. Across computer science and physics, there are a number of different approaches for major classes of optimization problems, such as combinatorial optimization, convex optimization, non-convex optimization, and stochastic extensions. This work draws on multiple approaches to study quantum optimization. Provably exact versus heuristic settings are first explained using computational complexity theory, highlighting where quantum advantage is possible in each context. Then, the core building blocks for quantum optimization algorithms are outlined to subsequently define prominent problem classes and identify key open questions that, if answered, will advance the field. The effects of scaling relevant problems on noisy quantum devices are also outlined in detail, alongside meaningful benchmarking problems. We underscore the importance of benchmarking by proposing clear metrics to conduct appropriate comparisons with classical optimization techniques. Lastly, we highlight two domains, finance and sustainability, as rich sources of optimization problems that could be used to benchmark, and eventually validate, the potential real-world impact of quantum optimization.

For the centennial of quantum mechanics, we offer an overview of the central role played by quantum information and thermalization in problems involving fundamental properties of spacetime and gravitational physics. This is an open area of research still a century after the initial development of formal quantum mechanics, highlighting the effectiveness of quantum physics in the description of all natural phenomena. These remarkable connections can be highlighted with the tools of modern quantum optics, which effectively addresses the three-fold interplay of interacting atoms, fields, and spacetime backgrounds describing gravitational fields and noninertial systems. In this review article, we select aspects of these phenomena centered on quantum features of the acceleration radiation of particles in the presence of black holes. The ensuing horizon-brightened radiation (HBAR) provides a case study of the role played by quantum physics in nontrivial spacetime behavior, and also shows a fundamental correspondence with black hole thermodynamics.

25 May 2020

Device-independent quantum key distribution (DIQKD) is one of the most challenging tasks in quantum cryptography. The protocols and their security are based on the existence of Bell inequalities and the ability to violate them by measuring entangled states. We study the entanglement needed for DIQKD protocols in two different ways. Our first contribution is the derivation of upper bounds on the key rates of CHSH-based DIQKD protocols in terms of the violation of the inequality; this sets an upper limit on the possible DI key extraction rate from states with a given violation. Our upper bound improves on the previously known bound of Kaur et al. Our second contribution is the initiation of the study of the role of bound entangled states in DIQKD. We present a revised Peres conjecture stating that such states cannot be used as a resource for DIQKD. We give a first piece of evidence for the conjecture by showing that the bound entangled state found by Vertesi and Brunner, even though it can certify DI randomness, cannot be used to produce a key using protocols analogous to the well-studied CHSH-based DIQKD protocol.
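As a rough illustration of the CHSH violation that these protocols rely on, the following sketch compares the largest CHSH value reachable by deterministic local strategies with the value obtained from singlet-state correlations. The function names, the measurement angles, and the correlator form E(a, b) = -cos(a - b) for the singlet are assumptions chosen for this example and are not taken from the abstract; the sketch does not compute any key rate.

```python
import itertools
import math


def chsh(E):
    """CHSH combination of correlators E[x][y] for settings x, y in {0, 1}."""
    return E[0][0] + E[0][1] + E[1][0] - E[1][1]


def lhv_max():
    """Maximum |CHSH| over all 16 deterministic local strategies."""
    best = 0
    for a0, a1, b0, b1 in itertools.product((-1, 1), repeat=4):
        a, b = (a0, a1), (b0, b1)
        # Deterministic outcomes: the correlator is just the product.
        E = [[a[x] * b[y] for y in range(2)] for x in range(2)]
        best = max(best, abs(chsh(E)))
    return best


def singlet_chsh():
    """|CHSH| for singlet correlations E(a, b) = -cos(a - b).

    The angles below are one standard choice (an assumption of this sketch).
    """
    alice = (0.0, math.pi / 2)
    bob = (math.pi / 4, -math.pi / 4)
    E = [[-math.cos(a - b) for b in bob] for a in alice]
    return abs(chsh(E))


if __name__ == "__main__":
    print("local bound:", lhv_max())
    print("singlet value:", singlet_chsh())
```

Since any local model is a mixture of deterministic strategies, the enumeration gives the local bound, and the gap between the two numbers is the violation that a device-independent protocol would monitor.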

An investigation into the twin paradox within quantum field theory reveals that at microscopic scales, measured elapsed time is influenced by a clock's specific quantum properties and its interaction with vacuum fluctuations, moving beyond sole dependence on spacetime trajectory. The work indicates that QFT corrections become relevant at timescales (around 10^-17 s) achievable by modern atomic clocks.

28 Oct 2024

Harnessing quantum correlations can enable sensing beyond the classical limits of precision, with the realization of such sensors poised for transformative impacts across science and engineering. Real devices, however, face the accumulated impacts of noise effects, architecture constraints, and finite sampling rates, making the design and success of practical quantum sensors challenging. Numerical and theoretical frameworks that support the optimization and analysis of imperfections from one end of a sensing protocol through to the other (i.e., from probe state preparation through to parameter estimation) are thus crucial for translating quantum advantage into widespread practice. Here, we present an end-to-end variational framework for quantum sensing protocols, where parameterized quantum circuits and neural networks form trainable, adaptive models for quantum sensor dynamics and estimation, respectively. The framework is general and can be adapted to arbitrary qubit architectures, as we demonstrate with experimentally relevant ansätze for trapped-ion and photonic systems, and it enables direct quantification of the impacts that noisy state preparation/measurement and finite data sampling have on parameter estimation. End-to-end variational frameworks can thus underpin powerful design and analysis tools for realizing quantum advantage in practical, robust sensors.

13 Oct 2025

We study the problem of exact synthesis for the Clifford+R gate set and give the explicit structure of the underlying Bruhat-Tits building for this group. In this process, we also give an alternative proof of the arithmetic nature of the Clifford+R gate set.

We study properties of quantum strategies, which are complete specifications of a given party's actions in any multiple-round interaction involving the exchange of quantum information with one or more other parties. In particular, we focus on a representation of quantum strategies that generalizes the Choi-Jamiołkowski representation of quantum operations. This new representation associates with each strategy a positive semidefinite operator acting only on the tensor product of its input and output spaces. Various facts about such representations are established, and two applications are discussed: the first is a new and conceptually simple proof of Kitaev's lower bound for strong coin-flipping, and the second is a proof of the exact characterization QRG = EXP of the class of problems having quantum refereed games.

01 Aug 2025

Quantum computers promise to solve problems that are intractable for classical computers, but qubits are vulnerable to many sources of error, limiting the depth of the circuits that can be reliably executed on today's quantum hardware. Quantum error correction has been proposed as a solution to this problem, whereby quantum information is protected by encoding it into a quantum error-correcting code. But protecting quantum information is not enough: we must also process the information using logic gates that are robust to faults that occur during their execution. One method for processing information fault-tolerantly is to use quantum error-correcting codes that have logical gates with a tensor product structure (transversal gates), making them naturally fault-tolerant. Here, we test the performance of a code with such transversal gates, the [[8,3,2]] color code, using trapped-ion and superconducting hardware. We observe improved performance (compared to no encoding) for encoded circuits implementing non-Clifford gates, a class of gates that are essential for achieving universal quantum computing. In particular, we find improved performance for an encoded circuit implementing the control-control Z gate, a key gate in Shor's algorithm. Our results illustrate the potential of using codes with transversal gates to implement non-trivial algorithms on near-term quantum hardware.

09 Jun 2014

We give an algorithm which produces a unique element of the Clifford group $\mathcal{C}_n$ on $n$ qubits from an integer $0 \le i < |\mathcal{C}_n|$ (the number of elements in the group). The algorithm involves $O(n^3)$ operations. It is a variant of the subgroup algorithm by Diaconis and Shahshahani which is commonly applied to compact Lie groups. We provide an adaptation for the symplectic group $\mathrm{Sp}(2n, \mathbb{F}_2)$ which provides, in addition to a canonical mapping from the integers to group elements $g$, a factorization of $g$ into a sequence of at most $4n$ symplectic transvections. The algorithm can be used to efficiently select random elements of $\mathcal{C}_n$, which is often useful in quantum information theory and quantum computation. We also give an algorithm for the inverse map, indexing a group element in time $O(n^3)$.
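The two ingredients named in this abstract, the size of the index range and the symplectic transvection, can be sketched in a few lines. This is only an illustration and not the indexing algorithm itself: the function names, the bit-vector layout (x bits followed by z bits), and the convention of counting Clifford elements modulo global phase are assumptions made for this example.

```python
from typing import List

Vector = List[int]  # length-2n bit vector, assumed layout (x_1..x_n, z_1..z_n)


def symplectic_order(n: int) -> int:
    """|Sp(2n, F_2)| = 2^(n^2) * prod_{j=1..n} (4^j - 1)."""
    order = 2 ** (n * n)
    for j in range(1, n + 1):
        order *= 4 ** j - 1
    return order


def clifford_order(n: int) -> int:
    """Clifford elements modulo global phase (assumed convention):
    4^n Pauli elements times |Sp(2n, F_2)|."""
    return 4 ** n * symplectic_order(n)


def inner(v: Vector, w: Vector) -> int:
    """Symplectic inner product over F_2."""
    n = len(v) // 2
    return sum(v[i] * w[n + i] + v[n + i] * w[i] for i in range(n)) % 2


def transvection(h: Vector, v: Vector) -> Vector:
    """Apply the transvection Z_h(v) = v + <v, h> h over F_2."""
    c = inner(v, h)
    return [(a + c * b) % 2 for a, b in zip(v, h)]


if __name__ == "__main__":
    print(symplectic_order(2), clifford_order(2))
    print(transvection([1, 0, 0, 1], [0, 1, 1, 0]))
```

Each transvection preserves the symplectic inner product and is its own inverse, which is why a product of transvections can serve as a factorization of a symplectic group element.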

Researchers developed a quantum protocol allowing the creation of multiple encrypted clones of an unknown qubit, where any single clone can perfectly reproduce the original state using a shared quantum key. This scheme enables redundant and secure quantum information storage and distribution while remaining consistent with the no-cloning theorem.

21 Oct 2025

Quantum networks are a backbone of future quantum technologies thanks to their role in communication and scalable quantum computing. However, their performance is challenged by noise and decoherence. We propose a self-configuring approach that integrates superposed quantum paths with variational quantum optimization techniques. This allows networks to dynamically optimize the superposition of noisy paths across multiple nodes to establish high-fidelity connections between different parties. Our framework acts as a black box, capable of adapting to unknown noise without requiring characterization or benchmarking of the corresponding quantum channels. We also discuss the role of vacuum coherence, a quantum effect central to path superposition that impacts protocol performance. Additionally, we demonstrate that our approach remains beneficial even in the presence of imperfections in the generation of path superposition.

27 Apr 2012

We describe and expand upon the scalable randomized benchmarking protocol proposed in Phys. Rev. Lett. 106, 180504 (2011) which provides a method for benchmarking quantum gates and estimating the gate-dependence of the noise. The protocol allows the noise to have weak time- and gate-dependence, and we provide a sufficient condition for the applicability of the protocol in terms of the average variation of the noise. We discuss how state preparation and measurement errors are taken into account and provide a complete proof of the scalability of the protocol. We establish a connection in special cases between the error rate provided by this protocol and the error strength measured using the diamond norm distance.
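For orientation, randomized benchmarking data are commonly analysed with a single-exponential decay of the average sequence fidelity, F(m) = A p^m + B, where the constants A and B absorb state preparation and measurement errors and the decay parameter p yields an average error rate r = (d - 1)(1 - p)/d for dimension d = 2^n. The sketch below assumes this standard model, which the abstract itself does not spell out; the function names and the three-point estimator are illustrative choices, and a real analysis would fit many sequence lengths with a least-squares routine.

```python
def rb_model(m: int, A: float, B: float, p: float) -> float:
    """Assumed decay model: average sequence fidelity after m gates."""
    return A * p ** m + B


def error_rate(p: float, n_qubits: int) -> float:
    """Average error rate r = (d - 1)(1 - p) / d with d = 2^n_qubits."""
    d = 2 ** n_qubits
    return (d - 1) * (1 - p) / d


def decay_from_three_points(f0: float, f1: float, f2: float, step: int) -> float:
    """Estimate p from fidelities at lengths m, m + step, m + 2*step.

    Differences cancel the offset B and the ratio cancels A, so
    (f2 - f1) / (f1 - f0) = p**step for noise-free model data.
    """
    if f1 == f0:
        raise ValueError("fidelities show no decay; p cannot be estimated")
    ratio = (f2 - f1) / (f1 - f0)
    return ratio ** (1.0 / step)


if __name__ == "__main__":
    # Synthetic, noise-free data generated from the model itself.
    A, B, p_true = 0.5, 0.5, 0.98
    f = [rb_model(m, A, B, p_true) for m in (0, 10, 20)]
    p_est = decay_from_three_points(f[0], f[1], f[2], step=10)
    print(p_est, error_rate(p_est, n_qubits=1))
```

Because A and B drop out of the estimate, the recovered p does not depend on the preparation and measurement constants, which mirrors the robustness to those errors that the protocol is designed to have.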

We investigate entanglement harvesting in AdS$_4$. Applying the general results of [Phys. Rev. D 97, 125011 (2018)], we consider two scenarios: one where two particle detectors are geodesic, with equal redshift; and one where both are static, at unequal redshift. As expected, at large AdS length $L$, our results approximate flat space. However, at smaller $L$ we observe non-trivial effects for various field boundary conditions. Furthermore, in the static case we observe a novel feature of the entanglement as a function of switching time delay, which we attribute to different (coordinate) frequencies of the detectors. We also find an island of separability in parameter space, analogous to that observed in AdS$_3$, to which we compare and contrast our other results. The variety of features observed in both cases suggests further study in other spacetimes.

15 Dec 2023

This research demonstrates that quantum machine learning models can exhibit benign overfitting, where overparameterized single-layer quantum circuits perfectly interpolate noisy data yet achieve good generalization. The study shows how the data-encoding Hamiltonian and initial quantum state control effective Fourier feature weights, which in turn determine the model's generalization behavior.

Quantum energy teleportation (QET) exploits the existence of correlations to enable remote energy transfer without the need for physical energy carriers between emitter and receiver. This paper presents a review of the thermodynamic foundations of QET and reviews its first experimental demonstration (performed using Nuclear Magnetic Resonance), along with its implementation on publicly available superconducting quantum hardware. Additionally, we review an application of QET in the field of quantum thermodynamics as an efficient algorithmic cooling technique to cool down individual parts of interacting systems. Finally, we will review how QET can be employed to optimally generate exotic quantum states characterized by negative average stress-energy densities, offering a new operational approach to engineering such states which are promising in the context of semiclassical gravity.

09 Jul 2025

With the significance of continuous-variable quantum computing increasing thanks to the achievements of light-based quantum hardware, making it accessible to learners outside physics has been an important yet seldom-tackled challenge. Similarly, the rising focus on fault-tolerant quantum computing has drawn attention to quantum error correction schemes, making them a focus for industry and academia alike. In this paper, we explore the widely adopted framework of quantum error correction based on continuous-variable systems and suggest a guide for building a self-contained learning session targeting the famous Gottesman-Kitaev-Preskill (GKP) code through its geometric intuition.

03 Dec 2012

The Born rule is at the foundation of quantum mechanics and transforms our classical way of understanding probabilities by predicting that interference occurs between pairs of independent paths of a single object. One consequence of the Born rule is that three-way (or three-path) quantum interference does not exist. In order to test the consistency of the Born rule, we examine detection probabilities in three-path interference using an ensemble of spin-1/2 quantum registers in liquid state nuclear magnetic resonance (LSNMR). As a measure of the consistency, we evaluate the ratio of three-way interference to two-way interference. Our experiment bounded the ratio to the order of $10^{-3} \pm 10^{-3}$, and hence it is consistent with Born's rule.
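The absence of three-way interference follows directly from probabilities being squared magnitudes of summed amplitudes. The short sketch below checks this numerically for arbitrary complex path amplitudes; the function names and the sample amplitudes are assumptions for illustration and do not model the NMR experiment or its measured ratio.

```python
def prob(*amplitudes: complex) -> float:
    """Born rule: detection probability for a set of open paths."""
    return abs(sum(amplitudes)) ** 2


def two_path_interference(a: complex, b: complex) -> float:
    """I_2 = P_ab - P_a - P_b (generally nonzero)."""
    return prob(a, b) - prob(a) - prob(b)


def three_path_interference(a: complex, b: complex, c: complex) -> float:
    """I_3 = P_abc - P_ab - P_ac - P_bc + P_a + P_b + P_c.

    The Born rule predicts I_3 = 0 for any amplitudes.
    """
    return (
        prob(a, b, c)
        - prob(a, b) - prob(a, c) - prob(b, c)
        + prob(a) + prob(b) + prob(c)
    )


if __name__ == "__main__":
    # Hypothetical path amplitudes, chosen only for illustration.
    a, b, c = 0.3 + 0.4j, -0.2 + 0.1j, 0.5 - 0.3j
    print("two-way:", two_path_interference(a, b))
    print("three-way:", three_path_interference(a, b, c))
```

A ratio of three-way to two-way interference built from such quantities is expected to vanish under the Born rule, so an experimental bound on it tests the rule.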

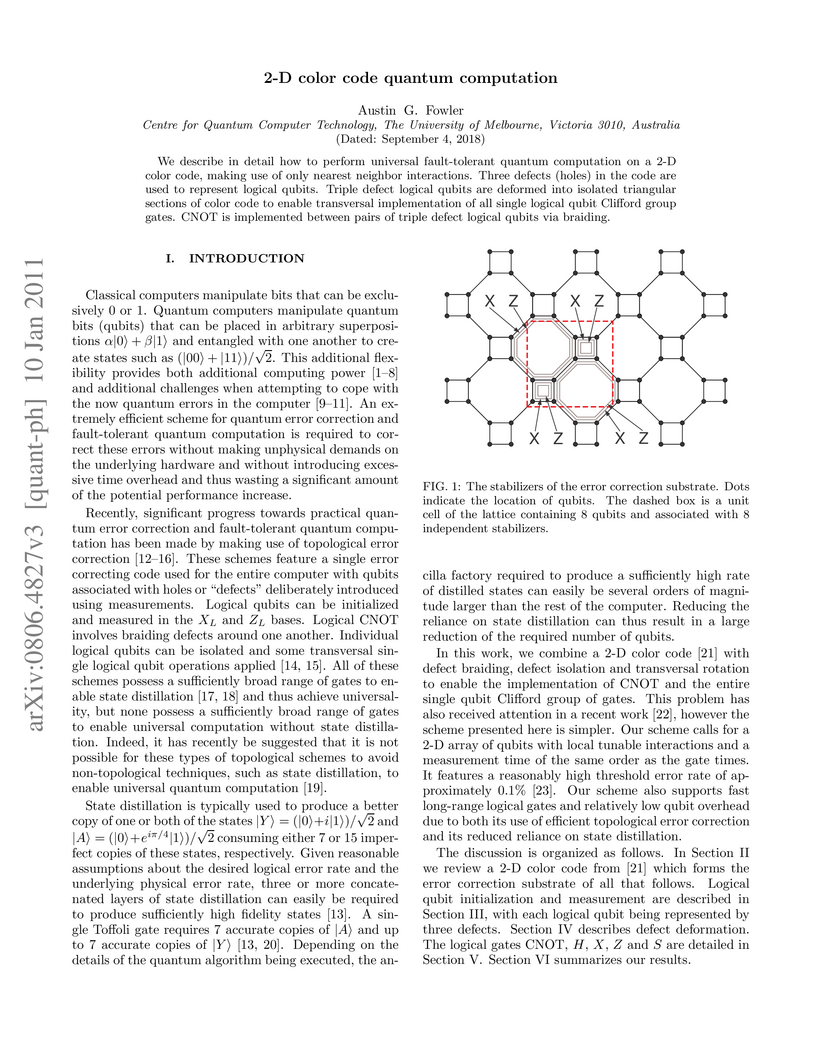

10 Jan 2011

We describe in detail how to perform universal fault-tolerant quantum computation on a 2-D color code, making use of only nearest neighbor interactions. Three defects (holes) in the code are used to represent logical qubits. Triple defect logical qubits are deformed into isolated triangular sections of color code to enable transversal implementation of all single logical qubit Clifford group gates. CNOT is implemented between pairs of triple defect logical qubits via braiding.
