emerging-technologies

The 2025 Nobel Prize in Chemistry for Metal-Organic Frameworks (MOFs) and recent breakthroughs by Huanting Wang's team at Monash University establish angstrom-scale channels as promising post-silicon substrates with native integrate-and-fire (IF) dynamics. However, utilizing these stochastic, analog materials for deterministic, bit-exact AI workloads (e.g., FP8) remains a paradox. Existing neuromorphic methods often settle for approximation, failing Transformer precision standards. To traverse the gap "from stochastic ions to deterministic floats," we propose a Native Spiking Microarchitecture. Treating noisy neurons as logic primitives, we introduce a Spatial Combinational Pipeline and a Sticky-Extra Correction mechanism. Validation across all 16,129 FP8 pairs confirms 100% bit-exact alignment with PyTorch. Crucially, our architecture reduces Linear layer latency to O(log N), yielding a 17x speedup. Physical simulations further demonstrate robustness against extreme membrane leakage (beta approx 0.01), effectively immunizing the system against the stochastic nature of the hardware.

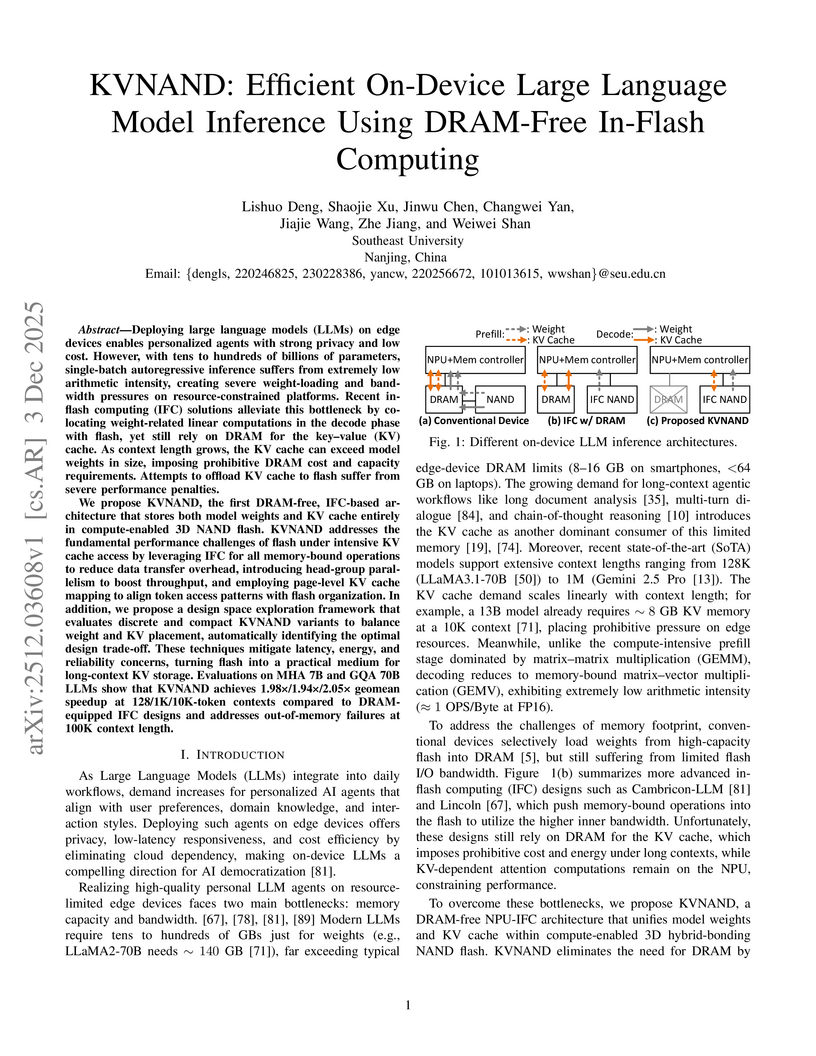

KVNAND is the first architecture to enable entirely DRAM-free, on-device Large Language Model (LLM) inference by integrating both model weights and the dynamic Key-Value (KV) cache within compute-enabled 3D NAND flash. This approach resolves out-of-memory issues for long contexts up to 100K tokens, achieving geomean speedups up to 2.05x and reducing memory costs by 69% compared to DRAM-based solutions.

Resistive random-access memory (RRAM) provides an excellent platform for analog matrix computing (AMC), enabling both matrix-vector multiplication (MVM) and the solution of matrix equations through open-loop and closed-loop circuit architectures. While RRAM-based AMC has been widely explored for accelerating neural networks, its application to signal processing in massive multiple-input multiple-output (MIMO) wireless communication is rapidly emerging as a promising direction. In this Review, we summarize recent advances in applying AMC to massive MIMO, including DFT/IDFT computation for OFDM modulation and demodulation using MVM circuits; MIMO detection and precoding using MVM-based iterative algorithms; and rapid one-step solutions enabled by matrix inversion (INV) and generalized inverse (GINV) circuits. We also highlight additional opportunities, such as AMC-based compressed-sensing recovery for channel estimation and eigenvalue circuits for leakage-based precoding. Finally, we outline key challenges, including RRAM device reliability, analog circuit precision, array scalability, and data conversion bottlenecks, and discuss the opportunities for overcoming these barriers. With continued progress in device-circuit-algorithm co-design, RRAM-based AMC holds strong promise for delivering high-efficiency, high-reliability solutions to (ultra)massive MIMO signal processing in the 6G era.
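The DFT/IDFT step mentioned above reduces to a single matrix-vector multiplication, which is what makes it a natural fit for an MVM circuit. The sketch below shows that reduction in ordinary digital arithmetic. It does not model RRAM conductances, analog noise, or data conversion, and the helper names (`dft_matrix`, `matvec`, `dft`, `idft`) are illustrative assumptions rather than anything defined in the Review.

```python
import cmath


def dft_matrix(n):
    """Return the n x n DFT matrix W with W[k][m] = exp(-2*pi*i*k*m/n)."""
    return [[cmath.exp(-2j * cmath.pi * k * m / n) for m in range(n)]
            for k in range(n)]


def matvec(matrix, vector):
    """Plain matrix-vector product, the operation an MVM array would perform."""
    return [sum(a * x for a, x in zip(row, vector)) for row in matrix]


def dft(signal):
    """DFT expressed as one MVM with the fixed matrix W."""
    return matvec(dft_matrix(len(signal)), signal)


def idft(spectrum):
    """IDFT as one MVM with conj(W) / n (W is symmetric)."""
    n = len(spectrum)
    inverse = [[w.conjugate() / n for w in row] for row in dft_matrix(n)]
    return matvec(inverse, spectrum)


if __name__ == "__main__":
    x = [1, 2, 3, 4]
    print(dft(x))
    print(idft(dft(x)))
```

Because the matrix depends only on the transform size, it could in principle be programmed once and reused for every OFDM symbol; only the input vector changes.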

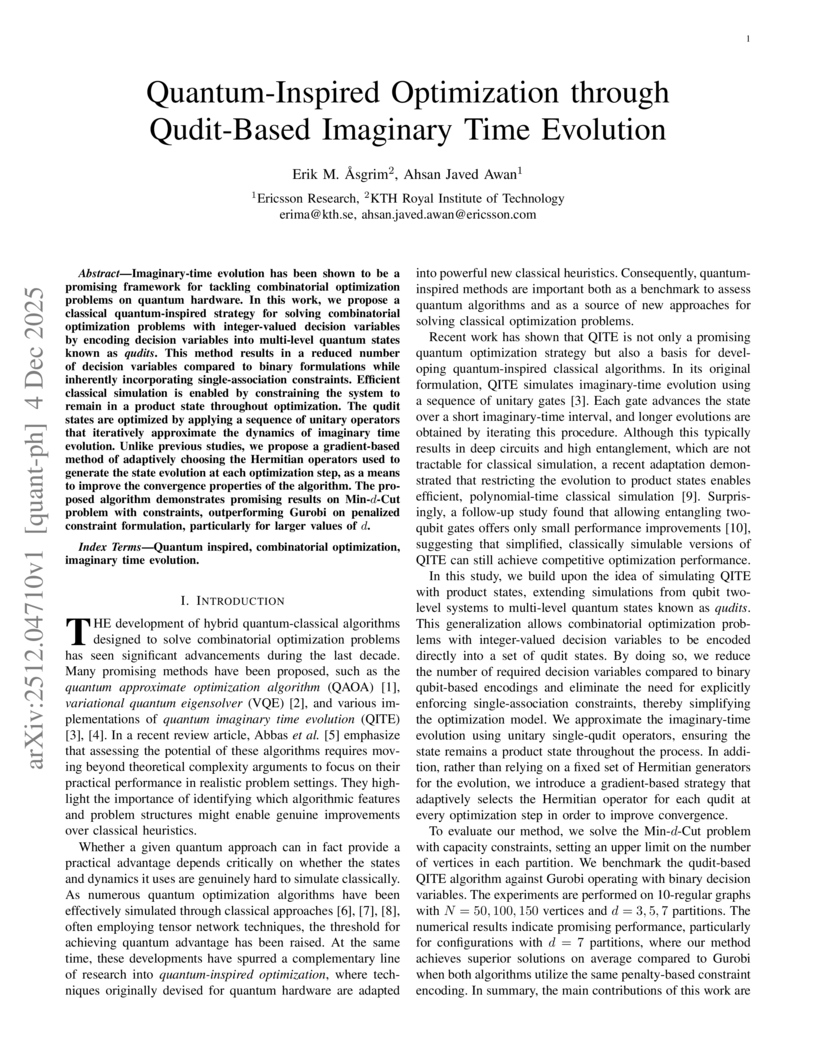

Imaginary-time evolution has been shown to be a promising framework for tackling combinatorial optimization problems on quantum hardware. In this work, we propose a classical quantum-inspired strategy for solving combinatorial optimization problems with integer-valued decision variables by encoding decision variables into multi-level quantum states known as qudits. This method results in a reduced number of decision variables compared to binary formulations while inherently incorporating single-association constraints. Efficient classical simulation is enabled by constraining the system to remain in a product state throughout optimization. The qudit states are optimized by applying a sequence of unitary operators that iteratively approximate the dynamics of imaginary-time evolution. Unlike previous studies, we propose a gradient-based method of adaptively choosing the Hermitian operators used to generate the state evolution at each optimization step, as a means to improve the convergence properties of the algorithm. The proposed algorithm demonstrates promising results on the Min-d-Cut problem with constraints, outperforming Gurobi on the penalized constraint formulation, particularly for larger values of d.

A new benchmark evaluates Large Language Model (LLM) agents in planning and real-time execution within a dynamic Blocksworld simulation, integrating them via the Model Context Protocol (MCP). It demonstrates that an LLM agent achieved 80% success on basic tasks and 100% in identifying impossible scenarios, but its success rate dropped to 60% with partial observability, and its execution time and token consumption increased significantly for more complex challenges.

The prevailing view is that quantum phenomena can be harnessed to tackle certain problems beyond the reach of classical approaches. Quantifying this capability as a quantum-classical separation and demonstrating it on current quantum processors has remained elusive. Using a superconducting qubit processor, we show that quantum contextuality enables certain tasks to be performed with success probabilities beyond classical limits. With a few qubits, we illustrate quantum contextuality with the magic square game, as well as quantify it through a Kochen-Specker-Bell inequality violation. To examine many-body contextuality, we implement the N-player GHZ game and separately solve a 2D hidden linear function problem, exceeding classical success rate in both. Our work proposes novel ways to benchmark quantum processors using contextuality-based algorithms.

Sheaf Neural Networks equip graph structures with a cellular sheaf: a geometric structure which assigns local vector spaces (stalks) and learnable linear restriction/transport maps to nodes and edges, yielding an edge-aware inductive bias that handles heterophily and limits oversmoothing. However, common Neural Sheaf Diffusion implementations rely on SVD-based sheaf normalization and dense per-edge restriction maps, which scale with stalk dimension, require frequent Laplacian rebuilds, and yield brittle gradients. To address these limitations, we introduce Polynomial Neural Sheaf Diffusion (PolyNSD), a new sheaf diffusion approach whose propagation operator is a degree-K polynomial in a normalised sheaf Laplacian, evaluated via a stable three-term recurrence on a spectrally rescaled operator. This provides an explicit K-hop receptive field in a single layer (independently of the stalk dimension), with a trainable spectral response obtained as a convex mixture of K+1 orthogonal polynomial basis responses. PolyNSD enforces stability via convex mixtures, spectral rescaling, and residual/gated paths, reaching new state-of-the-art results on both homophilic and heterophilic benchmarks, inverting the Neural Sheaf Diffusion trend by obtaining these results with just diagonal restriction maps, decoupling performance from large stalk dimension, while reducing runtime and memory requirements.
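The propagation rule described above (a degree-K polynomial in a rescaled Laplacian, evaluated by a three-term recurrence and mixed with convex weights) can be sketched as follows. This is a minimal illustration under stated assumptions, not the PolyNSD implementation: it assumes a Chebyshev basis, uses an ordinary normalized graph Laplacian with scalar stalks in place of a sheaf Laplacian, obtains the convex weights from a softmax over hypothetical `logits`, and omits the residual/gated paths.

```python
import math


def matvec(matrix, vector):
    """Dense matrix-vector product on nested lists."""
    return [sum(a * x for a, x in zip(row, vector)) for row in matrix]


def softmax(logits):
    """Map arbitrary logits to nonnegative weights that sum to 1."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def poly_filter(laplacian, x, logits, lam_max=2.0):
    """Apply sum_k w_k T_k(L_hat) x with L_hat = 2 L / lam_max - I.

    T_k are Chebyshev polynomials (an assumed basis), and the weights w_k
    are a convex mixture produced by softmax(logits).
    """
    def rescaled(v):
        # Spectral rescaling maps eigenvalues from [0, lam_max] to [-1, 1].
        lv = matvec(laplacian, v)
        return [2.0 * a / lam_max - b for a, b in zip(lv, v)]

    weights = softmax(logits)
    t_prev = list(x)                       # T_0(L_hat) x = x
    out = [weights[0] * a for a in t_prev]
    if len(weights) == 1:
        return out
    t_curr = rescaled(x)                   # T_1(L_hat) x = L_hat x
    out = [o + weights[1] * a for o, a in zip(out, t_curr)]
    for w in weights[2:]:
        # Three-term recurrence: T_{k+1} = 2 L_hat T_k - T_{k-1}.
        t_next = [2.0 * a - b for a, b in zip(rescaled(t_curr), t_prev)]
        out = [o + w * a for o, a in zip(out, t_next)]
        t_prev, t_curr = t_curr, t_next
    return out


if __name__ == "__main__":
    # Normalized Laplacian of a single edge between two nodes.
    lap = [[1.0, -1.0], [-1.0, 1.0]]
    print(poly_filter(lap, [1.0, 1.0], [0.0, 0.0, 0.0]))
```

Each recurrence step costs one Laplacian product, so a degree-K filter reaches K hops with K products and never needs an eigendecomposition.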

The growing demand for low-power and area-efficient TinyML inference on AIoT devices necessitates memory architectures that minimise data movement while sustaining high computational efficiency. This paper presents FERMI-ML, a Flexible and Resource-Efficient Memory-In-Situ (MIS) SRAM macro designed for TinyML acceleration. The proposed 9T XNOR-based RX9T bit-cell integrates a 5T storage cell with a 4T XNOR compute unit, enabling variable-precision MAC and CAM operations within the same array. A 22-transistor (C22T) compressor-tree-based accumulator facilitates logarithmic 1-64-bit MAC computation with reduced delay and power compared to conventional adder trees. The 4 KB macro achieves dual functionality for in-situ computation and CAM-based lookup operations, supporting Posit-4 or FP-4 precision. Post-layout results at 65 nm show operation at 350 MHz with 0.9 V, delivering a throughput of 1.93 TOPS and an energy efficiency of 364 TOPS/W, while maintaining a Quality-of-Result (QoR) above 97.5% with InceptionV4 and ResNet-18. FERMI-ML thus demonstrates a compact, reconfigurable, and energy-aware digital Memory-In-Situ macro capable of supporting mixed-precision TinyML workloads.
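To make the XNOR-based MAC idea concrete, the sketch below shows the standard XNOR-popcount identity for 1-bit operands: if each bit encodes +1 (bit 1) or -1 (bit 0), a dot product equals twice the number of matching bit positions minus the vector length. The abstract does not state the encoding FERMI-ML uses, so this encoding and the function names are assumptions; multi-bit precision and the C22T compressor tree are not modelled (the popcount here stands in for the accumulator).

```python
def xnor_mac(a_bits, w_bits, n):
    """Dot product of two n-element +/-1 vectors packed as n-bit integers.

    Bit value 1 encodes +1 and bit value 0 encodes -1 (assumed encoding).
    """
    mask = (1 << n) - 1
    # XNOR is 1 wherever the two operands agree.
    matches = bin(~(a_bits ^ w_bits) & mask).count("1")
    # Each match contributes +1, each mismatch contributes -1.
    return 2 * matches - n


def reference_dot(a_bits, w_bits, n):
    """Same result computed element by element, for checking."""
    total = 0
    for i in range(n):
        a = 1 if (a_bits >> i) & 1 else -1
        w = 1 if (w_bits >> i) & 1 else -1
        total += a * w
    return total


if __name__ == "__main__":
    print(xnor_mac(0b1011, 0b1101, 4))
    print(reference_dot(0b1011, 0b1101, 4))
```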

Geospatial decentralization is essential for blockchains, ensuring regulatory resilience, robustness, and fairness. We empirically analyze five major Proof of Stake (PoS) blockchains: Aptos, Avalanche, Ethereum, Solana, and Sui, revealing that a few geographic regions dominate consensus voting power, resulting in limited geospatial decentralization. To address this, we propose Geospatially aware Proof of Stake (GPoS), which integrates geospatial diversity with stake-based voting power. Experimental evaluation demonstrates an average 45% improvement in geospatial decentralization, as measured by the Gini coefficient of Eigenvector centrality, while incurring minimal performance overhead in BFT protocols, including HotStuff and CometBFT. These results demonstrate that GPoS can improve geospatial decentralization while, in our experiments, incurring minimal overhead to consensus performance.
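The decentralization metric above rests on the Gini coefficient, which is 0 when every participant holds an equal share and approaches 1 as one participant holds everything. The sketch below computes it for any list of nonnegative values. The paper applies it to eigenvector centrality scores; the per-region voting-power shares in the usage example are made-up illustrative numbers, not data from the study.

```python
def gini(values):
    """Gini coefficient of nonnegative values (0 = equal, near 1 = concentrated)."""
    xs = sorted(values)
    n = len(xs)
    total = sum(xs)
    if n == 0 or total == 0:
        return 0.0
    # Sorted-rank form of the mean absolute difference formula.
    weighted = sum((rank + 1) * x for rank, x in enumerate(xs))
    return (2.0 * weighted) / (n * total) - (n + 1.0) / n


if __name__ == "__main__":
    # Hypothetical voting-power shares per region, for illustration only.
    concentrated = [0.70, 0.15, 0.10, 0.05]
    spread_out = [0.30, 0.25, 0.25, 0.20]
    print(gini(concentrated), gini(spread_out))
```

A lower value after a protocol change indicates that influence is spread more evenly across regions.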

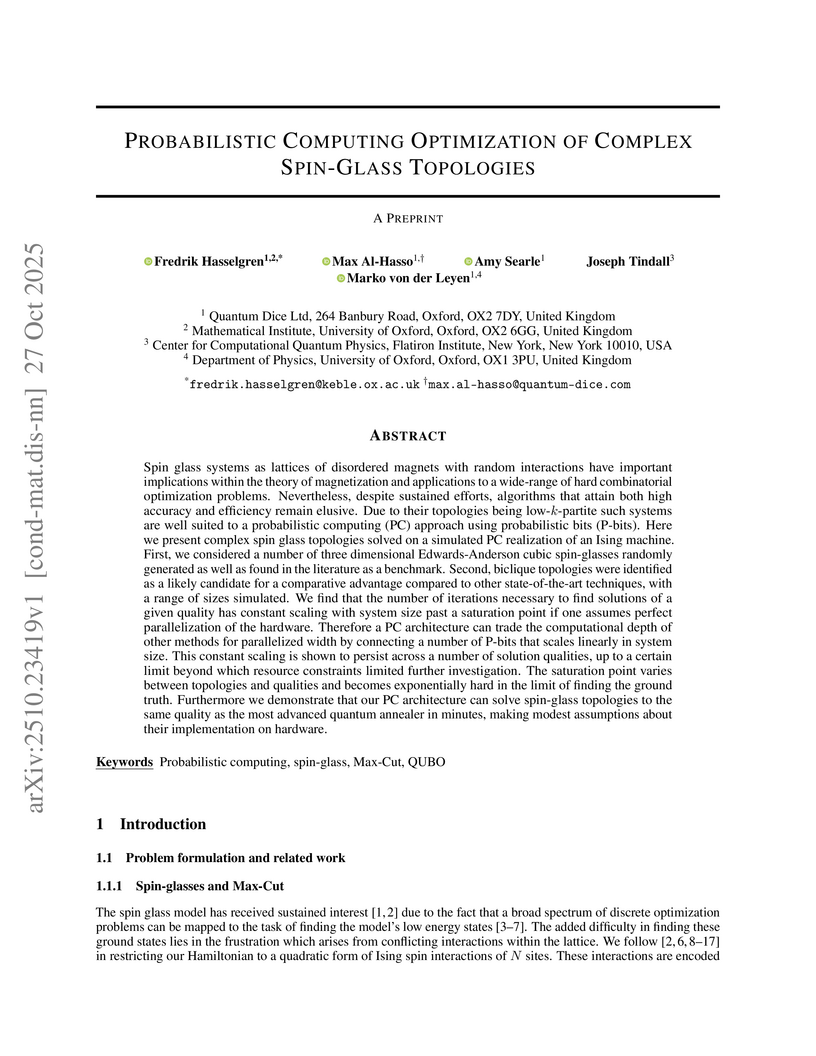

Spin glass systems, as lattices of disordered magnets with random interactions, have important implications within the theory of magnetization and applications to a wide range of hard combinatorial optimization problems. Nevertheless, despite sustained efforts, algorithms that attain both high accuracy and efficiency remain elusive. Because their topologies are low-k-partite, such systems are well suited to a probabilistic computing (PC) approach using probabilistic bits (P-bits). Here we present complex spin glass topologies solved on a simulated PC realization of an Ising machine. First, we consider a number of three-dimensional Edwards-Anderson cubic spin glasses, both randomly generated and taken from the literature, as benchmarks. Second, biclique topologies were identified as a likely candidate for a comparative advantage compared to other state-of-the-art techniques, with a range of sizes simulated. We find that the number of iterations necessary to find solutions of a given quality has constant scaling with system size past a saturation point if one assumes perfect parallelization of the hardware. Therefore, a PC architecture can trade the computational depth of other methods for parallelized width by connecting a number of P-bits that scales linearly in system size. This constant scaling is shown to persist across a number of solution qualities, up to a certain limit beyond which resource constraints limited further investigation. The saturation point varies between topologies and qualities and becomes exponentially hard in the limit of finding the ground truth. Furthermore, we demonstrate that our PC architecture can solve spin-glass topologies to the same quality as the most advanced quantum annealer in minutes, making modest assumptions about their implementation on hardware.
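The role of the low-k-partite structure can be illustrated with a small software sketch of P-bit updates. It assumes the commonly used P-bit rule, in which spin i becomes +1 when tanh(beta * I_i) exceeds a uniform random number in (-1, 1), with I_i the weighted sum of its neighbours. Spins in the same colour group share no edges, so their inputs can be computed from a frozen snapshot and the whole group updated at once, which is the parallelism the abstract refers to. The function names, the fixed `beta`, and the 4-spin ring are illustrative assumptions, not the authors' simulator or benchmark instances.

```python
import math
import random


def ising_energy(spins, couplings):
    """E = -sum over edges (a, b) of J_ab * s_a * s_b."""
    return -sum(j * spins[a] * spins[b] for (a, b), j in couplings.items())


def pbit_sweep(spins, couplings, color_groups, beta, rng):
    """Update every colour group once; each group is updated as a block."""
    neighbors = {}
    for (a, b), j in couplings.items():
        neighbors.setdefault(a, []).append((b, j))
        neighbors.setdefault(b, []).append((a, j))
    for group in color_groups:
        # Inputs depend only on spins outside the group, so they can be
        # computed together before any spin in the group changes.
        inputs = {i: sum(j * spins[k] for k, j in neighbors.get(i, []))
                  for i in group}
        for i in group:
            r = rng.uniform(-1.0, 1.0)
            spins[i] = 1 if math.tanh(beta * inputs[i]) > r else -1
    return spins


def solve(couplings, color_groups, n, sweeps=200, beta=5.0, seed=0):
    """Run repeated sweeps and return the lowest energy seen and final spins."""
    rng = random.Random(seed)
    spins = [rng.choice((-1, 1)) for _ in range(n)]
    best = ising_energy(spins, couplings)
    for _ in range(sweeps):
        pbit_sweep(spins, couplings, color_groups, beta, rng)
        best = min(best, ising_energy(spins, couplings))
    return best, spins


if __name__ == "__main__":
    # Ferromagnetic 4-spin ring: bipartite, so two colour groups suffice.
    ring = {(0, 1): 1, (1, 2): 1, (2, 3): 1, (0, 3): 1}
    print(solve(ring, [[0, 2], [1, 3]], n=4))
```

In this software loop the spins of a group are still visited one by one; on parallel hardware each group would settle in a single step, so the number of steps per sweep depends on the number of colours rather than the number of spins.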

09 Oct 2025

An intent-aware semantic router for vLLM dynamically applies reasoning only when required, improving overall LLM accuracy by 10.24 percentage points and reducing inference latency by 47.1% and token consumption by 48.5%. This system, integrated with cloud-native routing frameworks and open-sourced, enables more efficient and sustainable LLM deployments.

As Large Language Models (LLMs) continue to evolve, Mixture of Experts (MoE) architecture has emerged as a prevailing design for achieving state-of-the-art performance across a wide range of tasks. MoE models use sparse gating to activate only a handful of expert sub-networks per input, achieving billion-parameter capacity with inference costs akin to much smaller models. However, such models often pose challenges for hardware deployment due to the massive data volume introduced by the MoE layers. To address the challenges of serving MoE models, we propose Stratum, a system-hardware co-design approach that combines the novel memory technology Monolithic 3D-Stackable DRAM (Mono3D DRAM), near-memory processing (NMP), and GPU acceleration. The logic and Mono3D DRAM dies are connected through hybrid bonding, whereas the Mono3D DRAM stack and GPU are interconnected via silicon interposer. Mono3D DRAM offers higher internal bandwidth than HBM thanks to the dense vertical interconnect pitch enabled by its monolithic structure, which supports implementations of higher-performance near-memory processing. Furthermore, we tackle the latency differences introduced by aggressive vertical scaling of Mono3D DRAM along the z-dimension by constructing internal memory tiers and assigning data across layers based on access likelihood, guided by topic-based expert usage prediction to boost NMP throughput. The Stratum system achieves up to 8.29x improvement in decoding throughput and 7.66x better energy efficiency across various benchmarks compared to GPU baselines.

Quantum networks (QNs) supported by terahertz (THz) wireless links present a transformative alternative to fiber-based infrastructures, particularly in mobile and infrastructure-scarce environments. However, signal attenuation, molecular absorption, and severe propagation losses in THz channels pose significant challenges to reliable quantum state transmission and entanglement distribution. To overcome these limitations, we propose a dynamic reconfigurable intelligent surface (RIS)-assisted wireless QN architecture that leverages adaptive RIS elements capable of switching between active and passive modes based on the incident signal-to-noise ratio (SNR). These dynamic RIS elements enhance beamforming control over amplitude and phase, enabling robust redirection and compensation for THz-specific impairments. We develop a detailed analytical model that incorporates key physical layer phenomena in THz quantum links, including path loss, fading, thermal noise, and alignment variations. A secure optimization framework is formulated to jointly determine RIS placement and entanglement generation rate (EGR) allocation, while satisfying fidelity, security, and fairness constraints under diverse quality of service (QoS) demands. The model also includes an exploration of side-channel vulnerabilities arising from dynamic RIS switching patterns. Simulation results demonstrate that the proposed architecture yields up to 87% fidelity enhancement and 65% fairness improvement compared to static RIS baselines, while maintaining robustness under realistic THz channel conditions. These results underscore the promise of dynamic RIS technology in enabling scalable and adaptive quantum communications over wireless THz links.

Quantum computing promises significant speed-ups for certain algorithms, but the practical use of current noisy intermediate-scale quantum (NISQ) era computers remains limited by resource constraints (e.g., noise, qubits, gates, and circuit depth). Quantum circuit optimization is a key mitigation strategy. In this context, ZX-calculus has emerged as an alternative framework that allows for semantics-preserving quantum circuit optimization.

We review ZX-based optimization of quantum circuits, categorizing them by optimization techniques, target metrics and intended quantum computing architecture. In addition, we outline critical challenges and future research directions, such as multi-objective optimization, scalable algorithms, and enhanced circuit extraction methods. This survey is valuable for researchers in both combinatorial optimization and quantum computing. For researchers in combinatorial optimization, we provide the background to understand a new challenging combinatorial problem: ZX-based quantum circuit optimization. For researchers in quantum computing, we classify and explain existing circuit optimization techniques.

While simulators exist for vehicular IoT nodes communicating with the Cloud through Edge nodes in a fully-simulated osmotic architecture, they often lack support for dynamic agent planning and optimisation to minimise vehicular battery consumption while ensuring fair communication times. Addressing these challenges requires extending current simulator architectures with AI algorithms for both traffic prediction and dynamic agent planning. This paper presents an extension of SimulatorOrchestrator (SO) to meet these requirements. Preliminary results over a realistic urban dataset show that utilising vehicular planning algorithms can lead to improved battery and QoS performance compared with traditional shortest path algorithms. The additional inclusion of desirability areas enabled more ambulances to be routed to their target destinations while utilising less energy to do so, compared to traditional and weighted algorithms without desirability considerations.

Browser fingerprinting defenses have historically focused on detecting JavaScript (JS)-based tracking techniques. However, the widespread adoption of WebAssembly (WASM) introduces a potential blind spot, as adversaries can convert JS to WASM's low-level binary format to obfuscate malicious logic. This paper presents the first systematic evaluation of how such WASM-based obfuscation impacts the robustness of modern fingerprinting defenses. We develop an automated pipeline that translates real-world JS fingerprinting scripts into functional WASM-obfuscated variants and test them against two classes of defenses: state-of-the-art detectors in research literature and commercial, in-browser tools. Our findings reveal a notable divergence: detectors proposed in the research literature that rely on feature-based analysis of source code show moderate vulnerability, stemming from outdated datasets or a lack of WASM compatibility. In contrast, defenses such as browser extensions and native browser features remained completely effective, as their API-level interception is agnostic to the script's underlying implementation. These results highlight a gap between academic and practical defense strategies and offer insights into strengthening detection approaches against WASM-based obfuscation, while also revealing opportunities for more evasive techniques in future attacks.

29 Aug 2025

Evaluating large language models (LLMs) on their ability to generate high-quality, accurate, situationally aware answers to clinical questions requires going beyond conventional benchmarks to assess how these systems behave in complex, high-stakes clinical scenarios. Traditional evaluations are often limited to multiple-choice questions that fail to capture essential competencies such as contextual reasoning, awareness, and uncertainty handling. To address these limitations, we evaluate our agentic, RAG-based clinical support assistant, this http URL, using HealthBench, a rubric-driven benchmark composed of open-ended, expert-annotated health conversations. On the Hard subset of 1,000 challenging examples, this http URL achieves a HealthBench score of 0.51, substantially outperforming leading frontier LLMs (GPT-5, o3, Grok 3, GPT-4, Gemini 2.5, etc.) across all behavioral axes (accuracy, completeness, instruction following, etc.). In a separate 100-sample evaluation against similar agentic RAG assistants (OpenEvidence, this http URL), it maintains a performance lead with a HealthBench score of 0.54. These results highlight this http URL strengths in communication, instruction following, and accuracy, while also revealing areas for improvement in context awareness and completeness of a response. Overall, the findings underscore the utility of behavior-level, rubric-based evaluation for building a reliable and trustworthy AI-enabled clinical support assistant.

Living systems exhibit a range of fundamental characteristics: they are active, self-referential, self-modifying systems. This paper explores how these characteristics create challenges for conventional scientific approaches and why they require new theoretical and formal frameworks. We introduce a distinction between 'natural time', the continuing present of physical processes, and 'representational time', with its framework of past, present and future that emerges with life itself. Representational time enables memory, learning and prediction, functions of living systems essential for their survival. Through examples from evolution, embryogenesis and metamorphosis we show how living systems navigate the apparent contradictions arising from self-reference as natural time unwinds self-referential loops into developmental spirals. Conventional mathematical and computational formalisms struggle to model self-referential and self-modifying systems without running into paradox. We identify promising new directions for modelling self-referential systems, including domain theory, co-algebra, genetic programming, and self-modifying algorithms. There are broad implications for biology, cognitive science and social sciences, because self-reference and self-modification are not problems to be avoided but core features of living systems that must be modelled to understand life's open-ended creativity.

Agentic AI systems capable of reasoning, planning, and executing actions present fundamentally distinct governance challenges compared to traditional AI models. Unlike conventional AI, these systems exhibit emergent and unexpected behaviors during runtime, introducing novel agent-related risks that cannot be fully anticipated through pre-deployment governance alone. To address this critical gap, we introduce MI9, the first fully integrated runtime governance framework designed specifically for safety and alignment of agentic AI systems. MI9 introduces real-time controls through six integrated components: agency-risk index, agent-semantic telemetry capture, continuous authorization monitoring, Finite-State-Machine (FSM)-based conformance engines, goal-conditioned drift detection, and graduated containment strategies. Operating transparently across heterogeneous agent architectures, MI9 enables the systematic, safe, and responsible deployment of agentic systems in production environments where conventional governance approaches fall short, providing the foundational infrastructure for safe agentic AI deployment at scale. Detailed analysis through a diverse set of scenarios demonstrates MI9's systematic coverage of governance challenges that existing approaches fail to address, establishing the technical foundation for comprehensive agentic AI oversight.

All-day smart glasses are likely to emerge as platforms capable of continuous contextual sensing, uniquely positioning them for unprecedented assistance in our daily lives. Integrating the multi-modal AI agents required for human memory enhancement while performing continuous sensing, however, presents a major energy efficiency challenge for all-day usage. Achieving this balance requires intelligent, context-aware sensor management. Our approach, EgoTrigger, leverages audio cues from the microphone to selectively activate power-intensive cameras, enabling efficient sensing while preserving substantial utility for human memory enhancement. EgoTrigger uses a lightweight audio model (YAMNet) and a custom classification head to trigger image capture from hand-object interaction (HOI) audio cues, such as the sound of a drawer opening or a medication bottle being opened. In addition to evaluating on the QA-Ego4D dataset, we introduce and evaluate on the Human Memory Enhancement Question-Answer (HME-QA) dataset. Our dataset contains 340 human-annotated first-person QA pairs from full-length Ego4D videos that were curated to ensure that they contained audio, focusing on HOI moments critical for contextual understanding and memory. Our results show EgoTrigger can use 54% fewer frames on average, significantly saving energy in both power-hungry sensing components (e.g., cameras) and downstream operations (e.g., wireless transmission), while achieving comparable performance on datasets for an episodic memory task. We believe this context-aware triggering strategy represents a promising direction for enabling energy-efficient, functional smart glasses capable of all-day use -- supporting applications like helping users recall where they placed their keys or information about their routine activities (e.g., taking medications).
