Jiangsu Province Collaborative Innovation Center of Modern Urban Traffic Technologies

Adaptive Testing Environment Generation for Connected and Automated Vehicles with Dense Reinforcement Learning

29 Feb 2024

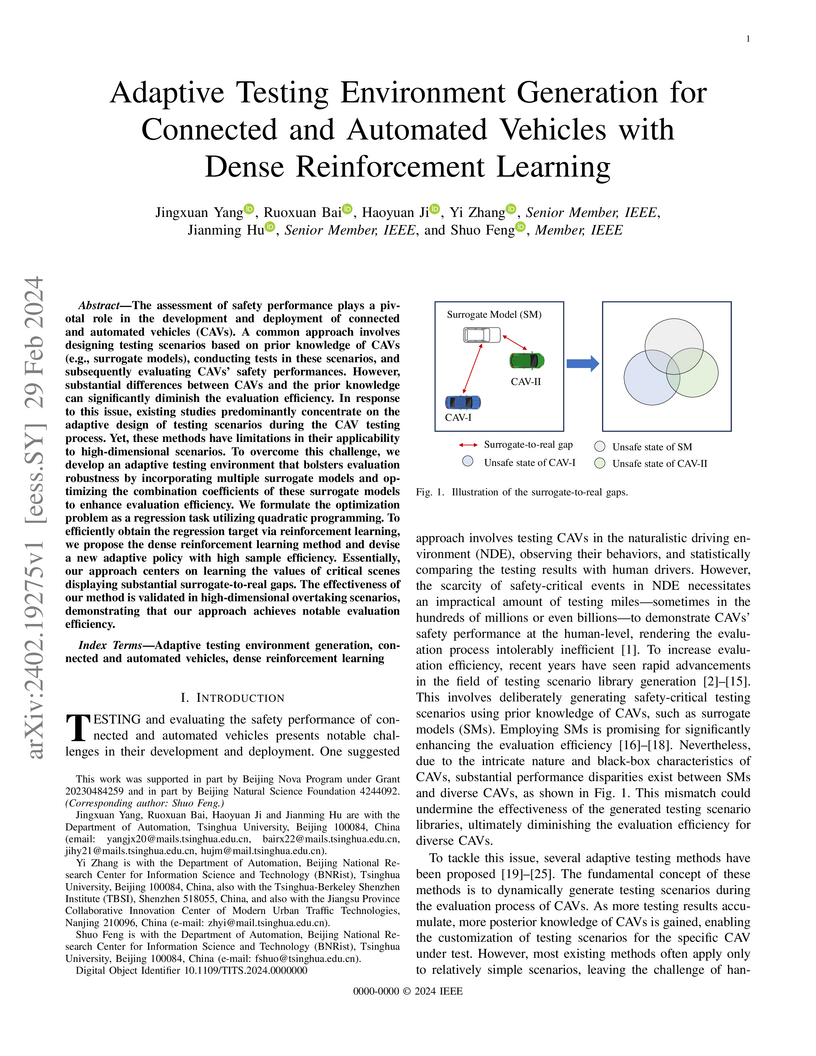

The assessment of safety performance plays a pivotal role in the development and deployment of connected and automated vehicles (CAVs). A common approach involves designing testing scenarios based on prior knowledge of CAVs (e.g., surrogate models), conducting tests in these scenarios, and subsequently evaluating CAVs' safety performances. However, substantial differences between CAVs and the prior knowledge can significantly diminish the evaluation efficiency. In response to this issue, existing studies predominantly concentrate on the adaptive design of testing scenarios during the CAV testing process. Yet, these methods have limitations in their applicability to high-dimensional scenarios. To overcome this challenge, we develop an adaptive testing environment that bolsters evaluation robustness by incorporating multiple surrogate models and optimizing the combination coefficients of these surrogate models to enhance evaluation efficiency. We formulate the optimization problem as a regression task utilizing quadratic programming. To efficiently obtain the regression target via reinforcement learning, we propose the dense reinforcement learning method and devise a new adaptive policy with high sample efficiency. Essentially, our approach centers on learning the values of critical scenes displaying substantial surrogate-to-real gaps. The effectiveness of our method is validated in high-dimensional overtaking scenarios, demonstrating that our approach achieves notable evaluation efficiency.
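
The idea of fitting combination coefficients for several surrogate models as a quadratic program can be illustrated with a small sketch. The abstract does not give the actual objective or constraints, so the version below is an assumption: it fits nonnegative coefficients that sum to one by least squares, using projected gradient descent. The function names, the simplex constraint, and the step size are all hypothetical choices made for illustration.

```python
def project_to_simplex(values):
    """Euclidean projection onto {w : w >= 0, sum(w) = 1}."""
    ordered = sorted(values, reverse=True)
    cumulative, theta = 0.0, 0.0
    for count, value in enumerate(ordered, start=1):
        cumulative += value
        candidate = (cumulative - 1.0) / count
        # Keep the threshold from the last position that stays positive.
        if value - candidate > 0:
            theta = candidate
    return [max(v - theta, 0.0) for v in values]


def fit_combination_coefficients(surrogate_preds, targets,
                                 steps=2000, learning_rate=0.1):
    """Minimize mean squared error of a convex combination of surrogates.

    surrogate_preds: list of rows, one prediction per surrogate model.
    targets: list of regression targets, one per row.
    (Assumed formulation; not taken from the paper.)
    """
    n = len(targets)
    k = len(surrogate_preds[0])
    weights = [1.0 / k] * k  # start from an even mix
    for _ in range(steps):
        residuals = [
            sum(w * p for w, p in zip(weights, row)) - y
            for row, y in zip(surrogate_preds, targets)
        ]
        gradient = [
            2.0 / n * sum(r * row[j] for r, row in zip(residuals, surrogate_preds))
            for j in range(k)
        ]
        stepped = [w - learning_rate * g for w, g in zip(weights, gradient)]
        weights = project_to_simplex(stepped)
    return weights


# Toy data: the target is a 25% / 75% mix of two hypothetical surrogates.
rows = [(1.0, 0.0), (0.0, 1.0), (1.0, 1.0), (0.5, 0.2)]
targets = [0.25 * a + 0.75 * b for a, b in rows]
coefficients = fit_combination_coefficients(rows, targets)
```

On this toy data the fitted coefficients approach the generating mix. A dedicated QP solver would be the more usual choice for larger problems; projected gradient descent is used here only to keep the sketch dependency-free.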

A Multi-scale Perimeter Control and Route Guidance System for Large-scale Road Networks

Perimeter control and route guidance are effective ways to reduce traffic congestion and improve traffic efficiency by controlling the spatial and temporal traffic distribution on the network. This paper presents a multi-scale joint perimeter control and route guidance (MSJC) framework for controlling traffic in large-scale networks. The network is first partitioned into several subnetworks (regions) with traffic in each region governed by its macroscopic fundamental diagram (MFD), which forms the macroscale network (upper level). Each subnetwork, comprised of actual road links and signalized intersections, forms the microscale network (lower level). At the upper level, a joint perimeter control and route guidance model solves the region-based inflow rate and hyper-path flows to control the accumulation of each region and thus maximize the throughput of each region. At the lower level, a perimeter control strategy integrated with a backpressure policy determines the optimal signal phases of the intersections at the regional boundary. At the same time, a route choice model for vehicles is constructed to meet hyper-path flows and ensure the intra-region homogeneity of traffic density. The case study results demonstrate that the proposed MSJC outperforms other benchmarks in regulating regional accumulation, thereby improving throughput.
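
As a rough illustration of the backpressure idea used at the lower level, the sketch below picks the signal phase with the largest total pressure, where the pressure of a movement is the upstream queue minus the downstream queue. This is the plain textbook backpressure rule, not the paper's integrated perimeter control strategy; the link names, queue values, and the uniform saturation flow are hypothetical.

```python
def phase_pressure(movements, queues, saturation_flow=1.0):
    """Sum of (upstream queue - downstream queue) over a phase's movements."""
    return sum(
        saturation_flow * (queues[upstream] - queues[downstream])
        for upstream, downstream in movements
    )


def select_phase(phases, queues):
    """Return the name of the phase with the highest pressure."""
    return max(phases, key=lambda name: phase_pressure(phases[name], queues))


# Hypothetical four-leg intersection: queue lengths in vehicles.
queues = {
    "N_in": 12, "S_in": 8, "E_in": 3, "W_in": 4,
    "N_out": 2, "S_out": 1, "E_out": 5, "W_out": 0,
}
phases = {
    "north_south": [("N_in", "S_out"), ("S_in", "N_out")],
    "east_west": [("E_in", "W_out"), ("W_in", "E_out")],
}
chosen = select_phase(phases, queues)
```

Here the north-south phase has pressure 17 and the east-west phase has pressure 2, so the north-south phase is selected. A perimeter controller would additionally cap the inflow served at boundary intersections, which this sketch leaves out.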

Joint Optimization of Transfer Location and Capacity in a Multimodal Transport Network: Bilevel Modeling and Paradoxes

With growing attention to developing multimodal transport systems that enhance urban mobility, there is an increasing need to construct new infrastructure, or rebuild or expand existing infrastructure, to serve existing travel demand and accommodate newly generated demand. This paper therefore develops a bilevel model that simultaneously determines the location and capacity of the transfer infrastructure to be built, considering elastic demand in a multimodal transport network. The upper-level problem is formulated as a mixed-integer linear program, while the lower-level problem is a capacitated combined trip distribution and assignment model that captures both the destination and route choices of travelers via the multinomial logit formula. To solve the model, the paper develops a matheuristic algorithm that integrates a genetic algorithm with a successive linear programming solution approach. Numerical studies demonstrate the existence of two Braess-like paradoxes in a multimodal transport network and examine them. The first states that, under fixed demand, constructing parking spaces to stimulate use of the Park and Ride service could degrade system performance, measured by total passenger travel time. The second reveals that, under variable demand, increasing parking capacity for Park and Ride services to promote their use may fail, as shown by a decline in the Park and Ride modal share. Finally, the last experiment suggests that constructing transfer infrastructure at distributed stations outperforms building one large transfer center in attracting travelers to sustainable transit modes.
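
The multinomial logit formula mentioned for the lower-level problem assigns each alternative a share proportional to the exponential of its negative scaled cost. The sketch below is a generic version of that formula, not the paper's capacitated model; the cost values and the dispersion parameter `theta` are hypothetical.

```python
import math


def logit_shares(costs, theta=1.0):
    """Multinomial logit shares: P_i = exp(-theta * c_i) / sum_j exp(-theta * c_j)."""
    # Subtract the minimum cost first so the exponentials cannot overflow.
    lowest = min(costs)
    weights = [math.exp(-theta * (cost - lowest)) for cost in costs]
    total = sum(weights)
    return [weight / total for weight in weights]


# Hypothetical generalized costs for three routes or destinations.
shares = logit_shares([10.0, 12.0, 15.0], theta=0.5)
```

Cheaper alternatives receive larger shares, and a larger `theta` concentrates the demand more sharply on the cheapest one.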

Optimal Cooperative Driving at Signal-Free Intersections with Polynomial-Time Complexity

28 Apr 2021

Cooperative driving at signal-free intersections, which aims to improve driving safety and efficiency for connected and automated vehicles, has attracted increasing interest in recent years. However, existing cooperative driving strategies either suffer from high computational complexity or cannot guarantee global optimality. To fill this research gap, this paper proposes an optimal and computationally efficient cooperative driving strategy with polynomial-time complexity. By modeling the conflict relations among the vehicles, the solution space of the cooperative driving problem is completely represented by a newly designed small-size state space. Then, based on dynamic programming, the globally optimal solution can be found efficiently within the state space. It is proved that the proposed strategy reduces the time complexity of computation from exponential to a small-degree polynomial. Simulation results further demonstrate that the proposed strategy can obtain the globally optimal solution within a limited computation time under various traffic demand settings.
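
To show how dynamic programming can replace an exponential search over passing orders, the sketch below handles a much simpler case than the paper's: two conflicting streams that each keep first-in-first-out order. The state is (vehicles scheduled from stream A, vehicles scheduled from stream B, stream of the last vehicle), so the table has O(n·m) entries instead of one entry per interleaving. The state design, the headway values, and the objective (earliest entry time of the last vehicle) are assumptions made for illustration and are not the state space proposed in the paper.

```python
import math


def min_clearance_time(arrivals_a, arrivals_b, same_gap=1.0, switch_gap=3.0):
    """Earliest possible entry time of the last vehicle over all passing orders.

    arrivals_a, arrivals_b: sorted earliest arrival times at the conflict zone.
    same_gap: minimum headway between consecutive vehicles of one stream.
    switch_gap: minimum headway when the right of way changes streams.
    """
    n, m = len(arrivals_a), len(arrivals_b)
    if n == 0 and m == 0:
        return 0.0
    # best[i][j][s]: earliest entry time of the last scheduled vehicle when
    # i vehicles of A and j of B are scheduled and the last came from stream s.
    best = [[[math.inf, math.inf] for _ in range(m + 1)] for _ in range(n + 1)]
    if n:
        best[1][0][0] = arrivals_a[0]
    if m:
        best[0][1][1] = arrivals_b[0]
    for i in range(n + 1):
        for j in range(m + 1):
            for last in (0, 1):
                time = best[i][j][last]
                if time == math.inf:
                    continue
                if i < n:  # schedule the next vehicle from stream A
                    gap = same_gap if last == 0 else switch_gap
                    entry = max(arrivals_a[i], time + gap)
                    best[i + 1][j][0] = min(best[i + 1][j][0], entry)
                if j < m:  # schedule the next vehicle from stream B
                    gap = same_gap if last == 1 else switch_gap
                    entry = max(arrivals_b[j], time + gap)
                    best[i][j + 1][1] = min(best[i][j + 1][1], entry)
    return min(best[n][m])


# Two vehicles on stream A, one on stream B (hypothetical arrival times).
result = min_clearance_time([0.0, 1.0], [0.5])
```

For this input the three possible orders finish at 4.0 (A, A, B), 6.0 (A, B, A), and 4.5 (B, A, A), and the table returns 4.0. Keeping only the earliest time per state is valid here because each later entry time is nondecreasing in the time of the previous vehicle.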

Tsinghua University

University of California, Davis

University of Michigan
