Rakuten

VARiant presents a supernet framework with a progressive training strategy for Visual Autoregressive (VAR) models, allowing dynamic depth adjustment during inference within a single model. This method reduces KV cache consumption by up to 80% and increases inference speed by up to 3.5x compared to the base VAR model, while maintaining high image generation quality on ImageNet-1K.
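A back-of-the-envelope sketch of why adjusting depth shrinks the KV cache, assuming the cache grows linearly with the number of active transformer layers. The layer counts, token count, and hidden size below are illustrative placeholders, not values reported for VARiant, and `kv_cache_bytes` is a hypothetical helper.

```python
def kv_cache_bytes(num_layers, num_tokens, hidden_dim, bytes_per_value=2):
    """Size of a KV cache: one key and one value tensor per layer,
    each of shape (num_tokens, hidden_dim)."""
    return 2 * num_layers * num_tokens * hidden_dim * bytes_per_value


# Illustrative numbers only (assumptions, not taken from the paper).
full = kv_cache_bytes(num_layers=30, num_tokens=680, hidden_dim=1920)
shallow = kv_cache_bytes(num_layers=6, num_tokens=680, hidden_dim=1920)

# Fraction of cache memory saved by running the shallower sub-network.
reduction = 1 - shallow / full
print(f"full: {full} bytes, shallow: {shallow} bytes, saved: {reduction:.0%}")
```

Under this linear assumption, running one fifth of the layers saves four fifths of the cache, which is the order of saving the summary describes.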

01 Oct 2025

University of Michigan; RIKEN; Technical University of Munich; Chalmers University of Technology; Forschungszentrum Jülich GmbH; National Institute of Advanced Industrial Science and Technology (AIST); IBM Research Europe; Unitary Fund; Plaksha University; Aberystwyth University; Zurich Instruments; Rakuten; University of Gdańsk; IBM, T.J. Watson Research Center; Université de Sherbrooke

QuTiP, the Quantum Toolbox in Python, has been at the forefront of open-source quantum software for the past 13 years. It is used as a research, teaching, and industrial tool, and has been downloaded millions of times by users around the world. Here we introduce the latest developments in QuTiP v5, which are set to have a large impact on the future of QuTiP and enable it to be a modern, continuously developed and popular tool for another decade and more. We summarize the code design and fundamental data layer changes as well as efficiency improvements, new solvers, applications to quantum circuits with QuTiP-QIP, and new quantum control tools with QuTiP-QOC. Additional flexibility in the data layer underlying all "quantum objects" in QuTiP allows us to harness the power of state-of-the-art data formats and packages like JAX, CuPy, and more. We explain these new features with a series of both well-known and new examples. The code for these examples is available in a static form on GitHub and as continuously updated and documented notebooks in the qutip-tutorials package.

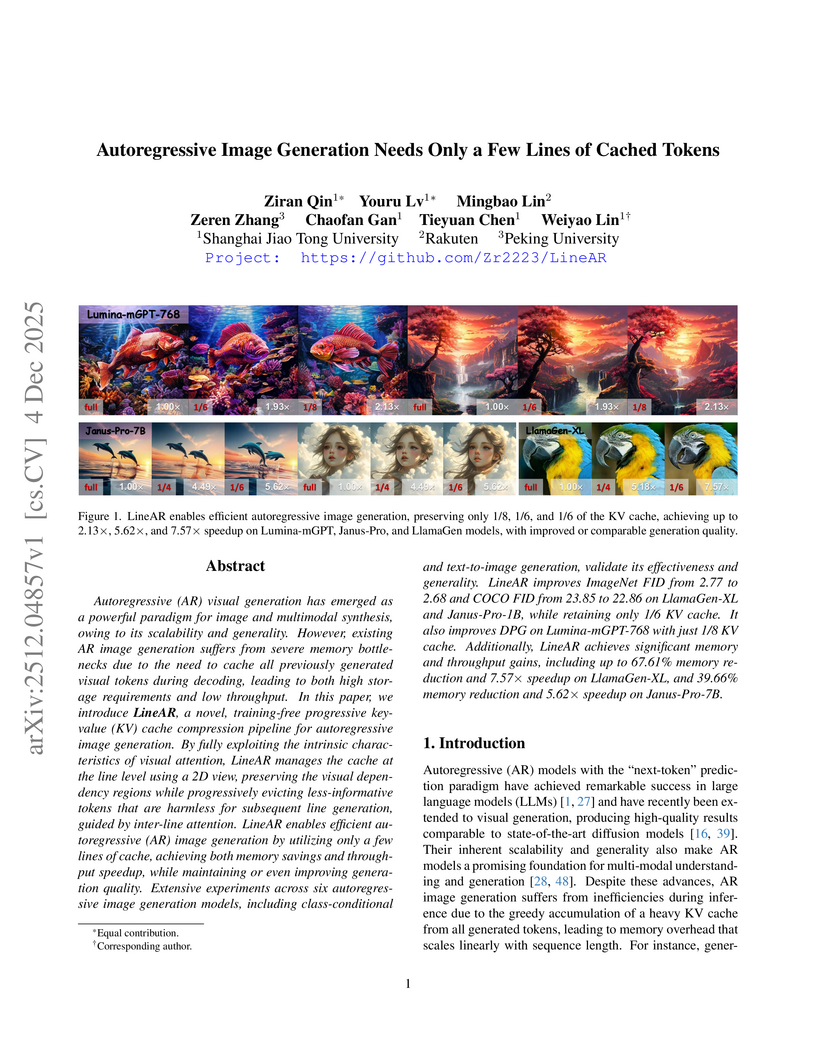

Autoregressive (AR) visual generation has emerged as a powerful paradigm for image and multimodal synthesis, owing to its scalability and generality. However, existing AR image generation suffers from severe memory bottlenecks because all previously generated visual tokens must be cached during decoding, leading to both high storage requirements and low throughput. In this paper, we introduce LineAR, a novel, training-free progressive key-value (KV) cache compression pipeline for autoregressive image generation. By fully exploiting the intrinsic characteristics of visual attention, LineAR manages the cache at the line level using a 2D view, preserving the visual dependency regions while progressively evicting less-informative tokens that are not needed for subsequent line generation, guided by inter-line attention. LineAR enables efficient AR image generation using only a few lines of cache, achieving both memory savings and throughput speedup while maintaining or even improving generation quality. Extensive experiments across six autoregressive image generation models, including class-conditional and text-to-image generation, validate its effectiveness and generality. LineAR improves ImageNet FID from 2.77 to 2.68 and COCO FID from 23.85 to 22.86 on LlamaGen-XL and Janus-Pro-1B, respectively, while retaining only 1/6 of the KV cache. It also improves DPG on Lumina-mGPT-768 with just 1/8 of the KV cache. Additionally, LineAR achieves significant memory and throughput gains, including up to 67.61% memory reduction and 7.57x speedup on LlamaGen-XL, and 39.66% memory reduction and 5.62x speedup on Janus-Pro-7B.
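The idea of line-level cache management can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the LineAR algorithm itself: the function `evict_line_cache`, its parameters, and the rule "keep the most recent lines in full, keep only the top-scored older tokens" are hypothetical stand-ins for the attention-guided eviction the abstract describes.

```python
def evict_line_cache(cache, scores, width, keep_recent_lines, old_budget):
    """Return the sorted token positions to keep in the KV cache.

    cache: raster-order positions (ints) of currently cached tokens.
    scores: dict mapping position -> attention mass it received from the
            newest line's queries (missing positions count as 0.0).
    width: number of tokens per image line.
    keep_recent_lines: most recent lines that are always kept in full.
    old_budget: maximum number of older tokens to retain.
    """
    if not cache:
        return []
    newest_line = max(cache) // width
    recent, old = [], []
    for pos in cache:
        line = pos // width
        if newest_line - line < keep_recent_lines:
            recent.append(pos)  # inside the assumed dependency region
        else:
            old.append(pos)     # candidate for eviction
    # Keep only the older tokens that the newest line attended to most.
    old_kept = sorted(old, key=lambda p: scores.get(p, 0.0), reverse=True)
    return sorted(recent + old_kept[:old_budget])


# Toy example: three lines of four tokens each have been generated.
cache = list(range(12))
scores = {1: 0.5, 6: 0.3}  # hypothetical inter-line attention mass
kept = evict_line_cache(cache, scores, width=4,
                        keep_recent_lines=1, old_budget=2)
print(kept)  # [1, 6, 8, 9, 10, 11]
```

In this toy run the cache shrinks from 12 tokens to 6: the newest line is kept whole, and only the two older tokens with the highest attention scores survive.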

Deep Supervision Networks exhibit significant efficacy for the medical imaging community. Nevertheless, existing work supervises either the coarse-grained semantic features or the fine-grained detailed features in isolation, which overlooks the fact that these two types of features hold vital relationships in medical image analysis. We advocate the power of complementary feature supervision for medical image segmentation by proposing a Detail-Semantic Deep Supervision Network (DS2Net). DS2Net combines low-level detailed and high-level semantic feature supervision through a Detail Enhance Module (DEM) and a Semantic Enhance Module (SEM). DEM and SEM harness low-level and high-level feature maps, respectively, to create detail and semantic masks for enhancing feature supervision. This is a novel shift from single-view deep supervision to multi-view deep supervision. DS2Net is also equipped with a novel uncertainty-based supervision loss that adaptively assigns the supervision strength of features at distinct scales based on their uncertainty, thus circumventing the sub-optimal heuristic design that typifies previous works. Through extensive experiments on six benchmarks captured under colonoscopy, ultrasound, or microscopy, we demonstrate that DS2Net consistently outperforms state-of-the-art methods for medical image analysis.
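To make the idea of uncertainty-based supervision strength concrete, here is one plausible scheme, sketched under explicit assumptions. It is not DS2Net's actual loss: the choice of mean binary entropy as the uncertainty measure, the rule that more uncertain scales receive larger weights, and all function names are hypothetical.

```python
import math


def binary_entropy(p, eps=1e-7):
    """Entropy (in nats) of a Bernoulli prediction with foreground prob p."""
    p = min(max(p, eps), 1 - eps)  # clamp to avoid log(0)
    return -(p * math.log(p) + (1 - p) * math.log(1 - p))


def scale_uncertainty(probs):
    """Mean per-pixel entropy of one scale, normalised to the range [0, 1]."""
    return sum(binary_entropy(p) for p in probs) / (len(probs) * math.log(2))


def weighted_supervision_loss(scale_losses, scale_probs):
    """Combine per-scale losses with weights proportional to uncertainty.

    Assumption: a more uncertain scale gets stronger supervision.
    Returns (combined_loss, weights).
    """
    uncertainties = [scale_uncertainty(p) for p in scale_probs]
    total = sum(uncertainties)
    if total == 0:
        weights = [1 / len(uncertainties)] * len(uncertainties)
    else:
        weights = [u / total for u in uncertainties]
    loss = sum(w * l for w, l in zip(weights, scale_losses))
    return loss, weights


# Toy example: scale 0 is maximally uncertain, scale 1 is confident.
scale_probs = [[0.5, 0.5], [0.99, 0.01]]
scale_losses = [1.0, 1.0]
loss, weights = weighted_supervision_loss(scale_losses, scale_probs)
print(loss, weights)
```

The weights replace a hand-tuned per-scale coefficient: they always sum to one, and in this toy case the uncertain scale dominates the combined loss.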
